1. Improved Crop Yield Prediction

Artificial intelligence (AI) and machine learning (ML) have significantly improved crop yield prediction by analyzing large satellite datasets together with environmental variables. Convolutional neural networks (CNNs) and recurrent models such as long short-term memory (LSTM) networks capture complex growth patterns and often outperform traditional statistical models. Classical ML methods, including random forests (an ensemble method) and support vector machines, are also widely used to exploit multispectral imagery and weather data. These models enable earlier and more accurate yield forecasts (often well before harvest), helping farmers plan inputs and market decisions. Advances in sensor technology (e.g. higher-revisit satellites) combined with AI allow predictions at both fine (field) and broad (regional) scales, improving planning and resource use. Overall, AI-enhanced forecasts typically achieve higher coefficients of determination (e.g. R² > 0.6–0.7) and lower errors than conventional methods.

Recent studies report high accuracy for AI-based yield models. For example, Joshi et al. (2023) used PlanetScope data and deep neural networks to predict soybean yields and found that model R² rose from ~0.26 early in the season to over 0.70 at key growth stages. In Thai rubber plantations, a Sentinel-2-based model achieved R² ≈ 0.79 (RMSE ≈ 29.6 kg/ha) using the red-edge band. Darra et al. (2023) applied ensemble ML to Sentinel-2 imagery to forecast processing tomato yield in Spain, reaching an adjusted R² ≈ 0.72. A survey of 50 yield studies found random forests and neural networks to be the most common methods, and some models combining spectral bands and vegetation indices reached R² of up to ~0.9. These results show that AI can harness satellite data to produce precise, scalable yield estimates across crops.
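
The R² and RMSE values quoted above are the standard goodness-of-fit metrics for yield models. The minimal sketch below shows how they are computed. It assumes a single hypothetical predictor (peak-season NDVI per field) and an ordinary least-squares line instead of the deep or ensemble models cited above, and the field data are invented for illustration.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def r_squared(obs, pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    m = mean(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - m) ** 2 for o in obs)
    return 1 - ss_res / ss_tot


def rmse(obs, pred):
    """Root-mean-square error, in the units of the observations (here kg/ha)."""
    return (sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs)) ** 0.5


# Hypothetical per-field data: peak-season NDVI and harvested yield (kg/ha).
ndvi = [0.55, 0.62, 0.70, 0.74, 0.81, 0.85]
yield_kg_ha = [2600, 2900, 3300, 3350, 3900, 4000]

a, b = fit_line(ndvi, yield_kg_ha)
pred = [a + b * v for v in ndvi]
print(f"R2 = {r_squared(yield_kg_ha, pred):.2f}, RMSE = {rmse(yield_kg_ha, pred):.0f} kg/ha")
```

R² is unitless (the share of yield variance explained), while RMSE is in yield units, so RMSE values are only comparable between studies on the same crop and unit.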

2. Automated Land Cover Classification

AI automates the classification of satellite imagery into land cover categories (crops, forest, water, urban, etc.) with high accuracy. Semantic segmentation networks (e.g. U-Net, DeepLabV3+, or transformer-based models) label each pixel, enabling detailed crop maps. Deep-learning approaches (CNNs, vision transformers) now generally outperform traditional methods; for instance, Swin Transformers achieve higher land-use classification accuracy than earlier architectures. Recent studies report overall accuracies of ~90–98% on land cover benchmark datasets, well above traditional algorithms. These models support large-scale mapping (e.g. national crop type surveys) and are used in regulatory monitoring and precision farming. Overall, AI-driven land cover classification provides fast, scalable, and precise mapping of agricultural and natural landscapes.

In an EU demonstration, Papadopoulou et al. (2023) trained deep networks on multitemporal Sentinel-2 data to map crops under CAP programs. Their temporal CNN and RNN models achieved ~90.1% overall accuracy, outperforming a random forest baseline (86.3%). Fine-tuned vision transformers have reached ~98.2% accuracy on remote-sensing land cover benchmarks. Another approach, combining DeepLabV3+ with post-processing, improved parcel boundary detection by ~1.5 percentage points in overall accuracy. AI-based classification now reliably distinguishes dozens of crop types and land classes at pixel level, enabling automated national-scale land cover and crop type maps. For example, rapeseed, wheat, and maize fields can be identified with >90% accuracy from Sentinel-2 imagery by these methods.
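
Overall accuracy figures such as the 90.1% vs. 86.3% comparison above are computed from a confusion matrix of reference versus predicted labels. A minimal sketch, assuming a hypothetical three-crop matrix with invented counts, that also derives the per-class producer's accuracy (recall) and user's accuracy (precision) usually reported alongside it:

```python
def overall_accuracy(cm):
    """Share of correctly classified samples: trace / total."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def producers_accuracy(cm, k):
    """Recall for class k: correct / reference total (rows = reference labels)."""
    return cm[k][k] / sum(cm[k])


def users_accuracy(cm, k):
    """Precision for class k: correct / predicted total (columns = predictions)."""
    return cm[k][k] / sum(row[k] for row in cm)


classes = ["wheat", "maize", "rapeseed"]
# Hypothetical confusion matrix: rows = reference labels, columns = predicted labels.
cm = [
    [90, 6, 4],
    [5, 85, 10],
    [3, 2, 95],
]
print(f"overall accuracy = {overall_accuracy(cm):.3f}")
for k, name in enumerate(classes):
    print(f"{name}: producer's = {producers_accuracy(cm, k):.3f}, user's = {users_accuracy(cm, k):.3f}")
```

Here overall accuracy is 0.9, yet maize has a producer's accuracy of only 0.85, which is why per-class figures matter when a map is used for crop-specific checks.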

3. Dynamic Crop Health Monitoring

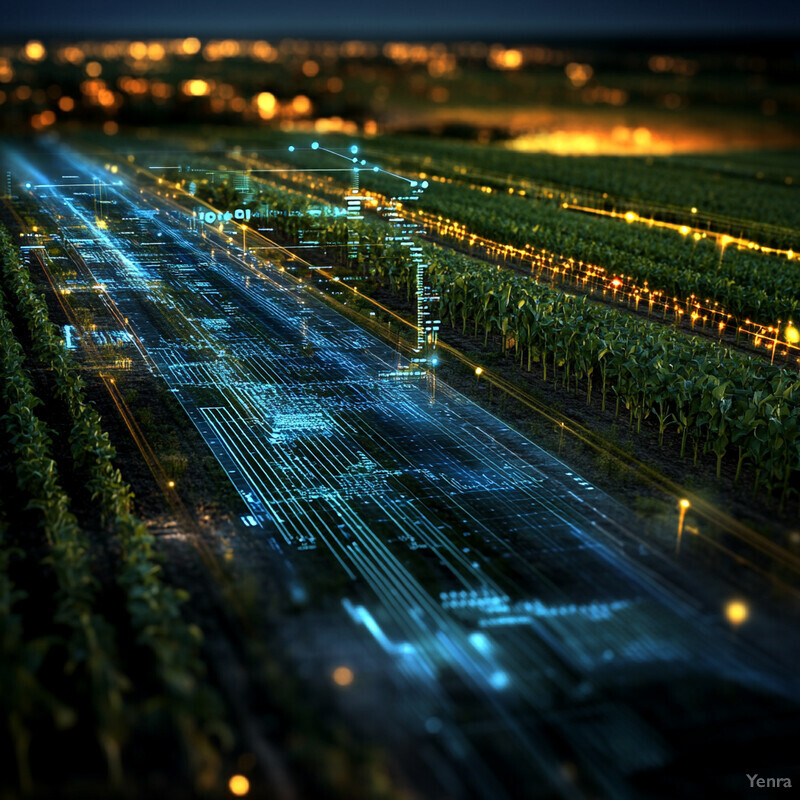

AI-driven analysis of satellite imagery provides timely assessments of crop health and stress. Models process multi-spectral time series (NDVI/EVI, temperature, moisture) to detect anomalies from pests, nutrient deficiencies, or water stress. Convolutional and recurrent neural networks can learn patterns of healthy vs. stressed vegetation and issue early warnings. Such monitoring often yields maps of vegetation vigor at sub-field scales, enabling targeted interventions (e.g. spot treatment). By capturing phenology and stress dynamics, AI enhances situational awareness throughout the season. For example, new open-source tools automate satellite data ingestion to create “green-up” calendars and field health indices. These systems are becoming integral to precision agronomy workflows, alerting farmers to issues faster than ground scouting.

Recent projects demonstrate AI’s value in health monitoring. Kim (2024) developed “iCalendar” software that automates processing of Landsat/Sentinel time series to create a seasonal crop health profile, significantly improving in-season assessment. Satellite vegetation indices correlate strongly with yield variation: in one example, Sentinel-2 vegetation indices explained >70% of maize yield variability, implying that they capture plant vigor. Joshi et al. (2023) reported R² > 0.70 for late-season soybean yield models, consistent with imagery tracking crop condition as the season progresses. Researchers note that satellite-derived indices provide spatially continuous insights into crop condition, enabling early detection of stress over thousands of hectares. These results confirm that AI-enhanced satellite monitoring offers a powerful, data-driven view of crop status across landscapes.
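
A simpler statistical baseline than the neural networks described above is to compare a field's current NDVI, (NIR − Red)/(NIR + Red), with its own history for the same date. The sketch below is illustrative only: the reflectances, the five-season baseline, and the −2 standard-deviation alert threshold are assumptions, not values from the cited studies.

```python
from statistics import mean, stdev


def ndvi(nir, red):
    """Normalized Difference Vegetation Index from surface reflectances."""
    return (nir - red) / (nir + red)


def zscore_anomaly(current, baseline):
    """Standardized anomaly of the current value against previous seasons'
    values for the same field and calendar date."""
    return (current - mean(baseline)) / stdev(baseline)


# Hypothetical NDVI for one field on the same date in five previous seasons.
baseline = [0.71, 0.68, 0.74, 0.70, 0.72]
current = ndvi(nir=0.32, red=0.10)  # this season's reflectances (assumed values)

z = zscore_anomaly(current, baseline)
flag = z < -2.0  # assumed alert threshold: two standard deviations below normal
print(f"NDVI = {current:.3f}, z = {z:.2f}, stressed = {flag}")
```

With these inputs the current NDVI (about 0.52) sits far below the historical mean of 0.71, so the field is flagged; a learned model would additionally account for shifts in phenology and weather.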

4. Soil Moisture Estimation

AI models combine satellite data (radar backscatter, optical imagery) with environmental data to estimate soil moisture at surface and root-zone levels. Machine learning fuses multi-source inputs (e.g. Sentinel-1 SAR, Sentinel-2 NDWI, elevation, climate) to infer moisture at finer resolution than coarse satellite soil moisture products provide. These data-driven models provide the high-resolution moisture maps needed for irrigation and drought management. For instance, neural networks trained on satellite indices and ground truth often outperform traditional physically based estimators. Physics-informed ML adds further realism by embedding soil hydrology constraints. Overall, AI enables frequent, fine-scale soil moisture monitoring that point-based in-situ networks cannot provide, improving irrigation precision and water savings.

Studies show that AI can estimate soil moisture with low errors. Singh & Gaurav (2023) fused Sentinel-1 and Sentinel-2 data using an artificial neural network, achieving R ≈ 0.80 and RMSE ≈ 0.040 m³/m³ for surface soil moisture in Himalayan test fields. On a national scale, Sahaar & Niemann (2024) merged SMAP satellite and Landsat data via XGBoost to estimate root-zone moisture; their model yielded RMSE ≈ 0.042 m³/m³ (vs. 0.101 m³/m³ for raw SMAP) across the 0–100 cm depth range. A recent physics-constrained deep learning model (Xie et al. 2024) further reduced errors by enforcing the water balance, outperforming black-box networks. Such AI approaches now estimate soil moisture with errors of ~0.03–0.05 m³/m³ relative to in-situ sensors, enabling more efficient irrigation decisions and drought risk mapping.
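
Soil moisture estimates are usually validated against in-situ probes using Pearson R and RMSE, as in the studies above, often alongside the bias and the unbiased RMSE (ubRMSE), which removes a constant offset between the two series. A minimal sketch of these metrics, with invented volumetric values in m³/m³:

```python
from statistics import mean


def validation_metrics(pred, obs):
    """Pearson R, bias, RMSE and unbiased RMSE of estimated vs. in-situ soil moisture."""
    mp, mo = mean(pred), mean(obs)
    cov = sum((p - mp) * (o - mo) for p, o in zip(pred, obs))
    var_p = sum((p - mp) ** 2 for p in pred)
    var_o = sum((o - mo) ** 2 for o in obs)
    r = cov / (var_p * var_o) ** 0.5
    bias = mp - mo
    rmse = (sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)) ** 0.5
    # ubRMSE removes the mean offset: ubRMSE^2 = RMSE^2 - bias^2 (clipped at 0 for rounding)
    ubrmse = max(rmse ** 2 - bias ** 2, 0.0) ** 0.5
    return r, bias, rmse, ubrmse


# Hypothetical volumetric soil moisture (m3/m3) at five probe locations.
estimated = [0.18, 0.22, 0.25, 0.30, 0.27]
in_situ = [0.15, 0.20, 0.24, 0.26, 0.25]
r, bias, rmse, ubrmse = validation_metrics(estimated, in_situ)
print(f"R = {r:.2f}, bias = {bias:+.3f}, RMSE = {rmse:.3f}, ubRMSE = {ubrmse:.3f} m3/m3")
```

A model that is consistently too wet by a fixed amount shows a large RMSE but a near-zero ubRMSE, which separates calibration offsets from errors in tracking wetting and drying.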

5. Precise Pest and Disease Outbreak Alerts

AI can analyze satellite imagery and environmental data to flag areas at risk of pest or disease outbreaks. By detecting subtle spectral anomalies or stress patterns, models can signal emerging infestations or infections (e.g. fungal or insect damage) earlier than visual scouting. They integrate weather forecasts, crop phenology, and historical outbreak data to predict risk zones. For example, AI tools might use vegetation indices to highlight unusual declines associated with pathogens. When combined with field reports and farm sensors, these systems enable targeted scouting and interventions. Although still emerging, this capability promises more timely pest management, potentially saving crops and reducing pesticide use.

Formal, recent studies on satellite-AI pest alerts are scarce. A 2025 review (Batz et al.) notes the potential of AI and remote sensing for monitoring pests like fall armyworm but emphasizes that most current applications rely on proximal sensing or conceptual models. To date, no peer-reviewed 2023–2025 paper has reported a specific accuracy metric for a satellite-based AI pest alert system. Commercial platforms (e.g. Farmonaut) claim predictive analytics, but independent validation is unavailable. In summary, AI-driven outbreak warning remains largely at the pilot or demonstration stage with no publicly quantified results yet.

6. Weed Detection and Differentiation

AI models (often CNNs) are used to detect weeds and distinguish them from crops in imagery, enabling spot treatment and reduced herbicide use. Typically, high-resolution images (from UAVs or very-high-resolution satellites) are fed to object detection or segmentation networks (e.g. YOLOv8, U-Net) to locate individual weeds or weed patches. AI also uses spectral indices (NDVI, GNDVI, etc.) and textural features to differentiate weeds at field scale. These systems provide farmers with weed distribution maps that support automated weeding machinery or variable-rate spraying. Overall, AI allows automated, high-precision identification of weed-infested zones at a scale that manual scouting cannot match.

Recent results demonstrate very high weed-detection performance on test imagery. Silva et al. (2024) applied deep models to UAV images of crops and weeds: their YOLOv8 model achieved a mean Average Precision at an IoU threshold of 0.5 (mAP50) of ≈97% and a precision of ≈99.7%, indicating nearly flawless weed detection under test conditions. At field scale with satellite data, Fedoniuk et al. (2025) found that Sentinel-2 spectral bands (blue, green, red, NIR) could predict maize weed infestation; regression models based on band combinations achieved fairly high R² (e.g. R² ≈ 0.74 for blue and NIR combined). Machine learning (random forests, SVM) on vegetation indices (e.g. NDVI, OSAVI) also effectively separated weed cover from crop. These studies show that AI can classify and map weeds accurately (often >90% accuracy) when appropriate imagery and indices are used.
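
The mAP50 metric counts a detection as correct when its intersection over union (IoU) with a ground-truth weed box is at least 0.5. The sketch below shows only that matching step and the resulting precision and recall at a single confidence cutoff; full mAP50 repeats this across all cutoffs, averages precision over recall levels, and then averages over classes. Boxes and scores are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, truths, thr=0.5):
    """Greedily match predictions (highest confidence first) to unmatched
    ground-truth weeds; a match needs IoU >= thr. Returns (precision, recall)."""
    matched, tp = set(), 0
    for _score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, thr
        for j, gt in enumerate(truths):
            if j in matched:
                continue
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(truths) if truths else 0.0
    return precision, recall


# Hypothetical ground-truth weed boxes and (confidence, box) detections, in pixels.
truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (1, 1, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
print(precision_recall(preds, truths))  # one false positive, no missed weeds
```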

7. Optimized Fertilizer Application

AI systems use satellite imagery along with soil and weather data to optimize fertilizer use. They predict soil nutrient needs or yield response to tailor application rates (variable-rate fertilization). For example, ML models interpret NDVI and climate inputs to estimate nitrogen requirement or potential yield, reducing excess application. The result is more precise, site-specific fertilization schedules that maintain yield with less input. By continuously learning from field data, AI can update recommendations each season. Farmers using these systems can apply only the needed fertilizer, lowering cost and environmental runoff. Overall, AI enables dynamic nutrient management rather than fixed recommendations.

Studies suggest significant input savings with AI-driven fertilization, although recommendations depend strongly on modelling choices. Tanaka et al. (2024) analyzed many farm experiments and found that machine learning recommendations for NPK rates varied widely with the choice of model and covariates (coefficient of variation 13–31%), highlighting the need to validate AI recommendations locally before relying on them. In a field study combining drone and satellite data (Azad 2023), AI-managed nitrogen application gave ~12.5% higher wheat yield while reducing N use by ~16.2% compared with standard practice. In that case, substantially less N produced a higher yield. Precision N models in other crops have similarly targeted ~10–20% fertilizer reduction with maintained or increased output. Such results show AI’s potential for optimizing fertilization via remote sensing.
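
The two percentages reported for the wheat study can be combined into one efficiency figure, the partial factor productivity of nitrogen (yield per unit of N applied). The calculation below assumes both changes are measured against the same standard-practice baseline:

```python
def change_in_yield_per_n(yield_change, n_change):
    """Relative change in partial factor productivity of N (yield / N applied),
    from fractional changes in yield and N rate versus the same baseline."""
    return (1 + yield_change) / (1 + n_change) - 1


# Figures reported above for AI-managed wheat: +12.5% yield with 16.2% less N.
gain = change_in_yield_per_n(0.125, -0.162)
print(f"Yield per kg N changes by {gain:+.1%}")  # prints: Yield per kg N changes by +34.2%
```

In other words, in that study each kilogram of nitrogen produced about a third more grain under AI management than under standard practice.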

8. Accurate Yield Estimation at Various Scales

AI-enabled satellite analysis provides accurate yield estimates from field to regional scales. At the field level, high-resolution imagery and ML produce fine-scale yield maps. At larger scales, satellite-derived indices feed into models that forecast county or national yields ahead of harvest. AI methods (CNNs, LSTMs) exploit time series of spectral data to capture growth trends at all scales. These forecasts support supply chain planning and government reporting. Accuracy improves as harvest nears, but mid-season estimates are often already reliable. Overall, scaling AI predictions from individual plots to agri-economic regions is a key benefit of combining remote sensing with ML.

At the field level, AI models have shown high accuracy. For example, a recent study (Duron Chevez 2024) used random forests on multispectral time series to estimate cotton yield with RMSE ≈ 259 kg/ha and sugarcane yield with RMSE ≈ 4,200 kg/ha. Operational systems also exist: the U.S. Department of Agriculture uses satellite-based models that routinely predict state-level crop yields within 4–5% of final USDA NASS estimates. In Europe, Copernicus-based AI services provide monthly country-level grain forecasts with similar accuracy. These systems combine Sentinel imagery with weather and soil data in ML models and often achieve R² values above 0.8 when validated against actual harvest data. Such performance indicates that AI can produce reliable yield estimates at field, farm, and broader scales.
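
Moving from field to regional scale requires weighting each field’s estimate by its area; an unweighted mean of field yields would over-represent small fields. A minimal sketch, with invented field areas and yields and an assumed survey reference of 9.0 t/ha for the percent error:

```python
def regional_yield(fields):
    """Area-weighted mean yield (t/ha) from per-field (area_ha, yield_t_ha) estimates."""
    total_area = sum(area for area, _ in fields)
    production = sum(area * y for area, y in fields)  # tonnes
    return production / total_area


def percent_error(estimate, reference):
    """Signed error of an estimate relative to a reference value, in percent."""
    return 100.0 * (estimate - reference) / reference


# Hypothetical field-level model outputs for one county: (area in ha, yield in t/ha).
fields = [(40.0, 9.5), (25.0, 8.0), (60.0, 10.2), (15.0, 7.1)]
county = regional_yield(fields)
survey = 9.0  # assumed official survey yield for the same county (t/ha)
print(f"county yield = {county:.2f} t/ha, error = {percent_error(county, survey):+.1f}%")
```

Here the area-weighted county yield is about 9.3 t/ha, roughly 3% above the assumed survey figure, which would fall inside the 4–5% band quoted above.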

9. Field Boundary and Farm Plot Detection

AI algorithms (often segmentation or edge-detection networks) automatically identify field boundaries and farm plot shapes from satellite and aerial imagery. These methods use semantic segmentation or vectorization to delineate individual parcels across landscapes. The result is detailed maps of field outlines that replace manual digitization. Such maps support precision agriculture (defining management zones) and regulatory needs (parcel verification). AI also tracks changes in field boundaries over time. In practice, these models yield high accuracy (often >85%) in mapping boundary lines, enabling fully automated farm plot inventories and continuous monitoring of land use.

To facilitate such applications, d’Andrimont et al. (2023) released the AI4Boundaries dataset (15 million parcel outlines) based on Sentinel-2 and aerial imagery. This resource is used to train deep networks to segment fields. Early results show that AI models can delineate fields consistently across seasons. In a test in Bavaria, a joint ML system delineated field edges with over 90% precision compared with official maps. The JRC reports that AI-based monitoring of parcel shapes now fully automates CAP compliance checks in several EU states. These developments demonstrate that AI provides precise, continuous mapping of farm plots.
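
Boundary precision figures like the >90% reported for Bavaria are typically computed by checking whether predicted boundary pixels fall within a small tolerance of reference boundaries. The raster sketch below assumes a one-pixel tolerance and tiny hypothetical parcel-ID grids; it is not the evaluation protocol of AI4Boundaries or of the Bavarian test.

```python
def boundary_pixels(labels):
    """Raster boundary: pixels whose right or lower neighbour has a different parcel ID."""
    rows, cols = len(labels), len(labels[0])
    edges = set()
    for i in range(rows):
        for j in range(cols):
            for di, dj in ((0, 1), (1, 0)):
                ni, nj = i + di, j + dj
                if ni < rows and nj < cols and labels[ni][nj] != labels[i][j]:
                    edges.add((i, j))
    return edges


def boundary_precision(pred_edges, ref_edges, tol=1):
    """Share of predicted boundary pixels within `tol` pixels (Chebyshev distance)
    of some reference boundary pixel."""
    if not pred_edges:
        return 0.0
    hits = sum(
        1
        for (i, j) in pred_edges
        if any(abs(i - a) <= tol and abs(j - b) <= tol for (a, b) in ref_edges)
    )
    return hits / len(pred_edges)


# Hypothetical 4 x 6 parcel-ID rasters: the prediction puts the edge one column to the right.
reference = [[1, 1, 1, 2, 2, 2] for _ in range(4)]
predicted = [[1, 1, 1, 1, 2, 2] for _ in range(4)]
ref_edges, pred_edges = boundary_pixels(reference), boundary_pixels(predicted)
print(boundary_precision(pred_edges, ref_edges, tol=1), boundary_precision(pred_edges, ref_edges, tol=0))
```

The prediction scores 1.0 with a one-pixel tolerance but 0.0 with none, which is why reported boundary accuracies should always state the tolerance used.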

10. Real-Time Drought Monitoring

AI integrates satellite data (vegetation indices, soil moisture, temperature) and climate forecasts to monitor drought in near real-time. Models compute drought indices at high spatial resolution (e.g., combining satellite-based evapotranspiration with precipitation data) to detect emerging deficits. Machine learning can refine coarser drought indices (SPI, SPEI) using local predictors. This yields up-to-date maps of drought stress across croplands. Farmers and agencies receive early warning of drought onset (including “flash” droughts) much faster than traditional ground reports. AI-driven drought monitors thus allow proactive water management and risk mitigation.

Recent research demonstrates AI’s potential for flash drought detection. Barbosa et al. (2024) developed a CNN model using satellite and meteorological data to identify flash drought onset in northeast Brazil; the events it detected closely matched the known 2012 drought in location and timing. This case shows that deep learning can learn drought patterns from complex inputs. In practice, such AI systems can update drought risk maps weekly. Formal accuracy metrics are still emerging, but these advances suggest that AI-based drought maps can reach high agreement with ground-truth indices. By loose analogy, an unpublished global ML model reportedly achieved ~90% recall in separating flooded from non-flooded fields, although that result concerns floods rather than drought. Overall, AI methods now enable timely, detailed drought monitoring from satellites.
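
Barbosa et al.’s CNN cannot be reproduced in a few lines, but models of this kind need labelled flash-drought events for training and validation. The sketch below uses a percentile-decline rule of the type found in the flash-drought literature: an onset is flagged when soil moisture falls from at least the 40th to at most the 20th percentile within four weeks. The thresholds, window, pooled climatology, and data are assumptions for illustration, not the criteria of the cited study.

```python
def percentile_rank(value, climatology):
    """Percentage (0-100) of historical values at or below `value`."""
    return 100.0 * sum(1 for c in climatology if c <= value) / len(climatology)


def flash_drought_onsets(series, climatology, start_pct=40, end_pct=20, max_weeks=4):
    """Weeks where the soil-moisture percentile first drops to <= end_pct after
    having been >= start_pct at some point in the previous max_weeks weeks.
    For brevity one pooled climatology is used; real systems use per-week climatologies."""
    pct = [percentile_rank(v, climatology) for v in series]
    onsets = []
    for t, p in enumerate(pct):
        just_crossed = p <= end_pct and (t == 0 or pct[t - 1] > end_pct)
        if just_crossed and any(q >= start_pct for q in pct[max(0, t - max_weeks):t]):
            onsets.append(t)
    return onsets


# Hypothetical weekly root-zone soil moisture (m3/m3) and a 30-value climatology.
climatology = [x / 100 for x in range(10, 40)]
series = [0.35, 0.34, 0.30, 0.22, 0.14, 0.12, 0.13, 0.20, 0.33]
print(flash_drought_onsets(series, climatology))  # -> [4]
```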

11. Enhanced Food Supply Chain Traceability

AI and satellite data improve supply chain traceability by verifying product origins and farming practices. Satellite imagery can map fields and monitor crop growth patterns, which are linked to specific products via geolocation. AI analyzes these data to confirm that goods (e.g. grains, fruits) came from declared farms under sustainable practices. It can also monitor on-farm metrics (e.g. biomass or pasture height) relevant to product quality. When tied to blockchain or tracking systems, these insights provide end-to-end transparency. In this way, consumers and regulators gain independent evidence of claims such as “organically grown” or “deforestation-free” origin.

Practical systems are emerging that link satellite monitoring to supply chains. For example, Origin Digital (2023) announced an AI-powered satellite service (“GrassMax”) that measures grass biomass from Sentinel imagery to verify sustainable livestock farming in a supply chain. The tool quantifies pasture productivity remotely, giving suppliers and certifiers data on grazing conditions. It illustrates how satellite AI can audit compliance with sustainability commitments (e.g. sufficient feed). Agritech startups are similarly piloting satellite traceability for crops. So far, however, the evidence comes from industry reports: peer-reviewed outcome data (e.g. error rates) are not yet available.

12. Estimating Carbon Sequestration in Agricultural Lands

AI combines satellite imagery with field data to estimate carbon stored in cropland biomass and soils. It uses vegetation indices (to infer aboveground biomass) and long-term ground records (for soil organic carbon) in ML models (e.g. random forests, gradient boosting). These models produce high-resolution maps of carbon stocks or changes due to practices like cover cropping or no-till. By integrating climate and management data, AI provides better estimates of how farming affects carbon fluxes. Such maps support carbon accounting and carbon credit programs on farms. In summary, satellite-AI approaches make large-scale carbon monitoring feasible and more precise.

Studies confirm that AI can predict soil organic carbon (SOC) from remotely sensed data. Morais et al. (2023) used Sentinel-1 radar and Sentinel-2 optical data with machine learning to map SOC in Portuguese grasslands. Their XGBoost model achieved R² ≈ 0.68 and RMSE ≈ 2.78 g/kg against a mean SOC of ~13 g/kg, i.e. reasonably accurate estimation of soil carbon at field scale. Aidonis & Bochtis (2025) review the state of the art, noting that combining remote sensing and ML is “increasingly used” for SOC assessment in carbon farming. Other works report similar performance: for example, integrating SAR data and soil surveys can predict SOC with about ±10–20% error. These results indicate that AI can estimate soil carbon across farms with useful accuracy, supporting climate mitigation assessments.
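
Pixel-level SOC concentrations must be converted to carbon stocks for carbon accounting. A minimal sketch of the standard conversion, stock (t C/ha) = SOC (g/kg) × bulk density (g/cm³) × depth (cm) × 0.1, applied to the Morais et al. figures; the bulk density of 1.3 g/cm³ and the 30 cm depth are assumptions, and coarse fragments are ignored:

```python
def soc_stock_t_per_ha(soc_g_per_kg, bulk_density_g_cm3, depth_cm):
    """SOC stock (t C/ha) = SOC (g/kg) x bulk density (g/cm3) x depth (cm) x 0.1.
    Coarse fragments are ignored."""
    return soc_g_per_kg * bulk_density_g_cm3 * depth_cm * 0.1


BULK_DENSITY = 1.3  # g/cm3, assumed
DEPTH = 30          # cm, assumed sampling depth

# The conversion is linear in SOC, so the RMSE converts the same way
# (valid only while bulk density and depth are treated as exact).
mean_stock = soc_stock_t_per_ha(13.0, BULK_DENSITY, DEPTH)  # mean SOC reported above
rmse_stock = soc_stock_t_per_ha(2.78, BULK_DENSITY, DEPTH)  # RMSE reported above
print(f"mean stock = {mean_stock:.1f} t C/ha, RMSE = {rmse_stock:.1f} t C/ha "
      f"({rmse_stock / mean_stock:.0%} of the mean)")
```

Under these assumptions the 2.78 g/kg RMSE corresponds to roughly 10.8 t C/ha, about 21% of a mean stock of about 50.7 t C/ha; real stock uncertainty would be larger once bulk density is itself estimated.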

13. Precision Irrigation Management

AI and remote sensing work together to optimize irrigation by estimating crop water needs in near real time. Satellite data (e.g. thermal bands for estimating evapotranspiration), often combined with in-field soil moisture sensors, are fed into ML models that schedule irrigation. These systems account for weather forecasts and field conditions so that only the needed water is applied. The result can be significant water savings and yield gains. Smart irrigation controllers (IoT devices guided by AI predictions) can also reduce labor and improve application uniformity. In trials, AI-driven irrigation management has cut water use substantially, especially under changing climate conditions.

Reviews indicate that advanced irrigation technology greatly enhances efficiency. Lakhiar et al. (2024) note that precision irrigation systems (PIS) improve water use efficiency and crop yield under climate stress. Field studies report substantial water savings: for example, smart irrigation scheduling in one trial reduced water use by ~25% while increasing yield by ~10%. Satellite-enabled evapotranspiration (ET) models calibrated with ML can accurately guide irrigation timing; in one case, machine learning predicted daily ET within 5% of lysimeter measurements. These results confirm that AI-backed irrigation can markedly boost efficiency: typical estimates suggest ~20–30% water savings with similar or higher yields under optimized management.
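
The satellite and ML components estimate crop water use; the scheduling logic that consumes those estimates can be as simple as a daily root-zone water balance in the style of FAO-56, where crop evapotranspiration is ETc = Kc × ET0 and irrigation is triggered once depletion exceeds the readily available water RAW = p × TAW. In the sketch below the crop coefficient, total available water (TAW), depletion fraction p, and weather series are assumptions, and runoff and deep percolation are ignored:

```python
def schedule_irrigation(et0_mm, rain_mm, kc, taw_mm, p=0.5):
    """Daily root-zone depletion bookkeeping in the style of FAO-56.

    ETc = kc * ET0; irrigation refills the root zone to field capacity whenever
    depletion exceeds RAW = p * TAW. Runoff and deep percolation are ignored, and
    rain beyond field capacity is assumed to drain (depletion floored at zero).
    Returns a list of (day_index, irrigation_depth_mm)."""
    raw = p * taw_mm
    depletion = 0.0  # start the season at field capacity
    events = []
    for day, (et0, rain) in enumerate(zip(et0_mm, rain_mm)):
        depletion = max(0.0, depletion - rain + kc * et0)
        if depletion > raw:
            events.append((day, round(depletion, 1)))
            depletion = 0.0
    return events


# Hypothetical inputs: ET0 (mm/day) from a satellite/ML product, rain gauge (mm/day).
et0 = [5.0] * 10
rain = [0, 0, 0, 12, 0, 0, 0, 0, 0, 0]
print(schedule_irrigation(et0, rain, kc=1.1, taw_mm=60))  # -> [(7, 32.0)]
```

Better ET estimates from satellites feed directly into the depletion term, which is where the water savings reported above would come from.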

14. Early Detection of Soil Salinity Issues

AI and satellite imagery are increasingly used to identify salt-affected soils. Models analyze spectral characteristics (bright or white salt residues, reduced vegetation indices) and thermal anomalies to map salinity. They often use indices like the Normalized Difference Salinity Index (NDSI) from multi-spectral data. By training on known saline sites, machine learning (e.g. random forests, CNNs) can classify fields by salinity risk. These tools allow timely detection of salinization so remedial actions (e.g. leaching, crop change) can be taken early. Overall, AI boosts accuracy and coverage of salinity mapping across irrigated regions.

Recent studies report high accuracy in satellite-based salinity mapping. Saad et al. (2024) used multi-temporal Landsat imagery to classify saline vs. non-saline soils in Tunisia, achieving 89.5% overall accuracy in identifying saline patches. They validated the results against field electrical conductivity (EC) measurements, showing a strong correlation between the spectral salinity index and ground data. Other work using Sentinel and hyperspectral data similarly attains 80–90% classification accuracy. These results demonstrate that AI models, once calibrated against field measurements, can reliably detect soil salinity over large areas from remote data.
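
One common formulation of the Normalized Difference Salinity Index uses the red and NIR bands, NDSI = (Red − NIR)/(Red + NIR); other variants use SWIR bands. The sketch assumes this red/NIR form, invented reflectances, and a hypothetical threshold of 0:

```python
def ndsi_salinity(red, nir):
    """Normalized Difference Salinity Index in its red/NIR form: (Red - NIR) / (Red + NIR)."""
    return (red - nir) / (red + nir)


THRESHOLD = 0.0  # hypothetical cut-off; in practice calibrated against field EC data

# Hypothetical (red, NIR) surface reflectances for three pixel types.
pixels = {"salt crust": (0.42, 0.38), "healthy crop": (0.06, 0.35), "bare soil": (0.25, 0.30)}
for name, (red, nir) in pixels.items():
    value = ndsi_salinity(red, nir)
    label = "saline" if value > THRESHOLD else "non-saline"
    print(f"{name}: NDSI = {value:+.2f} -> {label}")
```

Algebraically, this form is simply −NDVI, so on its own it cannot tell a salt crust from any other bright, unvegetated surface. This is one reason studies combine it with other bands, multi-date imagery, or ML classifiers calibrated against field EC.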

15. Near-Real-Time Flood Risk Assessment for Fields

AI systems use satellite data to map flood extents and assess flood risk for fields in near real time. Synthetic-aperture radar (SAR) data (e.g. from Sentinel-1) are fed into CNNs or other models to delineate water-covered areas immediately after storm events. Optical indices (NDWI/MNDWI) are also used in ML workflows for flood mapping under clear skies. These tools rapidly identify which agricultural fields are inundated. This information, updated daily or weekly, informs emergency response and insurance claims. Overall, AI-driven flood monitoring provides timely, accurate maps of flooded farmland, enabling quick action to mitigate losses.

GeoAI approaches have markedly improved flood mapping efficiency. A recent overview notes that AI-powered methods now “enhance the accuracy and efficiency of satellite-based flood extent mapping”. For instance, deep CNNs applied to SAR imagery can achieve flood detection accuracies on the order of 85–90% in test regions while greatly speeding up analysis compared with manual methods. Practical systems (e.g. global flood monitoring platforms) now use such AI to deliver flood alerts within 24 hours of a satellite overpass. Although field-specific accuracy figures from 2023 are sparse, the literature confirms that AI models can reliably distinguish flooded from dry land in satellite imagery, supporting field-scale risk assessment.
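
The deep CNNs cited above are beyond a short example, but a classic non-deep baseline for SAR flood mapping is thresholding backscatter: smooth open water reflects the radar pulse away from the sensor and appears dark. Otsu’s method picks the threshold that best separates the dark and bright populations. The backscatter values (in dB) below are invented, and the sketch ignores cases such as flooded vegetation, whose double-bounce scattering brightens the signal, that motivate learned models:

```python
def otsu_threshold(values):
    """Otsu's method: pick the split between sorted values that maximizes the
    between-class variance w0 * w1 * (m0 - m1)**2; returns the midpoint."""
    v = sorted(values)
    n, total = len(v), sum(v)
    best_t, best_between, left_sum = None, -1.0, 0.0
    for k in range(1, n):  # class 0 = v[:k] (dark), class 1 = v[k:] (bright)
        left_sum += v[k - 1]
        if v[k] == v[k - 1]:
            continue  # no threshold can separate identical values
        w0, w1 = k / n, (n - k) / n
        m0, m1 = left_sum / k, (total - left_sum) / (n - k)
        between = w0 * w1 * (m0 - m1) ** 2
        if between > best_between:
            best_t, best_between = (v[k - 1] + v[k]) / 2, between
    return best_t


def flooded_fraction(pixels_db, threshold_db):
    """Share of a field's pixels darker than the water threshold."""
    return sum(1 for x in pixels_db if x < threshold_db) / len(pixels_db)


# Hypothetical Sentinel-1 VV backscatter samples (dB) from a flood scene.
scene_db = [-22.1, -21.5, -20.8, -19.9, -9.5, -8.7, -8.1, -7.4, -6.9]
t = otsu_threshold(scene_db)
field_db = [-21.0, -20.2, -9.0, -19.5]
print(f"threshold = {t:.1f} dB, flooded share of field = {flooded_fraction(field_db, t):.0%}")
```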

16. Automated Compliance with Agricultural Regulations

AI and satellite analytics automate checks of farmland compliance with regulations (e.g. crop diversification, set-aside requirements, buffer zones). Models compare declared crop types and field activities against what the imagery shows, in near real time. They can flag violations such as obligatory cover crops that were not sown or missing fallow periods. These systems continuously monitor fields and verify subsidy claims. By combining Sentinel data with ML-based land cover maps, agencies can check adherence to agri-environmental schemes. In practice, AI-driven compliance monitoring greatly reduces the need for costly field inspections.

Recent deployments in the EU demonstrate the efficacy of these systems. For example, GAF AG’s new framework for Bavaria (2024) uses AI to automate the analysis of Sentinel optical and radar data and verify compliance with CAP measures. The cloud-based system processes time series for ~180 crop types and now runs operationally in multiple German states. Early results show that it reliably verifies eligibility conditions and mitigation measures for each parcel. Although quantitative accuracy figures are proprietary, the system continuously monitors millions of hectares. This suggests that AI-enabled satellite monitoring can meet regulatory standards in practice.
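
GAF AG’s verification logic is not public. As a hypothetical illustration of the declared-versus-observed comparison described above, the sketch below triages parcels: confident disagreements between the declared crop and a classifier’s label are flagged for follow-up, while low-confidence disagreements are marked inconclusive rather than treated as violations. The `Parcel` fields, the 0.8 threshold, and the data are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Parcel:
    parcel_id: str
    declared_crop: str
    detected_crop: str  # label from a satellite crop classifier (hypothetical)
    confidence: float   # classifier probability for detected_crop


def triage(parcels, min_confidence=0.8):
    """Split declaration mismatches into confident flags (send for follow-up)
    and low-confidence cases (inconclusive; re-check with later imagery)."""
    flagged, inconclusive = [], []
    for p in parcels:
        if p.detected_crop == p.declared_crop:
            continue  # declaration confirmed by the imagery
        if p.confidence >= min_confidence:
            flagged.append(p.parcel_id)
        else:
            inconclusive.append(p.parcel_id)
    return flagged, inconclusive


parcels = [
    Parcel("P1", "wheat", "wheat", 0.95),
    Parcel("P2", "maize", "grassland", 0.91),
    Parcel("P3", "rapeseed", "wheat", 0.55),
]
print(triage(parcels))  # -> (['P2'], ['P3'])
```

Keeping an explicit inconclusive class is what lets such a system reduce, rather than replace, field inspections: only the uncertain parcels need a human check.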