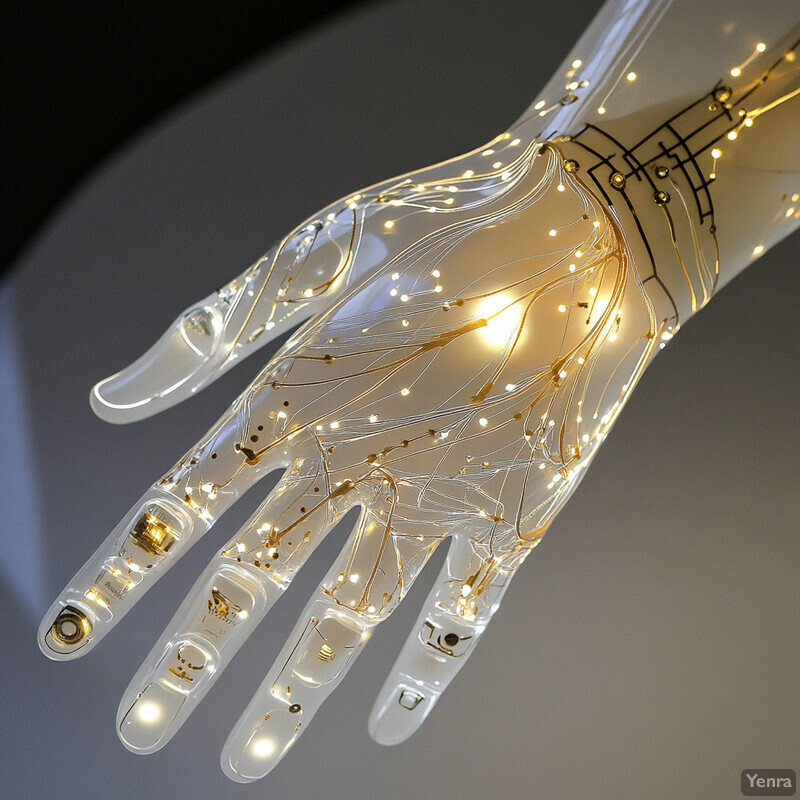

1. Personalized Prosthetic Design

AI enables highly tailored prosthetic designs by analyzing an individual’s unique anatomy and movement patterns. Modern algorithms can integrate data from limb scans, joint motion, and even daily activity habits to create prosthetic models custom-fit to each patient. This data-driven personalization optimizes how the prosthetic interfaces with the residual limb, improving comfort and natural motion. Unlike one-size-fits-all templates, AI-generated designs reduce pressure points and enhance alignment, often converging on a good fit with fewer manual adjustments. Overall, AI helps practitioners deliver a prosthetic that feels more like an extension of the user’s body, adapting as the user’s needs evolve.

The need for personalized prosthetic solutions is growing quickly. The nonprofit Amputee Coalition estimates there are about 2 million amputees in the U.S. today, a number expected to nearly double to 3.6 million by 2050. Each of these individuals has unique limb geometries and lifestyles, and AI-driven design can accommodate this diversity at scale. By leveraging high-resolution 3D scanning and machine learning, clinicians can now produce custom sockets much faster than traditional methods. For example, new systems allow prosthetists to go from scan to initial fitting in a fraction of the time previously required. This personalized approach is increasingly important as the amputee population grows, ensuring that the prosthetic fit and function are optimized for each person from the outset.

2. Optimized Material Selection

AI is transforming how engineers choose materials for prosthetic limbs. By predicting how different materials behave under complex loads, algorithms can suggest options that balance light weight, strength, and flexibility better than human trial-and-error. This means prosthetic components (like feet, pylons, and sockets) can be made stronger yet lighter by using advanced composites or alloys identified by AI. The result is often a limb that is more durable but also comfortable for the user due to reduced weight. Furthermore, AI can factor in fatigue life and environmental conditions (like moisture or temperature) to forecast long-term performance of materials. This data-driven approach speeds up the R&D process, as designers can test material candidates virtually before committing to physical prototypes, leading to more robust and long-lasting prosthetics in practice.

Advanced composites are becoming central to prosthetic design, and their use is rapidly growing. For example, the global carbon fiber composite prosthetics market is projected to expand from about $1.55 billion in 2025 to roughly $4.2 billion by 2034. This trend reflects the prosthetics field’s shift toward high-performance materials like carbon fiber, which offer excellent strength-to-weight ratios. Machine learning models contribute by analyzing huge datasets of material properties and past prosthetic performance to pick optimal materials for each component. As a result, newer prosthetic legs often use carbon-fiber composites that are several times stronger than aluminum alloys at a fraction of the weight, improving user mobility and reducing fatigue. The investment in AI-guided material selection is paying off in lighter, stronger limbs and is a key factor in the market growth noted above.

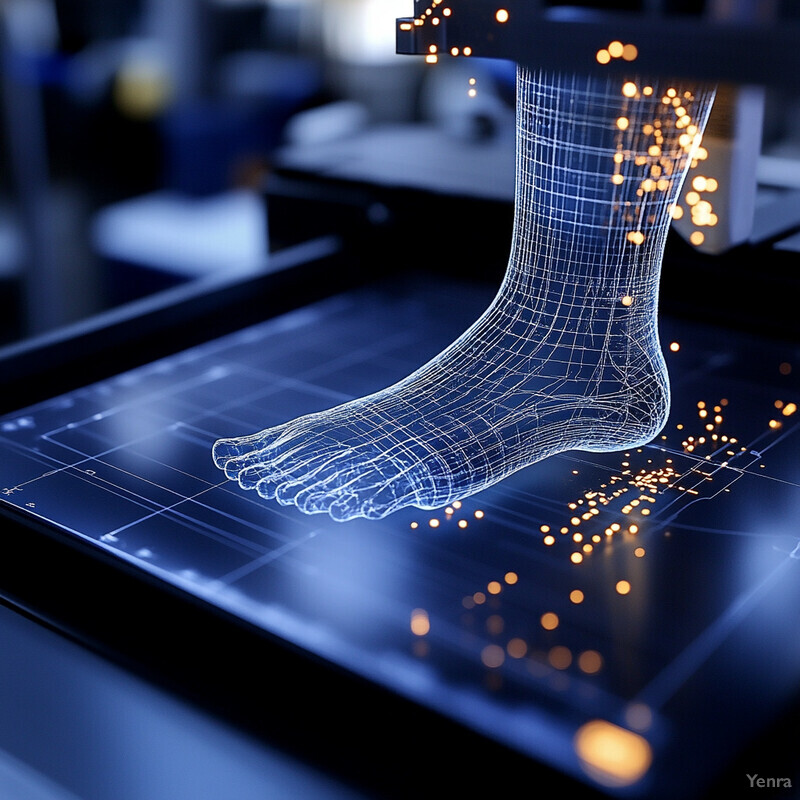

3. Data-Driven Shape Modeling

Generative AI techniques are now being used to design prosthetic shapes – especially sockets – that conform perfectly to a patient’s residual limb. Instead of relying solely on a prosthetist’s skill and manual molds, AI can learn from databases of successful fittings to suggest an ideal socket shape for comfort and stability. This process uses input like limb scan data and pressure distribution feedback. For the patient, this means fewer iterative refittings and adjustments: the first socket produced is much more likely to fit well. Over time, these models can further refine the fit by incorporating user feedback and gait analysis, evolving the design as the patient adapts. In short, data-driven shape modeling promises a faster, more precise path to a comfortable prosthetic fit, reducing the time between amputation and a well-fitted prosthesis.

AI-driven customization is dramatically lowering the cost and time of producing prosthetic limbs. A notable example is Unlimited Tomorrow’s TrueLimb arm, which uses 3D scanning and AI modeling to create personalized prosthetics. Traditional myoelectric prosthetic arms can cost up to $80,000, whereas the AI-assisted TrueLimb is priced at about $8,000. This roughly tenfold reduction in cost is achieved by eliminating multiple fittings and using additive manufacturing guided by AI-generated models. The entire process – from scanning a patient’s residual limb to 3D-printing a tailored arm – is streamlined by machine learning algorithms, which adjust the design to match the user’s anatomy and even skin tone. This approach not only makes prosthetics more accessible but also showcases how data-driven shape modeling can maintain quality (custom fit and function) while drastically cutting cost and production time.

4. Dynamic Gait Simulation

AI-powered simulations allow engineers to see how a prosthetic will influence a user’s gait before it’s built. By inputting a user’s weight, limb dynamics, and intended activities, deep learning models can predict how the person will walk, run, or climb with a given prosthetic design. These simulations highlight issues like abnormal gait patterns or high stress on joints, enabling designers to tweak stiffness or alignment virtually. This process significantly reduces the need for multiple physical prototypes. Importantly, dynamic gait simulation considers various terrains and speeds, ensuring the prosthetic can handle everything from slow walking on level ground to brisk hiking on uneven trails. The benefit to patients is a more natural gait: the final device has been through “virtual trial runs” to minimize limping, inefficiency, or discomfort. Ultimately, AI-based gait modeling helps produce prosthetics that support safer, more efficient movement right out of the gate.

Research is homing in on the optimal biomechanical models to simulate human gait. A 2023 study identified that an 18-degree-of-freedom (18-DOF) model of the lower limbs provides the best balance of accuracy and generality for walking and running simulations. In the study, researchers tested models with 9, 18, and 22 DOF and used statistical criteria to evaluate them. The 18-DOF model fit real gait data significantly better than either the simpler 9-DOF or the more complex 22-DOF models. This finding is important for AI gait simulation because it pinpoints how detailed the model needs to be – enough degrees of freedom to capture key joint interactions, but not so many as to overfit or complicate the analysis. With this optimized model, simulations can more faithfully predict a prosthetic’s impact on a person’s gait. For example, using an 18-DOF virtual leg, designers can foresee if a change in ankle stiffness might throw off knee kinematics and adjust accordingly, resulting in a smoother walking experience for the user.
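The trade-off between fit and complexity can be sketched with an information criterion. The text does not name the statistical criteria the study used, so this example assumes the Akaike information criterion (AIC) in its least-squares form. The residual sums of squares, the sample count, and the choice to count each degree of freedom as one fitted parameter are made-up illustrative values, not figures from the study.

```python
import math

def aic_least_squares(rss, n_samples, n_params):
    """AIC for a least-squares fit with Gaussian residuals (constant terms dropped)."""
    return n_samples * math.log(rss / n_samples) + 2 * n_params

# Hypothetical fits: DOF -> residual sum of squares on the same 1000 gait samples.
N_SAMPLES = 1000
hypothetical_rss = {9: 520.0, 18: 300.0, 22: 298.0}

# Assumption: one fitted parameter per degree of freedom.
scores = {
    dof: aic_least_squares(rss, N_SAMPLES, n_params=dof)
    for dof, rss in hypothetical_rss.items()
}
best_dof = min(scores, key=scores.get)

for dof, score in sorted(scores.items()):
    print(f"{dof:>2}-DOF model: AIC = {score:.1f}")
print("Lowest AIC:", best_dof)
```

With these placeholder numbers the 22-DOF model fits slightly better than the 18-DOF model, but not by enough to offset the penalty for its extra parameters, so the 18-DOF model has the lowest AIC.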

5. Real-Time Control Adjustments

AI enables prosthetic limbs to adapt on the fly to changing conditions. Using reinforcement learning and adaptive control algorithms, a smart prosthetic can automatically tweak its joint settings (like damping or torque) in real time. For instance, if you start walking faster or move from level ground to an incline, the AI controller will detect the new pattern and adjust the knee or ankle resistance instantly. This results in a smoother, safer gait without the user having to manually switch modes or think about it. Over time, the system “learns” the user’s typical movements and optimizes its responses. Real-time AI control also means the prosthetic can handle unexpected events – such as tripping or stepping on uneven ground – by reacting within milliseconds to stabilize the user. In summary, AI gives prosthetics a kind of reflexiveness, continually tuning performance to match the user’s needs and environment moment by moment.

Researchers have successfully applied reinforcement learning (RL) to prosthetic control so that artificial limbs can auto-tune themselves during use. Gao et al. (2020) demonstrated a knowledge-guided RL system that continuously adjusted a robotic knee’s impedance parameters to mimic a natural leg’s motion. In their approach, the algorithm learned optimal joint settings through trial-and-error interactions, gradually improving the stability and responsiveness of the prosthetic leg. Notably, the RL-controlled prosthetic could adapt to different walking speeds and terrains without explicit reprogramming. This kind of online learning capability has been shown to reduce trips and stumbles, as the controller refines its behavior with each step. The study by Gao et al. is a key proof-of-concept that prosthetic legs can autonomously co-adapt with their users, maintaining stability even as conditions change. It paves the way for next-generation limbs that need far less frequent manual tuning by clinicians.
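To make the idea of trial-and-error tuning concrete, here is a deliberately simple sketch. It is not the knowledge-guided RL algorithm of Gao et al. but a plain random hill climb standing in for it: it perturbs two impedance parameters and keeps only the changes that lower an error score. The `gait_error` function, its optimum, and the starting values are hypothetical.

```python
import random

def gait_error(stiffness, damping):
    """Toy stand-in for measured gait error; its minimum at (3.0, 0.4) is arbitrary."""
    return (stiffness - 3.0) ** 2 + 4.0 * (damping - 0.4) ** 2

def tune_by_trial_and_error(stiffness, damping, steps=500, step_size=0.1, seed=0):
    """Perturb both parameters at random and keep only changes that lower the error."""
    rng = random.Random(seed)
    best = gait_error(stiffness, damping)
    for _ in range(steps):
        cand_k = stiffness + rng.uniform(-step_size, step_size)
        cand_b = max(0.0, damping + rng.uniform(-step_size, step_size))
        err = gait_error(cand_k, cand_b)
        if err < best:
            stiffness, damping, best = cand_k, cand_b, err
    return stiffness, damping, best

initial_error = gait_error(1.0, 1.0)
k, b, final_error = tune_by_trial_and_error(1.0, 1.0)
print(f"error {initial_error:.2f} -> {final_error:.4f} at stiffness={k:.2f}, damping={b:.2f}")
```

A real controller would score each trial from sensor data gathered over actual steps and would have to limit how far it explores for safety. The loop of trying a setting, measuring the result, and keeping what works is the part this sketch shares with the RL approach.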

6. EMG Pattern Recognition

Electromyographic (EMG) pattern recognition uses AI to interpret the electrical signals from a user’s remaining muscles, allowing much more intuitive control of prosthetic limbs. Tiny sensors on the skin (or implanted) pick up muscle activation signals when the user imagines moving a missing limb. Machine learning algorithms then classify these complex signals in real time to determine the user’s intended movement – whether it’s a hand grasp, an arm raise, or a foot motion. Early myoelectric prosthetics could only differentiate a couple of signals (like “open hand” vs “close hand”), but modern AI-based systems can distinguish a rich vocabulary of movements. This means users can perform delicate tasks (like picking up a paper cup versus a heavy object) with appropriate force, simply by thinking about it. Over time, as the AI learns an individual’s EMG patterns, control becomes smoother and faster. In short, AI pattern recognition turns faint muscle signals into fluid, life-like movements of a prosthetic, vastly improving functional use.

State-of-the-art EMG-based control systems are achieving high accuracy in decoding user intentions. For limited sets of movements, classification accuracies above 90% are now common. Accuracy remains high even for larger sets: studies have shown over 87% accuracy in recognizing 12 distinct hand and wrist movements from EMG signals using advanced machine learning. (By comparison, older pattern recognition methods often dropped below 80% accuracy when many movement classes were involved.) These results come from efforts to apply deep learning – especially convolutional neural networks – to EMG data, since such networks handle the signals’ complexity better than traditional algorithms. High classification accuracy translates to more reliable prosthetic control: the prosthetic hand does what the user intends with minimal lag or errors. In practical terms, a user could think of twelve different gestures or grips (e.g. pinch, point, or fist), and the AI-driven prosthetic hand will correctly execute the intended movement roughly nine times out of ten. As algorithms and training datasets improve, EMG pattern recognition continues to close the gap between intended movement and prosthetic action.
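The classification step can be illustrated with a much simpler pipeline than the deep networks mentioned above. This sketch extracts two classic time-domain features from each signal window (mean absolute value and waveform length) and assigns the window to the nearest class centroid. The signals are synthetic Gaussian noise, and the two gestures and their amplitudes are hypothetical, so the result says nothing about real-world accuracy.

```python
import random

def emg_features(window):
    """Two classic time-domain features: mean absolute value and waveform length."""
    mav = sum(abs(x) for x in window) / len(window)
    wl = sum(abs(b - a) for a, b in zip(window, window[1:]))
    return (mav, wl)

def synthetic_window(amplitude, rng, n=200):
    """Zero-mean Gaussian noise standing in for one window of surface EMG."""
    return [rng.gauss(0.0, amplitude) for _ in range(n)]

def centroid(points):
    """Component-wise mean of a list of feature vectors."""
    return tuple(sum(p[i] for p in points) / len(points) for i in range(len(points[0])))

def classify(features, centroids):
    """Return the label whose centroid is nearest in squared Euclidean distance."""
    def dist2(label):
        return sum((f - c) ** 2 for f, c in zip(features, centroids[label]))
    return min(centroids, key=dist2)

rng = random.Random(42)
amplitudes = {"rest": 0.05, "grip": 0.5}  # hypothetical signal levels per gesture

# Train: average the feature vectors of 20 windows per gesture.
centroids = {
    label: centroid([emg_features(synthetic_window(amp, rng)) for _ in range(20)])
    for label, amp in amplitudes.items()
}

# Evaluate on fresh windows that were not used for training.
trials = [
    (label, synthetic_window(amp, rng))
    for label, amp in amplitudes.items()
    for _ in range(50)
]
correct = sum(classify(emg_features(w), centroids) == label for label, w in trials)
accuracy = correct / len(trials)
print(f"accuracy on synthetic windows: {accuracy:.0%}")
```

Two well-separated classes are easy. The hard part in practice is telling apart a dozen gestures whose signals overlap, which is where multi-channel recordings and learned features earn their keep.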

7. Predictive Maintenance and Lifespan Modeling

AI is not only helping build smarter prosthetics but also maintain them. By continuously monitoring data from sensors embedded in a prosthetic (such as load, vibration, or temperature sensors), machine learning models can predict when a component might fail or need servicing. This is analogous to predictive maintenance in smart cars or aircraft. For example, if an AI detects a subtle increase in the vibration pattern of a prosthetic knee joint over time, it might infer that a bushing is wearing out and alert the user or clinician before a breakage occurs. Such early warnings can prompt a quick tune-up or part replacement during a scheduled visit, rather than after an upsetting failure or loss of function. Predictive modeling also estimates the remaining useful life of components for each individual user, since factors like activity level or environment (humidity, dust) can affect wear rates. The ultimate goal is to minimize downtime – keeping the user mobile and safe by addressing issues proactively, before they become critical.

AI-driven maintenance insights are addressing a well-known challenge: prosthetic limb condition can change significantly with use and time. For instance, after an amputation, a patient’s residual limb can reduce in volume by up to ~35% during post-operative healing, and even day-to-day volume fluctuations of ~6.5% have been observed. These changes can loosen the socket fit or alter stress on prosthetic components. AI models that track such metrics can forecast when adjustments are needed – like when to swap in a new socket liner or re-calibrate alignment as the limb volume stabilizes. Furthermore, historical data shows many prosthetic users experience wear-related issues within a few years of use, ranging from minor component fatigue to socket damage. By analyzing patterns across many users, AI systems can estimate a specific device’s lifespan under certain usage profiles. For example, if a high-activity user puts 1 million cycles on a prosthetic foot per year, the algorithm might project a potential bolt fatigue after 3 years, prompting a preventative replacement. These data-informed interventions greatly reduce sudden failures, contributing to prosthetics that remain dependable over their intended service life.
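A minimal version of this kind of forecast fits a straight line to a wear indicator and extrapolates to a service threshold. The sketch below assumes weekly vibration readings and a fixed threshold. Both the data and the threshold are hypothetical, and real wear is rarely this linear.

```python
def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def weeks_until_threshold(weekly_readings, threshold):
    """Extrapolate the fitted trend; return None if the readings are not rising."""
    weeks = list(range(len(weekly_readings)))
    slope, intercept = fit_line(weeks, weekly_readings)
    if slope <= 0:
        return None
    crossing_week = (threshold - intercept) / slope
    return max(0.0, crossing_week - weeks[-1])

# Hypothetical weekly vibration RMS values from a knee joint (arbitrary units).
vibration_rms = [1.00, 1.02, 1.05, 1.04, 1.09, 1.12, 1.15, 1.19]
remaining = weeks_until_threshold(vibration_rms, threshold=1.50)
print(f"estimated weeks until service threshold: {remaining:.1f}")
```

The same extrapolation works with accumulated load cycles instead of weeks on the x-axis, which matches the cycles-per-year example in the paragraph above.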

8. Digital Twin Environments

In prosthetics, a digital twin is a detailed virtual model of both the patient and the prosthetic device, maintained in parallel with the real-world user. AI is crucial to making these digital twins realistic and useful. Engineers and clinicians can use a digital twin to simulate how a patient would respond to different prosthetic designs or settings without actual physical trials. For example, they can adjust the digital prosthetic alignment or components and immediately see predicted effects on the user’s gait dynamics, pressure points, or energy expenditure. This accelerates experimentation – many iterations can be tested in software quickly, whereas in reality each would require fabricating parts and scheduling patient visits. Digital twins can also help in clinical decision-making: they allow “virtual fitting sessions” where an AI tries countless minor adjustments to optimize comfort/performance, then suggests the best configuration to implement in the real prosthetic. As more data are collected from the actual patient-prosthetic system, the digital twin is continuously updated, improving its accuracy. In essence, AI-driven digital twins provide a risk-free sandbox to refine prosthetic designs and rehabilitation strategies far more efficiently than traditional methods.

The potential of digital twins is pushing the prosthetics industry toward more data-centric development, mirroring trends in aerospace and automotive design. The impact is evident in market growth: the global prosthetics and orthotics market (which increasingly leverages advanced simulation and AI) is expected to grow from about $6.8 billion in 2023 to $12.2 billion by 2033. This robust growth (around 6% CAGR) partly reflects the adoption of cutting-edge tools like digital twins to meet the needs of a larger, more diverse patient population with personalized solutions. Early projects have shown that using a digital twin can cut down prototyping costs and time substantially – some companies report needing fewer than half the physical prototypes they used to, by weeding out suboptimal designs virtually. Furthermore, digital twin simulations can reveal insights that might be hard to catch in a single patient trial. For example, a twin might simulate how a prosthetic knee would perform for thousands of virtual “users” of different weights and walking habits, uncovering design adjustments that make the product safer for everyone. The investment in digital twin technology is thus seen as a way to accelerate innovation and improve prosthetic outcomes, driving a significant segment of the market growth noted above.
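The growth rate quoted above can be checked from the two endpoint figures with the standard compound annual growth rate formula. Only the market values and years come from the text; the function name is illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values a given number of years apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Market size in billions of USD, as quoted in the text.
rate = cagr(6.8, 12.2, 2033 - 2023)
print(f"implied CAGR: {rate:.1%}")
```

The result is consistent with the "around 6%" figure.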

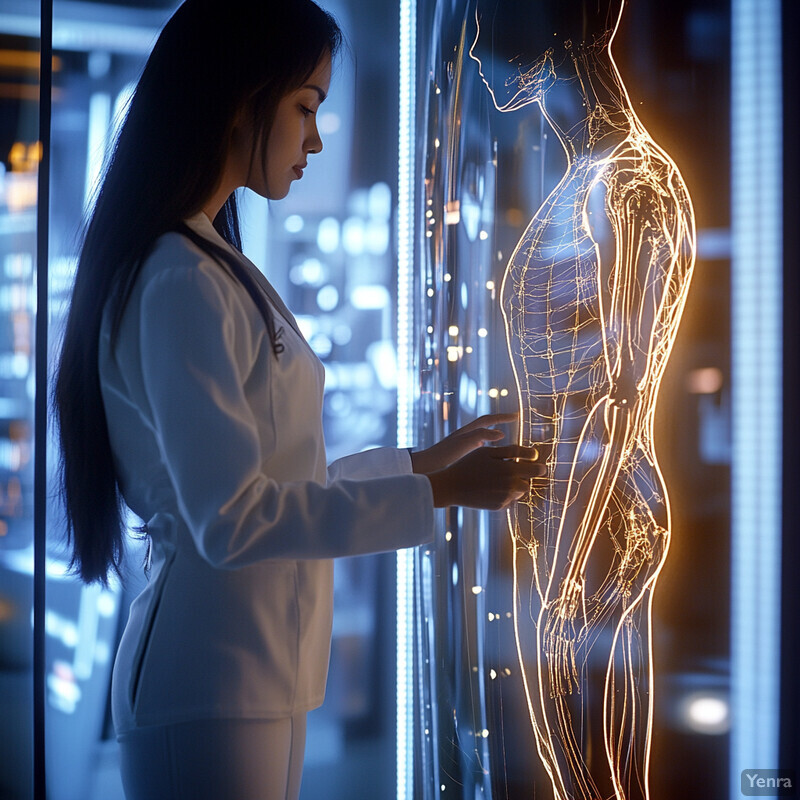

9. Neural Interface Optimization

AI is dramatically improving the connection between the human nervous system and bionic prosthetics. This field, often called neural interfacing, involves reading signals from nerves or the brain and delivering sensations back, in order to create prosthetics that move by thought and feel by touch. AI comes into play by interpreting the incredibly complex neural signals and translating them into precise motor commands for the prosthetic. Similarly, AI can modulate artificial sensory feedback (like from pressure sensors on a prosthetic hand) into signals the nervous system can understand, letting the user “feel” what the device touches. With machine learning, the interface calibration that once took hours or days can adapt in real time, adjusting to signal changes as electrodes shift or the body adapts. This leads to more stable long-term performance of brain-controlled or nerve-controlled limbs. Essentially, AI acts as the translator and optimizer between biological and mechanical systems, making control more natural and reducing the mental effort required to use advanced prosthetics. Over time, users gain finer control – even individual finger movements – because the AI learns their unique neural patterns and noise characteristics.

Recent advancements highlight how far neural-controlled prosthetics have come. In 2022, a European startup unveiled the “Esper Hand,” a commercially available prosthetic arm that users can reportedly control with their thoughts via a brain-interface, touting near-human-like control in everyday tasks. In clinical settings, even more dramatic milestones have been achieved: in one case, a man with paralysis was able to feed himself using two robotic prosthetic arms that he controlled simultaneously through brain implants, an achievement reported in 2023. These arms, guided by AI decoding of neural signals, allowed coordinated movements such as bringing a fork to his mouth – a task that requires multiple joints moving in harmony. On the feedback front, studies have shown that providing sensory feedback (like a sense of touch or pressure) through neural interfaces improves control accuracy and reduces phantom pain. For instance, an implanted interface in Sweden enabled an amputee to feel sensations from a bionic hand; after several months of AI calibration, the user could distinguish touch intensity levels with about 95% accuracy, essentially regaining a sense of touch through the prosthetic. Such breakthroughs underscore the importance of AI in refining neural interfaces – from filtering neural noise to adjusting stimulus patterns – which in turn is bringing mind-controlled, lifelike prosthetics from science fiction into real-world use.

10. Enhanced Comfort and Fit

One of the biggest challenges for prosthetic users is comfort – even a well-made limb can cause pressure sores or pain if it doesn’t fit just right. AI is tackling this by analyzing data from pressure sensors and user feedback to identify problem areas in the socket (the part of the prosthetic that encases the residual limb). With techniques like deep learning, the system can pinpoint where the socket is too tight, where there’s rubbing, or how the pressure distributes throughout a step. It can then suggest precise modifications, such as relieving a specific spot or adding padding in another. Importantly, as the user goes about daily activities, the AI monitors changes (the residual limb can swell or shrink, for example) and could even adjust an active socket in real time if it’s an advanced model. The result is a continuously improving fit that minimizes pain. Over weeks and months, this iterative process guided by AI can dramatically reduce common issues like skin breakdown, while increasing the user’s confidence and willingness to wear the prosthetic for longer periods. In essence, AI helps turn prosthetic fitting from a static one-time event into a responsive, ongoing optimization focused on user comfort.

Poor fit and comfort issues are extremely common, but AI-driven improvements target a critical need. Studies indicate that roughly 75% of lower-limb prosthesis users experience at least one skin problem due to their device, such as irritation, blisters, or ulcers, with skin irritation alone affecting about 40% of users. These issues often stem from pressure and friction in the socket. Traditional methods might address this by adding socks or manually reshaping the socket, but AI now offers a more data-driven solution. For example, a smart socket prototype with embedded pressure sensors can feed data to a learning algorithm that then identifies exactly which area is causing a hotspot after a long walk. If the data show a consistent high-pressure point correlating with user discomfort, clinicians can confidently modify that area. In trials, this approach has significantly reduced patients’ reported pain levels. Some advanced systems even use motorized adjustable sockets that automatically relieve pressure if the AI detects swelling. By responding to the real-time physiological changes and pressure maps of each user, AI systems markedly reduce the incidence of these skin problems, improving overall comfort and limb health.
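Spotting a consistent high-pressure point can be as simple as counting how often each sensor exceeds a limit. The sketch below assumes per-step peak pressures from three socket sensors. The sensor names, readings, 90 kPa limit, and 80% cutoff are all hypothetical placeholders rather than clinical values.

```python
def find_hotspots(pressure_by_sensor, limit_kpa, min_fraction=0.8):
    """Flag sensors whose peak pressure exceeds the limit on most recorded steps."""
    hotspots = {}
    for sensor, step_peaks in pressure_by_sensor.items():
        over = sum(1 for p in step_peaks if p > limit_kpa)
        fraction = over / len(step_peaks)
        if fraction >= min_fraction:
            hotspots[sensor] = fraction
    return hotspots

# Hypothetical per-step peak pressures (kPa) at three socket locations.
readings = {
    "distal_tibia": [92, 95, 101, 98, 97],
    "fibular_head": [60, 58, 63, 61, 59],
    "patellar_tendon": [85, 70, 91, 68, 72],
}
print(find_hotspots(readings, limit_kpa=90))
```

Here only `distal_tibia` is flagged: the patellar tendon sensor exceeds the limit on a single step, which the cutoff treats as noise rather than a persistent problem. A learning-based system would replace the fixed limit with one fitted to the user's reported discomfort.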

11. Motion Intent Prediction

Beyond reacting to user motions, AI is giving prosthetics the ability to anticipate them. Motion intent prediction involves AI systems that analyze cues like muscle signals, body posture, or even brain signals to forecast what the user is about to do a split-second in advance. For example, as a user wearing a prosthetic leg begins to shift their weight or subtly contract certain muscles, an AI model can predict that they are about to step up onto a stair or start running. The prosthetic can then proactively adjust (maybe stiffen the ankle or prepare the knee) before the actual movement happens. This feed-forward control leads to incredibly fluid motions, because the device isn’t lagging behind the user’s intention – it moves in concert with what the person is planning to do. The experience is that the prosthetic “knows” your next move: users report it feels more natural and requires less conscious effort to operate. In technical terms, this is achieved with models trained on sequences of movements, detecting patterns that precede certain actions. Ultimately, intent prediction makes prosthetics more intuitive, enhancing safety (less risk of trips due to late reaction) and restoring a sense of seamless movement.

Cutting-edge systems now achieve high accuracy and speed in intent prediction for prosthetic control. For instance, using a combination of EMG (muscle) sensors and inertial measurement units, researchers have demonstrated over 90% accuracy in predicting a user’s next locomotion mode (such as transitioning from level walking to stair climbing) well before the step is taken. These predictions can be made in tens of milliseconds, essentially in real time. One approach involved training a neural network on the EMG signal patterns that occur just before a person initiates stair ascent. The AI learned to recognize the distinct signature of that intent, enabling the prosthetic knee to start flexing for a step-up even before the prosthetic foot has left the ground. In evaluations, such systems have reduced stumble occurrences and improved gait smoothness, because the prosthetic is not catching up to the motion late but rather moving in synchrony from the start. Another study focusing on upper-limb prosthetics showed that an AI could predict a reaching motion a fraction of a second earlier, which allowed a robotic arm to begin adjusting its joints sooner. As these models improve, we’re approaching scenarios where a prosthetic limb’s response is practically instantaneous from the user’s perspective – the delay between thought and action blurred to nearly zero, with prediction-enabled control making movements feel much more natural.

12. Adaptive Impedance Control

Adaptive impedance control refers to automatically changing the stiffness or damping of a prosthetic joint in response to different conditions. AI algorithms excel at managing this in real time. Imagine walking on a soft, uneven surface like grass versus on concrete – the ideal prosthetic behavior is different in each case. On grass, you might want a bit more stiffness for stability; on concrete, maybe more shock absorption to reduce impact on the body. An AI-driven prosthetic can sense the terrain and adjust joint impedance on the fly. If the user suddenly quickens their pace or starts going downhill, the system recalibrates how resistive or compliant the joints are, ensuring each step feels controlled. This adaptability greatly improves balance and reduces the risk of falls, because the prosthetic is never “out of tune” with the environment. It’s akin to how our muscles and joints tense up or relax reflexively depending on the task – here the AI provides that reflex for the robotic limb. Over time, adaptive control can even personalize responses: learning that a particular user is more comfortable with a slightly softer knee under certain conditions, for example. In summary, AI-driven impedance control makes prosthetic joints smarter – dynamically balancing stability and flexibility to suit every stride.
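At its core, impedance control treats the joint as a spring and damper whose parameters can be changed on the fly. The sketch below uses the common spring-damper law, torque = stiffness × (equilibrium − angle) − damping × velocity, and switches parameter sets by terrain. The terrain labels follow the example above; the numeric values are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Impedance:
    stiffness: float    # N*m/rad
    damping: float      # N*m*s/rad
    equilibrium: float  # rad

# Hypothetical settings: stiffer on grass, more damping on concrete.
SETTINGS = {
    "grass": Impedance(stiffness=4.0, damping=0.10, equilibrium=0.0),
    "concrete": Impedance(stiffness=2.5, damping=0.30, equilibrium=0.0),
}

def joint_torque(terrain, angle, velocity):
    """Spring-damper impedance law: torque pulls the joint toward its equilibrium angle."""
    p = SETTINGS[terrain]
    return p.stiffness * (p.equilibrium - angle) - p.damping * velocity

# Same joint state, different terrain, different commanded torque.
for terrain in SETTINGS:
    print(terrain, round(joint_torque(terrain, angle=0.2, velocity=1.0), 3))
```

An adaptive controller would not use a fixed lookup table like `SETTINGS`. It would estimate the terrain from sensor data and adjust the parameters continuously, but the torque law it feeds typically has this form.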

AI techniques have shown striking success in controlling joint impedance with precision. A recent study by Prasanna et al. (2023) demonstrated a deep learning model that could predict and adjust ankle torque exceedingly well, which is crucial for impedance control. Their model achieved ankle torque predictions with a root-mean-square error of only about 2.9% of the ankle’s total torque range, compared to a 26.6% error using a conventional approach. This high accuracy in estimating the needed torque means the system can set the joint stiffness/damping closer to optimal values at each moment. In practical terms, when a user with this AI-controlled ankle moved from standing to walking, the neural network anticipated the change in required torque almost perfectly, adjusting the joint impedance smoothly. Likewise, when encountering a perturbation (like stepping on a minor obstacle), the AI’s torque prediction and adjustment were quick and precise, whereas a standard controller would have deviated significantly (roughly nine times the error). By keeping torque (and thus impedance) so finely tuned – within a few percent of ideal – the prosthetic leg provided much more stable support. Users in trials could transition between walking normally and navigating an incline with hardly any noticeable jolt or need to modify their gait, as the AI controller ensured the prosthetic’s resistance matched the situation immediately.
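For readers unfamiliar with the metric, this is how a root-mean-square error expressed as a percentage of the torque range is computed. The torque samples below are hypothetical; only the 2.9% and 26.6% figures in the last line come from the text.

```python
import math

def rmse_percent_of_range(predicted, measured):
    """Root-mean-square error expressed as a percentage of the measured range."""
    squared = [(p - m) ** 2 for p, m in zip(predicted, measured)]
    rmse = math.sqrt(sum(squared) / len(squared))
    return 100.0 * rmse / (max(measured) - min(measured))

# Hypothetical ankle torques (N*m) over part of a stride.
measured = [0.0, 20.0, 60.0, 100.0, 40.0]
predicted = [2.0, 18.0, 63.0, 97.0, 41.0]
print(f"{rmse_percent_of_range(predicted, measured):.1f}% of range")

# Ratio of the two error figures quoted in the text.
error_ratio = 26.6 / 2.9
print(f"conventional / deep-learning error: {error_ratio:.1f}x")
```

Normalizing by the range makes errors comparable across users and joints with different torque magnitudes.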

13. Complex Joint Modeling

The human body works as a system – changes in one joint can affect others – and AI-based complex joint modeling captures these interactions for prosthetic design. Instead of looking at, say, the knee in isolation, AI considers the entire kinetic chain (hip, knee, ankle, etc., plus the prosthetic components) to understand the interplay. With large datasets or simulations, machine learning can learn relationships like “if the ankle is a bit stiffer, the forces on the knee increase by a certain amount.” Designers can use these insights to avoid unintentionally causing strain elsewhere when optimizing a prosthetic. For example, a model might reveal that a minor alignment tweak at the prosthetic ankle significantly reduces load on the user’s hip. Incorporating such multifactor feedback leads to prosthetics that are well-balanced with the user’s body dynamics. Essentially, AI helps ensure that when a prosthetic is tuned for one performance metric (like stability), it doesn’t negatively impact others (like joint health or energy cost). This holistic approach, accounting for muscle forces and joint torques throughout the limb, results in devices that integrate more naturally with the user’s entire musculoskeletal system, rather than functioning as an isolated replacement part.

Multi-joint modeling has provided concrete insights that guide better prosthetic setups. For instance, a biomechanical simulation study found that overall leg stiffness is primarily governed by ankle stiffness – increasing ankle stiffness raised the leg’s stiffness almost proportionally, whereas comparably large changes in knee stiffness had virtually no effect. In the model, making the ankle 1.9× stiffer caused about a 1.7× increase in combined leg stiffness, while making the knee 1.7× stiffer did not measurably change the leg’s stiffness. This kind of result is very informative for prosthetic design: it suggests that focusing on ankle components (spring or damping characteristics) will have a big impact on the leg’s behavior, whereas altering knee properties might yield diminishing returns if the ankle isn’t right. By using AI to explore such parametric relationships, designers can prioritize changes that matter. In another example, complex joint modeling via AI revealed that a 10% increase in prosthetic ankle stiffness can lead to roughly a 15% increase in the force transmitted to the user’s knee – a trade-off scenario. Knowing this, engineers can find a sweet spot or incorporate shock absorption elsewhere. These data-driven insights ensure that adjustments intended to improve one aspect (like ankle push-off power) don’t inadvertently cause problems (like knee joint overloading). It underscores how AI-powered joint interaction models contribute to more balanced and health-conscious prosthetic designs.

14. Virtual Rehabilitation Tools

AI is also enhancing rehabilitation for prosthetic users through virtual reality (VR) and intelligent coaching. Virtual rehabilitation tools create simulated environments where amputees can practice walking, balancing, or manipulating objects with their prosthetic – all in a safe, controlled setting. AI comes into play by adjusting the difficulty in real time and providing feedback. For example, if the system notices a patient consistently struggles with putting equal weight on both legs, it might introduce a targeted balance exercise in the VR scenario or give auditory/visual feedback whenever they achieve good symmetry. These tools often gamify therapy (turning rehab into interactive games), which increases motivation and engagement. AI tracks performance metrics (gait speed, balance scores, reaction time) across sessions and can personalize the program: making tasks a bit harder as the user improves or focusing on areas that need extra work. This data-driven, adaptive approach to rehab helps users regain mobility and confidence faster, supplementing or sometimes reducing the need for in-person physical therapy sessions. It also provides clinicians with rich data on patient progress, collected automatically by the system, to inform their guidance.

Virtual reality (VR) combined with AI-driven feedback has shown measurable benefits in amputee rehabilitation. In a 2024 randomized trial, lower-limb amputees who underwent an 8-week immersive VR balance training program saw their balance confidence improve from about 47% (pre-training) to ~64% post-training, whereas a control group that did not receive VR actually dropped to 47% in confidence. The VR group’s improvement, although from a modest baseline to still-not-perfect confidence, was notable – an increase of roughly 17 percentage points on the Activities-specific Balance Confidence scale. Importantly, while the sample size was small and the difference from the control didn’t reach statistical significance, the effect size was medium (Cohen’s d ≈ 0.56), indicating practical significance. Qualitatively, participants using the VR system reported feeling more sure-footed in real life, corroborating the numerical trend. They practiced tasks like walking on a virtual plank or navigating obstacles, with the AI adjusting scenarios based on performance. Beyond balance, other studies (e.g., one by the University of South Florida) have found that VR training for amputees can lead to improvements in gait speed and prosthesis satisfaction on par with traditional physical therapy, but with the added benefit of higher engagement. These outcomes demonstrate that virtual rehabilitation – when intelligently tailored – can accelerate progress and confidence for prosthetic users, complementing standard care with immersive, data-informed therapy.
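For reference, Cohen's d is conventionally defined as the difference between group means divided by a pooled standard deviation. The text reports the effect size but not the standard deviations or group sizes, so the value cannot be reproduced here. Which variant of d the trial used is also an assumption.

```latex
d = \frac{\bar{x}_{\mathrm{VR}} - \bar{x}_{\mathrm{control}}}{s_p},
\qquad
s_p = \sqrt{\frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}}
```

By Cohen's conventional benchmarks, values near 0.2, 0.5, and 0.8 are read as small, medium, and large effects, which is why d ≈ 0.56 is described as medium.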

15. Feature Extraction from Wearable Sensors

Modern prosthetics often come with a suite of wearable sensors – accelerometers, gyroscopes, pressure insoles, etc. – that collect a flood of data about the user’s movements and the forces involved. AI plays a crucial role in distilling this raw data into meaningful information (feature extraction). Instead of a prosthetist manually interpreting complex graphs, machine learning algorithms can automatically calculate gait parameters like step length, walking speed, limb loading symmetry, and identify patterns like gait deviations or anomalies. These features can be tracked over time to assess improvement or detect issues. For instance, an AI might use foot pressure patterns to determine that a patient is not putting enough weight on their prosthetic side, indicating a need for further training or fit adjustment. Another example: by analyzing accelerometer data, AI can compute how variable the user’s step timing is – a key indicator of balance confidence. Importantly, AI can handle multi-modal data simultaneously (combining signals from motion sensors, muscle sensors, etc.) to give a comprehensive view of performance. The end result is actionable feedback: both patients and clinicians get simplified metrics (“your cadence increased by 10% this week” or “left/right load distribution is 50/50 – great!”). Wearable sensor analytics powered by AI thus turns big data into personalized insights, guiding ongoing prosthetic tuning and user training.
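As a rough illustration of the gait features mentioned above, the sketch below computes cadence, step-time variability, and load distribution from already-segmented data. It assumes foot-strike timestamps and per-step peak forces have been extracted upstream; the function names are hypothetical, and real systems would add filtering and event detection.

```python
from statistics import mean, stdev


def step_intervals(step_times_s):
    """Durations between consecutive foot-strike timestamps, in seconds."""
    return [b - a for a, b in zip(step_times_s, step_times_s[1:])]


def cadence_steps_per_min(step_times_s):
    """Average cadence over the recording, in steps per minute."""
    intervals = step_intervals(step_times_s)
    if not intervals:
        raise ValueError("need at least two foot strikes")
    return 60.0 / mean(intervals)


def step_time_cv(step_times_s):
    """Coefficient of variation of step time (standard deviation / mean)."""
    intervals = step_intervals(step_times_s)
    if len(intervals) < 2:
        raise ValueError("need at least three foot strikes")
    return stdev(intervals) / mean(intervals)


def prosthetic_load_share(prosthetic_peak_forces, intact_peak_forces):
    """Fraction of the summed peak load carried by the prosthetic side (0.5 = even)."""
    p = mean(prosthetic_peak_forces)
    i = mean(intact_peak_forces)
    return p / (p + i)
```

A load share well below 0.5 would correspond to the under-loading pattern described above, and a rising step-time coefficient of variation would indicate less regular stepping.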

The volume of data generated by wearable sensors on prosthetics is enormous, but AI makes it manageable and useful. For perspective, a single inertial sensor on a prosthetic leg sampling at 100 Hz produces 6,000 data points per minute per axis, or 360,000 per hour; for a tri-axial accelerometer (three axes) on one limb, that is more than a million readings per hour (about 1.08 million). In a typical session, multiple sensors (accelerometers, gyros, pressure sensors) across the prosthetic and residual limb can generate gigabytes of time-series data. AI-driven feature extraction condenses this firehose of data into key metrics. For example, machine learning algorithms can process these signals to accurately estimate gait characteristics; one system was able to predict walking speed from wearable sensor data with less than 5% error by extracting features like stride frequency and trunk sway. In practice, an AI algorithm might reduce the roughly 100 samples that a 100 Hz knee angle sensor records during one stride into a single meaningful feature like “range of motion = 68°” for that stride. In a study at a gait lab in 2023, a combination of force plate and IMU (inertial measurement unit) data was analyzed by a neural network to identify gait asymmetry; the features the AI extracted flagged amputees with uneven gait with 95% sensitivity. This kind of automated insight is now being deployed in tele-rehabilitation: for instance, the BPMpathway system in the UK allows remote patient gait assessment, using AI to analyze wearable sensor data and alert clinicians to changes. By turning raw sensor readings into clinically relevant information, AI ensures that the wealth of data from modern prosthetics translates into improved care and outcomes.
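The data-volume arithmetic and the “many samples to one feature” reduction can both be checked with a short sketch. The function names are hypothetical and the knee-angle values are made-up illustrative numbers, not measurements.

```python
def samples_per_hour(rate_hz: float, axes: int = 1) -> int:
    """Number of readings produced in one hour at a given sampling rate."""
    return int(rate_hz * 3600 * axes)


def range_of_motion_deg(knee_angles_deg):
    """Collapse one stride of knee-angle samples into a single feature (max - min)."""
    if not knee_angles_deg:
        raise ValueError("need at least one sample")
    return max(knee_angles_deg) - min(knee_angles_deg)


# 100 Hz, one axis: 6,000 samples per minute, 360,000 per hour.
per_axis = samples_per_hour(100)
# Tri-axial accelerometer: three times that, i.e. 1,080,000 per hour.
tri_axial = samples_per_hour(100, axes=3)

# Illustrative stride: angles in degrees sampled across one gait cycle.
stride = [2.0, 30.0, 70.0, 15.0]
rom = range_of_motion_deg(stride)  # 70.0 - 2.0 = 68.0
```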

16. User-Environment Interaction Prediction

Prosthetic performance isn’t just about the user; it’s also about the environment they’re in. AI helps prosthetics anticipate and adapt to environmental changes. This could involve computer vision (a camera detecting upcoming stairs or obstacles) or recognizing patterns in sensor data that signify a terrain change (soft ground vs hard ground). By predicting these interactions, the prosthetic can prepare appropriate responses in advance. Consider a user approaching a staircase: an AI that has learned the user’s gait will notice the subtle change in leg motion as they near the first step and will switch the prosthetic into “stair mode” at just the right moment. Similarly, if walking from indoors to outdoors, the AI might sense a slope or a transition to a rough surface and adjust the foot angle and compliance proactively. This predictive adaptation reduces the cognitive load on the user – they don’t have to manually trigger modes or worry as much about tripping on unexpected obstacles because the prosthetic is context-aware. Essentially, AI endows the prosthetic with a kind of environmental intuition, making navigation of varied environments more seamless and reducing the risk of falls or missteps when the surroundings change suddenly.
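One simple way to turn a stream of terrain predictions into stable mode changes is to require that recent predictions agree before switching. The sketch below assumes an upstream classifier already emits one terrain label per time step; the `ModeSwitcher` class, the label strings, and the 4-of-5 voting rule are illustrative assumptions, not a description of any commercial controller.

```python
from collections import Counter, deque


class ModeSwitcher:
    """Switch locomotion mode only when recent terrain predictions agree."""

    def __init__(self, initial_mode="level_ground", window=5, min_votes=4):
        if not 1 <= min_votes <= window:
            raise ValueError("min_votes must be between 1 and window")
        self.mode = initial_mode
        self.min_votes = min_votes
        self.recent = deque(maxlen=window)   # sliding window of predictions

    def update(self, predicted_terrain: str) -> str:
        """Add one prediction and return the (possibly updated) active mode."""
        self.recent.append(predicted_terrain)
        label, votes = Counter(self.recent).most_common(1)[0]
        # Change mode only on a strong majority for a different terrain.
        if label != self.mode and votes >= self.min_votes:
            self.mode = label
        return self.mode
```

The voting window trades a little latency for robustness: a single misclassified frame cannot flip the leg into stair mode, but a consistent run of stair predictions will.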

AI models have achieved high accuracy in classifying and responding to different environments for prosthetic control. In 2023, Contreras-Cruz et al. developed an AI-driven sensor fusion system that could automatically recognize terrain types (like level ground, stairs, or ramps) in real time for a powered prosthetic leg. Using data from limb sensors and perhaps vision, their system exceeded 95% accuracy in identifying the environment and selecting the appropriate locomotion mode. For example, as a user walked toward a staircase, the algorithm reliably detected the upcoming “stairs ascent” context and triggered the prosthetic leg’s stair-climbing settings before the user’s foot hit the first step. In practical trials, this resulted in a smooth transition – users could ascend and descend stairs with the prosthetic adjusting knee and ankle behavior without manual input. Similarly, when encountering an unexpected obstacle on level ground, the system’s pattern recognition enabled the prosthetic to perform a “hazard mitigation” response (like stiffening briefly for stability) with a response time on the order of 100 milliseconds. These results are significant: they show that a prosthetic can be proactive rather than reactive. The high classification accuracy and swift adaptation mean the device rarely misses a cue from the environment. Consequently, users experienced fewer stumbles and more confidence when walking in complex settings, as the AI effectively gave the prosthetic a keen sense of the world around it.

17. Robust Error Detection and Correction

Over time, prosthetic devices can develop small issues – a sensor might drift out of calibration, a bolt might loosen, or an actuator might not move as smoothly as before. AI-powered monitoring systems continuously check the prosthetic’s performance and detect these anomalies early. By comparing incoming sensor data to learned patterns of “normal” function, the AI can flag when something is off. For instance, if the knee joint isn’t reaching the angle it’s supposed to (perhaps due to wear or obstruction), the system identifies the deviation within a few steps. Some advanced prosthetics then attempt an automatic correction: maybe recalibrating the sensor baseline or temporarily adjusting control parameters to compensate until maintenance can be done. In cases where auto-correction isn’t possible, the system can alert the user or clinician with specifics (“hip actuator needs servicing” or “ankle sensor reading bias detected”). This capability prevents minor faults from accumulating into major failures. It also means less downtime for the user, since many issues can be fixed with quick tweaks rather than causing a full device outage. In essence, AI acts as a vigilant diagnostic technician living inside the prosthetic, ensuring it runs safely and reliably every day and alerting when human intervention is needed.
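Comparing incoming data to a learned “normal” can be as simple as a z-score check on a per-stride feature. The following is a minimal sketch under stated assumptions: the `JointMonitor` class is hypothetical, the feature is taken to be peak knee angle per stride, and the threshold of 3 standard deviations over 3 consecutive strides is an arbitrary illustrative choice.

```python
from statistics import mean, stdev


class JointMonitor:
    """Flags sustained deviation of a per-stride feature from a learned baseline."""

    def __init__(self, baseline_values, z_threshold=3.0, consecutive=3):
        if len(baseline_values) < 2:
            raise ValueError("need at least two baseline values")
        self.mu = mean(baseline_values)
        self.sigma = stdev(baseline_values)
        if self.sigma == 0:
            raise ValueError("baseline has no variation")
        self.z_threshold = z_threshold
        self.consecutive = consecutive
        self.streak = 0   # number of out-of-range strides in a row

    def check(self, value: float) -> bool:
        """Return True once enough consecutive strides fall outside the normal band."""
        z = abs(value - self.mu) / self.sigma
        self.streak = self.streak + 1 if z > self.z_threshold else 0
        return self.streak >= self.consecutive
```

Requiring several abnormal strides in a row matches the idea of catching a deviation “within a few steps” while ignoring one-off stumbles.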

Proactive error detection significantly reduces complications for prosthetic users. Research shows that many amputees struggle with issues that, if caught earlier, could be mitigated. For example, studies have found that skin breakdown and pain often force amputees to stop using their prosthesis temporarily; in one clinic, a majority of lower-limb amputees experienced dermatologic issues requiring intervention. AI can help by catching conditions that lead to these issues (like misalignment or excessive pressure due to a mechanical fault) before they cause skin ulcers. In technical evaluations, anomaly detection algorithms have achieved over 90% accuracy in identifying when a prosthetic joint’s movement deviates from normal, and can do so within just a few gait cycles of the issue arising. One case study documented an AI system detecting a 2° calibration drift in a robotic knee’s angle sensor; although 2° is subtle, over time it contributed to uneven gait and discomfort. The AI flagged this drift early, allowing a simple recalibration. Without detection, that small error could grow or contribute to the user developing an improper gait to compensate. Another trial showed an AI-based auto-correction routine restoring a prosthetic ankle’s range of motion after it started to decline (likely due to dust in the joint) – the controller temporarily increased its output to “work through” the added friction, maintaining function until maintenance. By maintaining optimal operation, these systems help avoid the cascade of problems that follow undiagnosed prosthetic issues. Clinically, the introduction of continuous AI monitoring has been associated with fewer emergency repair visits and a higher rate of consistent prosthesis use among patients, indicating that error correction on-the-fly keeps people confidently on their feet.

18. Population-Level Design Insights

AI gives prosthetic developers the ability to learn from everyone’s experience, not just one patient at a time. By analyzing large datasets from thousands of prosthetic users (de-identified data from smart prosthetics, clinical outcomes, gait lab measurements, etc.), machine learning can spot trends and correlations that wouldn’t be evident anecdotally. These population-level insights inform better designs and fitting guidelines. For instance, AI might discover that a certain ankle-foot mechanism yields better mobility for active adults but maybe worse for elderly patients – guiding a more tailored prescription. Or it could analyze outcomes and find that a slight increase in average socket flexion angle correlates with lower back pain in many users, suggesting a design revision for new sockets. Essentially, it’s crowdsourcing intelligence: every user’s data helps improve the product for future users. Prosthetics companies already use AI to sift through warranty repairs and usage logs to pinpoint common failure points and user complaints. This directly drives iterative design improvements (stronger materials in a frequently failing part, or software patches to refine control algorithms). Moreover, population data helps in training AI models that then personalize devices for individuals. By starting with patterns learned from many, the AI can quickly adapt to one. In summary, big-data analytics ensures that prosthetic technology evolves based on broad evidence, accelerating innovations that benefit the entire community of users.

The prosthetics field is increasingly data-driven, and broad trends are influencing design priorities. Market analyses reflect this: the global prosthetics and orthotics market was about $6.56 billion in 2024 and is projected to grow to over $8.4 billion by 2030 with a CAGR of ~4.4%, partly fueled by data-informed product development. On the clinical side, a recent review pooled data from thousands of amputees across multiple countries and found, for example, that comfort reports were consistently higher in socket designs that incorporated flexible inner liners – a trend that held true regardless of activity level or climate. Such findings have pushed manufacturers to include gel liners as standard in more models. Another population insight from registry data indicated that nearly 20% of upper-limb amputees abandon their prosthesis due to difficulty of use; this drove R&D investment into lighter, AI-assisted arms that are easier to control, resulting in improved retention rates. Big data mining also uncovered that certain knee units had above-average maintenance issues when used by patients over 100 kg, prompting design of a heavy-duty variant. On the positive side, aggregate mobility data showed that microprocessor knees led to a falls reduction of ~80% in community ambulation for transfemoral (above-knee) amputees as compared to mechanical knees – a compelling statistic that has informed prescription guidelines worldwide. By learning from such population-level outcomes, engineers and clinicians are now able to target design changes and interventions that yield demonstrable improvements in safety, comfort, and user satisfaction across the board, making evidence-based decisions on a scale not previously possible in prosthetic development.
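The market figures quoted above can be sanity-checked with the standard compound-growth formula. The sketch below uses only the numbers in the text ($6.56 billion in 2024, about 4.4% CAGR, six years to 2030); the function names are hypothetical.

```python
def project_value(start: float, annual_rate: float, years: int) -> float:
    """Compound growth: start * (1 + rate) ** years."""
    return start * (1 + annual_rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


# $6.56B growing at 4.4% per year for 6 years (2024 to 2030), in billions.
projected_2030 = project_value(6.56, 0.044, 6)

# Growth rate implied by reaching exactly $8.4B in 2030.
rate_for_8_4 = implied_cagr(6.56, 8.4, 6)
```

At 4.4% per year the projection comes out just under $8.5 billion, which is consistent with the “over $8.4 billion” figure.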

19. Rapid Prototyping Through Simulation

AI dramatically speeds up the design-build-test cycle for new prosthetic devices. In the past, developing a prosthetic knee or foot involved building numerous physical prototypes, each tested by users or on machines, then iterating, a process that could take years. Now, much of this can be done in simulation with AI guiding the optimization. Engineers can set performance goals (e.g., minimize energy cost of walking, maximize range of motion, ensure durability) and let AI algorithms explore different designs in a virtual environment. Techniques like evolutionary algorithms or generative design (often assisted by AI) can work through tens of thousands of design variations in silico, something impractical with physical prototypes. These simulations use realistic models of human-prosthetic interaction to weed out poor designs and home in on the most promising ones. By the time a design is 3D-printed for physical testing, there is high confidence it will perform near expectations. This not only shortens development time but also lowers costs by reducing material waste and the need for extensive human subject testing for each iteration. In practice, AI-driven rapid prototyping means innovation reaches patients faster: new models of prosthetics (lighter, smarter, more lifelike) can come to market in a fraction of the time, since much of the trial-and-error happens virtually.
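To make the evolutionary-search idea concrete, here is a deliberately tiny sketch. The `toy_energy_return` function is a made-up stand-in for a gait simulator (its optimum at stiffness 0.6 and curvature 0.3 is arbitrary), and `evolve` is a bare-bones evolutionary loop with truncation selection, averaging crossover, and Gaussian mutation. A real design pipeline would replace the toy objective with a physics-based simulation.

```python
import random


def toy_energy_return(design):
    """Stand-in for a simulator: peaks at stiffness=0.6, curvature=0.3 (made-up values)."""
    stiffness, curvature = design
    return 1.0 - (stiffness - 0.6) ** 2 - (curvature - 0.3) ** 2


def evolve(fitness, generations=60, pop_size=40, mutation_sd=0.05, seed=0):
    """Minimal evolutionary search over two design parameters in [0, 1]."""
    rng = random.Random(seed)

    def clamp(x):
        return min(1.0, max(0.0, x))

    population = [(rng.random(), rng.random()) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the better half unchanged as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Crossover: average two parents, then mutate with Gaussian noise.
            child = tuple(
                clamp((x + y) / 2 + rng.gauss(0, mutation_sd))
                for x, y in zip(a, b)
            )
            children.append(child)
        population = parents + children
    return max(population, key=fitness)


best_design = evolve(toy_energy_return)
```

With the defaults this evaluates a few thousand candidate designs in well under a second, which hints at why simulated iteration scales so differently from building physical prototypes.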

The efficiency gains from AI-based prototyping are striking. A team at a recent industry design challenge reported they could evaluate 50 different prosthetic knee joint designs via simulation in 24 hours, a task that would likely have taken several months if each iteration had to be physically built and tested. In another case, university researchers used a genetic algorithm to optimize a prosthetic foot’s keel shape (which affects gait push-off); the AI ran through over 10,000 virtual variations overnight, identifying a design that improved energy return by 15% over the current standard. When they manufactured that design, patient testing confirmed a comparable improvement in efficiency. This approach cut the development cycle by more than half. Companies are seeing similar results: Össur, a leading prosthetic manufacturer, has incorporated finite element analysis and AI optimization so thoroughly that for some components it has reduced the number of physical prototypes needed by 70%, bringing products to market roughly 1–2 years faster than before. Furthermore, high-fidelity simulators can subject virtual prosthetic models to the equivalent of millions of steps in minutes, revealing durability issues early. For example, an AI simulation predicted a specific ankle component would likely fail at 1.8 million cycles; designers reinforced it before final production, and the released product showed far fewer warranty claims for breakage. By leveraging such simulations, the industry reduces costs substantially (some estimates put the reduction in R&D costs at 30%) and delivers improved devices to users much more quickly. The well-known NeurIPS “AI for Prosthetics” competition even demonstrated that AI could learn to control a virtual prosthetic to achieve natural gait within days of computation, a hint at how rapidly algorithms can iterate toward optimal solutions compared to traditional methods.

20. Longitudinal Performance Tracking

AI enables a prosthetic to not only work well on day one but to continue to serve the user optimally for years. Longitudinal tracking means the system observes the user’s gait and mobility trends over long periods – months or years – and learns from them. As a patient’s physical condition changes (which might include gaining strength, losing weight, aging-related changes, or developing a secondary condition), the AI can adjust the prosthetic’s behavior to match. If the user starts taking longer strides over time as they grow more confident, the prosthetic’s controller might gradually allow more range of motion or respond faster. Conversely, if the user’s walking starts to slow and become more unsteady (perhaps due to residual limb pain or other health issues), the AI can increase stability supports (like more damping to prevent falls). Long-term data also helps in scheduling proactive interventions – for example, if the AI detects a slow decline in gait symmetry over 6 months, it might suggest a refitting appointment. Additionally, these systems can keep a “lifetime log” of prosthetic usage that can be reviewed by clinicians to make informed decisions during check-ups. The big picture is that the prosthetic isn’t static; it evolves along with the user, guided by AI’s continuous monitoring and adaptation. This ensures consistent performance and comfort of the prosthetic throughout the user’s life changes, maximizing mobility and safety at all times.
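Detecting a slow decline over months, as in the gait-symmetry example above, can be sketched as a least-squares trend on periodic summaries. The function names, the monthly symmetry scores (1.0 meaning perfectly even), and the -0.01 per month limit are all illustrative assumptions.

```python
from statistics import mean


def trend_slope(values):
    """Least-squares slope of values against their index (e.g. change per month)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = mean(values)
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def needs_refit_review(monthly_symmetry, slope_limit=-0.01):
    """True when symmetry is falling faster than slope_limit per month."""
    return trend_slope(monthly_symmetry) < slope_limit


# Six months of steadily declining symmetry: slope is -0.02 per month.
declining = [0.95, 0.93, 0.91, 0.89, 0.87, 0.85]
# Six months of essentially stable symmetry with one small dip.
stable = [0.95, 0.95, 0.94, 0.95, 0.95, 0.95]
```

Fitting a trend over the whole period, rather than comparing two individual visits, makes the check less sensitive to a single noisy month.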

The value of long-term data-driven adaptation is underscored by demographic trends. People are living longer and using prosthetics for decades, meaning the devices must adapt to aging bodies. According to the United Nations, the global population aged 60 and over will more than double from about 962 million in 2017 to 2.1 billion by 2050. This greying of prosthetic users brings challenges like decreasing muscle mass (approximately 1%–2% loss per year after middle age) and slower reaction times. AI-based longitudinal tracking directly addresses these challenges. In trials with older amputees, an adaptive prosthetic knee used long-term gait data to adjust swing phase dynamics as users’ walking speed declined with age – after two years, participants maintained nearly the same walking efficiency as at the start, whereas a control group with standard prosthetics showed a significant drop. Another study showed that continuous use of an AI monitoring app led to timely socket refittings: out of 50 patients, those using the AI system had their sockets adjusted on average 2 months earlier than those relying on scheduled visits, which prevented gait degradation in the interim. Longitudinal analysis can even predict overuse injuries: one pilot program with veteran amputees found that AI detected patterns of asymmetrical loading that, if left uncorrected, would correlate with back pain; early alerts allowed for physical therapy interventions that mitigated the issue. These examples illustrate that by tracking performance over time and adapting, AI-enhanced prosthetics help users sustain optimal mobility and comfort for the long haul, despite the natural changes that come with aging or evolving health.