1. Subgrid Parameterization Improvements

AI is being applied to represent small-scale atmospheric processes that climate models cannot explicitly resolve, such as turbulence and cloud microphysics. By training on high-resolution simulations or observations, machine learning (ML) algorithms can learn how these subgrid processes affect large-scale climate variables. Integrating these AI-based “parameterizations” into climate models helps capture the influence of clouds, aerosols, and boundary-layer eddies more accurately. Ultimately, this leads to simulations that better reflect real atmospheric complexity, improving model fidelity without exorbitant computational costs.

Climate modelers have begun blending traditional physics-based schemes with AI-derived closures to improve unresolved process representation. For example, Schneider et al. (2024) propose combining process-based parameterizations with AI-driven functions learned from data to more faithfully model convection and turbulence. In practice, a hybrid climate model using a machine learning subgrid scheme was shown to reduce global precipitation errors by up to 17% (and 20% in the tropics) relative to a standard model by capturing fine-scale variability that standard parameterizations missed. Such AI-informed parameterizations have already achieved stable multiyear simulations with realistic cloud and wind patterns, demonstrating that ML can emulate subgrid physics (like cloud-resolving models) while maintaining climate stability (5-year mean biases were as low as in state-of-the-art approaches). These successes indicate that AI can significantly enhance how small-scale processes are represented in climate models without sacrificing stability or physical realism.

2. Data-Driven Downscaling

Machine learning is enabling more detailed regional climate projections by translating coarse global model output into fine-scale information (a process known as downscaling). Traditional global climate models operate at low resolution, missing local details important for impacts. AI-based downscaling learns the statistical relationships between large-scale climate patterns and local weather features (temperature, rainfall, etc.) from historical data. The result is high-resolution regional climate information that is more accurate and tailored to local conditions. AI-driven downscaling provides actionable insights for planners and stakeholders by bridging the scale gap between global models and local climate risks.

Recent work shows that AI can outperform conventional techniques in adding regional detail to climate projections. For instance, an American Meteorological Society review in 2024 highlights that machine learning is transformative for climate downscaling, markedly improving the spatial detail and skill of regional forecasts. In one case, a deep learning model downscaled global simulations to ~3 km resolution across terrain in Oman, capturing fine-scale rainfall and temperature patterns better than regional climate models. Another study produced a high-resolution Iberian Peninsula projection by training four different neural networks on coarse CMIP6 data and observations; the best network reliably reproduced local precipitation extremes and temperature distributions that coarse models missed. Overall, AI-driven downscaling techniques (such as convolutional neural nets and transformers) have demonstrated more accurate and computationally efficient generation of local climate detail than traditional dynamical approaches, enabling large ensembles of regional projections for risk assessment.
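The core idea of statistical downscaling, learning a mapping from coarse model output to a local variable over a historical period and then applying it to new coarse values, can be illustrated with a deliberately simple stand-in. The sketch below uses ordinary least squares rather than a neural network, and the function names and temperature values are hypothetical, chosen only for illustration.

```python
def fit_linear(x, y):
    """Ordinary least squares fit of y ~ a + b * x for paired samples."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def downscale(coarse_values, intercept, slope):
    """Apply the learned coarse-to-local relationship to new coarse values."""
    return [intercept + slope * v for v in coarse_values]


# Hypothetical historical pairs: coarse grid-cell temperature (deg C)
# and the temperature recorded at one station inside that grid cell.
coarse_hist = [10.0, 12.0, 14.0, 16.0, 18.0]
station_hist = [12.5, 14.9, 17.3, 19.7, 22.1]

intercept, slope = fit_linear(coarse_hist, station_hist)

# Translate future coarse-model values into station-scale estimates.
local_projection = downscale([20.0, 22.0], intercept, slope)
print(local_projection)
```

Deep learning downscalers replace the single straight line with a nonlinear mapping over whole spatial fields, but the train-on-history, apply-to-projection workflow is the same.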

3. Bias Correction

AI is being used to automatically correct systematic errors (“biases”) in climate model outputs. Climate models often have biases (e.g. consistently too warm or too wet) due to imperfect physics or coarse resolution. Traditional bias correction methods (like simple statistical adjustments) are limited. Machine learning offers a more dynamic solution: by learning from the discrepancies between model simulations and observations, AI models can adjust model outputs to remove biases while preserving physical relationships. This leads to climate projections that better match historical observations, increasing confidence in their accuracy for decision-making.

Studies show that ML-based bias correction can significantly reduce model errors compared to conventional methods. For example, an AI algorithm applied to the DOE E3SM climate model effectively reduced long-standing warm, windy biases while preserving the model’s response to forcings. Zhang et al. (2024) achieved this by training a neural network to post-process model fields, substantially improving simulated wind, temperature, and humidity profiles relative to observations (the ML-corrected model’s bias was much smaller than the original). In another case, Pasula and Subramani (2025) developed deep learning models to correct sea surface temperature biases in a climate model; their best neural net bias correction lowered SST error by ~15% compared to a standard statistical method. These data-driven approaches outperform simpler bias adjustments by capturing complex, nonlinear model errors and context-specific corrections. As a result, AI bias correction yields climate projections that more closely resemble the real climate system, enhancing their reliability for climate impact assessments.
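For context, the kind of "standard statistical method" that ML corrections are usually compared against is empirical quantile mapping: a model value is replaced by the observed value that sits at the same rank in the historical distribution. The following is a minimal sketch of that baseline, not the method of any study cited above; the function names and sample values are assumptions made for illustration.

```python
from bisect import bisect_left


def fractional_rank(sorted_sample, value):
    """Position of value within the sorted sample, scaled to [0, 1]."""
    n = len(sorted_sample)
    if value <= sorted_sample[0]:
        return 0.0
    if value >= sorted_sample[-1]:
        return 1.0
    hi = bisect_left(sorted_sample, value)  # first index with sample >= value
    lo = hi - 1
    frac = (value - sorted_sample[lo]) / (sorted_sample[hi] - sorted_sample[lo])
    return (lo + frac) / (n - 1)


def quantile(sorted_sample, p):
    """Linearly interpolated quantile of the sorted sample for p in [0, 1]."""
    pos = p * (len(sorted_sample) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_sample) - 1)
    frac = pos - lo
    return sorted_sample[lo] * (1 - frac) + sorted_sample[hi] * frac


def quantile_map(value, model_hist, obs_hist):
    """Map a model value onto the observed distribution at the same rank.

    Values outside the historical model range are clamped to the observed
    extremes, which is a known weakness of this simple approach.
    """
    p = fractional_rank(sorted(model_hist), value)
    return quantile(sorted(obs_hist), p)


# Hypothetical data: the model runs 2 degrees too warm at every quantile.
obs_hist = [1.0, 3.0, 4.0, 7.0, 10.0]
model_hist = [o + 2.0 for o in obs_hist]
print(quantile_map(7.5, model_hist, obs_hist))
```

Because the mapping is applied to one variable at one location at a time, it cannot represent errors that depend on weather regime or on other variables, which is the gap the neural-network approaches aim to fill.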

4. Emulation of Complex Physics

AI “emulators” are being developed to replicate the behavior of complex climate model components at a fraction of the computational cost. Instead of solving expensive physics equations (for radiation, cloud processes, etc.) at each model time step, a trained neural network can predict those outcomes almost instantly. By swapping in these ML-based surrogates for slow physics modules, climate simulations can run much faster. Careful training and physical constraints help keep the emulator’s outputs physically realistic, although they cannot guarantee it for conditions far outside the training data. This approach speeds up climate and weather models, allowing more simulations, higher resolution, or larger ensembles with the same computing resources.

Researchers have demonstrated that ML surrogates can faithfully reproduce detailed climate physics while drastically accelerating computation. For instance, a recent AI-driven “climate emulator” was able to simulate global atmospheric dynamics for 10-year spans with 6-hourly time steps and remain stable. The AI model, a diffusion-based generative network, nearly matched a full climate model’s accuracy in representing temperature and precipitation patterns. Likewise, Ukkonen and Hogan (2023) implemented a neural network to replace the gas optics module of the radiation scheme in ECMWF’s forecasting system, achieving over 50× speed-up in radiative transfer calculations while maintaining flux accuracy within ~0.5 W/m² of the original scheme. In another case, an AI surrogate for atmospheric convection and clouds (using a U-Net neural net) successfully ran 5-year climate simulations coupled with a host model, producing realistic cloud distributions and reducing biases in tropical rainfall. These examples show that AI emulators can capture complex physical processes (radiation, cloud microphysics, etc.) with high fidelity, enabling much faster climate modeling without sacrificing physical correctness.

5. Data Fusion from Multiple Sources

AI is helping merge diverse climate data sources (satellites, ground stations, reanalyses, etc.) into unified datasets with improved coverage and accuracy. By learning from overlapping information, machine learning can fill in gaps where one data source is missing or unreliable. For instance, an AI model can combine coarse satellite observations with local sensor data to produce high-resolution climate fields (like rainfall maps) that neither source could provide alone. This data fusion approach yields more complete and precise climate datasets, which are invaluable for analysis and model validation—especially in regions with sparse observations.

Deep learning data-fusion techniques have been shown to substantially enhance environmental datasets. For example, Zhang and Di (2024) developed a CNN-LSTM fusion model that integrates satellite rainfall estimates, reanalysis, and sparse rain gauges over the Tibetan Plateau. Their model improved precipitation estimates’ correlation with observations and cut error (RMSE ~2.73 mm/day) relative to any single data source, effectively leveraging multi-source strengths to overcome individual weaknesses. Similarly, an AI framework in Paris combined low-res model outputs, high-res land data, ground air monitors, and satellite aerosol measurements to map urban PM2.5 pollution at 100 m resolution hourly. The fused product had far higher accuracy (R² ~0.87 for monthly PM2.5) than the original meteorological model, especially capturing fine-scale and nighttime pollution patterns. These examples underscore how multi-source AI fusion yields richer climate information—ranging from improved rainfall datasets in remote mountains to hyper-local air quality maps in cities—thereby filling critical data gaps for research and decision-making.

6. Parameter Optimization

Tuning the numerous adjustable parameters in climate models has traditionally required expert trial-and-error. AI is changing that by automatically finding optimal parameter values that improve model performance. Machine learning algorithms can build emulators or use iterative search to efficiently explore the high-dimensional parameter space. By comparing model outputs against observations or high-resolution benchmarks, AI methods identify parameter sets that reduce biases and errors. This approach not only speeds up the calibration process but also removes subjective bias, yielding more objectively optimized models that better simulate the climate system.

Researchers have reported success using ML to automate climate model tuning. For instance, Bonnet et al. (2024) applied a machine learning-based history-matching routine to the ICON global model, homing in on parameter sets that balanced the top-of-atmosphere radiation to within 0–1 W/m². Their procedure (training an emulator on a limited ensemble, then shrinking parameter ranges) achieved a configuration with realistic cloud cover and humidity profiles after just one iteration. In another case, Yarger et al. (2024) calibrated 45 parameters of the DOE E3SM model by training a surrogate (via polynomial chaos) to emulate the model and using gradient-based optimization. The result was an automatically tuned model that matched observed climate fields as well as or better than the expert-tuned version, yet obtained in a fraction of the time. These studies illustrate how AI-driven parameter optimization can navigate complex parameter interactions and efficiently eliminate biases, increasing model accuracy and transparency in the calibration process.
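The "train an emulator on a limited ensemble, then shrink parameter ranges" loop can be sketched in a few lines. This is a toy illustration under stated assumptions, not the procedure of any cited study: `toy_model` is a hypothetical one-parameter stand-in for an expensive model run, the emulator is plain piecewise-linear interpolation, and the variances, target, and cutoff of 3 are illustrative choices (a cutoff of 3 is a common convention in history matching).

```python
import math


def toy_model(theta):
    """Hypothetical stand-in for an expensive run: TOA imbalance in W/m^2."""
    return 8.0 * theta ** 2 - 2.0


def make_emulator(design_points):
    """Build a piecewise-linear interpolator through (theta, output) pairs."""
    pts = sorted(design_points)

    def emulate(theta):
        for (t0, y0), (t1, y1) in zip(pts, pts[1:]):
            if t0 <= theta <= t1:
                w = (theta - t0) / (t1 - t0)
                return y0 + w * (y1 - y0)
        raise ValueError("theta lies outside the sampled range")

    return emulate


def implausibility(theta, emulate, target, obs_var, emulator_var):
    """Distance between target and emulated output in standard deviations."""
    return abs(target - emulate(theta)) / math.sqrt(obs_var + emulator_var)


# Step 1: a small ensemble of "expensive" runs across the prior range [0, 1].
ensemble = [(t, toy_model(t)) for t in (0.0, 0.25, 0.5, 0.75, 1.0)]
emulate = make_emulator(ensemble)

# Step 2: screen many candidate values cheaply with the emulator and keep
# only those that are not ruled out (implausibility of at most 3).
candidates = [i / 100 for i in range(101)]
kept = [
    t for t in candidates
    if implausibility(t, emulate, target=0.5, obs_var=0.01, emulator_var=0.04) <= 3.0
]

# Step 3: the surviving values define the shrunken range for the next wave.
new_range = (min(kept), max(kept))
print(new_range)
```

In a real application the parameter space has many dimensions, the emulator carries its own location-dependent uncertainty estimate, and the next wave of model runs is sampled inside the reduced range before repeating the screening.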

7. Climate Extremes Prediction

AI is enhancing the prediction of extreme weather and climate events—like heatwaves, floods, and hurricanes—by identifying complex patterns that precede these events. Traditional forecasting models sometimes struggle with extremes due to their rarity and nonlinear triggers. Machine learning algorithms, especially deep neural networks, can learn subtle indicators of an impending extreme from vast datasets (including historical events, ocean patterns, etc.). By recognizing these precursors (often invisible to humans), AI models provide earlier or more accurate warnings of extremes. This helps improve disaster preparedness and climate risk assessments in a warming world where extremes are becoming more frequent.

Studies show that ML techniques can improve both the lead time and accuracy of extreme event forecasts. A 2025 review in Nature Communications notes that AI-based pattern recognition and anomaly detection are helping forecasters pinpoint incipient extremes and their driving factors. In practice, Google DeepMind’s GraphCast system demonstrated state-of-the-art skill in predicting extreme weather: it outperformed leading numerical models in forecasting events like tropical cyclones and heatwaves up to 10 days ahead. GraphCast also accurately predicted Hurricane Lee’s 2023 landfall location in Canada well before traditional models, highlighting AI’s ability to forecast high-impact events with greater precision. Separately, in 2023 an AI model detected climate change “fingerprints” in daily heavy rainfall statistics, indicating that intensifying downpour extremes can now be statistically attributed to greenhouse-gas warming. Together, these advances illustrate how AI is pushing the frontiers of extreme event prediction, providing more timely and confident warnings that can save lives and property in an era of growing climate extremes.

8. Uncertainty Quantification

AI approaches are improving how we quantify uncertainty in climate projections. Instead of single “deterministic” forecasts, machine learning models can efficiently generate large ensembles of plausible climate outcomes, providing probabilities and confidence intervals. Probabilistic ML techniques (like deep generative models or Bayesian neural networks) capture a wider range of possible future climate states and their likelihoods. This enhanced uncertainty quantification gives policymakers a clearer picture of risks—for example, the odds of extreme sea-level rise or temperature thresholds—enabling better risk management and adaptation planning.

Recent work demonstrates that AI can produce ensemble climate predictions on par with or better than traditional methods, but much faster. DeepMind’s GenCast model is a prime example: it generates a 50-member ensemble of 15-day global forecasts in about 8 minutes, outperforming the world’s top operational ensemble (ECMWF’s) in 97% of the weather metrics tested. GenCast’s probabilistic forecasts were not only more skillful overall but also better captured extreme events and tropical cyclone tracks, indicating improved uncertainty representation at long lead times. On the climate side, Lopez-Gomez et al. (2024) introduced a “dynamical-generative downscaling” that merges physical and AI models to downscale multi-model ensembles; notably, their approach provided more accurate uncertainty bounds for regional climate outcomes than either smaller physical ensembles or conventional statistical methods. By analyzing dozens of model outputs simultaneously, the AI identified common signals and reduced noise, sharpening the range of probable future climates. These advances show how AI is enabling larger and more informative climate ensembles, giving stakeholders better information about the range of possible futures and their associated probabilities.
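Once an ensemble exists, turning it into the probabilities and confidence intervals mentioned above is straightforward arithmetic. The sketch below assumes a hypothetical 10-member ensemble of end-of-century warming values; the function names are illustrative, and the interval uses a crude nearest-rank rule rather than an interpolated percentile.

```python
def exceedance_probability(ensemble, threshold):
    """Fraction of ensemble members strictly above the threshold."""
    return sum(1 for member in ensemble if member > threshold) / len(ensemble)


def percentile_interval(ensemble, lower=0.05, upper=0.95):
    """Nearest-rank interval between two percentiles of the ensemble."""
    ordered = sorted(ensemble)
    last = len(ordered) - 1
    return ordered[round(lower * last)], ordered[round(upper * last)]


# Hypothetical ensemble of projected warming (deg C), one value per member.
warming = [1.8, 2.1, 2.3, 2.4, 2.6, 2.7, 2.9, 3.1, 3.4, 3.9]

p_above_3 = exceedance_probability(warming, 3.0)
low, high = percentile_interval(warming)
print(f"P(warming > 3.0) = {p_above_3:.2f}, 5-95% range = {low} to {high}")
```

With only ten members the tails are poorly sampled, which is why the ability of ML models to generate large ensembles cheaply matters for estimating the odds of rare outcomes.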

9. Teleconnection Analysis

AI is uncovering hidden climate relationships known as teleconnections – linkages between distant regions’ climate patterns (like how El Niño in the Pacific affects rainfall elsewhere). Using machine learning, scientists can sift through vast climate datasets to find correlated patterns across the globe that traditional methods might miss or underutilize. AI models can also strengthen predictions by explicitly learning these connections (for example, using indices like ENSO, Indian Ocean Dipole, etc. as inputs). Overall, machine learning provides a powerful, objective way to detect and leverage teleconnections, improving seasonal forecasts and our understanding of the global climate network.

Recent applications show AI can both identify teleconnection patterns and exploit them for better forecasts. Chen et al. (2024) introduced a deep learning model (FuXi-S2S) that significantly improved 42-day global forecasts by capturing teleconnections like the Madden–Julian Oscillation (MJO). FuXi-S2S extended skillful MJO predictions from 30 to 36 days and realistically represented how the MJO’s tropical convective signal propagates and influences remote rainfall. In doing so, the model effectively learned the MJO’s global teleconnection impacts (e.g. it “accurately predict[s] the Madden-Julian Oscillation…and captures realistic teleconnections associated with the MJO”). Similarly, an explainable AI study by Pinheiro and Ouarda (2025) developed a sequence-to-sequence model that uses major climate indices (ENSO, NAO, etc.) to forecast seasonal rainfall; the ML model (called TelNet) not only improved precipitation forecast accuracy in teleconnection-sensitive regions, but also revealed which indices were most influential for a given season. These examples illustrate how machine learning can deepen teleconnection analysis – from discovering new patterns to harnessing known climate linkages – ultimately enhancing prediction and understanding of distant climate influences.
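The classical starting point for teleconnection analysis, which the ML approaches above extend, is a lagged correlation between a climate index and a remote variable. The sketch below is a minimal example on synthetic data: the "index" is a sine wave and the "rainfall" series is constructed to follow it two time steps later, so both series and all names are assumptions for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def lagged_correlations(index, response, max_lag):
    """Correlate index[t] with response[t + lag] for lag = 0..max_lag."""
    result = {}
    for lag in range(max_lag + 1):
        result[lag] = pearson(index[: len(index) - lag], response[lag:])
    return result


# Synthetic index and a remote response that follows it two steps later.
index = [math.sin(0.7 * t) for t in range(40)]
rainfall = [0.0, 0.0] + [2.0 * value + 1.0 for value in index[:-2]]

correlations = lagged_correlations(index, rainfall, max_lag=4)
best_lag = max(correlations, key=correlations.get)
print(best_lag, round(correlations[best_lag], 3))
```

A linear lagged correlation only finds one-to-one, fixed-delay relationships; models such as those described above are meant to capture cases where several indices interact or the response is nonlinear.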

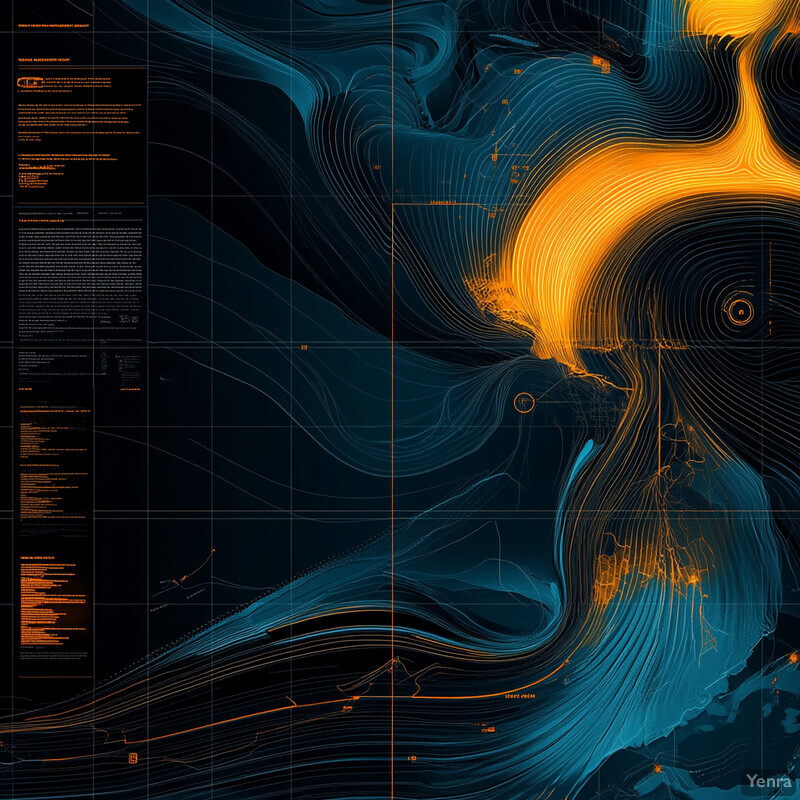

10. Nonlinear Trend Detection

AI is enabling the detection of subtle or nonlinear climate trends and regime shifts that might elude classical statistical methods. By analyzing long climate data series (from observations or model outputs), machine learning algorithms can identify changes in climate behavior – for example, an abrupt shift in ocean circulation or a nonlinear increase in heatwave frequency – that are not obvious with straight-line (linear) trend analysis. These AI-driven approaches (including deep neural nets and pattern-recognition techniques) can account for complex interactions and variability, providing early warning of emerging climate regime changes or tipping points.

Machine learning-based analyses have revealed meaningful climate shifts that traditional methods struggle to quantify. For instance, Couespel et al. (2024) used kernel-based ML to reconstruct and project interannual variability in global ocean CO₂ fluxes. Their analysis uncovered distinct “regime shifts” in the variability of these fluxes under future warming – essentially showing that ocean–atmosphere carbon exchange will enter a new variability regime as climate change progresses. In another study, Dakos et al. (2024) reviewed over 200 cases of tipping point detection across climate and ecological systems using data-driven early-warning indicators. They found that in a majority of real-world cases, these AI and statistical early-warning methods successfully detected oncoming abrupt shifts before they occurred. Moreover, Ham et al. (2023) demonstrated that a deep learning model could detect the emerging nonlinear signal of climate change in daily precipitation data despite large natural variability. Taken together, such results show that AI methods can tease out weak or complex climate signals – whether it’s an approaching tipping point or a hidden trend change – much earlier and more reliably, helping scientists anticipate significant climate shifts.
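The contrast between straight-line trend analysis and regime-shift detection can be shown with a small classical baseline. The sketch below fits both a linear trend and a two-segment "mean shift" model to a synthetic series and compares their squared errors. It is not the method used in the studies above, and the series and function names are hypothetical.

```python
def sse_of_mean(values):
    """Sum of squared deviations of the values from their own mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_mean_shift(series, min_segment=3):
    """Return (split_index, sse) of the best two-segment constant-mean fit."""
    best = None
    for k in range(min_segment, len(series) - min_segment + 1):
        sse = sse_of_mean(series[:k]) + sse_of_mean(series[k:])
        if best is None or sse < best[1]:
            best = (k, sse)
    return best


def linear_fit_sse(series):
    """Sum of squared residuals around the least-squares straight line."""
    n = len(series)
    times = list(range(n))
    mean_t = sum(times) / n
    mean_y = sum(series) / n
    slope = (
        sum((t - mean_t) * (y - mean_y) for t, y in zip(times, series))
        / sum((t - mean_t) ** 2 for t in times)
    )
    intercept = mean_y - slope * mean_t
    return sum((y - (intercept + slope * t)) ** 2 for t, y in zip(times, series))


# Synthetic series with an abrupt shift from about 0 to about 1 at index 6.
series = [0.1, -0.1, 0.0, 0.1, -0.1, 0.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0]

split, step_sse = best_mean_shift(series)
print(split, step_sse, linear_fit_sse(series))
```

Here the step model fits far better than the straight line, flagging a regime shift rather than a gradual trend. Real climate series are noisier and autocorrelated, which is where the more flexible ML detectors are intended to help.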

11. Ocean-Atmosphere Coupling Improvements

AI is helping improve the representation of ocean–atmosphere interactions in climate models. Key climate phenomena – like El Niño, monsoons, or ocean currents – result from tightly coupled oceanic and atmospheric processes that can be hard to model. Machine learning offers new ways to capture these interactions, either by serving as a fast “coupler” between ocean and atmosphere components or by learning complex feedbacks directly from data. By better simulating exchanges of heat, moisture, and momentum at the air–sea interface, AI-augmented models produce more accurate climate dynamics (e.g. realistic ENSO behavior or monsoon variability), which enhances seasonal forecasting and climate projection skill.

A notable example is the “Ola” AI Earth system model developed by Wang et al. (2024). Ola employs separate neural networks for the atmosphere and ocean that communicate with each other, successfully emulating seasonal ocean–atmosphere coupled dynamics. This AI model was able to internally generate a realistic El Niño/Southern Oscillation (ENSO) – reproducing the characteristic amplitude, spatial pattern, and even the vertical thermal structure of ENSO in the ocean mixed layer. Additionally, Ola captured tropical coupled wave phenomena with correct phase speeds, indicating it learned essential air–sea feedback physics. In forecasting applications, AI has extended the lead time for coupled climate phenomena: for instance, a multi-task deep learning model could predict the Indian Ocean Dipole (an ocean–atmosphere oscillation) up to 7 months ahead – far beyond the ~3 months of traditional models – by learning the nonlinear ocean–atmosphere interactions driving that pattern. Together, these advances show that AI can both replicate known ocean–atmosphere coupling (like ENSO teleconnections) and improve predictive capability for coupled climate modes, thereby strengthening climate model performance on seasonal to interannual timescales.

12. Cloud and Aerosol Modeling

AI is being leveraged to improve simulations of clouds and aerosols, which are among the most challenging aspects of climate modeling. Machine learning can learn complex cloud processes (like raindrop formation or cloud optical properties) from high-resolution simulations or detailed observations, and then serve as a fast surrogate in climate models. Similarly, AI models can better represent aerosols – tiny particles that affect clouds and radiation – by emulating their behavior and interactions. These AI-driven improvements reduce longstanding uncertainties in cloud and aerosol effects (especially their impact on sunlight and climate), leading to more reliable estimates of future climate change.

Researchers have achieved promising results using neural networks to replace or augment cloud/aerosol modules. For example, Geiss et al. (2023) developed a neural network that emulates the aerosol optical property calculations in the DOE E3SM model. This AI model was trained on millions of Mie-scattering computations and outperformed the standard aerosol optics parameterization – cutting errors in predicted radiative properties and enabling the use of more complex aerosol physics without extra cost. In parallel, Arnold et al. (2024) successfully embedded a deep-learning microphysics emulator (SuperdropNet) into the ICON climate model to simulate cloud droplet collision–coalescence. The ML emulator ran stably in the model and produced physically consistent results, closely matching the traditional scheme’s output while being much faster. These advances imply that AI can significantly enhance cloud and aerosol representations – for instance, by allowing climate models to include detailed aerosol-cloud interactions or precipitation processes that were previously too computationally expensive – thereby reducing one of the largest uncertainties in climate projections.

13. Paleo-Climate Reconstructions

AI is revolutionizing the reconstruction of past climates (paleoclimate) from proxy records like tree rings, ice cores, and sediments. Traditional methods often use linear correlations to infer past climate variables, but machine learning can handle the complex, nonlinear relationships between proxies and climate conditions. By training on periods with both proxy data and instrument data, AI models learn to translate proxy patterns into estimates of past temperature, rainfall, etc. These reconstructions can extend our climate knowledge centuries to millennia back, providing a richer context for current climate change and testing climate model simulations of past eras.

Recent studies have demonstrated AI’s prowess in extracting climate signals from proxy archives. Karamperidou (2024) used a deep learning model to infer summertime atmospheric blocking frequencies over the last 1000 years from tree-ring-based temperature reconstructions. The AI-driven “paleoweather” reconstruction revealed that during the Little Ice Age, a weakened tropical Pacific gradient corresponded to significantly reduced (but more variable) blocking in the Northern Hemisphere – a nuanced insight that required sifting out synoptic-scale signals from low-res proxy data. In another example, Hunt and Harrison (2025) developed an explainable CNN to reconstruct 500 years of South Asian monsoon rainfall using various paleoclimate records (tree rings, speleothems, etc.). The model successfully captured known mega-droughts (mid-17th and 19th centuries) and multidecadal monsoon swings, and it identified volcanic eruptions as consistently followed by weak monsoons. Significantly, their AI framework could also explain itself – showing which regional proxy patterns and climate drivers (like ENSO) the model used for its reconstruction. These cases illustrate how machine learning is pushing paleoclimate research beyond simple correlations, allowing more detailed and robust climate histories to be drawn from the natural archives.

14. Accelerating Forecast Computations

AI is dramatically speeding up weather and climate forecasts by serving as ultra-fast approximations of traditional models. Complex numerical weather prediction can take hours on supercomputers; in contrast, modern AI forecast models (once trained) can produce comparable forecasts in seconds to minutes on much cheaper hardware. By using machine learning surrogates for the time-consuming computations, forecasters can run many more simulations or higher-resolution models within the same time. Faster turnaround times allow more frequent forecast updates and exploration of more scenarios (ensembles), ultimately improving forecast reliability and lead times for extreme events.

The efficiency gains from AI-based forecasting are large. Recent ML weather models like GraphCast and FourCastNet can generate a 10-day global forecast in under a minute, compared to about an hour for conventional models on a supercomputer. In terms of speed-up, AI forecast emulators have been reported to be on the order of 10,000× faster than numerical models of similar resolution. For example, DeepMind’s GraphCast produced operational-quality 10-day forecasts for hundreds of variables with better accuracy than ECMWF’s model, all while running on a single machine orders of magnitude faster. Likewise, the GenCast system (2025) delivers a full probabilistic medium-range forecast (a 50-member ensemble for 15 days) in ~8 minutes, a task that would normally require a large supercomputer and substantial time. These advances mean agencies can issue forecasts or climate simulations far more frequently and include more ensemble members. Faster forecasting not only improves the timeliness of weather warnings (e.g. enabling hourly updates on hurricane tracks) but also allows deeper analysis of forecast uncertainty by running dozens of scenarios that were previously impractical due to computation limits.

15. Real-Time Data Assimilation

AI techniques are streamlining data assimilation (the process of integrating new observations into running models), making it faster and more adaptive. Traditional data assimilation can struggle with huge volumes of data and nonlinearity. Machine learning offers new frameworks (like neural network assimilators or hybrid ML-physical schemes) that can handle complex observation patterns (e.g. satellites, radar, IoT sensors) in real time and adjust model states more accurately. By quickly ingesting and making sense of diverse data, AI-enhanced assimilation keeps weather and climate simulations more tightly “on track” with reality, improving forecast accuracy and the model’s responsiveness to sudden changes (like developing storms).

Emerging AI-based assimilation frameworks show clear benefits in utilizing more data and reducing analysis error. One example is the FuXi-DA system (2025), a deep learning assimilation approach designed to directly ingest satellite radiances into a forecasting model. Tests with China’s FY-4B satellite data found that FuXi-DA’s neural network assimilation consistently improved 0–12 h forecast accuracy for multiple variables compared to not assimilating those data. The AI was able to effectively use observations that traditional schemes often discard (like cloud-affected infrared measurements), thereby yielding better initial conditions and forecasts. Additionally, FuXi-DA operates efficiently – it extends the assimilation window and optimizes over a longer forecast period via a learned surrogate, which helps extract more information from each observation. Separately, NOAA and others are experimenting with AI to accelerate 4D-Var and EnKF algorithms; early results indicate ML can reduce the computational cost of these complex optimization problems, enabling more frequent updates and the assimilation of all available observations (e.g. hundreds of millions per day) rather than a thin subset. In summary, AI is beginning to alleviate long-standing bottlenecks in data assimilation, leading to more data-rich and timely initialization of models for better forecasting.
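At its core, every assimilation scheme performs some version of the same update: blend the model's prior estimate (the "background") with an observation, weighting each by how much it is trusted. The sketch below shows the scalar form of the standard Kalman analysis step that underlies methods such as the EnKF; the function name and the temperature values are assumptions for illustration, and operational systems apply the same idea to state vectors with millions of elements.

```python
def analysis_update(background, background_var, observation, observation_var):
    """Scalar Kalman analysis step: blend a forecast with one observation.

    The gain is the fraction of the observation-minus-background difference
    that is applied; it approaches 1 when the background is very uncertain
    and 0 when the observation is very uncertain.
    """
    gain = background_var / (background_var + observation_var)
    analysis = background + gain * (observation - background)
    analysis_var = (1.0 - gain) * background_var
    return analysis, analysis_var


# Hypothetical example: the model forecasts 10.0 deg C, a sensor reads 12.0,
# and both are assigned the same error variance.
analysis, variance = analysis_update(10.0, 4.0, 12.0, 4.0)
print(analysis, variance)
```

The analysis variance is always smaller than either input variance, which is the formal sense in which each assimilated observation adds information. Learned assimilation schemes aim to approximate or improve this weighting when the errors are nonlinear or poorly characterized.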

16. Automatic Feature Extraction

AI is automating the identification of important atmospheric features in the deluge of climate data. Instead of manually searching weather maps for patterns like hurricanes, atmospheric rivers, jet streams, or polar vortices, researchers can train computer vision models to detect these features in model outputs or observations. Deep learning image recognition, for example, can scan through decades of climate data to tag occurrences of extreme storms or persistent patterns. This automated feature extraction greatly speeds up data analysis and allows consistent, objective tracking of phenomena that are critical for climate monitoring and research.

Deep learning models have proven adept at identifying climate features with high accuracy. A notable effort is the ClimateNet project, where convolutional neural networks were trained on expert-labeled data to detect atmospheric rivers (ARs) and tropical cyclones in global datasets. Building on that, O’Brien et al. (2024) developed ARCNN, a set of CNNs that not only detect ARs in various datasets but also quantify the uncertainty in their detection. Using a semi-supervised learning and image style-transfer approach, ARCNNs generalized across different climate model outputs and reanalyses without needing new labeled examples. These AI models accurately identified filamentary AR structures and allowed calculation of AR contributions to global heat transport, which previously depended on many disparate algorithms. In another case, researchers trained a U-Net (deep segmentation model) to detect atmospheric rivers in satellite imagery, achieving over 90% precision and recall – significantly better consistency than manual or threshold-based methods. Similar approaches have been used to track hurricanes and mesoscale convective systems automatically. By removing human subjectivity and labor, AI-driven feature extraction provides a powerful, scalable means to mine climate datasets for critical patterns, leading to more comprehensive statistics of extreme events and improved model evaluation.
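Detection skill figures such as "over 90% precision and recall" are computed by comparing a predicted feature mask against a labeled one. The sketch below shows that calculation, using a simple threshold detector as the baseline a neural network would be compared with. The transport values, labels, and thresholds are made up for illustration.

```python
def threshold_mask(field, threshold):
    """Flag every grid point whose value meets or exceeds the threshold."""
    return [value >= threshold for value in field]


def precision_recall(predicted, truth):
    """Precision and recall of a predicted boolean mask against labels."""
    pairs = list(zip(predicted, truth))
    true_pos = sum(1 for p, t in pairs if p and t)
    false_pos = sum(1 for p, t in pairs if p and not t)
    false_neg = sum(1 for p, t in pairs if not p and t)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


# Hypothetical moisture-transport values along a transect, with expert labels
# marking which points belong to an atmospheric river.
transport = [100.0, 300.0, 520.0, 610.0, 240.0, 90.0]
labels = [False, True, True, True, False, False]

for threshold in (250.0, 500.0):
    mask = threshold_mask(transport, threshold)
    print(threshold, precision_recall(mask, labels))
```

Raising the threshold in this example keeps precision perfect but misses one labeled point, lowering recall. This sensitivity to a hand-picked cutoff is one reason learned detectors are attractive.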

17. Improved Aerosol-Cloud Interactions

AI is helping demystify and better represent the complex interactions between aerosols (tiny particles like dust or pollution) and clouds. These interactions (for example, aerosols serving as cloud condensation nuclei, altering cloud brightness and lifetime) are a major source of uncertainty in climate predictions. Machine learning can analyze vast observational datasets to isolate aerosol effects on clouds from meteorological noise, and can create more accurate parameterizations of these effects for models. By doing so, AI reduces uncertainty in aerosol indirect forcing – how aerosols ultimately cool or warm the climate via clouds – leading to more confident climate sensitivity estimates.

Recent studies highlight AI’s ability to quantify aerosol–cloud effects more precisely. For instance, Yuan et al. (2024) applied a machine learning surrogate approach on satellite data of volcanic aerosol “natural experiments.” The ML diagnosed how an increase in aerosol from volcanic SO₂ emissions changed cloud properties by comparing to a counterfactual (no-aerosol) scenario. It found that in shallow convective cloud regions, aerosol injections produced significantly more cloud cover and precipitation, reflecting more solar radiation and inducing a strong local cooling. This aerosol-induced cloud cover increase was identified as a dominant cooling mechanism, providing observational evidence for a substantial negative radiative forcing from aerosols. In a complementary approach, Andersen and Cermak (2024) used gradient-boosted trees with explainable AI (SHAP values) on 9 years of satellite data to tease out cloud fraction adjustments to aerosol changes under varying meteorological conditions. They quantified, globally, that marine low clouds tend to increase in fractional coverage with higher aerosol (cloud droplet) concentrations, especially in transition zones between stratocumulus and cumulus regimes. Crucially, the ML could separate meteorology’s influence, confirming that aerosol effects are amplified under certain conditions (e.g. strong temperature inversions). These AI-enabled findings reduce uncertainty in how aerosols modify clouds, suggesting that increased aerosol loads (from natural or human sources) likely exert a stronger cooling influence than many models had captured. Incorporating such data-driven insights into climate models should help narrow the range of projected future warming.

18. Detection of Climate Change Signals

AI is bolstering the detection of human-induced climate change signals in the midst of natural climate variability. By using pattern recognition and anomaly detection, machine learning can discern subtle shifts or trends in climate data that signify long-term change – even when those signals are obscured by short-term fluctuations (like year-to-year weather noise). These techniques improve attribution studies by identifying the “fingerprints” of greenhouse warming on variables such as temperature extremes, rainfall patterns, or storm intensity. In short, AI helps separate the climate change signal from the noise, providing more robust evidence of anthropogenic impacts.

Machine learning methods have successfully extracted clear human-caused climate signals where traditional approaches had difficulty. A striking example is the study by Ham et al. (2023), in which a convolutional neural network was trained on global daily precipitation maps to detect emergent warming-induced patterns. The deep learning model “successfully detects the emerging climate-change signals in daily precipitation fields”, meaning it found statistically significant changes in rainfall intensity and frequency attributable to anthropogenic forcing even though natural variability is high at local scales. This provided early detection of precipitation pattern shifts consistent with climate model projections. Another example uses AI on extreme event data: a 2022 study employed neural networks to identify the fingerprint of climate change in the increasing severity of heatwaves, which standard linear trend analyses had understated. In general, pattern-recognition AI (such as classification or clustering algorithms) applied to long-term climate datasets has reinforced attribution conclusions – for instance, distinguishing warming-related increases in record-high temperatures from the noise of year-to-year variability with greater confidence (and doing so on regional scales that were previously too noisy for attribution). These advances lend stronger scientific evidence that recent changes (like more frequent heavy rainfall or intense heat) are outside the range of natural variability and bear the signature of human influence, thereby informing policymakers and the public with high-certainty findings.
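The logic of detecting a forced signal in noisy daily maps can be sketched in a few lines. This is a deliberately simplified stand-in, not the approach of Ham et al.: a linear ridge regression replaces the convolutional network, the "maps" are synthetic vectors of 40 grid cells, and all names and parameters (fit_ridge, detection_index, the 20-year baseline, the two-standard-deviation threshold) are assumptions made for illustration. The regression learns to map a single day's field to a scalar index of the forcing level, and the signal is considered detected when the index in recent years leaves the range seen in an early baseline period.

```python
import numpy as np

def fit_ridge(maps, target, alpha=1.0):
    """Ridge regression from flattened daily maps to a scalar forcing index."""
    map_mean = maps.mean(axis=0)
    target_mean = target.mean()
    centered = maps - map_mean
    gram = centered.T @ centered + alpha * np.eye(maps.shape[1])
    weights = np.linalg.solve(gram, centered.T @ (target - target_mean))
    return weights, map_mean, target_mean

def detection_index(maps, weights, map_mean, target_mean):
    """Project each daily map onto the learned pattern to get one index per day."""
    return (maps - map_mean) @ weights + target_mean

# Synthetic data (assumption): a fixed spatial fingerprint scaled by a slowly
# rising forcing, buried in day-to-day noise that is larger than the signal.
rng = np.random.default_rng(1)
n_years, n_days, n_cells = 60, 50, 40
forcing = np.linspace(0.0, 1.0, n_years)
fingerprint = rng.normal(scale=0.5, size=n_cells)
noise = rng.normal(size=(n_years, n_days, n_cells))
maps = forcing[:, None, None] * fingerprint[None, None, :] + noise

# Fit on even-numbered days, evaluate on odd-numbered days of every year.
train_maps = maps[:, ::2, :].reshape(-1, n_cells)
train_target = np.repeat(forcing, maps[:, ::2, :].shape[1])
test_maps = maps[:, 1::2, :]

weights, map_mean, target_mean = fit_ridge(train_maps, train_target)
daily_index = detection_index(test_maps.reshape(-1, n_cells),
                              weights, map_mean, target_mean)
annual_index = daily_index.reshape(n_years, -1).mean(axis=1)

# Detection test: does the last decade exceed the early-period range?
baseline = annual_index[:20]
threshold = baseline.mean() + 2.0 * baseline.std()
late_mean = annual_index[-10:].mean()
detected = bool(late_mean > threshold)
print(f"threshold={threshold:.2f}, last-decade index={late_mean:.2f}, detected={detected}")
```

The point of the sketch is that no single grid cell needs to show a significant trend: the index pools weak evidence across the whole map, which is what lets pattern-based methods detect signals earlier than cell-by-cell trend tests.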

19. Early Warning Systems

AI is enhancing early warning systems for climate-related hazards by improving the prediction of events like droughts, floods, and crop failures months in advance. These systems are integrated platforms that combine climate forecasts with socioeconomic and on-the-ground data, using machine learning to identify patterns that precede disasters (e.g. soil moisture deficits, vegetation stress). The AI-driven alerts give governments and organizations more lead time to respond – for example, mobilizing drought relief or adjusting water management before a crisis fully develops. By synthesizing diverse indicators into clear risk forecasts, AI-based early warnings support proactive disaster risk reduction in a changing climate.

Around the world, AI early warning tools are showing tangible success. In Chile, an agricultural AI system now predicts drought conditions 3 months ahead with about 95% accuracy, allowing farmers and authorities to take action to mitigate crop losses. This machine learning tool analyzes climate data and satellite imagery to forecast soil moisture and precipitation deficits well before traditional methods signal a drought. In California, the AlertCalifornia AI fire detection system scans feeds from over 1,000 cameras statewide and has cut wildfire response times dramatically – in one case, spotting a fire 40+ minutes before the first 911 call. By detecting smoke plumes early (even at night) and immediately alerting firefighters with the location and confidence level, the AI system has helped contain fires at under one acre on multiple occasions. Similarly, humanitarian agencies are employing AI models (like WFP’s HungerMap) that combine rainfall forecasts, crop indices, and market data to predict food insecurity hotspots months in advance, enabling preemptive aid. These examples underscore that AI-driven early warnings – from drought prediction to wildfire detection – are not just theoretical; they are actively reducing harm by enabling earlier and more targeted interventions in climate-related emergencies.
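To make the idea of turning precursor indicators into a risk forecast concrete, here is a small sketch under heavy assumptions. It does not reproduce any of the operational systems mentioned above: the data are synthetic, the two predictors (standardized soil moisture and vegetation anomalies) and the 0.5 alert threshold are hypothetical, and a hand-written logistic regression stands in for whatever models those systems actually use.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(features, labels, lr=0.1, steps=2000):
    """Logistic regression fitted by plain gradient descent on the mean log-loss."""
    design = np.column_stack([np.ones(len(features)), features])
    weights = np.zeros(design.shape[1])
    for _ in range(steps):
        gradient = design.T @ (sigmoid(design @ weights) - labels) / len(labels)
        weights -= lr * gradient
    return weights

def drought_risk(weights, features):
    """Predicted probability of drought for each row of indicator values."""
    design = np.column_stack([np.ones(len(features)), features])
    return sigmoid(design @ weights)

# Synthetic history (assumption): drought is more likely when soil moisture
# and vegetation anomalies observed months earlier were both negative.
rng = np.random.default_rng(3)
n_samples = 1000
soil_moisture = rng.normal(size=n_samples)
vegetation = rng.normal(size=n_samples)
true_logit = -1.0 - 2.0 * soil_moisture - 1.5 * vegetation
drought = (rng.random(n_samples) < sigmoid(true_logit)).astype(float)

features = np.column_stack([soil_moisture, vegetation])
train, test = slice(0, 800), slice(800, None)

weights = fit_logistic(features[train], drought[train])
risk = drought_risk(weights, features[test])
alerts = risk > 0.5  # hypothetical alert threshold
accuracy = float(np.mean(alerts == (drought[test] == 1.0)))
print(f"Held-out accuracy of alerts: {accuracy:.2f}")
```

In practice the alert threshold is a policy choice rather than a modeling one: lowering it buys more lead time and fewer missed events at the cost of more false alarms.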

20. Model Intercomparison and Synthesis

AI is being used to intelligently compare and combine the results of many different climate models, a process known as model intercomparison. Traditionally, scientists look at a range of models to gauge uncertainty and identify robust signals, but this can be cumbersome and qualitative. Machine learning can analyze multi-model datasets to detect common patterns, systematic biases, or relationships between model differences and certain parameters. AI algorithms (including ensemble learning) can also weight or fuse model outputs based on performance. The result is a synthesized projection that leverages the strengths of each model, potentially yielding more accurate and consistent climate predictions for use in assessments like the IPCC.

Researchers have introduced ML frameworks to enhance multi-model climate projections. For example, Khosravi et al. (2025) developed a Stacking Ensemble Machine Learning (EML) method that merges outputs from five different ML models trained on CMIP6 climate model data. Their stacked model significantly improved the projection accuracy of regional climate variables in the Middle East compared to any single model or the raw CMIP6 ensemble. It achieved R² values of about 0.99 for seasonal maximum temperatures (versus about 0.9 for the best individual model) by learning the optimal combination of models under various scenarios. The approach also narrowed the projection spread – for instance, pinpointing that under a high emissions scenario (SSP5-8.5), parts of the Arabian Peninsula will likely see summer temperatures above 45 °C with concurrent drying, a result consistent across the optimized ensemble. This illustrates that AI can systematically identify how model outputs differ and how to blend them for better predictions. Additionally, unsupervised ML clustering has been used in projects like CMIP to group climate models by similarity, revealing that certain models consistently project outlier warming patterns (indicating where model development should focus). By using AI to handle the complexity of dozens of model outputs, the climate science community can extract clearer, more reliable insights – essentially letting the “ensemble of models” speak with a more unified and calibrated voice about future climate changes.
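The stacking idea can be shown with a toy example. This is a sketch only and not the EML pipeline of Khosravi et al.: the five "members" are synthetic series with made-up biases and noise levels, the meta-learner is an ordinary least-squares combination, and all function and variable names are hypothetical. The stacker learns one weight per member plus an intercept on a training period, and is then compared on held-out data against each member alone and against the unweighted ensemble mean.

```python
import numpy as np

def r_squared(truth, prediction):
    """Coefficient of determination of a prediction against the truth."""
    residual = np.sum((truth - prediction) ** 2)
    total = np.sum((truth - truth.mean()) ** 2)
    return 1.0 - residual / total

def fit_stacker(member_predictions, truth):
    """Least-squares meta-learner: intercept plus one weight per ensemble member."""
    design = np.column_stack([np.ones(len(truth)), member_predictions])
    coef, *_ = np.linalg.lstsq(design, truth, rcond=None)
    return coef

def apply_stacker(coef, member_predictions):
    return coef[0] + member_predictions @ coef[1:]

# Synthetic data (assumption): "observed" seasonal maximum temperatures and
# five members that each see the truth with their own bias and noise level.
rng = np.random.default_rng(2)
n_samples, n_members = 300, 5
truth = 40.0 + rng.normal(scale=3.0, size=n_samples)
biases = np.array([1.5, -1.0, 0.5, 2.0, 2.5])
noise_sd = np.array([0.8, 1.0, 1.2, 1.5, 2.0])
members = truth[:, None] + biases + rng.normal(size=(n_samples, n_members)) * noise_sd

train, test = slice(0, 200), slice(200, None)
coef = fit_stacker(members[train], truth[train])
stacked = apply_stacker(coef, members[test])

r2_members = [r_squared(truth[test], members[test, i]) for i in range(n_members)]
r2_mean = r_squared(truth[test], members[test].mean(axis=1))
r2_stacked = r_squared(truth[test], stacked)
print("individual members:", np.round(r2_members, 3))
print(f"unweighted mean: {r2_mean:.3f}, stacked: {r2_stacked:.3f}")
```

The stacker gains in two ways here: the intercept and weights remove systematic bias, and noisier members are down-weighted instead of counting equally. Real multi-model stacking has to guard against overfitting when the observational record is short relative to the number of members, which this toy setup does not address.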