1. Increased Accuracy

Artificial intelligence has dramatically improved the accuracy of image recognition over the past decade. Modern deep learning models can identify objects and patterns in images with precision that often exceeds human performance on benchmark tasks. This leap in accuracy enables reliable applications in fields like medical imaging, autonomous vehicles, and quality control, where even minor errors can be costly. AI systems trained on massive datasets have learned to generalize and handle variations in lighting, angle, and background, reducing misclassifications. As a result, image recognition has reached a point of near-saturation on many standard benchmarks, prompting researchers to seek new challenges beyond basic accuracy gains.

A recent analysis by Stanford’s Institute for Human-Centered AI found that top image classification algorithms have virtually plateaued at very high accuracy levels. For example, the best ImageNet image classifier of 2021 achieved about 91% accuracy, and 2022’s best was only 0.1 percentage points better, indicating that these models are approaching the dataset’s upper limits. In many cases, AI image classifiers now outperform human baseline accuracy. This plateau underscores how far AI’s pattern recognition capabilities have improved, to the point that general image classification on well-defined benchmark tasks is widely treated as effectively solved.

2. Real-time Processing

AI-powered image recognition can now operate in real-time, transforming video and live camera feeds into actionable insights instantly. This real-time processing is possible due to more efficient algorithms and powerful hardware acceleration (GPUs and AI chips) that handle complex computations in milliseconds. Improvements in model design – such as optimized neural networks for speed – allow systems to detect and track objects on-the-fly without noticeable lag. The impact is evident in autonomous drones, self-driving cars, and live surveillance systems that depend on split-second image analysis. AI’s ability to process images in real-time has unlocked interactive applications like augmented reality filters and responsive robotics, where immediacy is essential.

Cutting-edge object detection models demonstrate how far real-time image recognition has come. In 2023, researchers introduced YOLO-NAS, an AI model created via neural architecture search, which is significantly faster and more accurate than previous generation models. The smallest version of YOLO-NAS achieves about 47.0% mean average precision (mAP) on the COCO dataset while processing an image in only 2.36 milliseconds – roughly 420 frames per second. In practical terms, this means a single AI system can analyze hundreds of video frames each second, detecting objects almost instantaneously. Such performance indicates that modern AI vision systems can perform complex recognition tasks in real-time without sacrificing accuracy.
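The conversion from per-image latency to frames per second quoted above is simple arithmetic, sketched below. The function name `frames_per_second` is illustrative, and the calculation assumes one image is processed at a time with no extra pre- or post-processing overhead, so real pipelines will typically land lower.

```python
def frames_per_second(latency_ms: float) -> float:
    """Convert per-image latency (milliseconds) into single-stream throughput."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    # There are 1000 ms in a second; one image is processed per latency interval.
    return 1000.0 / latency_ms


# Latency figure quoted above for the smallest YOLO-NAS variant.
fps = frames_per_second(2.36)
print(f"{fps:.0f} frames per second")  # prints "424 frames per second"
```

At 2.36 ms per image the result is about 424 frames per second, which matches the "roughly 420" figure in the text.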

3. Contextual Understanding

Beyond identifying individual objects, AI has advanced in contextual understanding of images – grasping relationships and the broader scene. This means AI can interpret an image more like a human would, understanding not just “what” is in the image but also “how” and “why” elements are present. For instance, vision-and-language models can generate captions or answer questions about an image, capturing context like actions (“a cat sitting on a sofa”) or even the mood or implication of a scene. This deeper understanding comes from AI models that combine visual processing with reasoning, often using multiple modalities (vision + language). The impact is richer image descriptions, improved content moderation (by recognizing context of potentially sensitive images), and more nuanced image searches where context matters.

Recent AI systems illustrate this leap toward human-like image comprehension. OpenAI’s GPT-4, a multimodal model, can analyze and explain images with remarkable depth. In tests, GPT-4 correctly explained the humor in a visual meme (a smartphone with a large, outdated VGA connector plugged into its charging port) by recognizing the objects and the absurd situation, something that requires understanding the image’s context and implied joke. In another example, GPT-4 examined a photograph and predicted what might happen next under hypothetical scenarios, demonstrating a grasp of physical context in the scene. These abilities – explaining jokes and anticipating outcomes from images – highlight how AI now goes beyond raw object recognition to interpret situational context and relationships within images.

4. Facial Recognition

AI has significantly improved facial recognition, making it faster and more accurate to identify or verify individuals from images. Modern face recognition systems use deep convolutional neural networks to encode facial features into embeddings, allowing reliable matching even across different lighting or angles. The accuracy gains have enabled widespread use cases: unlocking smartphones with a glance, automatically tagging friends in social media photos, streamlining passport control with e-gates, and assisting law enforcement in finding missing persons. However, the power of AI-driven facial recognition also raises privacy and ethical considerations, as the technology has become robust enough to identify people in crowds or CCTV footage in real time. Overall, the improvements in this domain showcase AI’s ability to recognize extremely subtle patterns in facial imagery, distinguishing among millions of individuals with high confidence.
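To make the embedding-matching idea concrete, here is a minimal sketch of how two face embeddings might be compared. The three-dimensional vectors and the 0.6 threshold are toy assumptions chosen for illustration; real systems use embeddings with hundreds of dimensions produced by a trained network and tune the threshold on validation data. Cosine similarity is one common comparison measure, not necessarily what any particular product uses.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def same_person(emb_a, emb_b, threshold=0.6):
    """Declare a match when similarity reaches the (hypothetical) threshold."""
    return cosine_similarity(emb_a, emb_b) >= threshold


# Toy embeddings standing in for the output of a face-encoding network.
enrolled = [0.9, 0.1, 0.4]
probe_same = [0.8, 0.2, 0.5]    # same face, different lighting
probe_other = [-0.3, 0.9, 0.1]  # a different face

print(same_person(enrolled, probe_same))   # True
print(same_person(enrolled, probe_other))  # False
```

Raising the threshold lowers false matches at the cost of more false non-matches, which is the trade-off that deployed systems have to tune.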

Facial recognition accuracy has followed a “Moore’s Law”-like improvement trajectory over the last thirty years, thanks to AI. According to the U.S. National Institute of Standards and Technology (NIST), error rates in face recognition have been halving every two years from 1993 up to 2023. If that rate held steadily, five halvings per decade would leave today’s best algorithms with error rates roughly 32 times lower than those from a decade ago, and several orders of magnitude lower than those of the early 1990s. In practical terms, current top-tier face recognition systems can correctly identify faces 99%+ of the time under ideal conditions, whereas early 2010s systems were far less reliable. NIST’s evaluations show that modern AI face matchers not only outperform earlier technology but often even surpass human performance in recognizing faces under certain test conditions. This drastic improvement has led to near-perfect verification rates in applications like smartphone face unlock and security checks, as well as significantly lower false-match and false-non-match rates in critical deployments.
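The compounding effect of "halving every two years" is easy to underestimate, so a short calculation may help. This sketch assumes the halving rate is perfectly steady, which is an idealization of the trend described above; the function name is illustrative.

```python
def error_reduction_factor(years: float, halving_period_years: float = 2.0) -> float:
    """Factor by which the error rate shrinks after `years` of steady halving."""
    return 2.0 ** (years / halving_period_years)


print(error_reduction_factor(10))  # 32.0    (five halvings in a decade)
print(error_reduction_factor(30))  # 32768.0 (fifteen halvings, 1993 to 2023)
```

Under that assumption, a system with a 10% error rate in 1993 would correspond to an error rate of about 0.0003% in 2023.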

5. Pattern Recognition

AI excels at pattern recognition in images, finding intricate structures and anomalies that might elude human vision. This capability extends image recognition beyond just classifying known objects – AI can detect subtle textures, repeating motifs, or irregular patterns indicative of specific conditions. For example, in medical imaging, AI systems analyze scans for minute patterns (like slight tissue density changes) that signal early disease. In environmental monitoring, AI can spot patterns of deforestation or urban development from satellite imagery over time. The impact of AI’s pattern recognition is profound: it enables early detection of problems (e.g. tumors, manufacturing defects) and the discovery of new patterns in large image datasets, aiding scientific research. By learning from vast amounts of visual data, AI finds correlations and markers that humans might miss, making image analysis both more comprehensive and more precise.

In healthcare, AI’s pattern-recognition prowess is achieving expert-level diagnostic accuracy from medical images. Recent studies report that AI algorithms can detect tumors in medical scans with about 94% accuracy, outperforming experienced radiologists in certain tasks. For instance, in one 2023 study an AI system slightly outscored pathologists (0.980 vs 0.969 AUC) in identifying colon cancer from histology images, demonstrating virtually equivalent performance to human experts. AI has also been used to analyze subtle patterns in retinal photographs to predict cardiovascular risk factors – something traditionally impossible by eye. These achievements underscore that AI isn’t just classifying obvious objects, but is capable of discovering and interpreting complex visual patterns (like early disease markers) with a level of accuracy and consistency that can rival top specialists. Such high accuracy in pattern recognition holds promise for earlier detection of diseases and more reliable analysis of any imagery where hidden patterns carry crucial information.
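The AUC figures above (0.980 vs 0.969) can be read as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one. The sketch below computes AUC that way on a tiny made-up set of labels and scores; the data is purely illustrative and not taken from any study.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical model scores: 1 = cancer present, 0 = cancer absent.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
print(round(roc_auc(labels, scores), 3))  # 0.889 (8 of 9 pairs ranked correctly)
```

An AUC of 1.0 would mean every positive case outranks every negative one, while 0.5 is no better than chance.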

6. Object Tracking

AI has greatly improved object tracking – the ability to follow moving objects across video frames. Using advanced algorithms, AI systems can maintain identities of multiple objects even as they change orientation or partially occlude each other. This is vital in applications like security surveillance (tracking intruders or vehicles across cameras), sports analytics (tracking players and the ball), and autonomous driving (following cars and pedestrians in real time). Deep learning has enabled trackers that combine detection and re-identification, so an object detected in one frame is recognized in subsequent frames through learned visual features. The impact is a more stable and accurate tracking performance, even in crowded or complex scenes. AI-based tracking systems today can handle dozens of objects simultaneously at high frame rates, making sense of dynamic visual environments with a level of reliability that manual tracking could never achieve.
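As a rough illustration of how identities can be carried from one frame to the next, the sketch below links existing tracks to new detections by bounding-box overlap (intersection over union, IoU). This is a deliberately simpler stand-in for the learned re-identification features described above, and the function names, box format `(x1, y1, x2, y2)`, and 0.3 threshold are all assumptions made for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily map each track id to the index of its best-overlapping detection."""
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        key=lambda t: t[0],
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    return matches


# Previous-frame tracks and current-frame detections (toy coordinates).
tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
detections = [(21, 21, 31, 31), (1, 0, 11, 10)]
print(associate(tracks, detections))  # {1: 1, 2: 0}
```

Overlap alone breaks down when objects cross or disappear behind each other, which is why the trackers described here add appearance features on top.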

Thanks to deep learning, the accuracy and speed of multi-object tracking systems have seen significant improvements in recent years. Modern trackers leverage robust object detectors and appearance models to keep consistent labels on objects through hundreds of frames. An analysis by computer vision experts noted that recent advances in neural networks have markedly boosted both tracking precision and frame processing rates, enabling real-time performance in practical scenarios. For example, an AI tracking engine developed by OpenCV.ai can run on small edge devices while still tracking people with high accuracy and stability. Likewise, academic benchmarks report substantial jumps in tracking metrics (like higher MOTA and IDF1 scores) with each new generation of algorithms. In summary, AI-driven trackers are now far more reliable, handling challenges like occlusion or fast motion such that they can follow multiple targets in complex scenes without losing track. This translates to smoother video analytics in everything from traffic monitoring to wildlife documentaries.
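For readers unfamiliar with the MOTA metric mentioned above, it combines three kinds of tracking error (missed objects, false alarms, and identity switches) relative to the number of ground-truth objects. The sketch below uses the standard definition with made-up counts; the numbers are illustrative only and do not come from any benchmark.

```python
def mota(false_negatives, false_positives, id_switches, num_ground_truth):
    """Multiple Object Tracking Accuracy: 1 - (FN + FP + IDSW) / GT."""
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_ground_truth


# Hypothetical error counts over a sequence with 1000 ground-truth boxes.
score = mota(false_negatives=50, false_positives=30, id_switches=20,
             num_ground_truth=1000)
print(f"MOTA = {score:.2f}")  # MOTA = 0.90
```

Because errors are summed, MOTA can go negative when a tracker makes more mistakes than there are ground-truth objects.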

7. Image Classification

Image classification – assigning an image to one or more categories – has been revolutionized by AI, turning it into a ubiquitous, highly accurate tool. Convolutional neural networks and vision transformers trained on millions of images can categorize photos into thousands of distinct labels (such as identifying a scene as “beach,” “forest,” or “city skyline”). This capability is foundational for organizing the vast amount of visual data generated every day: companies use AI to automatically tag and sort images, enabling features like searching your camera roll for “dogs” or filtering a database for “manufacturing defect.” The current role of AI in image classification is not limited to academic benchmarks; it’s deployed widely via cloud services and smartphone apps, making sophisticated vision available to developers and end-users. The impact includes streamlined workflows (no more manual tagging), improved content discovery, and new services like visual search engines that let users search using images themselves.
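The final step of a typical single-label classifier, turning raw network outputs into ranked category probabilities, can be sketched as follows. The logits and labels are invented for illustration, and the sketch covers only the single-label case; assigning several categories to one image would usually use independent per-label scores instead of softmax.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, labels, k=3):
    """Return the k most probable (label, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical network outputs for one photo.
labels = ["beach", "forest", "city skyline"]
logits = [2.0, 0.5, -1.0]
for label, prob in top_k(logits, labels, k=2):
    print(f"{label}: {prob:.2f}")
```

Here "beach" comes out on top because it has the largest logit; the softmax step only rescales the scores and never changes their order.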

The rapid adoption of AI for image classification is reflected in the market’s growth and scale. In industry, the technology has become so pervasive that the global image recognition market (which includes image classification services) was estimated at more than $50 billion in 2024, is projected to reach nearly $60 billion by 2025, and is expected to exceed $160 billion within the next decade as investment continues. Tech giants like Google, Microsoft, and Amazon report that their AI vision APIs are processing billions of image classification requests annually, from identifying products in e-commerce to filtering inappropriate content on social platforms. This explosive growth is fueled by the proven reliability of AI classifiers – for example, classifying everyday images (e.g., cats vs. dogs) is essentially a solved problem – and the expanding use cases across virtually every industry. In summary, AI-powered image classification is now a mainstream technology, underpinning everything from photo management in consumer apps to large-scale image analysis in enterprise and government, with a correspondingly large economic footprint.

8. Image Restoration

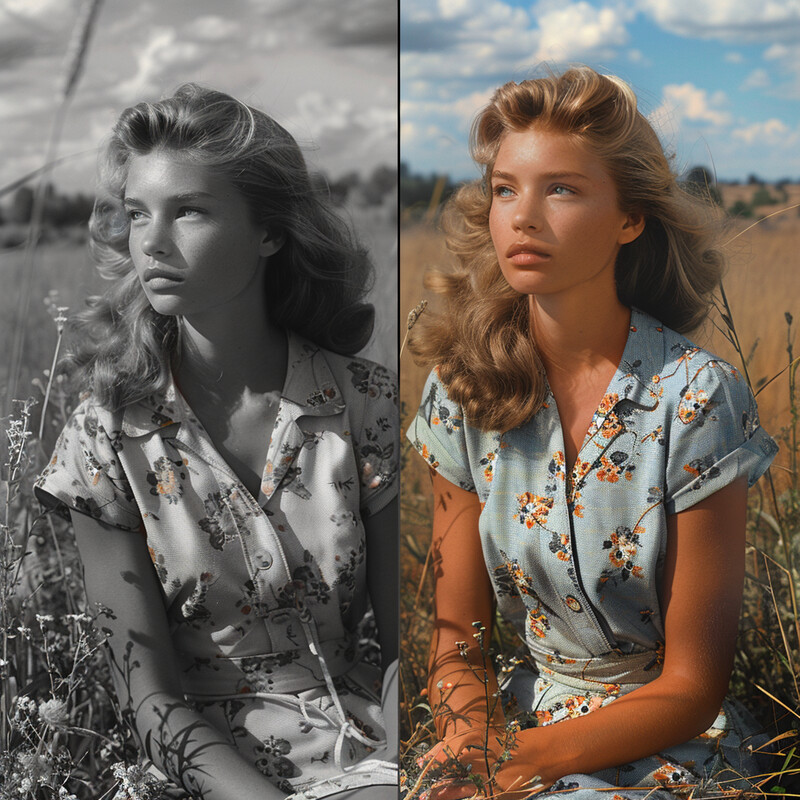

AI is transforming image restoration, allowing damaged or low-quality images to be enhanced with unprecedented ease. Using deep learning models trained on countless examples of pristine vs. degraded images, AI can intelligently fill in missing details (inpainting), remove noise and scratches, sharpen blurry regions, and even colorize black-and-white photos. Tasks that once required skilled manual retouching can now be done automatically or with minimal user input. The impact is particularly felt in areas like preservation of historical photographs and films – AI tools can revive century-old photos or improve old video footage to HD quality. Consumers too benefit via apps that can deblur a shaky photo or upscale a low-resolution image for printing. Overall, AI-based restoration not only saves time but often produces results that are better than manual efforts, as the AI can infer realistic textures and details (like a face in an old photo) by learning from vast image data.

A striking example of AI’s role in image restoration is Adobe Photoshop’s Neural Filters and Generative Fill (the latter introduced in 2023), which use AI to repair and enhance photos. With Generative Fill, users can prompt the AI to reconstruct missing parts of an image or remove blemishes, and the AI will seamlessly fill in content that matches the context. This has made it feasible for people to restore old, damaged photographs in a matter of seconds, a task that previously required hours of meticulous cloning and patching. One user demonstration showed an 80-year-old torn photo being digitally healed by the AI – cracks vanished and missing corners were recreated with plausible background detail. The advent of these tools means that anyone with a PC can now revive faded family photos or enhance low-quality images using AI. The convenience and quality are such that professionals have embraced AI restoration in their workflows, trusting it for tasks like noise reduction and super-resolution that match or surpass traditional methods.

9. Automated Tagging

AI enables automated tagging of images, meaning it can generate descriptive labels or keywords for photos without human intervention. This is incredibly useful for managing large image collections – for example, a social network can automatically tag content (identifying that a photo contains “two people, smiling, outdoors”) to improve search and feed algorithms. Photo organizing software uses AI tagging to let users sort pictures by subject (like “food” or “sunset”) instantly. In professional settings, news organizations auto-tag incoming images (with tags like “protest” or “wildfire”) to route them to the right channels. Automated tagging powered by AI not only saves enormous labor that manual tagging would require but also can be more consistent and detailed, as the AI can apply hundreds of tags capturing fine details. This technology underpins features we take for granted, such as being able to search your smartphone gallery for “dog” and get all dog pictures, or content warnings on social media images (AI tagging them as “graphic” or “spoiler”).
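One simple way a tagging pipeline might turn model outputs into keywords is to keep every label whose confidence clears a threshold. The sketch below assumes a model has already produced per-label confidence scores; the scores, the 0.5 threshold, and the function name are hypothetical.

```python
def tags_from_scores(scores, threshold=0.5, max_tags=5):
    """Keep labels scoring at or above the threshold, most confident first."""
    kept = [(label, p) for label, p in scores.items() if p >= threshold]
    kept.sort(key=lambda item: item[1], reverse=True)
    return [label for label, _ in kept[:max_tags]]


# Hypothetical per-label confidences for one uploaded photo.
scores = {"dog": 0.97, "outdoors": 0.81, "sunset": 0.12, "people": 0.55}
print(tags_from_scores(scores))  # ['dog', 'outdoors', 'people']
```

A lower threshold yields more tags per image but more wrong ones, so services typically tune it per label or per use case.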

The sheer scale of visual content online makes automated tagging indispensable. Consider Facebook: users upload over 300 million photos per day to the platform. The volume is so vast that relying on humans to label or moderate these uploads is infeasible – it would require an army of moderators and still fall short. Instead, Facebook and other companies deploy AI systems that automatically scan and tag images (for instance, flagging images containing nudity or violence, and adding descriptive alt-text for accessibility). As of 2020, Facebook’s AI could identify primary objects or scenes in 97% of images, providing tags like “image may contain: 2 people, smiling, sunglasses” within seconds of upload. This automated pipeline, driven by neural networks, allows content to be sorted and filtered at scale. In essence, without AI-based tagging, modern social media and photo services simply could not manage the flood of daily images, whereas with AI, each image can be analyzed and annotated in milliseconds.

10. Augmented Reality

AI is a core driver of Augmented Reality (AR) improvements, enabling digital content to be overlaid on the real world in a convincing and interactive way. AI-based image recognition allows AR systems to understand the environment – for example, detecting surfaces, objects, and people – so that virtual elements can be placed believably (a virtual cat sitting on a real table, or an AR game character running on the ground). Computer vision algorithms track a user’s surroundings and motions in real time, which is crucial for stabilizing AR visuals and making them respond to the scene (like an AR shopping app recognizing an empty wall to place a virtual couch there). Facial recognition and tracking (another AI task) power popular AR face filters on apps like Snapchat and Instagram, mapping virtual masks or effects onto faces that move and change expressions. As a result of these AI capabilities, AR experiences have become smoother, more realistic, and more widely accessible – used in everything from entertainment and education to navigation (e.g., AR directional arrows on live street views).

The popularity of AR owes a lot to AI’s image recognition behind the scenes. On Snapchat, for instance, AR lenses use AI to detect and track facial features and surroundings, allowing millions of users to apply fun or useful effects in real time. Snap Inc. reports that as of 2025, over 300 million Snapchat users engage with AR features every single day on average. These AR lenses can do things like recognize your hand and project a dancing cartoon on your palm, or identify your dog and give it virtual sunglasses – all enabled by computer vision models trained on those targets. Similarly, other platforms use AI to map environments for AR: Apple’s ARKit uses scene understanding AI to place objects, and Google’s Live View uses AI recognition of landmarks for AR navigation. The hundreds of millions of daily AR users highlight how AI-driven image recognition has made AR a mainstream feature, blending virtual content with the real world effortlessly and at scale.