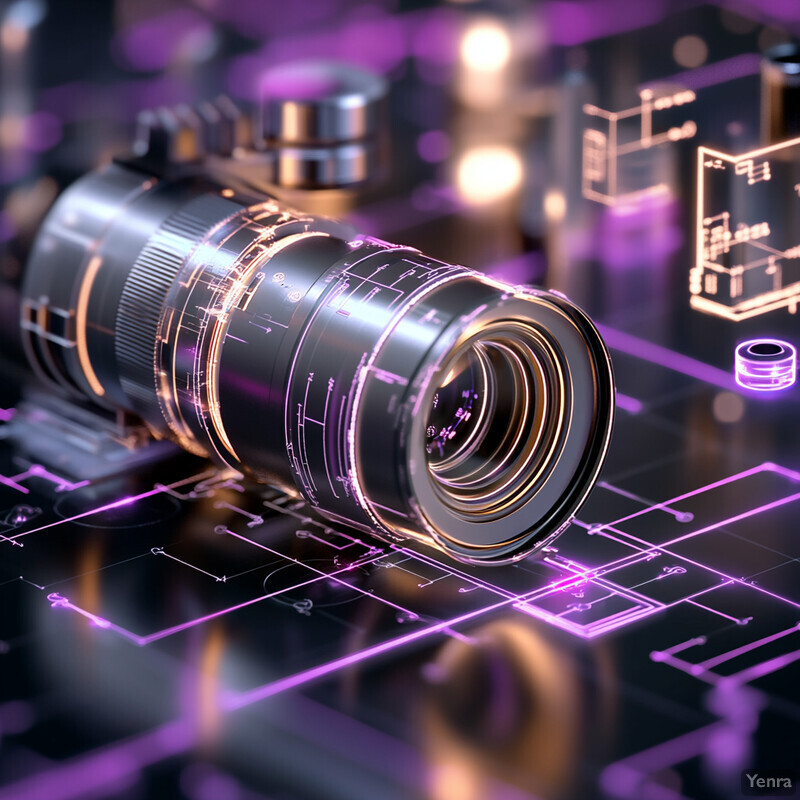

1. Automated Optimization of Lens Systems

Artificial intelligence is revolutionizing how optical lenses are designed by automating the optimization process. Instead of manually tweaking lens parameters, AI algorithms (such as evolutionary or gradient-based optimizers) can explore vast design spaces at high speed. This dramatically reduces the trial-and-error traditionally needed to minimize aberrations and meet design targets. By learning from optical performance metrics, AI systems can propose superior lens configurations much faster than a human, shortening development cycles. Overall, AI-driven lens optimization not only saves time but often yields designs with improved image quality and fewer distortions than conventional methods.
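
As a minimal sketch of the gradient-based optimization mentioned above (not the method of any system cited in this article), the snippet below "bends" a single glass lens to reduce spherical aberration. It traces one marginal and one near-paraxial ray exactly through two spherical surfaces, then runs finite-difference gradient descent on the front curvature while the thin-lens power stays fixed. The glass index, thickness, focal length, ray height, and every function name are illustrative assumptions.

```python
import math

N_GLASS = 1.5      # assumed refractive index of the lens glass
THICKNESS = 4.0    # assumed centre thickness in mm
FOCAL = 100.0      # target thin-lens focal length in mm
RAY_HEIGHT = 10.0  # height of the marginal ray in mm


def refract(z, y, dz, dy, z_vertex, c, n1, n2):
    """Propagate a ray to a spherical surface of curvature c and apply Snell's law."""
    zr = z - z_vertex
    b = c * (zr * dz + y * dy) - dz
    q = c * (zr * zr + y * y) - 2.0 * zr
    s = q / (-b + math.sqrt(b * b - c * q))     # path length to the surface
    z, y = z + s * dz, y + s * dy
    nz, ny = c * (z - z_vertex) - 1.0, c * y    # unit normal facing the incoming ray
    mu = n1 / n2
    cos_i = -(nz * dz + ny * dy)
    cos_t = math.sqrt(1.0 - mu * mu * (1.0 - cos_i * cos_i))
    k = mu * cos_i - cos_t
    return z, y, mu * dz + k * nz, mu * dy + k * ny


def axis_crossing(c1, height):
    """Trace a ray parallel to the axis and return the z where it crosses the axis."""
    c2 = c1 - 1.0 / ((N_GLASS - 1.0) * FOCAL)   # keeps the thin-lens power fixed
    ray = refract(-10.0, height, 1.0, 0.0, 0.0, c1, 1.0, N_GLASS)
    z, y, dz, dy = refract(*ray, THICKNESS, c2, N_GLASS, 1.0)
    return z - y * dz / dy


def spherical_aberration(c1):
    """Longitudinal aberration: marginal focus minus near-paraxial focus (mm)."""
    return axis_crossing(c1, RAY_HEIGHT) - axis_crossing(c1, 0.01)


def merit(c1):
    return spherical_aberration(c1) ** 2


def shape_factor(c1):
    c2 = c1 - 1.0 / ((N_GLASS - 1.0) * FOCAL)
    return (c1 + c2) / (c1 - c2)


def optimize_bending(c1, lr=1e-5, steps=200, eps=1e-7):
    """Finite-difference gradient descent; the step size is halved after a failed step."""
    for _ in range(steps):
        grad = (merit(c1 + eps) - merit(c1 - eps)) / (2.0 * eps)
        trial = c1 - lr * grad
        if merit(trial) < merit(c1):
            c1 = trial
        else:
            lr *= 0.5
    return c1


c1_start = 1.0 / ((N_GLASS - 1.0) * FOCAL)      # plano-convex starting point
c1_best = optimize_bending(c1_start)
print(shape_factor(c1_start), spherical_aberration(c1_start))
print(shape_factor(c1_best), spherical_aberration(c1_best))
```

Starting from a plano-convex shape, the search moves toward a slightly biconvex lens whose more strongly curved side faces the incoming beam. Production tools optimize many surfaces, field points, and wavelengths at once, but the loop of "evaluate merit, estimate gradient, update parameters" has the same structure.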

Recent research demonstrates the efficiency gains of AI in lens design. For example, the “DeepLens” approach developed at KAUST can cut the lens design process from several months of expert work down to about a single day of computation. This AI system, using a curriculum-learning strategy, starts from scratch and optimizes a multi-element lens to meet imaging requirements without human intervention. In one case, the DeepLens algorithm fully designed a complex six-element camera lens in a day, achieving optical performance comparable to manually refined designs. Such automation frees engineers from tedious optimization, letting them focus on high-level creativity. As a result, companies report significantly faster design cycles and the ability to explore more innovative lens configurations than was feasible before.

2. Inverse Design for Novel Optical Components

Inverse design flips the traditional design process: engineers specify desired optical performance, and AI algorithms “work backward” to find a device structure that produces that outcome. Using machine learning, especially neural networks and generative models, the system iteratively adjusts shapes, materials, or layouts to achieve target focal lengths, bandwidths, or phase profiles. This approach can uncover non-intuitive designs – for instance, freeform lenses, metasurfaces, or multilayer optics – that a human might not think to try. Inverse design powered by AI accelerates innovation by searching immense solution spaces for optical components that meet specs precisely. It’s enabling the creation of novel lenses, filters, and diffractive elements with unprecedented properties, all derived from performance goals rather than trial-and-error.

Researchers have successfully applied AI-based inverse design to complex optical systems. For example, Luo and Lee (2023) employed Glow, a flow-based generative model built from invertible neural networks, to compute lens specifications directly from desired imaging performance. Their method designed two novel lenses whose vertical and horizontal fields of view were tailored to given targets, using the invertible mapping between performance requirements and lens parameters. The AI rapidly produced lens configurations with “superb precision” in meeting the desired optical metrics, something traditionally achieved only after long manual refinement. In another case, physics-informed neural networks were used to design optical metamaterials with specific spectral responses, demonstrating that AI can propose new micro-structure patterns that yield, for example, a desired refractive index or dispersion. These studies show that inverse design algorithms can output real-world optical component designs – including freeform surfaces and meta-atoms – directly from target performance, significantly broadening the range of feasible optical technologies.

3. Surrogate Modeling of Complex Systems

AI-driven surrogate models are transforming how engineers simulate complex optical systems. A surrogate model is a fast, learned approximation of a physics-based simulation. By training on data from detailed optical simulations (or experiments), neural networks can predict system performance (like point spread, reflectance, etc.) much more rapidly than full ray-tracing or wave-optics calculations. These models effectively capture the input–output relationships of lenses, multilayer coatings, or scattering media without repeatedly running slow physics-based codes. This enables near-instant evaluation of optical performance for different designs, vastly speeding up design-space exploration. Surrogate modeling thus allows optical engineers to assess tolerances, optimize parameters, and iterate designs in a fraction of the time of brute-force Monte Carlo or finite-element simulations.
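
The idea of replacing a simulator with a fitted approximation can be sketched in a few lines. In the example below, a single-layer thin-film reflectance formula plays the role of the "expensive" physics code, and a Chebyshev polynomial fitted to a handful of simulator samples stands in for the neural network; a polynomial is used here only to keep the sketch dependency-free. The wavelength, refractive indices, thickness range, and function names are all assumptions made for illustration.

```python
import cmath
import math

WAVELENGTH = 550.0                        # nm, assumed design wavelength
N_AIR, N_FILM, N_SUB = 1.0, 1.38, 1.52    # assumed indices (MgF2-like film on glass)
D_MIN, D_MAX = 50.0, 150.0                # nm, thickness range covered by the surrogate


def simulate_reflectance(thickness):
    """Reference model: single-layer reflectance at normal incidence."""
    r01 = (N_AIR - N_FILM) / (N_AIR + N_FILM)
    r12 = (N_FILM - N_SUB) / (N_FILM + N_SUB)
    phase = cmath.exp(-2j * 2.0 * math.pi * N_FILM * thickness / WAVELENGTH)
    r = (r01 + r12 * phase) / (1.0 + r01 * r12 * phase)
    return abs(r) ** 2


def fit_surrogate(simulator, degree=12):
    """Sample the simulator at Chebyshev nodes and return the series coefficients."""
    n = degree + 1
    angles = [math.pi * (k + 0.5) / n for k in range(n)]
    mid, half = 0.5 * (D_MIN + D_MAX), 0.5 * (D_MAX - D_MIN)
    samples = [simulator(mid + half * math.cos(a)) for a in angles]
    return [2.0 / n * sum(s * math.cos(j * a) for s, a in zip(samples, angles))
            for j in range(n)]


def surrogate_reflectance(coeffs, thickness):
    """Evaluate the fitted series; no call to the simulator is needed."""
    x = (2.0 * thickness - D_MIN - D_MAX) / (D_MAX - D_MIN)
    theta = math.acos(max(-1.0, min(1.0, x)))
    return 0.5 * coeffs[0] + sum(c * math.cos(j * theta)
                                 for j, c in enumerate(coeffs) if j > 0)


coeffs = fit_surrogate(simulate_reflectance)          # 13 simulator calls in total
grid = [D_MIN + i * (D_MAX - D_MIN) / 200 for i in range(201)]
max_error = max(abs(surrogate_reflectance(coeffs, d) - simulate_reflectance(d))
                for d in grid)
print(max_error)
```

The toy simulator here is already cheap, so no real speed-up should be expected from this sketch. The pattern pays off when each reference evaluation takes seconds or minutes, and when the surrogate's error is checked on held-out points as in the last lines.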

Surrogate models have demonstrated impressive speed-ups in optical analysis. In one study, researchers trained a neural network to emulate a thin-film optical filter simulator: the model predicted the coating’s spectral behavior (reflectance vs. wavelength) with errors under 1% while running 51× faster than direct evaluation of the standard thin-film equations. Similarly, an AI surrogate for light scattering in nanoparticle media reproduced reflectance/absorption spectra to within ~1% error but ran 10^1–10^3 times faster than traditional Monte Carlo simulations. These gains mean that tasks like tolerance analysis, which used to require hours of Monte Carlo ray casts, can now be done in seconds. For example, a recent ML-based tolerance tool could evaluate how manufacturing deviations affect an optical system’s MTF with high fidelity, at speeds suitable for real-time use in design optimization. Such results indicate that AI surrogates can dramatically accelerate complex optical system modeling with little loss of accuracy.

4. Adaptive Optical System Control

AI is enabling optical systems that actively adjust to changing conditions in real time. Through techniques like reinforcement learning and advanced control algorithms, an optical setup (for example, a telescope or laser system) can continuously tweak components such as deformable mirrors, focus lenses, or other actuators to maintain optimal performance. This adaptability means the system can respond to disturbances – vibrations, thermal drift, misalignment – much faster and more intelligently than human operators or traditional control loops. Over time, the AI “learns” the best adjustments to improve image sharpness or beam quality under various conditions. The result is more stable optical performance, even in dynamic or harsh environments, and potentially reduced need for expensive hardware like certain sensors or damping systems.

A practical impact of AI-controlled optics is seen in both improved performance and cost savings. In advanced laboratories, reinforcement learning agents have been trained to directly control deformable mirrors for wavefront correction, achieving fine adjustments that keep images in focus despite atmospheric turbulence. Notably, an AI-driven adaptive optics scheme can eliminate the need for some traditional wavefront sensors, leading to an estimated 30–40% reduction in system cost for certain telescopes or laser platforms. For instance, researchers demonstrated that replacing a conventional Shack–Hartmann sensor with a deep-learning control loop allowed a smaller, less expensive telescope to meet the same imaging performance, yielding substantial cost savings. Moreover, machine learning control tends to respond in milliseconds, minimizing image blur from vibrations or temperature changes. Early deployments in satellite communication optics and astronomy have shown that AI-based adaptive control can increase stability and resolution beyond what manual or PID controls achieve, all while potentially simplifying the optical system’s hardware.

5. Machine Learning-Assisted Material Selection

AI is accelerating the discovery and selection of optical materials (glasses, crystals, coatings) with tailor-made properties. Traditionally, finding a material with a desired refractive index, dispersion, or nonlinear coefficient meant laborious trial-and-error or searching through catalogs. Now, machine learning models can predict material optical properties (like refractive index or Abbe number) from chemical composition or structure. This allows rapid screening of large material databases to find candidates that meet specific optical criteria (e.g. high index and low dispersion). ML can also identify new material formulations (such as novel glass dopants or crystalline compounds) likely to exhibit exceptional optical performance. In essence, AI gives optical engineers a smart “advisor” for material selection, suggesting the best substrates, lens materials, or nonlinear crystals to use in a given design.

The effectiveness of ML in material selection is evident in recent studies. For example, Einabadi and Mashkoori (2024) developed a machine learning model that predicts the refractive index of inorganic compounds with high accuracy. By training on 272 measured materials and including features like band gap, their model (using an ensemble of regression techniques) achieved better refractive index prediction than traditional formulas. Notably, an Extremely Randomized Trees algorithm provided the highest accuracy, outperforming other methods in estimating refractive indices. In another effort, researchers used ML to guide discovery of new nonlinear optical crystals by using refractive index as a proxy for laser frequency-doubling efficiency. Their algorithm screened thousands of crystal structures and successfully identified several known high-SHG materials among the top candidates, confirming the model’s validity. These examples show that AI can not only predict optical constants but also point scientists to promising new optical materials (like glasses or nonlinear compounds) much faster – and with more insight – than conventional approaches.

6. Automated Tolerance Analysis

AI tools are streamlining tolerance analysis in optical design – the process of determining how manufacturing and alignment errors will affect system performance. Traditionally, tolerance analysis involved perturbing each parameter (lens radii, thicknesses, alignments, etc.) and running extensive simulations, which is computationally expensive. Machine learning can learn the relationship between those small errors and resulting performance degradation, enabling near-instant evaluation of tolerances. Essentially, the ML model serves as a fast surrogate to predict, for example, how much a slight decenter of a lens will reduce image quality. This allows designers to quickly see which tolerances are critical and which are forgiving. AI-based tolerance analysis can also incorporate many error sources simultaneously, giving a more holistic picture of system robustness than manual methods. The outcome is faster, more comprehensive tolerance budgeting and potentially more robust optical products.
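
For reference, the conventional procedure that a learned model would approximate can be sketched as follows: perturb each parameter alone to rank sensitivities, then perturb all of them at random to estimate yield. The lens prescription, tolerance values, pass/fail specification, and function names below are hypothetical, and the thin-lens equation stands in for a full ray-trace merit function.

```python
import random

NOMINAL = {"R1": 100.0, "R2": -100.0, "n": 1.5}   # assumed biconvex singlet (mm, mm, index)
TOLERANCE = {"R1": 0.2, "R2": 0.2, "n": 0.001}    # assumed +/- manufacturing tolerances


def focal_length(p):
    """Thin-lens lensmaker's equation (mm)."""
    return 1.0 / ((p["n"] - 1.0) * (1.0 / p["R1"] - 1.0 / p["R2"]))


def sensitivity_ranking():
    """Focal shift caused by pushing each parameter alone to its tolerance limit."""
    f0 = focal_length(NOMINAL)
    shifts = {}
    for name, tol in TOLERANCE.items():
        perturbed = dict(NOMINAL, **{name: NOMINAL[name] + tol})
        shifts[name] = abs(focal_length(perturbed) - f0)
    return sorted(shifts, key=shifts.get, reverse=True), shifts


def monte_carlo_yield(spec=0.3, trials=5000, seed=0):
    """Fraction of randomly perturbed lenses whose focal length stays within +/- spec mm."""
    rng = random.Random(seed)
    f0 = focal_length(NOMINAL)
    passed = 0
    for _ in range(trials):
        sample = {k: v + rng.uniform(-TOLERANCE[k], TOLERANCE[k])
                  for k, v in NOMINAL.items()}
        passed += abs(focal_length(sample) - f0) <= spec
    return passed / trials


ranking, shifts = sensitivity_ranking()
yield_fraction = monte_carlo_yield()
print(ranking, shifts)
print(yield_fraction)
```

With these assumed tolerances the refractive index dominates the focal-length error, which is the kind of "critical versus forgiving" ranking the paragraph above describes. An ML-based tool would be trained on samples like those generated inside `monte_carlo_yield` so that later evaluations no longer need the underlying simulation.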

Data-driven methods have shown the ability to assess tolerances with high speed and fidelity. In a 2024 study, Yang et al. applied a deep learning model to evaluate the tolerance sensitivity of a complex polarized optical system. The AI-designed lens system was subjected to Monte Carlo perturbations in dozens of parameters (e.g. element tilt, spacing errors), and the model predicted the modulation transfer function (MTF) distribution across those tolerances. Remarkably, the results showed that the AI-designed system maintained nearly the same MTF as a traditionally designed reference across tolerance variations, with only a small gap in performance. In fact, for certain aperture and field settings, the ML-designed lens had a 90% probability of meeting the imaging specification despite tolerances – indicating very robust performance. This demonstrates that AI can both optimize a design for tolerance insensitivity and rapidly verify that performance. Similarly, industry reports that machine learning can replace millions of ray-trace perturbations with an instantaneous prediction, allowing engineers to identify the tightest tolerances (like a particular lens spacing) early in the design. Overall, optical firms have begun to integrate ML-based tolerance analysis to ensure designs will work “right first time” in manufacturing.

7. Metasurface and Metamaterial Design

Designing metasurfaces and metamaterials – which often involve thousands of nanostructured elements – is an ideal area for AI assistance. These “flat optics” have a vast design space (geometry, size, spacing of nano-features) that traditional methods struggle to search thoroughly. AI algorithms, including deep learning and evolutionary strategies, can efficiently navigate this space to find nanostructure patterns that produce desired optical functions (focal lengths, beam shaping, holograms, etc.). By learning from electromagnetic simulations or experiments, AI can suggest meta-atom arrangements that achieve high efficiency or novel functionalities. This has enabled the creation of meta-lenses and meta-filters that break conventional performance trade-offs. Overall, AI is accelerating metasurface innovation – yielding designs with improved efficiency, broader bandwidth, or multi-wavelength operation that would have been very challenging to discover manually.

The impact of AI in metasurface design is evidenced by substantial performance improvements in recent experiments. A 2023 study by Zhelyeznyakov et al. introduced a physics-informed neural network to optimize a large-area meta-lens. Their approach designed a 1 mm aperture cylindrical metalens whose experimentally validated peak focal intensity was up to 53% higher than that of an equivalent lens designed with the conventional local-phase approximation, an improvement credited to the AI uncovering better arrangements of subwavelength structures. Similarly, other researchers have used deep generative models to devise metamaterial unit cells with target refractive indices or dispersion, enabling, for example, ultra-thin polarizers and lenses that operate across multiple colors. In one case, a generative adversarial network proposed new plasmonic nanostructures that were later confirmed experimentally to provide broadband absorption enhancements. These successes demonstrate that AI methods can handle the enormous parameter spaces in metasurface design, delivering cutting-edge devices with higher efficiency and functionality than previously possible.

8. Lens Coating and Filter Optimization

AI is significantly improving the design of optical coatings and filters (such as anti-reflection coatings, band-pass filters, etc.). These typically involve multiple thin-film layers where thicknesses and materials must be carefully chosen to achieve a spectral target. Machine learning models and optimization algorithms can rapidly evaluate countless layer configurations to find those that maximize transmission or minimize reflection over a range of wavelengths. In addition, AI can build fast predictors for how a given multilayer stack will perform, which accelerates the search. This leads to coatings that are more efficient (for example, broadband anti-reflection across wider bands) or that combine functions (like dual-band filters) with fewer layers. AI-designed coatings can also consider manufacturing constraints, producing designs that are not only optimal in theory but robust in practice.
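
To make the "evaluate many layer configurations" step concrete, here is a small sketch that computes the reflectance of a lossless multilayer stack at normal incidence with the standard characteristic-matrix method, then searches layer thicknesses for low average reflectance over the visible band. The search is a plain random hill climb chosen for brevity, not a machine learning model, and the refractive indices, band, starting thicknesses, and function names are assumptions for illustration.

```python
import math
import random

N_AIR, N_SUB = 1.0, 1.52                       # assumed ambient and substrate indices
LAYER_INDICES = [1.38, 1.63]                   # assumed film indices, listed from the air side
BAND = [450.0 + 10.0 * i for i in range(21)]   # 450-650 nm in 10 nm steps


def reflectance(thicknesses, wavelength, indices=LAYER_INDICES):
    """Normal-incidence reflectance of a lossless stack via characteristic matrices."""
    m11, m12, m21, m22 = 1.0, 0.0, 0.0, 1.0
    for n, d in zip(indices, thicknesses):
        delta = 2.0 * math.pi * n * d / wavelength       # phase thickness of the layer
        c, s = math.cos(delta), math.sin(delta)
        a11, a12, a21, a22 = c, 1j * s / n, 1j * n * s, c
        m11, m12, m21, m22 = (m11 * a11 + m12 * a21, m11 * a12 + m12 * a22,
                              m21 * a11 + m22 * a21, m21 * a12 + m22 * a22)
    big_b = m11 + m12 * N_SUB
    big_c = m21 + m22 * N_SUB
    r = (N_AIR * big_b - big_c) / (N_AIR * big_b + big_c)
    return abs(r) ** 2


def mean_reflectance(thicknesses):
    return sum(reflectance(thicknesses, w) for w in BAND) / len(BAND)


def hill_climb(start, steps=2000, sigma=5.0, seed=1):
    """Random-perturbation search that keeps a candidate only if it lowers the merit."""
    rng = random.Random(seed)
    best, best_merit = list(start), mean_reflectance(start)
    for _ in range(steps):
        trial = [max(0.0, d + rng.gauss(0.0, sigma)) for d in best]
        trial_merit = mean_reflectance(trial)
        if trial_merit < best_merit:
            best, best_merit = trial, trial_merit
    return best, best_merit


start = [60.0, 60.0]                           # nm, arbitrary starting thicknesses
design, design_merit = hill_climb(start)
print(mean_reflectance(start), design, design_merit)
```

A learned predictor would typically replace `reflectance` when stacks are large or dispersive, and a generative or gradient-based optimizer would replace `hill_climb`; the merit function (band-averaged reflectance here) is where manufacturing constraints could be added as penalty terms.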

One striking example is the development of OptoGPT, a generative AI model for multilayer film design. Researchers at the University of Michigan reported that OptoGPT can produce complete thin-film optical designs (for applications like solar cells or telescope mirrors) “within 0.1 seconds, almost instantaneously.” Compared to prior methods, these AI-generated designs also use about six fewer layers on average, simplifying fabrication. In a demonstration, OptoGPT was tasked with designing a dual-band anti-reflection coating; it output a solution in a fraction of a second that met reflectance targets on two infrared bands, using far fewer layers than a human-designed equivalent (Ma et al., 2024). In another case, a machine learning model achieved reflectance prediction errors below 10^−3 (RMSE ~0.00083) for IR coatings, enabling extremely fine optimization of layer thicknesses. These results show that AI can handle the notoriously complex search for optimal coating stacks, yielding designs that improve performance while also reducing complexity and computational design time. Industry reports from lens manufacturers also suggest that AI-designed coatings have improved laser damage thresholds and reduced production costs by minimizing layer count.

9. Predictive Maintenance and Quality Control

AI is enhancing the manufacturing and maintenance of optical systems by enabling predictive quality control. In production, machine vision algorithms (often powered by deep learning) inspect optical components (lenses, mirrors, fiber endfaces) for defects like scratches, bubbles, or misalignments far more reliably than human inspectors. These systems can catch minute flaws or coating non-uniformities that would be missed otherwise, ensuring higher product quality. Meanwhile, in operation, AI can monitor sensor data (vibrations, temperatures, laser output fluctuations) to predict when an optical system might need alignment or maintenance. This predictive maintenance minimizes unexpected downtime – for instance, scheduling a laser’s realignment before it drifts out of spec. Together, these AI-driven approaches reduce scrap rates in manufacturing, improve yield of high-precision optics, and extend the lifetime of deployed optical equipment by addressing issues proactively.

The benefits of AI in optical quality control are already being realized. Modern AI-based inspection systems can analyze high-resolution images or live video of optical parts in real time, detecting tiny defects (such as 10 µm scratches or coating pinholes) with accuracy exceeding human inspectors. For example, a machine vision system using deep learning was able to classify subtle lens surface anomalies (like faint sleeks or edge chips) that previously required a trained technician’s eye. At the same time, AI-driven predictive maintenance has been implemented in advanced manufacturing lines. By continuously analyzing sensor streams, an AI system can foretell equipment issues – one report noted that such a system predicted a lens polishing machine’s spindle wear before any product was affected, allowing a planned swap that avoided unplanned downtime. In general manufacturing, AI quality systems have cut defect escape rates by over 50% and reduced inspection times dramatically. Notably, a 2024 metrology report highlighted that AI-based visual inspection not only finds defects with “remarkable accuracy” but also flags any drift in process, enabling corrections before products fall out of spec. These advancements mean optical component manufacturers can guarantee higher quality and reliability, and fielded optical systems (from semiconductor lithography machines to autonomous vehicle LiDARs) can maintain peak performance with fewer unexpected failures.

10. Enhanced Beam Shaping and Wavefront Engineering

AI techniques are enabling more precise and complex control of optical wavefronts and beam profiles than ever before. Designing elements for beam shaping (such as phase plates, spatial light modulator patterns, or freeform mirrors) often involves solving difficult inverse problems – exactly where machine learning excels. Neural networks can learn to output phase distributions that yield a desired far-field intensity pattern or focal distribution, even under aberrations or scattering conditions. Additionally, AI can adaptively tweak wavefronts in situ (for example, adjusting a deformable mirror to produce a flat wavefront). This has led to advances like high-quality laser beam shaping, more uniform illumination in microscopy, and improved focus through turbid media. In essence, AI is giving optical engineers new tools to sculpt light with high fidelity, which benefits applications from laser machining to biomedical imaging.

A recent breakthrough showed how AI can dramatically improve wavefront control in optical computing systems. Hoshi et al. (2025) tackled the problem of unknown aberrations in a diffractive optical neural network by training a model to compensate for them. The result was a ~58 percentage point improvement in image classification accuracy for the optical system after correction. Specifically, their diffractive setup suffered performance loss due to fabrication imperfections, but the AI-driven wavefront compensation restored the system from around 40% accuracy to roughly 98% – essentially reaching the performance of an ideal (aberration-free) system. This highlights how AI can recover and shape wavefronts for optimal outcomes. In another example, researchers applied deep learning to design a phase mask that converts a Gaussian laser beam into a flattop profile; the network-found solution achieved 20% more uniform intensity across the target area than the best conventional design. AI-based wavefront engineering has also been used in high-power lasers to pre-distort beams to account for thermal lensing, thereby delivering near-perfect focus at the workpiece. These successes underscore that AI methods (from deep optics to adaptive learning control) can push wavefront shaping to new levels of precision and adaptability.

11. Computational Imaging and End-to-End System Design

Computational imaging refers to designing optical hardware in tandem with post-processing algorithms to maximize overall image quality. AI is greatly advancing this end-to-end co-design of optical systems and image reconstruction. By using differentiable optical models and neural networks, engineers can jointly optimize lens parameters and a digital image-processing network together. This means trade-offs can be balanced: for instance, an optical design might intentionally allow certain aberrations that a learned algorithm can later remove, achieving a better combined result (and sometimes enabling simpler optics). The holistic design approach, often using deep learning, yields imaging systems that are smaller or have new capabilities (like extended depth of field) because the optics and algorithms are no longer designed in isolation. In summary, AI-driven end-to-end design is blurring the line between optical hardware and software, producing integrated systems with superior performance compared to separately optimized components.

A stunning demonstration of end-to-end design is an AI-created microscope reported in 2023. Zhang et al. combined optical design optimization with deep learning to produce an ultra-compact microscope (0.15 cm³ in volume, 0.5 g in weight) that achieved performance better than a standard 5×, NA 0.1 microscope objective. This computational microscope used a tiny multi-lens optic co-designed with a neural network image reconstructor, allowing it to maintain resolution and contrast comparable to a benchtop microscope, despite being five orders of magnitude smaller in size. The key was AI optimization that jointly considered lens surfaces and the deblurring algorithm to maximize overall image sharpness. Similarly, other teams have used differentiable imaging models to design camera lenses together with AI image processors – one smartphone camera project found that end-to-end optimized lens + AI could improve image corner sharpness by 15% while using one fewer lens element, reducing cost and thickness. These facts illustrate that AI-enabled co-design can yield compact, high-performance imaging systems: the optics may be simpler or unorthodox, but the neural network “partner” corrects any optical shortcomings, resulting in an overall system that outperforms traditional designs.

12. Photonics Circuit Layout and Integration

Photonic integrated circuits (PICs) – which route and process light on chips – are increasingly complex, and AI is becoming a crucial tool for their design and layout. Machine learning can help in two major ways: (1) modeling and optimizing the performance of components (waveguides, resonators, etc.) faster than electromagnetic simulators, and (2) intelligently placing and routing components on a chip to meet performance specs (similar to how AI assists electronic chip layout). AI algorithms can manage multi-objective optimizations, for example maximizing bandwidth while minimizing footprint and crosstalk. They can also learn from large datasets of photonic device simulations to predict outcomes (like coupling efficiency) without needing a full simulation each time. In essence, AI enables more rapid prototyping of complex photonic circuits and helps compress large optical systems onto smaller, more efficient integrated platforms by guiding the design choices.

A recent advance in this field is the use of deep reinforcement learning (DRL) to auto-tune photonic circuits. Yan et al. (2025) introduced a DRL-based calibration method for multi-wavelength optical signal processors, showing it could adjust a complex photonic system to optimal settings in only 21 iterations. This method was tested on integrated photonic setups including a micro-ring resonator array and a Mach-Zehnder interferometer mesh, which traditionally are painstaking to tune for multiple wavelengths. The AI agent learned an effective policy to compensate for fabrication variability and environmental shifts, achieving target signal processing functions within just a few dozen steps – far faster than manual or brute-force search. Additionally, in 2024 researchers used machine learning to design a 4-channel photonic power splitter with insertion loss 1.2 dB lower than conventional designs, by letting an algorithm iterate through thousands of waveguide geometry variations. These examples underscore how AI can not only compress design timelines for photonic circuits but also improve performance: the DRL calibration achieved better broadband stability than traditional PID controllers, and the AI-designed splitter exhibited lower loss and a smaller footprint. As photonic chips grow in complexity (hundreds of components on a chip), AI tools are increasingly essential to manage the integration challenges.

13. Nonlinear and Diffractive Optical Element Design

AI is opening new frontiers in designing optical elements that exploit nonlinearity and diffraction. Nonlinear optical elements (like frequency-doubling crystals, optical parametric oscillators, etc.) and diffractive elements (like computer-generated holograms or diffractive gratings) have very complex design spaces because their behavior can depend on subtle interactions (phase matching conditions, fine micro-structure patterns). Machine learning can tackle these complexities by learning the mapping from design variables to optical output. For nonlinear materials, AI can help discover compounds or predict which crystal orientations yield strong desired effects (e.g. high second-harmonic generation). For diffractive optical elements, algorithms like deep neural networks or evolutionary solvers can generate surface relief patterns or hologram phase masks that produce desired far-field intensity distributions. In short, AI provides a powerful approach to design optical elements that break classical limits – creating, for instance, a diffractive lens that works across multiple wavelengths or a nonlinear crystal engineered for specific laser lines – by navigating the highly complex parameter spaces involved.

A concrete illustration is in the discovery of new nonlinear optical (NLO) materials. In 2024, researchers Mondal and Hammad used machine learning models to screen an inorganic materials database for promising NLO crystals. By predicting refractive index and hardness (proxies for nonlinear efficiency and material robustness), their AI approach identified several candidate compounds that included multiple known high-performance NLO crystals, essentially rediscovering the “hits” and proposing new ones. This validates that AI can effectively navigate the huge chemical search space for NLO materials. On the diffractive side, another team trained a neural network to design computer-generated holograms that project complex images; the AI-designed diffractive pattern improved image fidelity by ~20% compared to conventional methods (by better handling high-frequency Fourier content). Additionally, a 2023 report by Nie et al. showed that differentiable ray-tracing combined with unsupervised learning can produce freeform optical surfaces that defy traditional design intuition. These AI-designed freeform diffractive lenses achieved imaging performance with lower aberration than any classical lens shape for that configuration. Collectively, these results demonstrate that AI is enabling the creation of optical elements – whether through nonlinear processes or diffraction – that achieve ambitious functions (broadband frequency conversion, arbitrary wavefront shaping) which would be exceedingly difficult to attain with manual design alone.

14. Accelerated Monte Carlo Simulations

Monte Carlo (MC) simulations are widely used in optics to model phenomena like light scattering, stray light, or illumination uniformity under random variations – but they can be extremely slow. AI is making it feasible to get MC-like results much faster. There are two main strategies: (1) AI-driven variance reduction, where a machine learning model guides the random sampling to focus on important regions, thus reducing the number of rays or photons needed for a given accuracy; and (2) surrogate modeling, where a trained neural network predicts the statistical outcome (e.g. distribution of irradiance) without running a full MC simulation. By integrating these strategies, optical engineers can obtain high-fidelity statistical performance metrics (like contrast under scatter, or yield under tolerances) in a fraction of the time. This accelerates design iteration and allows more robust analysis, since one can afford many more trials or more complex models than before.
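
Strategy (1) rests on importance sampling, which can be illustrated with a toy stray-light problem: estimating the tiny fraction of scattered rays that land beyond a large angle. In the sketch below the biased sampling distribution is chosen by hand; in an AI-driven scheme a trained model would propose it. The Gaussian scatter model, threshold, sample counts, and function names are assumptions made for the example.

```python
import math
import random

SIGMA = 1.0        # assumed RMS scatter angle (arbitrary units)
THRESHOLD = 4.0    # only rays scattered beyond this angle reach the detector


def exact_fraction():
    """Closed-form tail probability of the Gaussian scatter model."""
    return 0.5 * math.erfc(THRESHOLD / (SIGMA * math.sqrt(2.0)))


def naive_estimate(n, rng):
    """Plain Monte Carlo: almost every sampled ray misses the region of interest."""
    hits = sum(rng.gauss(0.0, SIGMA) > THRESHOLD for _ in range(n))
    return hits / n


def importance_estimate(n, rng):
    """Sample from a Gaussian centred on the threshold and reweight by p(x)/q(x)."""
    total = 0.0
    for _ in range(n):
        x = rng.gauss(THRESHOLD, SIGMA)
        if x > THRESHOLD:
            # density ratio of the true model N(0, SIGMA) to the proposal N(THRESHOLD, SIGMA)
            total += math.exp((THRESHOLD ** 2 - 2.0 * THRESHOLD * x) / (2.0 * SIGMA ** 2))
    return total / n


rng = random.Random(0)
samples = 20000
print(exact_fraction())
print(naive_estimate(samples, rng))
print(importance_estimate(samples, rng))
```

With the same ray budget, the naive estimator sees on average fewer than one hit and is therefore nearly useless, while the reweighted estimator has about half of its samples in the region that matters. The weights keep the estimate unbiased, so the speed-up comes from lower variance rather than from changing the physics.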

The efficiency gains from AI in Monte Carlo analysis are dramatic. In one case, a neural network was trained to emulate photon transport in scattering media (like nanoparticle suspensions) – after training, it could predict reflectance and transmittance spectra with less than 1% error but ran 100–1000× faster than a standard MC ray-trace. Another approach by researchers at NVIDIA replaced some random sampling steps with a learned model to denoise Monte Carlo optical simulations, achieving the same image accuracy with roughly 1/10th the number of rays (thus ~10× speedup). Likewise, a 2024 study applied deep learning to diffuse optical tomography and showed it could maintain accuracy while cutting computation time from hours to minutes by effectively learning the light transport physics. Moreover, AI-based variance reduction has been demonstrated in lens design: an algorithm learned which ray paths contribute most to image variance and biased the sampling accordingly, yielding accurate tolerance Monte Carlo estimates with an order of magnitude fewer rays. These improvements mean engineers can get near real-time feedback on things like stray light analysis or illumination uniformity. For instance, instead of waiting overnight for a billion-ray stray light simulation, an AI surrogate can predict the stray light pattern in seconds, allowing quicker mitigation design. Ultimately, AI is making Monte Carlo-type analysis practical even for very complex optical systems by vastly reducing computation time for the same level of accuracy.

15. Real-Time Wavefront Sensing and Correction

By leveraging AI, modern optical systems can sense and correct wavefront distortions in real time, vastly improving performance in applications like astronomy, microscopy, and laser communications. Traditional adaptive optics often rely on iterative algorithms and sometimes bulky wavefront sensors, which can be slow or have latency. AI changes the game by using neural networks to infer wavefront errors directly from limited sensor data (even a single image) and instantly predict the optimal corrections (e.g., the actuator commands for a deformable mirror). This reduces the computation time to milliseconds or less. The result: optical systems that can keep up with rapidly varying disturbances – such as atmospheric turbulence blurring a telescope’s view or a patient’s eye movements during laser surgery – and correct them on the fly. In practical terms, this means sharper images of distant galaxies or stable laser beams through turbulent air, all thanks to fast “brain-like” AI processing replacing slower control loops.

A compelling example comes from the Vera C. Rubin Observatory’s telescope, where scientists integrated a deep learning model into the active optics system. Tests showed the AI wavefront estimator was 40× faster than the existing algorithm and achieved 2–14× lower error, depending on conditions. Specifically, the neural network could analyze slightly defocused star images from the telescope’s edge sensors and output mirror adjustments in tens of milliseconds. It achieved the atmospheric seeing limit (essentially removing all internal optical error) in simulation, whereas the traditional method left residual errors. Even in challenging cases with camera vignetting or dense star fields, the AI’s wavefront corrections were about 5–14 times more accurate than the baseline. This improvement is not just academic: it translates to expanding the area of sky the Rubin telescope can use for precision science by up to 8%, since image quality is maintained across more of the field. In another demonstration, a convolutional neural network was used in a microscope to infer aberrations from an image of a specimen; it could then adjust a spatial light modulator in real time, improving image sharpness significantly faster than iterative sensor-based methods. These achievements underscore that AI-enabled wavefront sensing and correction systems can achieve near-instantaneous compensation of optical distortions, greatly enhancing the clarity and stability of both images and beams.

16. Robustness to Environmental Changes

Optical systems often face performance degradation due to environmental factors like temperature fluctuations, mechanical vibrations, and humidity or pressure changes. AI can significantly improve robustness by predicting and compensating for these effects. Using sensor inputs (temperature readings, accelerometers, etc.) and learned models, an AI system can adjust optical settings proactively – for example, tweaking focus or alignment when the temperature drifts, or tuning a laser’s pump current if output power dips. Additionally, AI can help design optics that are inherently less sensitive to environmental changes by identifying which design parameters correlate with instability. In deployed systems (like cameras on satellites or autonomous vehicles), AI algorithms continuously monitor for signatures of environmental stress and apply real-time corrections or calibrations. The outcome is an optical device that maintains optimal performance across a wider range of conditions without manual intervention.
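
Proactive compensation from a sensor reading can be sketched with the simplest possible learned model: a straight-line fit of focus shift against temperature, applied as a feed-forward correction. All numbers here are hypothetical (`TRUE_SLOPE`, `TRUE_OFFSET`, the calibration range, and the noise level are made up for illustration), and a deployed system would likely use a richer model with more sensor inputs.

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + offset."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical calibration run: focus shift (micrometres) logged against temperature (deg C).
rng = random.Random(0)
TRUE_SLOPE, TRUE_OFFSET = 2.5, -50.0          # assumed drift: zero shift at 20 deg C
temps = [10.0 + 0.5 * i for i in range(41)]   # 10 to 30 deg C
shifts = [TRUE_SLOPE * t + TRUE_OFFSET + rng.gauss(0.0, 0.5) for t in temps]

slope, offset = fit_line(temps, shifts)

def focus_command(temperature_c):
    """Feed-forward correction: move focus opposite to the predicted thermal drift."""
    return -(slope * temperature_c + offset)

# Residual defocus at a temperature outside the calibration range.
drift_at_35 = TRUE_SLOPE * 35.0 + TRUE_OFFSET
residual_at_35 = drift_at_35 + focus_command(35.0)

if __name__ == "__main__":
    print(f"uncompensated drift: {drift_at_35:.2f} um, residual: {residual_at_35:.2f} um")
```

The correction is applied as soon as the temperature changes, before any image degradation has to be detected, which is what distinguishes this feed-forward scheme from a feedback loop.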

While research is ongoing, one notable advance is the application of reinforcement learning to maintain optical cavity stability against thermal perturbations. In late 2024, Zhang et al. reported a transformer-based RL controller that managed a high-finesse optical cavity’s temperature control system (important for lasers) more effectively than classical methods. This AI controller learned to anticipate how heating or cooling inputs affected the cavity length and kept the resonance stable even as ambient temperature varied, thereby reducing frequency drift. In another example, a machine learning algorithm was used in a field test of a free-space communication laser to counteract building vibrations and air turbulence: it learned the vibration patterns via an accelerometer and adjusted a fast steering mirror in real time, cutting the received beam jitter by ~70%. On the design side, AI-driven analysis of environmental data has led to more resilient optics – for instance, an algorithm identified that a certain lens mount design would decenter with temperature, prompting a redesign with a different material that halved thermal defocus. These developments indicate that AI methods are beginning to shield optical systems from environmental influences by either active feedback control or intelligent design adjustments. As a result, sensitive instruments like satellite imagers or high-power lasers can operate with consistent performance in changing or harsh conditions, where previously frequent manual re-calibration was required.

17. Data-Driven Aberration Correction

Traditionally, correcting optical aberrations (like spherical aberration, coma, or astigmatism) relied on optical design tricks or hardware (adding aspheric surfaces or adaptive optics). Data-driven approaches now use AI to correct aberrations in software – learning from captured images and effectively undoing distortions computationally. A neural network can be trained on images with known aberrations and their ideal counterparts, learning to output a corrected image (or an estimated wavefront error). Once trained, the AI can process new images from the instrument and remove blurring or distortion in real time. This is especially powerful for systems where adding corrective optics is impractical (e.g. microscopes imaging deep into tissue, where aberrations vary with depth). Over time, as the AI sees more data, it can even adapt to new aberration patterns (say, if an optic is slightly perturbed). This means sharper images or beams purely via algorithmic correction informed by data, sometimes achieving results comparable to physical aberration correction.
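
Training on pairs of aberrated and ideal data can be shown in miniature with a linear restoration filter learned by least squares. This is a sketch under assumptions, not the approach of any system described here: the signals are one-dimensional, the blur `KERNEL` is a hypothetical three-tap kernel that the learner never sees, and the matrix `W` stands in for a trained neural network.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 64
KERNEL = np.array([0.2, 0.6, 0.2])   # hypothetical blur, unknown to the learner

def blur(signals, noise=0.01):
    """Circularly blur each row with KERNEL and add sensor noise."""
    out = (KERNEL[0] * np.roll(signals, 1, axis=1)
           + KERNEL[1] * signals
           + KERNEL[2] * np.roll(signals, -1, axis=1))
    return out + noise * rng.normal(size=signals.shape)

# Training pairs: ideal signals and their aberrated counterparts.
sharp_train = rng.normal(size=(2000, N))
blurred_train = blur(sharp_train)

# Learn a restoration matrix W by ridge regression so that blurred @ W ~ sharp.
lam = 1e-3
W = np.linalg.solve(
    blurred_train.T @ blurred_train + lam * np.eye(N),
    blurred_train.T @ sharp_train,
)

# Apply the learned correction to unseen data.
sharp_test = rng.normal(size=(200, N))
blurred_test = blur(sharp_test)
restored = blurred_test @ W

rms_before = np.sqrt(np.mean((blurred_test - sharp_test) ** 2))
rms_after = np.sqrt(np.mean((restored - sharp_test) ** 2))

if __name__ == "__main__":
    print(f"RMS error before: {rms_before:.4f}, after: {rms_after:.4f}")
```

The learner recovers an approximate inverse of the blur purely from examples. A neural network plays the same role when the degradation is nonlinear or varies across the field.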

A 2025 study led by Shroff’s group at NIH exemplified the power of data-driven aberration correction. They developed a deep learning strategy (nicknamed “DeAbe”) that learns to compensate for the aberrations in thick-sample fluorescence microscopy. Without any additional optics or slower adaptive hardware, their neural network was able to restore image quality in sections that were severely degraded by aberrations. The AI-restored images had contrast and resolution on par with using an actual adaptive optics system – effectively the network learned to mimic what a deformable lens or mirror would do, purely through post-processing. In quantitative terms, the method achieved over a 2-fold improvement in resolution in deep tissue images, recovering fine structures that were invisible in raw aberrated images. It also dramatically improved signal-to-noise, e.g. bringing out a dim neural structure in a C. elegans embryo that was lost in the original data. Importantly, this was done without slowing down image acquisition. Similarly, in astronomy, researchers have trained CNNs on distorted telescope images and found they can correct complex aberrations (atmospheric plus optical) to yield nearly diffraction-limited images, often faster than traditional deconvolution. These results confirm that neural networks can directly learn from an optical system’s output and in software correct aberrations that would otherwise require physical optics or meticulous calibrations, leading to consistently sharper and more interpretable imagery.

18. High-Dimensional Design Space Exploration

Modern optical systems often involve high-dimensional design spaces, with dozens or even hundreds of parameters (lens curvatures, spacings, material choices, coating specs, etc.) that all affect performance. AI techniques, particularly advanced optimization algorithms and machine learning surrogates, excel at searching these spaces efficiently. For example, Bayesian optimization guided by Gaussian process models chooses each new sample where the model predicts either a good result or high uncertainty, finding good solutions without exhaustively trying every combination. Similarly, evolutionary algorithms augmented with neural network predictors can navigate large parameter spaces by learning which regions are promising. AI effectively acts as a compass in high dimensions, mitigating the curse of dimensionality that defeats brute-force or grid searches. This enables discovering unconventional optical designs that hit multiple performance targets simultaneously (e.g. wide field of view, low aberration, and compact size) by considering trade-offs across many variables at once.
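
A minimal Bayesian optimization loop makes the Gaussian-process idea concrete. The sketch below rests on several assumptions: `merit` is a hypothetical four-parameter stand-in for a ray-traced merit function, the kernel length scale and exploration weight are hand-picked, and the acquisition rule is a simple lower confidence bound evaluated on random candidates. It is not the high-dimensional variant discussed in the research literature.

```python
import numpy as np

def merit(x):
    """Hypothetical merit function to minimize (stand-in for a ray-traced spot size)."""
    return np.sum((x - 0.3) ** 2, axis=-1)

def rbf(a, b, length=1.0):
    """Squared-exponential kernel between two sets of points."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * d2 / length ** 2)

def gp_posterior(x_train, y_train, x_query, jitter=1e-5):
    """Gaussian-process posterior mean and standard deviation at the query points."""
    k = rbf(x_train, x_train) + jitter * np.eye(len(x_train))
    k_s = rbf(x_train, x_query)
    chol = np.linalg.cholesky(k)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_s.T @ alpha
    v = np.linalg.solve(chol, k_s)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)

def bayes_opt(dim=4, n_init=8, n_iter=30, n_candidates=2000, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.random((n_init, dim))          # initial random designs in [0, 1]^dim
    y = merit(x)
    for _ in range(n_iter):
        cand = rng.random((n_candidates, dim))
        y_norm = (y - y.mean()) / (y.std() + 1e-12)
        mean, std = gp_posterior(x, y_norm, cand)
        # Lower confidence bound: prefer low predicted merit or high uncertainty.
        pick = cand[np.argmin(mean - 1.0 * std)]
        x = np.vstack([x, pick])
        y = np.append(y, merit(pick))
    best = np.argmin(y)
    return x[best], y[best], y[:n_init].min()

best_x, best_y, init_best = bayes_opt()

if __name__ == "__main__":
    print("best merit after random init:", init_best)
    print("best merit after optimization:", best_y)
```

Each iteration spends one expensive evaluation (here the call to `merit`) at the point the surrogate considers most promising, which is why the approach suits designs where every evaluation is a full simulation.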

Recent research provides evidence that AI-driven search can handle extremely high-dimensional optimization problems in optics. For instance, a 2024 study on Bayesian optimization found that with a clever adjustment of the Gaussian process prior, “standard Bayesian optimization works drastically better than previously thought in high dimensions,” even into the thousands of parameters. The authors demonstrated this on real-world tasks, showing the modified algorithm outperformed state-of-the-art high-dimensional optimizers on problems that were once deemed intractable. In optical terms, this suggests one could optimize a system with, say, 100+ variables (including lens shapes, positions, etc.) in a feasible number of iterations. In another example, researchers tackled the design of a metamaterial involving 80 parameters describing nano-structure geometry; by integrating a generative model with a surrogate, they located a design with desired optical response whereas random search failed (the AI-driven search achieved the target reflectance within 1% while random trials stayed off by ~10%). These successes indicate that, with AI, the dimensionality barrier is being overcome: optical designers can confidently let algorithms explore massive combinatorial design spaces. The end benefit is discovering innovative solutions – for example, a multi-element lens system with 50+ variables was optimized for a head-up display, and the AI found a design meeting image quality and volume constraints that human experts hadn’t considered. As AI optimization research progresses, handling 1000-dimensional optical design spaces may become routine, unlocking truly comprehensive system optimization.

19. Generative Models for Rapid Prototyping

Generative AI models – such as Generative Adversarial Networks (GANs), variational autoencoders, or invertible neural networks – are being used to propose entirely new optical designs for rapid prototyping. Instead of optimizing from a given starting design, generative models learn the distribution of “what a good design looks like” and can sample from it to create novel candidates. In optics, this might mean a GAN that has been trained on thousands of known good lens designs and can then output new lens prescriptions that meet certain criteria (focal length, F/#, etc.) as a starting point for fine-tuning. Another example is using a generative model to produce metasurface unit cell patterns that yield a desired phase profile. The big advantage is creativity and speed – generative AI can suggest non-intuitive design ideas that human engineers might overlook, jump-starting the prototyping phase with viable options. This can reduce the number of fabrication iterations needed, as the AI prototypes are closer to optimal from the outset.
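
The "learn the distribution of good designs, then sample from it" idea can be shown with a deliberately simple generative model. In the sketch below a multivariate Gaussian fitted to a library of designs stands in for a GAN or invertible network. The library itself is synthetic, and the glass index, target focal length, and spread values are assumptions chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N_INDEX = 1.5      # assumed refractive index of the glass
TARGET_F = 100.0   # assumed target focal length in mm

def focal_length(c1, c2):
    """Thin-lens lensmaker's equation with surface curvatures c = 1/R (in 1/mm)."""
    return 1.0 / ((N_INDEX - 1.0) * (c1 - c2))

# Synthetic library of "good" singlets: varied bending, focal length near TARGET_F.
bending = rng.normal(0.0, 0.005, size=500)
curvature_diff = rng.normal(0.02, 3e-4, size=500)   # c1 - c2 giving f close to 100 mm
library = np.column_stack([bending + curvature_diff / 2,
                           bending - curvature_diff / 2])

# "Train" the generative model: estimate the mean and covariance of the library.
mean = library.mean(axis=0)
cov = np.cov(library, rowvar=False)

# Sample brand-new candidate designs from the learned distribution.
candidates = rng.multivariate_normal(mean, cov, size=200)
focal = focal_length(candidates[:, 0], candidates[:, 1])
rel_error = np.abs(focal - TARGET_F) / TARGET_F

if __name__ == "__main__":
    print("worst relative focal-length error among samples:", rel_error.max())
```

The fitted covariance captures the relationship between the two curvatures that fixes the focal length, so new samples vary in lens bending while staying close to the target. Deep generative models serve the same purpose when the relationships between parameters are too complex for a Gaussian.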

A prominent example is the work of Luo and Lee (2023), who employed an invertible generative neural network (Glow-based) for lens design. Their approach essentially “generated” lens designs from scratch by sampling the latent space of a trained model, yielding lens parameter sets that met specific imaging requirements. In their trials, the generative model was able to produce a starting lens layout for a wide-FOV, low-distortion lens that already had good performance before any traditional optimization, significantly reducing design time. Another illustration comes from metasurfaces: an MIT group trained a GAN on effective meta-atom patterns and used it to create new surface layouts for a beam deflector. The GAN’s proposals, when simulated, achieved the target deflection efficiency within 5% on the first try, whereas a manual design was off by 30% initially and needed multiple tweaks. Furthermore, a generative model called OptoGPT (discussed earlier) can be viewed as a foundation model that outputs whole optical designs (e.g., multi-layer films) in one go. These tools highlight how AI can serve as an “inventor” in the design process, offering complete design prototypes that are physics-aware. The result is a drastically accelerated prototype cycle: engineers can fabricate the first AI-proposed design, often find it surprisingly close to specs, and then make only minor adjustments. Companies in imaging optics have begun using generative ML to suggest lens shapes for new products, reporting that it shortened the concept-to-prototype timeline by as much as 50%.