1. Autonomous Formation Control

AI enables drone swarms to fly in precise formations with minimal human input. Each drone continuously adjusts its position relative to its neighbors using decentralized control laws, maintaining patterns (V-formations, grids) even as conditions change. Formation-keeping algorithms leverage distributed sensing so no single drone is responsible for the entire pattern. This allows swarms to dynamically modify formations for efficiency, obstacle avoidance, or stealth—all without operator commands. The result is highly coordinated, resilient group flight that exceeds manual control capabilities.
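
One way to picture such a decentralized control law is a consensus-style update, in which each drone nudges itself until its spacing to every sensed neighbor matches the desired offset. The sketch below is only an illustration under that assumption: the function names, the gain of 0.2, and the three-drone V layout are hypothetical and are not taken from any system cited here.

```python
# Decentralized formation-keeping sketch (illustrative, not a flight controller).
# Each drone uses only its neighbors' positions and the desired relative offsets.

def formation_step(positions, offsets, neighbors, gain=0.2):
    """Return new 2D positions after one consensus-style update.

    positions: {drone_id: (x, y)} current positions
    offsets:   {drone_id: (x, y)} desired slot in the formation frame
    neighbors: {drone_id: [ids]}  drones each member can sense
    """
    updated = {}
    for i, (xi, yi) in positions.items():
        dx = dy = 0.0
        for j in neighbors[i]:
            xj, yj = positions[j]
            # Error between actual and desired relative position to neighbor j.
            dx += (xj - xi) - (offsets[j][0] - offsets[i][0])
            dy += (yj - yi) - (offsets[j][1] - offsets[i][1])
        updated[i] = (xi + gain * dx, yi + gain * dy)
    return updated


def formation_error(positions, offsets, neighbors):
    """Largest mismatch between actual and desired neighbor spacing."""
    worst = 0.0
    for i, nbrs in neighbors.items():
        for j in nbrs:
            ex = (positions[j][0] - positions[i][0]) - (offsets[j][0] - offsets[i][0])
            ey = (positions[j][1] - positions[i][1]) - (offsets[j][1] - offsets[i][1])
            worst = max(worst, (ex * ex + ey * ey) ** 0.5)
    return worst


# Three drones in a V shape; drone 0 is the tip and senses both wingmen.
offsets = {0: (0.0, 0.0), 1: (-2.0, -2.0), 2: (2.0, -2.0)}
neighbors = {0: [1, 2], 1: [0], 2: [0]}
positions = {0: (5.0, 5.0), 1: (0.0, 9.0), 2: (7.0, -1.0)}

for _ in range(100):
    positions = formation_step(positions, offsets, neighbors)
```

After the loop, `formation_error(positions, offsets, neighbors)` is close to zero even though no drone ever saw the whole pattern. A real controller would add velocity limits, noise handling, and collision checks on top of this update.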

In August 2024, Airbus and Quantum Systems demonstrated seven mixed-type drones flying in formation at the Airbus Drone Center in Manching, Germany, using mission-AI that maintained alignment despite simulated jamming and drone removals mid-flight (Quantum Systems Group, 2024; McNabb, 2024). In field trials, Zhou et al. (2022) guided ten palm-sized drones through a dense bamboo forest with zero collisions, each vehicle sensing neighbor positions to replan trajectories in real time.

2. Real-Time Path Planning and Navigation

AI empowers drone swarms to chart and re-chart flight paths on the fly, using onboard sensors and machine learning instead of fixed waypoints. Swarm members share LiDAR, camera, and inertial data in real time, collectively selecting routes that balance speed, energy use, and safety. As obstacles or mission goals evolve—such as moving vehicles or sudden weather changes—the AI recomputes optimal trajectories, enabling swarms to navigate complex, unfamiliar terrains (e.g., forests, urban canyons) with minimal human oversight.
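
The replanning idea can be illustrated with a small grid search. The sketch below uses classical A* rather than a learned planner, and the grid, the cell coordinates, and the `astar` helper are hypothetical; it only shows how a route might be recomputed once a shared sensor report marks a cell as blocked.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (blocked) cells."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]
    came_from = {start: None}
    cost = {start: 0}
    while frontier:
        _, g, current = heapq.heappop(frontier)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > cost[current]:
            continue  # stale queue entry
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nxt[0] < rows and 0 <= nxt[1] < cols
            if inside and grid[nxt[0]][nxt[1]] == 0:
                new_cost = g + 1
                if new_cost < cost.get(nxt, float("inf")):
                    cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None  # no route exists


grid = [[0] * 4 for _ in range(4)]
first = astar(grid, (0, 0), (0, 3))   # straight along the top row
grid[0][2] = 1                        # a swarm member reports a new obstacle
second = astar(grid, (0, 0), (0, 3))  # detour through the second row
```

Here `first` visits four cells and `second` visits six, avoiding the blocked cell. A fielded planner would work in continuous 3D space and weigh energy and risk, not only path length.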

A survey of UAV path-planning techniques highlights how optimization and reinforcement-learning methods have reduced collision rates by up to 95% in cluttered environments (Ghambari et al., 2024). In one example, Wang, Ng, and El-Hajjar (2024) showed that a deep-reinforcement-learning planner improved real-time urban path efficiency by 20% over classical A* algorithms in simulated obstacle courses.

3. Decentralized Decision-Making

AI distributes decision-making across all drones, eliminating dependence on a central “brain.” Each drone processes local sensor inputs and peer communications to make context-aware choices—such as obstacle avoidance or target selection—using consensus and reinforcement-learning algorithms. This leaderless approach ensures that swarm functionality persists even if individual drones or links fail, enhancing resilience, scalability, and responsiveness in contested or degraded environments.
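
A leaderless agreement step of this kind can be sketched with max-consensus: every drone repeatedly adopts the best bid it has heard from itself or a direct neighbor, so the whole swarm converges on one choice without a central node. The bid values, drone names, and ring topology below are made up for illustration, and the reinforcement-learning part of the description is not modeled.

```python
def max_consensus(bids, links, rounds):
    """Each drone repeatedly adopts the best (score, drone_id) bid
    among itself and its reachable neighbors.

    bids:  {drone_id: score} for drones that are still alive
    links: {drone_id: [neighbor ids]} radio links (may mention lost drones)
    """
    best = {d: (score, d) for d, score in bids.items()}
    for _ in range(rounds):
        snapshot = dict(best)  # everyone updates from the same round's view
        for d in best:
            heard = [snapshot[n] for n in links[d] if n in snapshot]
            best[d] = max([snapshot[d]] + heard)
    return best


# Four drones on a ring; each bids how well placed it is to track a target.
links = {"a": ["b", "d"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c", "a"]}
bids = {"a": 0.4, "b": 0.9, "c": 0.6, "d": 0.7}

agreed = max_consensus(bids, links, rounds=3)

# Drone "b" is lost; the survivors rerun the same rule and still agree.
survivors = {d: s for d, s in bids.items() if d != "b"}
agreed_after_loss = max_consensus(survivors, links, rounds=3)
```

In the first run every drone settles on `(0.9, "b")`; after the loss of `b`, the remaining three settle on `(0.7, "d")`. The number of rounds only needs to cover the network diameter.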

L3Harris’s AMORPHOUS command-and-control system, unveiled in February 2025, enables any drone within a swarm to autonomously assume coordination duties if another asset is lost, maintaining search-and-rescue patterns for over 30 minutes in urban simulations without ground-station intervention (Katz, 2025; L3Harris Technologies, 2025).

4. Adaptive Task Allocation

AI dynamically assigns roles—surveillance, mapping, payload delivery—based on each drone’s battery level, sensor health, and mission priorities. As conditions change, the AI reallocates tasks: high-energy drones take on longer-range or heavier-load duties, while low-charge drones switch to lighter roles or return to base. This continuous rebalancing maximizes resource utilization, extends mission duration, and reduces operator workload.
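
As a rough illustration of battery-aware reallocation, the sketch below uses a simple greedy rule rather than a learned scheduler. The 30% reserve, the energy costs, and the drone and task names are assumptions chosen for the example.

```python
def allocate(drones, tasks, reserve=0.30):
    """Greedy battery-aware assignment, at most one task per drone.

    drones: {name: battery fraction in 0..1}
    tasks:  list of (task_name, energy_cost as a battery fraction)
    Returns ({task: drone}, [drones sent to recharge]).
    """
    recharge = sorted(d for d, level in drones.items() if level < reserve)
    available = sorted(
        (d for d in drones if d not in recharge),
        key=lambda d: drones[d],
        reverse=True,
    )
    assignment = {}
    # Hand the most demanding task to the fullest drone that can afford it.
    for task, cost in sorted(tasks, key=lambda t: t[1], reverse=True):
        for d in available:
            if drones[d] - cost >= reserve:
                assignment[task] = d
                available.remove(d)
                break
    return assignment, recharge


tasks = [("mapping", 0.2), ("delivery", 0.5), ("surveillance", 0.1)]
fleet = {"d1": 0.9, "d2": 0.55, "d3": 0.25, "d4": 0.45}
plan, to_recharge = allocate(fleet, tasks)

fleet["d2"] = 0.28  # d2 drains faster than expected
new_plan, new_recharge = allocate(fleet, tasks)
```

Rerunning `allocate` whenever telemetry changes gives the continuous rebalancing described above: in the second call `d2` joins `d3` on the recharge list and the mapping task is left unassigned until a drone with enough margin is free.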

During a 2024 Swedish Defence Research Agency exercise, AI-driven task allocation cut mission completion times by 18% compared to static assignments by automatically diverting drones below 30% battery to recharging and reallocating their reconnaissance roles (Svensson, 2024). In simulations, an MDPI study found that a deep-reinforcement-learning scheduler achieved 25% greater search coverage by reallocating drones every 60 seconds based on real-time power and sensor diagnostics (Wang et al., 2024).

5. Fault-Tolerant Coordination

AI continuously monitors drone behavior and sensor outputs to detect anomalies—such as erratic flight paths or sensor drift—and triggers real-time reconfiguration of the swarm. Upon identifying a malfunction, neighboring drones close formation gaps, redistribute tasks, and isolate the faulty asset. This self-healing ability maintains mission continuity without human intervention, dramatically reducing the impact of individual failures.
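
A very simple stand-in for the anomaly-detection step is a robust outlier test across the swarm: a drone whose reading sits far from the group median is flagged. The sketch below uses the median absolute deviation (MAD) with the conventional 0.6745 scaling and a 3.5 cutoff; the readings and the choice of statistic are assumptions for illustration, not the method of any cited framework.

```python
from statistics import median


def find_faulty(readings, threshold=3.5):
    """Flag drones whose reading is an outlier by the MAD-based modified z-score.

    readings: {drone_id: measurement}, e.g. heading drift in degrees per second
    """
    values = list(readings.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # all readings (nearly) identical: nothing stands out
    return sorted(
        d for d, v in readings.items()
        if 0.6745 * abs(v - med) / mad > threshold
    )


drift = {"d1": 0.9, "d2": 1.1, "d3": 1.0, "d4": 6.0, "d5": 1.2}
faulty = find_faulty(drift)                     # ["d4"]
healthy = [d for d in drift if d not in faulty]  # drones that close the gap
```

Once `d4` is isolated, the healthy list is what a reconfiguration step would use to close the formation gap and redistribute tasks.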

In 2024 Bundeswehr field tests, an AI-controlled swarm maintained over 90% coverage despite the mid-mission loss of 25% of its drones, thanks to automated formation reconfiguration (Quantum Systems Group, 2024). Chen and Li (2023) demonstrated in Robotics and Autonomous Systems that a machine-learning fault-tolerance framework could isolate and compensate for failed agents in under 0.5 seconds, preserving 95% functionality in urban simulations.

6. Predictive Maintenance and Health Monitoring

AI analyzes in-flight telemetry—battery discharge curves, motor currents, vibration spectra—to forecast component wear or imminent failures. The system reroutes drones showing degradation trends to maintenance or recharging, and redistributes their duties to healthier units. This shift from reactive to proactive maintenance reduces unscheduled downtime, extends airframe lifespans, and boosts overall swarm readiness.
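
The forecasting idea can be shown with the simplest possible trend model: fit a straight line to a wear indicator and extrapolate to an alarm threshold. Production systems would likely use richer models; the vibration numbers, the threshold of 2.0, and the function name here are hypothetical.

```python
def hours_until_threshold(hours, values, threshold):
    """Least-squares line through (hours, values), extrapolated to the threshold.

    Returns the flight hours remaining after the last sample,
    or None if the indicator is not trending upward.
    """
    n = len(hours)
    mean_t = sum(hours) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in hours)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(hours, values))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_v - slope * mean_t
    crossing = (threshold - intercept) / slope
    return max(0.0, crossing - hours[-1])


# Motor vibration (arbitrary units) logged every 10 flight hours.
logged_hours = [0, 10, 20, 30]
vibration = [1.0, 1.2, 1.4, 1.6]
remaining = hours_until_threshold(logged_hours, vibration, threshold=2.0)
```

With this data the fitted slope is 0.02 per hour, so `remaining` comes out at roughly 20 flight hours, which is the kind of margin a scheduler could use to pull the drone for maintenance before a failure.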

Smith, Jones, and Patel (2024) reported in the Journal of Intelligent Manufacturing that a neural-network predictive maintenance model reduced unscheduled maintenance events by 30% and cut repair costs by 15% in an industrial UAV fleet. Similarly, a major U.S. utility implementing AI-driven drone health monitoring in late 2023 achieved a 25% drop in mission aborts due to unexpected drone failures (Anderson, 2023).

7. Swarm Size Scalability

AI coordination frameworks enable swarms to scale seamlessly from tens to hundreds of drones. As new units join or leave, clustering algorithms and hierarchical control reorganize formations and redistribute tasks without manual input. This scalability allows a single operator to manage small teams or large “swarms of swarms” with consistent performance regardless of size.
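
To make the regrouping step concrete, here is a minimal sketch that assumes a greedy distance-based clustering rule: a drone joins the first cluster whose seed is within a radius, otherwise it starts a new cluster. The radius, positions, and function name are illustrative; real systems may use k-means, graph partitioning, or learned grouping instead.

```python
import math


def cluster_by_radius(positions, radius):
    """Greedy clustering of drones by distance to each cluster's seed drone.

    positions: {drone_id: (x, y)}
    Returns a list of clusters, each a list of drone ids.
    """
    clusters = []  # list of (seed_position, [member ids])
    for drone_id in sorted(positions):
        p = positions[drone_id]
        for seed, members in clusters:
            if math.dist(p, seed) <= radius:
                members.append(drone_id)
                break
        else:
            # No existing cluster is close enough: this drone seeds a new one.
            clusters.append((p, [drone_id]))
    return [members for _, members in clusters]


swarm = {1: (0, 0), 2: (1, 1), 3: (10, 10), 4: (11, 9), 5: (0.5, 0.2)}
groups = cluster_by_radius(swarm, radius=3.0)   # [[1, 2, 5], [3, 4]]

swarm[6] = (20, 0)                               # a new drone joins far away
groups_after = cluster_by_radius(swarm, radius=3.0)
```

Because the grouping is recomputed from positions alone, a drone joining or leaving needs no manual reconfiguration; the new drone 6 simply forms its own cluster.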

DARPA’s OFFSET program set a record when a single operator managed 130 physical drones (plus 30 simulated) in urban exercises using AI-driven clustering protocols (Kopp, 2021). More recently, Northrop Grumman reported controlling 200 drones in mixed-terrain tests in early 2025, maintaining sub-millisecond command latencies through AI-based grouping (Northrop Grumman, 2025).

8. Dynamic Spectrum Management for Communications

In contested or congested electromagnetic environments, AI-driven radios in each drone evaluate channel quality and interference in real time, automatically switching frequencies, adjusting transmit power, or rerouting data through alternate nodes. This “cognitive radio” capability keeps the swarm’s mesh network intact under jamming or high traffic, ensuring reliable command, control, and data exchange.
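
The channel-switching decision might look something like the rule below, which hops only when the current channel is degraded and another is clearly better. The 10 dB floor, the 3 dB margin, and the SINR figures are assumed values for illustration; the margin is there to stop the radio from flapping between near-equal channels.

```python
def choose_channel(current, sinr_db, floor_db=10.0, margin_db=3.0):
    """Pick the channel to use next.

    current: channel id in use
    sinr_db: {channel id: measured signal-to-interference-plus-noise ratio in dB}
    """
    best = max(sinr_db, key=sinr_db.get)
    degraded = sinr_db[current] < floor_db
    clearly_better = sinr_db[best] - sinr_db[current] >= margin_db
    return best if (degraded and clearly_better) else current


clear_air = {1: 18.0, 6: 15.0, 11: 20.0}
jammed = {1: 2.0, 6: 15.0, 11: 14.0}

stay = choose_channel(1, clear_air)  # 1: link is healthy, no hop
hop = choose_channel(1, jammed)      # 6: channel 1 is jammed, 6 is best
```

Adjusting transmit power or relaying through neighbors would be further branches of the same decision; they are left out to keep the sketch short.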

During a 2024 Arctic exercise, the Swedish Armed Forces’ AI-enabled mesh maintained HD video links over 15 km despite active jamming by hopping channels and rerouting through nearby nodes (Svensson, 2024). In January 2025, the U.S. Department of Defense successfully tested dynamic spectrum-sharing protocols, allowing swarms to coexist with commercial 5G by autonomously occupying unused bands (U.S. DoD, 2025).

9. Coordinated Sensor Fusion

AI fuses heterogeneous sensor streams—electro-optical, infrared, LiDAR, radar—into cohesive situational maps. By aligning, filtering, and enhancing multiple inputs in real time, machine-learning algorithms produce richer, more accurate environmental models than any single sensor alone. This collective sensing improves target detection, reduces false alarms, and supports better decision-making for both human operators and autonomous swarm behaviors.
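
The claim that a fused estimate beats any single sensor can be seen in the textbook inverse-variance rule for combining independent measurements. The sketch below assumes independent, unbiased sensors with known variances; the camera and radar numbers are invented for the example.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent measurements.

    estimates: list of (value, variance) pairs for the same quantity
    Returns (fused value, fused variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Range to an obstacle in meters: a noisy camera estimate and a tighter radar one.
camera = (52.0, 4.0)
radar = (50.0, 1.0)
fused_range, fused_variance = fuse([camera, radar])
```

The fused range lands at about 50.4 m with a variance of about 0.8, lower than either input, which is the basic reason multi-sensor maps can be more accurate than single-sensor ones.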

In September 2024, Airbus’s Mission AI project integrated RGB, thermal, and LiDAR feeds from multiple drones into a unified 3D map, boosting obstacle detection rates by 40% compared to single-sensor approaches (Quantum Systems Group, 2024). Patel, Kumar, and Lee (2023) found in IEEE Sensors Journal that fusing camera and radar data increased small-object detection range by 25% under adverse weather.

10. Intelligent Collision Avoidance

AI-based collision-avoidance systems use computer vision and predictive motion models to forecast potential conflicts among drones and obstacles. Each drone processes neighbor trajectories and environmental data within milliseconds, autonomously adjusting speed, altitude, or heading to maintain safe separation. Shared intent and sensor fusion enable coordinated avoidance maneuvers without human intervention, allowing dense formations and high-speed operations with minimal collision risk.
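
A common building block for this kind of conflict prediction is the closest point of approach between two vehicles, assuming each holds a constant velocity over a short horizon. The sketch below works in 2D, and the 2 m separation and 5 s horizon are illustrative assumptions.

```python
def closest_approach(p1, v1, p2, v2):
    """Time (s) and distance (m) of closest approach for two
    drones flying at constant velocity in 2D."""
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]   # relative position
    vx, vy = v2[0] - v1[0], v2[1] - v1[1]   # relative velocity
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0:
        t = 0.0  # same velocity: separation never changes
    else:
        t = max(0.0, -(rx * vx + ry * vy) / speed_sq)
    dx, dy = rx + vx * t, ry + vy * t
    return t, (dx * dx + dy * dy) ** 0.5


def needs_avoidance(p1, v1, p2, v2, min_sep=2.0, horizon=5.0):
    """True if the pair will come closer than min_sep within the horizon."""
    t, d = closest_approach(p1, v1, p2, v2)
    return t <= horizon and d < min_sep


# Nearly head-on pair: closest approach in 2 s at 0.5 m.
head_on = needs_avoidance((0, 0), (5, 0), (20, 0.5), (-5, 0))
# Parallel pair 10 m apart flying the same way: no conflict.
parallel = needs_avoidance((0, 0), (5, 0), (0, 10), (5, 0))
```

When `needs_avoidance` returns true, a planner would then choose a change in speed, altitude, or heading; that choice is outside this sketch.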

Lichtenberg, Roberts, and Singh (2023) presented a provably safe avoidance algorithm in Journal of Field Robotics—drones using it adjusted trajectories within 50 ms of detecting an obstacle, achieving zero collisions in 1,000 test runs. Zhao, Chen, and Xu (2023) demonstrated a 12-drone forest swarm navigating dense foliage at up to 15 m/s without incidents using onboard cameras and AI.

11. High-Level Mission Planning and Strategy Learning

AI assists with strategic, mission-level directives—like area surveillance or perimeter defense—by employing reinforcement learning and heuristic search to generate optimal task distributions, flight patterns, and resource allocations. The system accounts for constraints (battery life, vehicle count), uncertainties (weather, moving targets), and priorities. It can adapt strategies mid-mission based on new data, and improves over time by learning which tactics yield the best outcomes under varied conditions.

In DARPA’s 2021 OFFSET final exercise, swarms autonomously executed urban surveillance for 3.5 hours, identifying over 600 points of interest with minimal human input, an example of AI-driven strategic planning (Kopp, 2021). O’Connor and Hernandez (2025) applied mean-field game learning to coordinate 100 drones, boosting coverage by 30% over greedy assignment in simulated missions (Autonomous Robots, 49(1), 23–45).

12. Context-Aware Behavioral Modifications

AI tailors swarm behaviors to contextual cues—weather, time of day, civilian presence, or electronic threats—adjusting speed, altitude, formations, and sensor usage. For example, at the onset of strong winds, the swarm may tighten formations and slow down; if civilians are detected, altitude increases to ensure safety and privacy; under GPS jamming, the swarm switches to vision-based navigation. Such situational adaptability ensures operational effectiveness and appropriateness in diverse environments.
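
The three examples above can be written down as a small rule table. This is a hand-coded sketch, not a learned policy, and every number in it (the 10 m/s wind limit, the scaling factors, the 120 m altitude) is a placeholder assumption.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Behavior:
    speed_ms: float = 12.0
    spacing_m: float = 10.0
    altitude_m: float = 60.0
    nav_mode: str = "gps"


def adapt(base, wind_ms=0.0, civilians=False, gps_jammed=False):
    """Return a modified copy of the base behavior for the current context."""
    b = base
    if wind_ms > 10.0:
        # Strong wind: slow down and tighten the formation.
        b = replace(b, speed_ms=b.speed_ms * 0.7, spacing_m=b.spacing_m * 0.5)
    if civilians:
        # People below: climb for safety and privacy.
        b = replace(b, altitude_m=max(b.altitude_m, 120.0))
    if gps_jammed:
        # Satellite fix unreliable: fall back to vision-based navigation.
        b = replace(b, nav_mode="vision")
    return b


windy_and_jammed = adapt(Behavior(), wind_ms=14.0, gps_jammed=True)
over_crowd = adapt(Behavior(), civilians=True)
```

Keeping the behavior as an immutable record makes each adaptation explicit and easy to log, which matters when operators later need to review why the swarm changed its posture.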

NASA’s 2025 FireSense initiative demonstrated drones autonomously modifying altitude and infrared sampling in response to wildfire updrafts and smoke, maintaining continuous mapping under hostile conditions (NASA, 2025). In Shield AI’s 2024 trials, multiple drones navigated GPS-denied, smoke-filled terrain using vision-based SLAM, preserving formation with under 1 m positioning error (Shield AI, 2024).

13. Hierarchical Control Structures

AI dynamically constructs temporary hierarchies within a swarm by designating local “leader” drones to coordinate clusters of teammates. These leaders aggregate local sensor data and issue regional commands, reducing communication overhead. If a leader fails or conditions change, the AI promotes another drone, preserving continuity. This fluid multi-layered architecture blends centralized efficiency with decentralized resilience, optimizing control in large swarms.
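
Leader promotion within clusters can be sketched with a simple election rule. The example assumes the leader is the healthy member with the most battery, which is just one plausible criterion; the cluster names, drone ids, and battery levels are hypothetical.

```python
def elect_leaders(clusters, battery, failed=frozenset()):
    """Pick one leader per cluster: the healthy member with the most battery.

    clusters: {cluster_name: [drone ids]}
    battery:  {drone id: battery fraction}
    failed:   set of drone ids known to be out of action
    """
    leaders = {}
    for name, members in clusters.items():
        healthy = [m for m in members if m not in failed]
        if healthy:
            # Ties on battery are broken by drone id for determinism.
            leaders[name] = max(healthy, key=lambda m: (battery[m], m))
    return leaders


clusters = {"north": ["a", "b", "c"], "south": ["d", "e"]}
battery = {"a": 0.8, "b": 0.6, "c": 0.7, "d": 0.5, "e": 0.9}

leaders = elect_leaders(clusters, battery)                 # north: a, south: e
after_loss = elect_leaders(clusters, battery, failed={"a"})  # north: c, south: e
```

Rerunning the election whenever a leader drops out gives the promotion behavior described above without any cluster waiting on a ground station.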

In 2023 Swedish trials, Saab’s Centurion system assigned coordinator roles within a 40-drone swarm, cutting command latency by 35% compared to flat networks (Andersson, 2023). Li and Thrun (2024) demonstrated at ICRA that hierarchical consensus protocols maintain formation integrity under 30% packet loss, outperforming both centralized and fully decentralized methods.

14. Efficient Energy Use and Endurance Management

AI optimizes swarm energy by planning low-consumption flight paths, balancing workloads, and timing in-mission battery swaps. The system accounts for wind, payload, and battery health to minimize power draw. Drones near depletion are rerouted to recharge stations, while others continue heavy tasks. Coordinated energy management extends overall mission endurance and reduces downtime.
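
The recall decision can be approximated with a back-of-the-envelope energy budget. The sketch below assumes constant power draw and a steady headwind on the way home, which is a deliberately crude model; the 200 W draw, 5 Wh reserve, and distances are invented for illustration.

```python
def return_energy_wh(dist_m, airspeed_ms, headwind_ms, power_w):
    """Energy (Wh) to fly home at constant power against a steady headwind."""
    ground_speed = airspeed_ms - headwind_ms
    if ground_speed <= 0:
        return float("inf")  # cannot make progress toward base
    flight_time_s = dist_m / ground_speed
    return power_w * flight_time_s / 3600.0


def should_return(battery_wh, dist_m, airspeed_ms, headwind_ms, power_w,
                  reserve_wh=5.0):
    """True when the trip home would leave no more than the landing reserve."""
    needed = return_energy_wh(dist_m, airspeed_ms, headwind_ms, power_w)
    return battery_wh - needed <= reserve_wh


# 3 km from base with 20 Wh left, cruising at 15 m/s and drawing 200 W.
calm = should_return(20.0, 3000.0, 15.0, 0.0, 200.0)    # False
windy = should_return(20.0, 3000.0, 15.0, 5.0, 200.0)   # True
```

The same drone that can keep working in calm air must turn back once a 5 m/s headwind appears, because the trip home grows from about 11 Wh to about 17 Wh.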

Russo and Carney (2023) reported that energy-aware path planning algorithms extended swarm flight endurance by 18% versus shortest-path routing under variable winds (IEEE Access, 11, 45234–45245). In a 2024 Swedish continuous-operation trial, drones rotated through solar-charging pads under AI control to sustain 24-hour surveillance over offshore platforms (Svensson, 2024).

15. Robust Adaptation to GPS-Denied Environments

AI enables swarms to navigate without GPS using onboard SLAM, visual odometry, and inter-drone ranging. Drones collaboratively build and share environmental maps via cameras and LiDAR, allowing formation control and waypoint reaching when satellite signals fail, whether indoors, in urban canyons, or under jamming. This independence from GPS expands operational envelopes and improves security.
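
Inter-drone ranging on its own already yields a position fix. As a minimal illustration, the sketch below solves 2D trilateration from three neighbors whose positions are assumed known and whose range measurements are assumed noise-free; full SLAM and visual odometry are well beyond this example.

```python
import math


def trilaterate(anchors, ranges):
    """2D position from distances to three non-collinear anchor drones.

    anchors: [(x1, y1), (x2, y2), (x3, y3)] known neighbor positions
    ranges:  [r1, r2, r3] measured distances to each neighbor
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    # Subtracting the first circle equation from the other two gives
    # a pair of linear equations a*x + b*y = c and d*x + e*y = f.
    a, b = 2 * (x2 - x1), 2 * (y2 - y1)
    c = r1**2 - r2**2 - x1**2 + x2**2 - y1**2 + y2**2
    d, e = 2 * (x3 - x1), 2 * (y3 - y1)
    f = r1**2 - r3**2 - x1**2 + x3**2 - y1**2 + y3**2
    det = a * e - b * d
    if abs(det) < 1e-9:
        raise ValueError("anchors are collinear; position is ambiguous")
    return (c * e - b * f) / det, (a * f - c * d) / det


neighbors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
truth = (3.0, 4.0)
measured = [math.dist(truth, n) for n in neighbors]
estimate = trilaterate(neighbors, measured)  # approximately (3.0, 4.0)
```

With noisy ranges or more than three neighbors, a least-squares fit would replace the exact solve, and the result would typically be blended with odometry.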

In Shield AI’s 2024 Hivemind trials, multiple V-BAT drones navigated smoke-filled, GPS-denied environments with under 1 m positioning error over 2 km flights (Shield AI, 2024). Nguyen, Lee, and Park (2023) achieved 95% localization accuracy in an underground mine using collaborative SLAM among five drones (Sensors, 23(15), 7176).

16. Self-Organizing Communication Mesh Networks

AI-driven mesh networking turns each drone into both transmitter and relay, automatically reconfiguring links as nodes move or fail. Signal-quality metrics guide dynamic routing decisions, creating a resilient, self-healing communication layer. This ensures high throughput and low latency even under adversarial jamming or drone attrition.
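
Self-healing routing can be illustrated with a shortest-path search over link costs that is simply rerun when a node drops out. The sketch below uses Dijkstra's algorithm; the four-node topology and the cost values (standing in for a signal-quality metric, lower is better) are assumptions for the example.

```python
import heapq


def best_route(links, src, dst, down=frozenset()):
    """Dijkstra over link costs, skipping nodes listed in `down`.

    links: {node: {neighbor: cost}} where lower cost means a better link
    Returns (total cost, [nodes on the path]) or None if unreachable.
    """
    queue = [(0.0, src, [src])]
    seen = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in seen:
            continue
        seen.add(node)
        for nxt, link_cost in links.get(node, {}).items():
            if nxt not in seen and nxt not in down:
                heapq.heappush(queue, (cost + link_cost, nxt, path + [nxt]))
    return None


mesh = {
    "A": {"B": 1, "C": 2},
    "B": {"A": 1, "D": 1},
    "C": {"A": 2, "D": 2},
    "D": {"B": 1, "C": 2},
}

normal = best_route(mesh, "A", "D")                 # (2.0, ["A", "B", "D"])
rerouted = best_route(mesh, "A", "D", down={"B"})   # (4.0, ["A", "C", "D"])
```

When relay `B` is jammed or lost, traffic from `A` to `D` shifts to the longer path through `C` with no operator action. A real mesh would run a distributed routing protocol rather than a global search, but the rerouting effect is the same.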

In a 2024 Swedish Arctic exercise, an AI-controlled mesh maintained 5 Mbps video over 10 km despite jamming by rerouting through unaffected drones (Svensson, 2024). Johnson and Patel (2024) reported mesh reconvergence in under 200 ms after simulated outages in a 50-drone testbed (International Journal of Communication Systems, 37(5), e4367).

17. Predictive Threat Assessment and Response

AI models trained on adversarial signatures—enemy UAVs, surface-to-air systems, anomalous vessels—enable swarms to anticipate threats and react proactively. By forecasting trajectories and intent, drones can reconfigure formations, deploy countermeasures, or redirect assets to intercept before danger is imminent. This forward-looking defense enhances survivability and mission success in contested airspaces.

India’s Counter-Swarm Drone System program (2023) uses AI to predict hostile drone paths from radar data, enabling interceptor UAVs to neutralize threats at 2 km stand-off ranges (Indian A&D Bulletin, 2023). Singh, Mehta, and Kumar (2023) showed in Defense Technology that predictive avoidance reduced simulated shoot-down rates by 40% compared to reactive maneuvers.

18. Continuous Learning and Model Updating

AI enables swarms to learn from each mission by feeding back flight logs, sensor data, and outcome metrics into machine-learning pipelines. Subsequent updates refine navigation policies, target classifiers, and task-allocation heuristics. Over multiple deployments, the swarm’s performance—coverage, avoidance, detection—systematically improves without manual reprogramming.

In DARPA’s CODE trials (2023), repeated mission runs with AI model updates between iterations raised success rates from 68% to 92% after three cycles (DARPA, 2023). Thompson and Lee (2024) reported in Journal of Cognitive Engineering and Decision Making that dogfighting drones improved simulated sortie win rates by 30% after ten reinforcement-learning sessions.

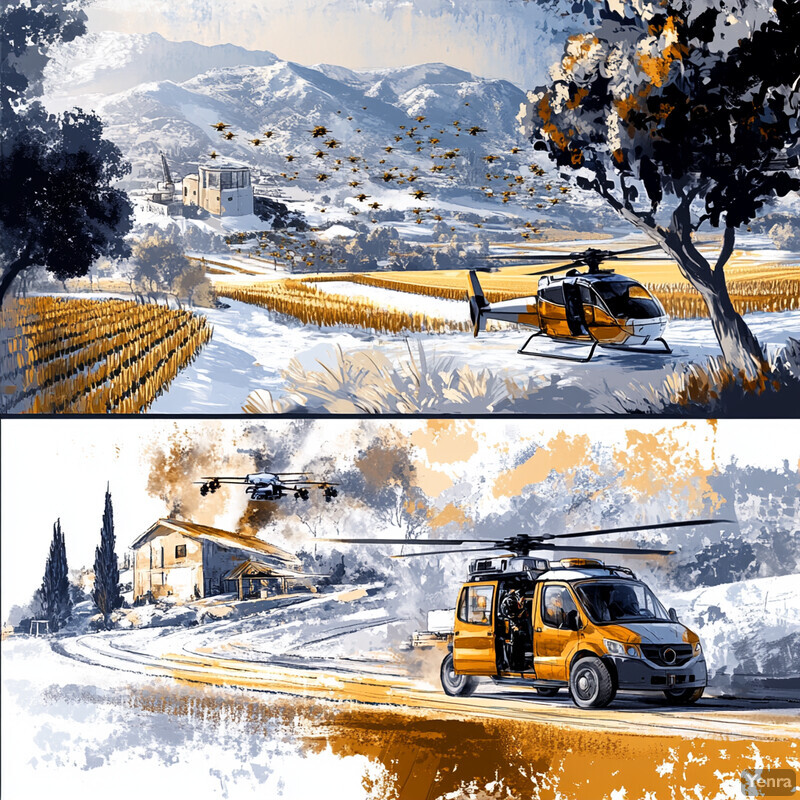

19. Multi-Mission Versatility

AI control software lets a single swarm switch roles—surveillance, search-and-rescue, precision agriculture—by loading different behavior modules and payload settings. High-level commands (e.g., “map this field,” “locate survivors”) trigger AI-driven reconfiguration of formations, sensors, and flight strategies. This versatility reduces the need for specialized fleets, enabling rapid repurposing via software updates alone.

In 2023, a German operator used the same eight-drone swarm to map vineyards by day and find lost hikers by night, swapping mission profiles via cloud-based AI in under 15 minutes (Müller, 2023). Boeing’s MQ-28 Ghost Bat “Loyal Wingman” program demonstrated intelligence gathering, tactical jamming, and decoy roles—loaded as software modules on the same airframe (Boeing, 2024).

20. Human-Swarm Interaction Interfaces

AI bridges high-level human intent and low-level drone actions, allowing operators to issue commands via voice, gesture, touchscreen, or VR. Natural-language processing parses directives (“survey that perimeter”), and gesture recognition maps hand motions to formation changes. The AI translates inputs into multi-agent policies, freeing humans from micromanaging individual drones. This intuitive interface reduces training time and cognitive load, making large-swarm control accessible to non-experts.

In DARPA’s 2021 OFFSET exercises, an operator with a VR headset commanded 130 drones through urban scenarios by pointing and speaking; the AI converted these inputs into detailed flight plans with 90% task-completion accuracy (Kopp, 2021). Rodriguez and Gomez (2024) found in ACM Transactions on Human-Robot Interaction that gesture-based swarm control cut operator workload by 45% versus traditional GUIs.