AI image generation has rapidly evolved, enabling anyone to turn text descriptions into vivid pictures. From the open-source Stable Diffusion to the artistically savvy Midjourney, modern systems have pushed the boundaries of creativity. This article dives into the foundations of how these models work, their architectural components, the craft of prompt engineering, Midjourney’s advancements, real-world applications, and a look at the road ahead. Along the way, we’ll explore how noise becomes art and how words become images.

1. Foundational Technology

AI text-to-image generators are built on diffusion models, a class of generative models that create images by progressively denoising random noise. The key idea, first proposed in 2015, is to destroy data structure by adding noise, then learn to reverse that process to recover the data. In simpler terms, the model starts with pure noise and learns to transform it into a coherent image that matches a given description. This happens through a gradual, step-by-step refinement: at each timestep, a neural network predicts some of the noise in the current image and removes it, inching the result closer to reality.

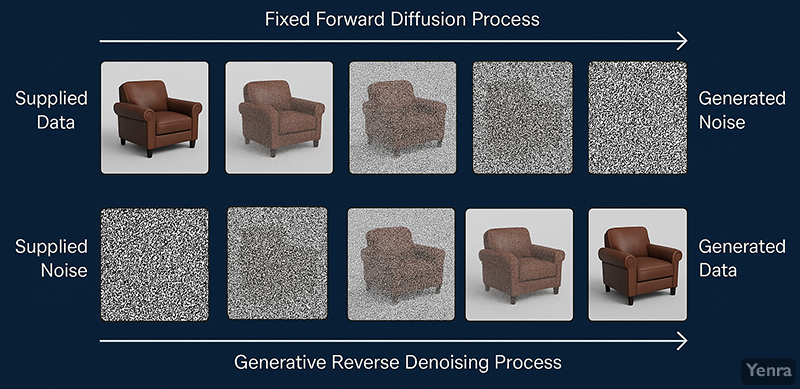

During training, the model is shown many images that have been corrupted with varying levels of noise. It learns to predict the noise added (or equivalently, the denoised image) for each step. Mathematically, if an image is represented as $x_0$ and pure noise as $x_T$, the model learns the reverse diffusion $p_\theta(x_{t-1}|x_t)$ that gradually converts $x_T$ back to $x_0$ over $T$ steps. Each step’s task is small – remove just a little noise – but after enough steps, even random pixels can form a clear picture. Figure 1 illustrates this process: the forward diffusion adds noise until the image becomes static fuzz, while the reverse diffusion removes noise to reveal a generated image.
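The forward (noising) half of this process can be sketched in a few lines. In the standard DDPM formulation, a noisy sample at any step can be drawn directly from the clean image as $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$. The sketch below assumes a linear noise schedule from 1e-4 to 0.02 over 1000 steps (a common textbook choice, not necessarily what any particular model uses) and treats an "image" as a short list of floats. The function names are illustrative only.

```python
import math
import random

def linear_betas(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances (assumed linear schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def alpha_bars(betas):
    """Cumulative products of (1 - beta_t): how much of the original signal survives at step t."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out

def add_noise(x0, t, abar, rng):
    """Sample x_t directly from x_0: sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps."""
    signal = math.sqrt(abar[t])
    sigma = math.sqrt(1.0 - abar[t])
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal * x + sigma * e for x, e in zip(x0, eps)]
    return xt, eps  # eps is the target the network learns to predict

rng = random.Random(0)
abar = alpha_bars(linear_betas())
x0 = [0.5, -0.25, 1.0, 0.0]  # toy "image" with four pixel values
x_early, _ = add_noise(x0, 10, abar, rng)   # still close to x0
x_late, _ = add_noise(x0, 999, abar, rng)   # almost pure noise
print(round(abar[10], 4), abar[999])
```

Early in the schedule almost all of the signal survives; by the last step nearly none of it does, which is why generation can start from pure noise.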

Early diffusion models operated in the image’s pixel space, which is computationally expensive for high resolutions. Stable Diffusion introduced a breakthrough by using a latent diffusion model, operating in a compressed latent space. An encoder (often a Variational Autoencoder, VAE) first compresses images into a lower-dimensional latent representation. Diffusion is performed on these latent vectors (which capture high-level image features), and after the denoising process, a decoder (the VAE’s decoder) reconstructs the final image. Working in latent space makes generation much more efficient: as the authors reported, latent diffusion speeds up image generation by ~2.7× and trains ~3× faster than equivalent pixel-space models.
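To get a feel for the savings, here is a rough size comparison. It assumes the layout commonly cited for Stable Diffusion v1: the VAE downsamples each spatial dimension by a factor of 8 and uses 4 latent channels. Those two numbers are assumptions of this sketch, not figures from the text above.

```python
def tensor_size(height, width, channels):
    """Number of values in a height x width x channels array."""
    return height * width * channels

def latent_shape(height, width, downsample=8, latent_channels=4):
    """Latent grid for an image, assuming 8x spatial downsampling and 4 channels."""
    return height // downsample, width // downsample, latent_channels

pixel_values = tensor_size(512, 512, 3)          # RGB image
lat_h, lat_w, lat_c = latent_shape(512, 512)
latent_values = tensor_size(lat_h, lat_w, lat_c)
ratio = pixel_values / latent_values
print(pixel_values, latent_values, ratio)
```

Under these assumptions the denoiser handles roughly 48 times fewer values per step than a pixel-space model would.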

Another cornerstone of these systems is the massive scale of training data. Models like Stable Diffusion were trained on billions of image-text pairs scraped from the internet. For example, Stability AI used the LAION-5B dataset (roughly 5 billion image-caption pairs) to teach Stable Diffusion the broad visual language of the world. Such extensive exposure allows the model to learn a huge variety of styles, objects, and concepts. The initial Stable Diffusion model was trained at 512×512 resolution on this data using around 150,000 GPU-hours, at an estimated cost of $600k. This large-scale training is what enables these AI systems to paint anything from “a medieval castle at sunrise” to “a futuristic city floating in space” with surprising fidelity. In essence, the foundation of text-to-image AI combines an elegant noise-removal process with the diversity of the internet’s imagery.

2. Architecture Components

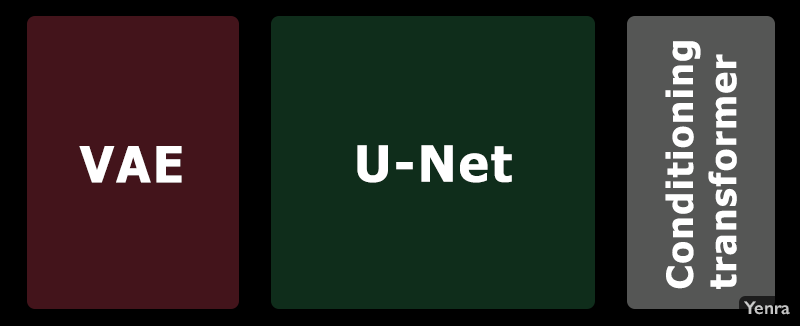

Under the hood, Stable Diffusion is an ensemble of specialized parts working together. It consists of three core components – a CLIP text encoder, a U-Net diffusion model, and a VAE decoder – orchestrated to turn a text prompt into an image. Each component plays a distinct role in the generation pipeline:

- Text Encoder (CLIP) – Interprets the input text prompt and encodes it into a numerical embedding.

- U-Net Denoiser – The “artist” neural network that iteratively refines noisy latent images into the desired outcome, guided by the text embedding.

- VAE (Variational Autoencoder) – Compresses images into latent space before generation, and decodes the final latent back into a full-resolution image.

Let’s break down how these components interact when you type in a prompt:

- CLIP Text Encoder – Understanding the Prompt: Stable Diffusion leverages OpenAI’s CLIP model to convert a natural language prompt into a vector representation (an embedding). CLIP (Contrastive Language-Image Pre-training) was originally trained on hundreds of millions of (image, caption) pairs to align images and text in the same latent space. In Stable Diffusion, only the text encoder part is used. For a given prompt like “a mystical forest with neon lights”, the CLIP encoder produces a fixed-size embedding (a list of numbers) capturing the semantic meaning of “mystical forest with neon lights.” This embedding serves as a conditioning signal for the image generator. Essentially, the model now has a mathematical description of what it needs to draw.

- U-Net Denoiser – Generating in Latent Space: The U-Net is a type of convolutional neural network originally developed for image segmentation tasks, but here it’s tasked with denoising images. In Stable Diffusion’s latent diffusion, the U-Net operates not on pixel images but on latent codes – the compressed representation produced by the VAE. The U-Net is repeatedly applied to gradually remove noise. At each timestep $t$, it takes two inputs: (a) the current noisy latent image $x_t$, and (b) the text embedding (from CLIP) that guides it. The model predicts the noise to remove or the direction to adjust the latent in order to make it look a bit more like what the text describes. Because the text embedding is injected via a cross-attention mechanism, the U-Net at every step “knows” what content and style the prompt is asking for. Over, say, 50 diffusion steps, the U-Net refines the latent from pure noise towards an image that CLIP’s embedding would describe. The use of a latent U-Net makes generation faster – the model deals with smaller 64×64 (or similar) feature maps instead of 512×512 pixel images, yet these latent features encode the high-level structure needed to form a detailed picture.

- VAE Decoder – Reconstructing the Image: Once the U-Net has finished the denoising steps, we have a final latent representation of the image. The last piece is the VAE’s decoder, which converts this latent back into a full-resolution image in pixel space. The decoder essentially “paints” the image by filling in all the details at the specified resolution, guided by what it learned during training about how latent features map to real images. The output is an image (say 512×512 pixels) that hopefully matches the prompt given. The VAE decoder can be thought of as the translator that takes the U-Net’s abstract imagination and makes it a concrete picture.
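The cross-attention step mentioned in the U-Net description can be illustrated with a small, dependency-free sketch. Each latent position acts as a query, and the prompt's token embeddings act as keys and values, so every image location ends up with a weighted blend of the text tokens. Real models first pass queries, keys, and values through learned linear projections and use many attention heads; this toy version skips both, and all sizes here are made up for illustration.

```python
import math
import random

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attention(queries, keys, values):
    """Each query (image location) takes a weighted average of the values (text tokens)."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        w = softmax([dot(q, k) * scale for k in keys])
        out = [sum(wi * v[d] for wi, v in zip(w, values)) for d in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

rng = random.Random(0)
dim = 8
latent_positions = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(16)]  # e.g. a 4x4 latent grid
text_tokens = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(5)]        # 5 prompt tokens

attended, attn = cross_attention(latent_positions, text_tokens, text_tokens)
print(len(attended), len(attended[0]), [round(w, 3) for w in attn[0]])
```

Each row of `attn` sums to 1, so it can be read as how strongly one image location "listens" to each word of the prompt.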

It’s worth noting that Stable Diffusion’s design was heavily influenced by the Latent Diffusion Models paper. By compressing images with a VAE, the U-Net focuses on important features instead of getting lost in pixel-level noise. This approach makes training and generation much more tractable on everyday hardware. The text encoder, U-Net, and VAE operate in harmony: the text encoder provides context, the U-Net generates and refines the latent image with that context in mind, and the VAE turns the refined latent into a detailed image.

To summarize, when you feed Stable Diffusion a prompt, it is encoded into a text embedding; a U-Net then uses that embedding to denoise latent variables over many iterations; and finally a decoder produces the image. This modular architecture – language in, image out – is powerful. It means parts of the system can be improved or swapped out independently (for example, one could plug in a different text encoder or try a different decoder), and it provides a clear framework to add new capabilities (like image-to-image variations or extra conditioning, as we’ll see later).
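The modular flow described above can be mimicked with stand-in components. Nothing below is a real model: `toy_text_encoder`, `toy_denoiser`, and `toy_decoder` are hypothetical placeholders invented for this sketch. They only show how the three stages hand data to each other and how one stage can be swapped without touching the others.

```python
import random

def toy_text_encoder(prompt):
    """Stand-in for CLIP: maps a prompt to a small deterministic vector."""
    rng = random.Random(prompt)
    return [rng.uniform(-1, 1) for _ in range(4)]

def toy_denoiser(latent, embedding, step, total_steps):
    """Stand-in for the U-Net: nudges the latent toward the embedding a little each step."""
    rate = 1.0 / (total_steps - step)
    return [x + rate * (target - x) for x, target in zip(latent, embedding)]

def toy_decoder(latent):
    """Stand-in for the VAE decoder: maps latent values in [-1, 1] to 0..255 'pixels'."""
    return [max(0, min(255, round((x + 1) * 127.5))) for x in latent]

def generate(prompt, steps=50, seed=0, text_encoder=toy_text_encoder,
             denoiser=toy_denoiser, decoder=toy_decoder):
    embedding = text_encoder(prompt)               # 1. language in
    rng = random.Random(seed)
    latent = [rng.gauss(0, 1) for _ in embedding]  # 2. start from pure noise
    for step in range(steps):                      # 3. iterative, text-guided denoising
        latent = denoiser(latent, embedding, step, steps)
    return decoder(latent)                         # 4. image out

image = generate("a mystical forest with neon lights")
print(image)
```

Because each stage is passed in as an argument, swapping one out (for example `decoder=lambda z: z` to inspect the raw latent) leaves the rest of the pipeline unchanged.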

3. Prompt Engineering

When using text-to-image AI, how you phrase your request can be as important as what you ask for. “Prompt engineering” refers to the craft of writing prompts to get the desired output from the model. These AI systems don’t truly understand language like humans do – they respond to keywords and learned associations in the training data. Crafting an effective prompt is partly an art and partly a science.

A good prompt typically paints a clear picture of the desired scene or style. Users have discovered that including certain modifiers – specific art styles, adjectives, or references – can dramatically influence the result. For example, asking for “a portrait of a warrior, digital painting, artstation style, cinematic lighting” will push Stable Diffusion to produce something akin to concept art, because terms like ArtStation (a popular art portfolio site) and cinematic lighting are associated with high-quality digital art in the training data.

In the early days, users compiled prompt guides listing “magic words” that trigger particular aesthetics. These might include medium descriptors (photo, oil painting, pencil sketch), lighting (softbox lighting, golden hour), artists (Picasso, Van Gogh style), and more. By mixing and matching these, you can guide the model’s imagination. The structure of a prompt often matters too: one recommended approach is to explicitly list aspects like subject, style, environment, and lighting, rather than writing a single run-on sentence. This helps ensure the model catches each important detail. In practice, “A painting of a cute goldendoodle wearing a suit, natural light, in the sky, with bright colors, by Studio Ghibli” yields a more coherent result than a vague sentence about a dog, thanks to those explicit modifiers.
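The "list each aspect explicitly" approach is easy to turn into a small helper. The function below is a hypothetical convenience, not part of any library; it simply joins the aspects into a comma-separated prompt and skips any that are left out.

```python
def build_prompt(subject, medium=None, style=None, environment=None, lighting=None, extras=()):
    """Assemble a prompt from explicit aspects, skipping any that are not provided."""
    parts = [f"{medium} of {subject}" if medium else subject]
    parts += [p for p in (environment, lighting, style) if p]
    parts += list(extras)
    return ", ".join(parts)

prompt = build_prompt(
    subject="a cute goldendoodle wearing a suit",
    medium="A painting",
    environment="in the sky",
    lighting="natural light",
    style="by Studio Ghibli",
    extras=["bright colors"],
)
print(prompt)
```

Keeping the aspects as separate arguments makes it simple to vary one of them (say, the lighting) while holding the rest fixed during experimentation.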

Moreover, these models pay attention to word order and emphasis. Generally, words appearing earlier in the prompt can have a stronger influence on the composition. If you start with “Astronaut cat in a nebula, watercolor”, the model will likely focus on making an astronaut cat because it was mentioned first, then style it with a nebula background in watercolor as secondary elements. If you reversed it (“Watercolor painting of a nebula with an astronaut cat”), you might get a broader nebula scene where the cat is less prominent. Experienced prompters exploit this by ordering terms from most to least important, and by repeating or weighting terms that really must appear. For instance, adding parentheses or special syntax in some interfaces can increase a token’s weight (e.g., (dragon:1.3) might boost the presence of a dragon). This idea of prompt weighting allows fine control: the model can be nudged to emphasize certain concepts more than others. Under the hood, this works by scaling the corresponding embedding vector – effectively telling the model “make this feature 30% stronger”.
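A rough sketch of how an interface might handle the `(term:weight)` syntax: split the prompt into chunks, attach a weight to each, and scale that chunk's embedding. The parser and `scale_embedding` below are illustrative assumptions. Real interfaces differ in details such as nested parentheses or how they renormalize the embedding afterwards.

```python
import re

# Matches "(some text:1.3)"; nested parentheses are not handled in this sketch.
WEIGHT_PATTERN = re.compile(r"\(([^():]+):([0-9]*\.?[0-9]+)\)")

def parse_weights(prompt):
    """Split a prompt into (text, weight) chunks; unmarked text gets weight 1.0."""
    chunks, pos = [], 0
    for match in WEIGHT_PATTERN.finditer(prompt):
        before = prompt[pos:match.start()].strip(" ,")
        if before:
            chunks.append((before, 1.0))
        chunks.append((match.group(1).strip(), float(match.group(2))))
        pos = match.end()
    rest = prompt[pos:].strip(" ,")
    if rest:
        chunks.append((rest, 1.0))
    return chunks

def scale_embedding(vector, weight):
    """Scale a chunk's embedding vector by its weight."""
    return [weight * v for v in vector]

chunks = parse_weights("a castle on a hill, (dragon:1.3), watercolor")
print(chunks)
```

With a weight of 1.3, every component of the "dragon" embedding is multiplied by 1.3, while the unweighted chunks are left as they are.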

Another powerful aspect of prompt engineering is the use of negative prompts – specifying what not to include. Stable Diffusion and others allow you to provide a negative prompt (e.g., “text, watermark, blur”) which guides the model away from those elements. Because the negative prompt is itself a list of things to avoid, you name the unwanted elements directly rather than prefixing them with “no”. By steering the model’s imagination, negative prompts help fix common issues. For example, a prompt for a person might accidentally yield extra fingers or strange text; adding a negative prompt “deformed hands, text” can reduce those unwanted artifacts. Midjourney introduced a --no option for a similar purpose, allowing users to prune out aspects (like --no plants to avoid any foliage in the scene).
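One common way negative prompts are implemented is classifier-free guidance. This is an assumption of the sketch, since the text above does not specify the mechanism. The denoiser is run twice per step, once conditioned on the prompt and once on the negative prompt (or an empty prompt), and the final noise estimate is pushed away from the negative prediction. The default scale of 7.5 is a commonly used value, not a requirement.

```python
def guided_noise(noise_positive, noise_negative, guidance_scale=7.5):
    """Start at the negative prediction and move toward (and past) the positive one."""
    return [n + guidance_scale * (p - n) for p, n in zip(noise_positive, noise_negative)]

# Toy noise predictions for three latent values
noise_pos = [0.2, -0.1, 0.4]   # conditioned on the prompt
noise_neg = [0.1, -0.1, 0.9]   # conditioned on the negative prompt
combined = guided_noise(noise_pos, noise_neg)
print(combined)
```

Where the two predictions agree (the middle value), guidance changes nothing; where they differ, the result moves further from whatever the negative prompt describes. In Hugging Face Diffusers, the Stable Diffusion pipeline call accepts a `negative_prompt` argument that feeds into this kind of combination.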

It’s fascinating to see how each model has its own “vocabulary” of prompt terms that work best. Stable Diffusion tends to be quite literal and gives the user a lot of control. You can dial in exact artist names or camera models, and with the right phrasing, you can achieve photorealism or a specific art style. It also means Stable Diffusion might require a longer, more detailed prompt to get a very polished result, but it will obey those details closely. On the other hand, Midjourney has become known for its creative interpretations. A short Midjourney prompt can yield an image with striking composition and color, because the model has been tuned for aesthetic impact. As one prompt guide noted, “Midjourney stands out for its artistic interpretations and the ability to blend styles and concepts in unique ways,” often surprising users with visually stunning outputs. Stable Diffusion, by contrast, “offers a level of precision and control... with advanced syntax options for fine-grained manipulation”, which appeals to those who want to meticulously shape the result. In other words, Stable Diffusion might be the choice when you have a very clear idea and need exactitude (it’s like a skilled illustrator who follows your specs), whereas Midjourney is like an inspired artist who will wow you if you give it a bit of freedom.

The craft of prompt engineering continues to evolve. Communities share new discoveries – for instance, certain obscure words from the training data can trigger a style or fix a problem. There are even automated prompt generators (one project uses GPT-3 to suggest prompt refinements for Stable Diffusion). But at its heart, prompt engineering is about understanding how the AI “thinks.” By speaking its language of tokens and embeddings, users guide these systems to produce amazing art from just a line of text. It’s a new form of dialogue between human intention and machine imagination, where finding the right words can make all the difference between a mundane image and a masterpiece.

4. Midjourney's Advancements

Midjourney arrived on the scene with a reputation for jaw-dropping visuals and a penchant for the fantastic. Although its underlying technology is not fully public, Midjourney is widely believed to use a diffusion-based generative model (or a blend of models) similar in spirit to Stable Diffusion, with significant proprietary improvements. Over successive versions (v3, v4, v5, etc.), Midjourney has homed in on greater aesthetic coherence, color harmony, and lighting consistency in its outputs. Users often note that Midjourney’s images have a distinct polish: compositions feel balanced, colors are richly tuned, and elements like lighting and depth come out more consistently cinematic or dramatic.

One of Midjourney’s breakthroughs has been its ability to produce high-quality results with minimal prompt engineering. While Stable Diffusion might need a carefully crafted prompt with multiple style cues to get a similar level of detail, Midjourney often “excels at generating high-resolution images with incredible detail” from even simple prompts. It’s as if Midjourney has a built-in sense of art direction. Part of this comes from how Midjourney’s model was trained and fine-tuned. The Midjourney team likely curated a training set that emphasizes artistically composed images and also incorporated extensive feedback. David Holz, Midjourney’s founder, noted that with version 3 they introduced a feedback loop: they analyzed which images users loved or upvoted, and used that data to improve the model. In his words, “We didn’t add more art [data]; we just took the data about what images the users liked... and that actually made it better.” This kind of human-in-the-loop fine-tuning steers the AI towards preferred aesthetics. In essence, Midjourney learned what makes an image appealing to people (at least the people using Midjourney), and it internalized those preferences.

Midjourney also seems to maintain cohesiveness in tricky scenarios. For example, getting multiple subjects or a full scene in Stable Diffusion can sometimes lead to oddities (like a person with too many fingers or inconsistent lighting between foreground and background). Midjourney’s model often handles such complexity with more grace – faces, anatomy, and lighting are usually more consistent. It’s possible that Midjourney uses larger neural networks or ensemble models that capture more context, or that it applies clever techniques under the hood (some speculate it might integrate generative adversarial networks or other model types to post-process and enhance realism, but the company hasn’t confirmed details). What users do see is that Midjourney rarely needs heavy negative prompting or strict instructions to avoid errors – it has a strong “default” quality.

Another advancement is Midjourney’s user experience: it generates four variations for each prompt by default, encouraging exploration of different interpretations. Users can then upscale their favorite or request new variations. This workflow itself can lead to more refined outcomes, since you can pick the most coherent of the four and iterate. In contrast, when using Stable Diffusion, especially locally, you often generate one image at a time (unless you manually script for multiples or use our favorite interface: EasyDiffusion). Midjourney’s approach of offering variations might mask some of the model’s imperfections – there’s a good chance one of the four is great, even if the others have issues. It’s an intelligent way to use the model’s creativity while letting the user curate the final result. To truly appreciate Midjourney’s edge, let’s compare it with Stable Diffusion on the same prompt. Suppose we use the prompt “Mysterious forest” on both:

As the above comparison shows, Midjourney’s rendering (first image) tends to have a more harmonious and “wow-factor” quality – the lighting and focus draw the eye, and it feels like a magical photograph. Stable Diffusion’s version (second image) is certainly coherent (both clearly depict a green forest with waterfalls), but side by side, artists often find Midjourney’s output more artistically compelling. This aligns with observations that, generally, under the same prompt words, Stable Diffusion’s pictures are “not as good as Midjourney, both in terms of photographic aesthetics and image quality”. Midjourney’s training and tuning give it an edge in making images that are immediately striking.

That said, the gap has been closing. Open-source models have continued to improve (Stable Diffusion’s newer versions like SDXL have narrowed the quality gap significantly). And if one leverages extensions and fine-tuning with Stable Diffusion (like custom models, better prompt tuning, upscaling, etc.), it can produce results comparable to Midjourney. The advantage of an open model is its extensibility: the community can train it further, fix flaws, and add features. We’ll touch more on those in the next section.

Midjourney’s advancements also come with certain limitations by design. It has a strict content filter and rules (no graphic violence, no adult content, etc.) to maintain what Holz calls “one beautiful social space” for art creation. Stable Diffusion, being open-source, can be run without such restrictions (which is both powerful and problematic, depending on the use case). Midjourney has chosen to prioritize a moderated, curated experience, which likely also influences the kind of art it produces – generally skewing towards fantastical, concept-art styles and avoiding the grotesque or hyper-realistic violence. In practice, this means Midjourney might refuse certain prompts that Stable Diffusion would allow. But for the average user interested in making stunning art, Midjourney’s guardrails are hardly noticed, apart from the model’s learned bias towards beauty.

Finally, Midjourney’s closed nature means we rely on anecdote and partial info for its tech details. It reportedly uses big models (billions of parameters) trained on billions of images. It likely uses large cloud GPU clusters (Holz mentioned each image requiring “thousands of trillions of operations” – a petaFLOP-scale computation – which underscores the computational heft behind Midjourney). All that compute and data is marshaled to deliver a service where, as Holz quipped, “a regular person is using this much compute” to create an image – something unprecedented historically. In summary, Midjourney’s magic comes from a blend of technical prowess and curated refinement: it stands on the shoulders of diffusion models like Stable Diffusion, but then goes further by baking in aesthetic sensibilities and user-friendly dynamics that make it a favorite for effortless creativity.

5. Industry Applications

Text-to-image AI has quickly moved from research labs into the toolkits of artists, designers, and businesses. The ability to conjure visuals from text is transforming creative workflows across many industries. Rather than replacing human creatives, these systems often act as amplifiers of imagination – speeding up tasks and inspiring new directions – though they also bring disruption and challenging questions.

In graphic design and advertising, generative image models are being used for concept mockups, mood boards, and even final assets. Advertising agencies have run headline-grabbing campaigns using AI-generated art. For example, in 2022 Heinz created an “AI Ketchup” campaign by prompting DALL-E 2 with ketchup-related queries; amusingly, every result looked like a Heinz bottle, leading to the tagline “This is what ketchup looks like to AI.” The campaign won awards and even invited customers to generate their own ketchup art, illustrating how marketing teams are embracing AI as a fresh creative angle. Coca-Cola launched a “Create Real Magic” contest that encouraged people to make Coke-themed art with generative models. These stunts aside, on a day-to-day level, design teams are using tools like Midjourney and Stable Diffusion to brainstorm visuals in seconds – a process that used to involve hours of searching for reference images or sketching ideas. A prompt like “slick 3D icon of a rocket made of gold and glass” can yield instant concepts for a branding exercise, which a designer can then refine. According to an Adobe survey, 81% of creative professionals have already used generative AI tools (DALL-E, Midjourney, Stable Diffusion, etc.) in their work. Importantly, 62% of those using generative AI said it reduced time spent on tasks by ~20%, allowing them to iterate faster, and 69% felt these tools opened new ways to express creativity. In practice, this might mean a graphic artist can try multiple compositions for a poster and get inspired by the AI’s unexpected ideas, then combine the best aspects into the final design.

In the entertainment and gaming industry, text-to-image models are accelerating concept art, storyboarding, and asset creation. Game studios can generate dozens of environment concepts (say, “ancient temple in a jungle, overgrown with alien flora”) to quickly explore the look of a level. Film makers are using AI to storyboard scenes, getting a quick feel for camera angles and lighting before a single real shot is taken. A recent industry survey of entertainment companies found that nearly half (47%) plan to use generative AI for developing 3D assets, and 38% plan to use it for 2D concept art and storyboards in the next few years. The result is that concept artists now often work alongside AI: an artist might generate a batch of rough concepts with Stable Diffusion, then paint over or refine their favorite one. This hybrid workflow can be a huge time-saver. However, it’s also disruptive – some routine jobs (like junior concept artist roles or storyboard cleanup) may become less common. In fact, three-fourths of surveyed entertainment execs said these tools have already led to elimination or consolidation of some jobs in their business. At the same time, entirely new roles are emerging, like AI art director or prompt designer, and artists who embrace these tools can supercharge their productivity.

Beyond art departments, architects and product designers use generative models to visualize ideas from early in the design process. An architect might type “futuristic skyscraper, parametric facade, sunset lighting” to get a quick visualization of a building concept to show a client. Industrial designers, as one wrote, are “constantly in awe” seeing Midjourney generate concept variants in real-time, calling it “an almost scarily powerful tool for concept generation”. They can generate multiple design prototypes (cars, gadgets, furniture) and evaluate which forms are worth pursuing, compressing what used to be weeks of sketching into a short session.

In illustration and media, some publications have used AI-generated images for articles or book covers (with human oversight to edit any weird details). AI art tools have empowered individual creators to produce comics, children’s book illustrations, and surreal art films without large budgets – though often the human artist still needs to curate and compose the final pieces. There have been instances of music videos and short films that consist entirely of AI-generated visuals set in motion, using a combination of image generators and interpolation techniques.

Traditional creative software companies are also integrating these capabilities. Adobe, for instance, introduced its own generative image model (Firefly) and integrated it into Photoshop as Generative Fill, allowing users to extend images or make quick alterations by simply typing what they want. This is built on concepts similar to Stable Diffusion’s inpainting. There are also plugins that let you use Stable Diffusion inside Photoshop or Blender, bringing the power of text-to-image directly into the established workflows of designers. This integration is key – rather than replacing Photoshop or 3D software, AI features are augmenting them. Designers can seamlessly generate textures, fill backgrounds, or create variations without leaving their editing program.

Of course, the adoption of text-to-image AI isn’t without challenges. One major concern is intellectual property and ethics: these models learned from billions of images on the web, including artwork by professionals who never consented. This has sparked debates and legal questions – e.g., Getty Images is suing Stability AI for alleged misuse of its photos in training data. Artists have raised concerns about AI mimicking their style. Midjourney responded by banning some specific artist names from prompts after public outcry. The question of who owns an AI-generated image is also tricky. Midjourney’s policy grants users full usage rights for images they generate (if they are paid subscribers), and Stable Diffusion outputs are generally considered property of the user as well, but the training data origins make it a gray area. From an economic standpoint, some clients might opt for AI-generated illustrations instead of hiring an artist, especially for low-budget projects, which creates pressure on some freelance jobs. However, others argue it can democratize art by allowing those with ideas but no art skills to create (or at least prototype) their vision.

Another challenge in professional settings is quality control. AI images can have subtle weirdness – maybe an extra limb, text that looks like gibberish instead of legible words, or just a slightly off-putting composition. For critical uses, human oversight is needed to catch and correct these. In fields like fashion or product advertising, AI can generate ideas, but a human designer will likely recreate it to ensure every detail is right and legally safe (e.g., the AI might unintentionally produce a trademarked logo in an image – you have to watch out for that). Moreover, companies need to make sure the AI’s output aligns with brand guidelines and doesn’t introduce bias or offensive elements (bias in AI image generation is an ongoing issue; e.g., prompts for certain professions might yield only men unless specified otherwise, reflecting training data biases).

Despite these challenges, the trend is clear: generative image AI is becoming a staple in creative industries. It’s shifting the skills demanded – knowing how to “speak” to the AI (write good prompts) and how to edit AI output is now valuable. Economically, it’s reducing the cost of concept ideation and allowing smaller firms or individual creators to punch above their weight in terms of visual production. We’re also seeing hybrid approaches: photographers use AI to enhance or alter shots (so-called “augmented photography”), filmmakers use AI to generate backgrounds or special effects elements, and game developers incorporate AI-upscaled or AI-generated textures to expedite content creation.

In sum, text-to-image systems like Stable Diffusion and Midjourney have unleashed a new wave of creative automation. They are the intuitive sketch partners, the infinite reference library, and the imaginative concept artists rolled into one. Professionals who integrate these tools can iterate faster and often find themselves exploring ideas they wouldn’t have conceived alone. Yet, they also must navigate the ethical and practical hurdles of this technology – from attributions and rights to the simple fact that AI can be unpredictable. As with any disruptive tech, the creative world is adapting: some roles evolve, new roles appear, and the very definition of “artistic workflow” broadens.

6. Methodology and Commentary

How do we practically use these AI models, and what do experts say about their future? Let’s briefly peek into the typical pipeline and then consider the limitations and future directions of text-to-image AI.

Using a model like Stable Diffusion is now as simple as calling an API or running a few lines of code. Libraries such as Hugging Face’s Diffusers provide high-level interfaces to load the pre-trained model and generate images. For instance, here’s a short Python snippet that loads Stable Diffusion and generates an image from a prompt:

```python
import torch
from diffusers import StableDiffusionPipeline

# Load the Stable Diffusion model (downloads the weights on first use, then runs locally)
pipe = StableDiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1")

# Use the GPU when one is available; CPU works but is much slower
device = "cuda" if torch.cuda.is_available() else "cpu"
pipe = pipe.to(device)

prompt = "a scenic landscape in autumn, vibrant colors, sunset glow"
result = pipe(prompt, num_inference_steps=30)  # denoise over 30 steps
image = result.images[0]  # a PIL image
image.save("autumn_landscape.png")
```

In these few lines, the StableDiffusionPipeline object takes care of the entire process – it contains the text encoder, U-Net, VAE, and scheduler (the algorithm that decides how to step through the diffusion). The output image is a PIL image object with the generated picture. Public APIs and cloud services also exist where you can POST a prompt and get back an image, making integration into apps straightforward. Midjourney, being a closed service, is accessed via its Discord bot or web interface rather than a local library. You send a command to the bot (e.g., /imagine prompt: a castle on a hill...) and it returns the images. There isn’t an official public API for Midjourney as of this writing, which is one reason many developers lean towards open models like Stable Diffusion for custom applications, even if Midjourney might give more artistic results out-of-the-box.
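To make the scheduler's role a little more concrete, here is a simplified sketch of one thing it decides: which of the training timesteps to visit when only a few inference steps are requested. It assumes the model was trained with 1000 timesteps and uses plain even spacing. Real schedulers offer several spacing strategies and also define the update rule applied at each step, neither of which is shown here.

```python
def inference_timesteps(num_inference_steps, num_train_timesteps=1000):
    """Evenly spaced timesteps, noisiest first (a simplified spacing rule)."""
    stride = num_train_timesteps // num_inference_steps
    return [i * stride for i in range(num_inference_steps)][::-1]

steps = inference_timesteps(30)
print(steps[:3], steps[-3:])
```

With 30 inference steps the sampler skips through the 1000 training timesteps in strides of 33, which is why fewer steps run faster but give the model fewer chances to refine the image.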

From a research and development perspective, open-source models have spurred an explosion of innovations. Since Stable Diffusion’s release, developers have extended it with features like ControlNet (which lets you guide generation with sketches or pose skeletons for figures), model fine-tuning methods like DreamBooth to teach the model new concepts (e.g., a specific person or a new art style) with just a few examples, and various sampler algorithms to improve how diffusion steps are taken for faster or sharper results. These extensions allow professionals to integrate text-to-image AI in tailored ways: a fashion company might fine-tune a model on its catalog images to generate on-brand clothing renders, or an animation studio might use ControlNet to generate backgrounds that match a hand-drawn layout.

Limitations and Future Directions

Despite their impressive capabilities, today’s generative models have notable limitations. First, they don’t truly “understand” the world – they have no concept of physics, intent, or commonsense beyond statistical correlations in data. This can lead to images that look plausible at first glance but upon closer inspection have impossible or nonsensical elements (e.g., an Escher-like staircase or a person with an eerie smile and extra teeth). Consistency across multiple images is also a challenge; if you ask for a comic strip with the same character in five panels, the character may look different in each – these models have no inherent memory or identity tracking (each image generation is independent).

There’s also the issue of bias and appropriateness. The models reflect biases present in their training data: prompts for certain professions might return predominantly one gender or ethnicity unless the prompt specifies otherwise, because of historical biases in the underlying images. Without careful prompting or fine-tuning, a generic prompt might inadvertently yield an image that reinforces a stereotype. Research and community efforts are ongoing to document and mitigate these biases, but it’s a complex problem since the training data is so vast and varied. Both Stable Diffusion and Midjourney implement some content filters (Midjourney’s is stricter by default), and Stable Diffusion’s official releases blur or block certain explicit generations. However, once the model weights are public, users can choose to disable those filters, which raises concerns about misuse (e.g., creating deepfakes or harmful imagery). The community generally frowns upon using these tools to create non-consensual images or realistic fake persons, but the possibility exists, which is why the ethical use and perhaps regulation of generative media is a hot topic.

On the technical side, one limitation has been resolution and fine detail. Models like the original Stable Diffusion v1.4 generate 512×512 images. For larger images or prints, one must use upscaling techniques or newer models: SDXL generates at higher resolution natively, Midjourney has also improved on this, and there are specialized upscaler models. These models also struggle with text within images (like signage or UI screenshots); the jumbled text is a meme in AI art circles. This happens because the model didn’t really learn to spell; it learned the “textures” of text. However, models are slowly improving: some newer ones handle short words or logos better, having seen them often enough in training to reproduce those details.
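As a rough worked example of the resolution gap described above, the sketch below estimates how much a 512×512 output would need to be enlarged for print. The 300 DPI target and the idea of chaining 2× upscaling passes are assumptions made for illustration (print requirements and upscaler factors vary), and the function name is hypothetical.

```python
import math


def upscale_factor_for_print(native_px, print_inches, dpi=300):
    """Return how many times larger the image side must become.

    native_px: side length of the generated image in pixels.
    print_inches: desired printed side length in inches.
    dpi: assumed print density (300 is a common guideline, not a rule).
    """
    required_px = print_inches * dpi
    return required_px / native_px


factor = upscale_factor_for_print(512, 8)  # 8-inch print needs 2400 px per side
# Number of passes if each pass of a (hypothetical) upscaler doubles the size.
passes = math.ceil(math.log2(factor)) if factor > 1 else 0
print(f"needs {factor:.2f}x enlargement, i.e. {passes} passes of a 2x upscaler")
```

Even a modest 8-inch print asks for several times the native resolution, which is why upscalers and higher-resolution models matter for print work.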

So, what does the future hold? We’re likely to see continued convergence and integration. Imagine a design tool where you sketch a layout, type a description, and the AI fills in the rest – we’re almost there. Adobe’s Generative Fill is one step, and tools like Canva and Figma are also adding AI generation features. The models themselves will get better at understanding context; for instance, a future text-to-image model might incorporate a language model (like GPT-style reasoning) to better parse complex prompts or follow multi-step instructions (OpenAI’s recent DALL-E 3 took a step in this direction by integrating with ChatGPT for more accurate prompt following). We might also see hybrid models that combine diffusion with other approaches: perhaps using NeRF-like 3D understanding so that generating a scene implicitly creates a 3D model (so you can get consistent images from different angles – some early research is happening here).

One exciting direction is moving beyond single images to sequences – generating videos or interactive 3D worlds. The jump to video is non-trivial (temporal consistency is hard), but early prototypes (like Runway’s Gen-2 or text-to-video research at Google) are showing that short AI-generated videos are feasible. In a few years, we might describe a full animated scene and have the AI render it out, effectively doing on-the-fly CGI.

For artists and industry, a big question is how human and AI collaboration will settle. Many emphasize that AI should be a tool, not a replacement. A thoughtful report on generative AI’s impact noted that AI outputs are inherently limited by what they’ve seen – they recombine and interpolate existing content. If the creative process shifts entirely to machines, there’s a risk of a feedback loop of sameness, as the AI can only remix what already exists. As the report put it, “GenAI output is constrained by its inputs... If responsibility to generate content shifts away from humans to machines... the availability and uniqueness of new content will become more limited.” In other words, without fresh human imagination and perspective, AI art could stagnate. The ideal future is one where AI expands human creativity, not replaces it – using these models to venture into new creative territories, while keeping humans in charge of steering the vision and injecting true originality (and heart) into the work.

Many AI researchers and artists are optimistic. They see a future where mundane creative grunt work is handled by AI, freeing humans to focus on higher-level creativity. An illustrator might spend less time perfecting lighting and more time devising the story and composition, letting the AI handle the rendering of various lighting setups to choose from. A game developer might describe a scene and get a decent asset, then customize it – much faster than modeling from scratch. The economic and workflow shifts will require adaptation (training, new job roles, ethical guidelines), but history has shown that creatives tend to adapt tools in ways that often create entirely new art forms (photography didn’t kill painting; it spawned cinematography and digital art, etc.).

In conclusion, the journey from Stable Diffusion to Midjourney showcases the rapid evolution of generative AI – from a brilliant research idea of noise gradually becoming art, to widely accessible tools that have permeated creative fields. These systems are far from perfect, but they’ve opened a door to a new kind of creative collaboration between humans and machines. We are learning that with the right prompt, a bit of guidance, and a critical eye, AI can be a partner in visual imagination, translating our ideas into pixels. As we refine these technologies, we face the challenge of guiding them responsibly. The canvas of the future is being sketched now: one where our words, enhanced by AI, can paint worlds – and where the act of creation is limited less by technical skill and more by the boundlessness of our imagination.

References

[1] Sohl-Dickstein, J., Weiss, E. A., Maheswaranathan, N., & Ganguli, S. (2015). Deep unsupervised learning using nonequilibrium thermodynamics. International Conference on Machine Learning (ICML), 2256–2265. PMLR.

[2] Ho, J., Jain, A., & Abbeel, P. (2020). Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33, 6840–6851.

[3] Rombach, R., Blattmann, A., Lorenz, D., Esser, P., & Ommer, B. (2022). High-resolution image synthesis with latent diffusion models. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 10684–10695.

[4] Radford, A., Kim, J. W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., et al. (2021). Learning transferable visual models from natural language supervision. International Conference on Machine Learning (ICML), 8748–8763. PMLR.

[5] Schuhmann, C., Beaumont, R., Vencu, R., Gordon, C., Wightman, R., Cherti, M., Coombes, T., et al. (2022). LAION-5B: An open large-scale dataset for training next generation image-text models. arXiv preprint arXiv:2210.08402.

[6] Esser, P., Rombach, R., & Ommer, B. (2021). Taming transformers for high-resolution image synthesis. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 12873–12883.

[7] OpenAI. (2021). CLIP: Contrastive Language–Image Pre-training. Retrieved from https://github.com/openai/CLIP

[8] Holz, D. (2023). Midjourney v5 and Beyond: Advancements in AI-Assisted Art. Midjourney Official Blog. Retrieved from https://midjourney.com/blog

[9] Getty Images v. Stability AI. (2023). Complaint for copyright infringement. United States District Court for the District of Delaware. Retrieved from https://newsroom.gettyimages.com

[10] Adobe. (2023). Introducing Firefly: Adobe’s family of creative generative AI models. Retrieved from https://www.adobe.com/sensei/generative-ai/firefly

[11] Heinz “AI Ketchup” Campaign. (2023). Using text-to-image to reveal how AI imagines ketchup. The Drum. Retrieved from https://www.thedrum.com/news

[12] Runway. (2023). Gen-2: Advancing text-to-video diffusion. Retrieved from https://research.runwayml.com/gen2

[13] Ruiz, N., Li, Y., Jampani, V., Pritch, Y., Rubinstein, M., & Aberman, K. (2022). DreamBooth: Fine tuning text-to-image diffusion models for subject-driven generation. arXiv preprint arXiv:2208.12242.

[14] Adobe. (2023). The future of creativity: AI in design workflows. Adobe Creative Cloud Reports. Retrieved from https://blog.adobe.com

[15] Feldman, N. (2023). AI in the entertainment industry: Case studies and workflow transformations. Entertainment Tech Journal, 9(4), 104–118.

[16] Mollick, E. (2022). The rise of generative AI: Economic shifts and societal impact. Future of Work Quarterly, 17(2), 29–42.

[17] OpenAI. (2023). DALL·E 3: Integrating advanced language models for refined image generation. Retrieved from https://openai.com/blog/dall-e-3

[18] Zhang, L., Rao, A., & Agrawala, M. (2023). Adding conditional control to text-to-image diffusion models. arXiv preprint arXiv:2302.05543.

[19] Adobe. (2023). Generative Fill in Photoshop: Enhancing creativity through integrated AI. Retrieved from https://www.adobe.com/creativecloud/photoshop

[20] Midjourney. (2022). Community galleries and user prompts. Retrieved from https://midjourney.com/community