Introduction

Imagine waking up to a gentle morning routine softly orchestrated by artificial intelligence (AI). Your alarm clock, powered by AI, adjusts itself to your sleep cycle, ensuring you feel rested. A smart coffee maker brews your favorite blend at just the right time. As you scan the morning news, an AI assistant has already filtered out misinformation and highlighted the stories that matter most to you. In this serene scenario, AI works quietly in the background – enhancing daily life in subtle, seamless ways. This isn’t science fiction or a privilege for tech experts; it’s an emerging reality for everyone. And it raises an important question: How do we ensure everyone can navigate an AI-driven world with confidence and understanding?

Morning Symphony: In a softly lit kitchen at dawn, a sleek AI-powered coffee maker emits gentle steam as it pours a perfect cup, while a voice-activated alarm clock floats nearby, its holographic display showing sleep-cycle data, ethereal light filtering through gauzy curtains, giving the scene a dreamlike, poetic glow.

AI is no longer confined to research labs or Silicon Valley startups. It’s woven into our jobs (from automated email filters to smart recruitment tools), our roles as citizens (like AI-curated social media feeds influencing public opinion), and even our peace of mind (for instance, AI wellness apps that help us meditate or manage stress). Developing AI literacy – the basic knowledge and skills to understand and work with AI – is becoming as essential as digital literacy. Just as basic computer skills became necessary over the past few decades, now “AI literacy is becoming fundamental to understanding and shaping our future”. In fact, governments and educators around the world are waking up to this need. California recently passed a law calling for AI literacy to be considered for inclusion in its school curriculum frameworks, and the European Union’s new AI Act requires organizations that provide or deploy AI to ensure their staff have a sufficient level of AI literacy. “AI literacy is incredibly important right now,” says Stanford professor Victor Lee, “especially as we’re figuring out policies… People who know more … are able to direct things”, whereas a more AI-literate society can build “more societal consensus”. In short, understanding AI empowers us – it helps us stay employable in changing workplaces, engage as informed citizens on tech policy, and demystify the technology so it doesn’t feel like uncontrollable magic. As one team of researchers put it, without widespread AI literacy, we risk “ceding important aspects of society’s future to a handful of multinational companies”. The good news is that AI literacy is not about advanced math or programming; it’s about grasping core concepts that anyone can learn.

Digital Dawn Chorus: A serene bedroom bathed in pastel sunrise hues, where a minimalist AI assistant hovers as a translucent orb by the bedside, curating news headlines that materialize as delicate, floating text ribbons, with subtle reflections on polished surfaces, evoking an otherworldly harmony between technology and daily ritual.

In this guide, we’ll journey through essential AI concepts in plain language, with inspiring examples of how human-centered AI is making the world better. By the end, you’ll see that AI literacy is for everyone – and that includes you. Whether you’re a parent, artist, teacher, healthcare worker, or retiree, you can absolutely gain the understanding needed to thrive alongside AI. Let’s begin by establishing a clear, jargon-free understanding of what AI actually is and how it works.

What Is AI?

Artificial Intelligence (AI) broadly refers to machines or software performing tasks that typically require human intelligence – things like learning, reasoning, creativity, and decision-making. AI isn’t a single gadget or a robot’s brain; it’s a field of computer science with many approaches. Let’s break down a few key terms you’ll hear, using everyday metaphors to keep things accessible:

Pattern Playground: A sunlit study where a child sits cross-legged on a colorful rug, surrounded by floating holographic photos of cats and dogs, their eyes alight with curiosity as gentle beams of light connect matching images: an ethereal classroom of patterns and discovery.

Machine Learning (ML): Imagine you’re teaching a child to differentiate between cats and dogs. Instead of giving the child a long list of rules (“dogs bark, cats meow, dogs have certain snout shapes”), you simply show them lots of pet photos and tell them which are cats and which are dogs. Eventually, the child learns the patterns and can correctly label new photos. That’s essentially how machine learning works – the computer “learns” from many examples rather than from explicitly programmed rules. “Machine learning systems learn from data instead of following explicit rules. They use patterns found in large sets of information to make decisions.” In early AI (1950s–1980s), most systems were rule-based, meaning programmers manually coded explicit rules for every decision. Those systems were like very strict recipe-followers – they couldn’t handle anything their programmers hadn’t anticipated. Modern AI shifted to machine learning, where the system builds its own rules or models from data, making it far more adaptable. For example, your email’s spam filter isn’t using a fixed checklist from an engineer; it’s a machine learning model trained on millions of example emails. Over time it has “learned” the subtle features that distinguish spam from legitimate mail – a much more flexible approach than any static rulebook. (Indeed, AI spam filters today continually improve by spotting new patterns in unwanted emails, a task that would overwhelm hard-coded rules.)
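To make the contrast concrete, here is a minimal Python sketch comparing a hand-written rule with a filter that learns word statistics from labeled examples. The example emails, the `free money` rule, and the scoring scheme (a simplified, naive-Bayes-style log-ratio of word counts) are illustrative assumptions, not how any real email provider’s filter works.

```python
import math
from collections import Counter

# Hard-coded approach: an engineer writes a fixed rule by hand.
def rule_based_is_spam(email: str) -> bool:
    return "free money" in email.lower()

# Learned approach: count how often each word appears in spam vs. legitimate
# examples, and turn those counts into a per-word "spamminess" score.
def train_word_scores(examples: list[tuple[str, bool]]) -> dict[str, float]:
    spam_counts, ham_counts = Counter(), Counter()
    for text, is_spam in examples:
        (spam_counts if is_spam else ham_counts).update(text.lower().split())
    vocab = set(spam_counts) | set(ham_counts)
    # Log of (spam count + 1) / (legit count + 1); the +1 avoids dividing by zero.
    return {w: math.log((spam_counts[w] + 1) / (ham_counts[w] + 1)) for w in vocab}

def learned_is_spam(email: str, scores: dict[str, float]) -> bool:
    # Sum the scores of known words; words never seen in training contribute nothing.
    return sum(scores.get(w, 0.0) for w in email.lower().split()) > 0

# Hypothetical labeled training emails (True = spam).
examples = [
    ("claim your free prize now", True),
    ("win a free cruise now", True),
    ("exclusive prize waiting claim now", True),
    ("meeting notes for monday", False),
    ("lunch on monday with the team", False),
    ("notes from the team meeting", False),
]
scores = train_word_scores(examples)

print(rule_based_is_spam("claim a prize"))                # False: the rule misses it
print(learned_is_spam("claim a prize", scores))           # True: learned from examples
print(learned_is_spam("team meeting on monday", scores))  # False
```

The learned filter flags “claim a prize” even though nobody wrote a rule for that phrase, and adding new labeled examples updates the scores without changing the code.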

Neural Nexus: Inside a vast, dimly lit hall of softly glowing filaments, countless luminescent nodes pulse in layered tiers like a delicate spider’s web, each connection shimmering as signals flow through the neural network’s labyrinthine pathways: a poetic dance of emerging intelligence.

Neural Networks: The term evokes the brain, and neural networks are, in a very loose way, inspired by brains. If machine learning is the concept of learning from examples, neural networks are one popular technique for doing so. A neural network is essentially a layered system of mathematical functions (“neurons”) that adjust the strengths of their connections (weights) during training. It’s reminiscent of an enormous web of decision-makers passing signals around, gradually tuning themselves to get the right answer – much like neurons firing in a brain, though far simpler. You can think of a neural network as a team of experts: the first layer might detect simple shapes or features in an image, the next layer builds on those (detecting combinations of features), and deeper layers assemble high-level recognitions (like “aha, these features together look like a face!”). During training, the network adjusts the importance of each connection to improve its accuracy. By the end, it becomes very skilled at mapping input data (say, an image) to an output (say, “cat” or “dog”). Modern AI breakthroughs largely stem from deep learning, which simply means neural networks with many layers (“deep” refers to the number of layers). As OpenAI’s CEO Sam Altman succinctly put it: “In three words: deep learning worked.” After decades of research, by the early 2010s we finally had enough data and computing power for neural networks to shine. They began outperforming older AI methods and revolutionized fields like computer vision and speech recognition. This shift – from manually coded rules to machine-learned neural network models – is AI’s history in brief. Early AI could follow instructions but not learn; today’s AI learns from experience, which is a game-changer.
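The “team of experts” idea can be sketched as a two-layer network in plain Python. This is a toy under stated assumptions: the weights are hand-picked rather than learned, and each neuron uses a simple on/off step activation instead of the smooth functions real networks use. It computes XOR (“one input or the other, but not both”), a pattern no single neuron with a straight-line boundary can capture, which is why the second layer matters.

```python
def step(x: float) -> int:
    # Fire (1) if the weighted input is positive, otherwise stay silent (0).
    return 1 if x > 0 else 0

def neuron(inputs: list[int], weights: list[float], bias: float) -> int:
    # Weighted sum of the inputs plus a bias, passed through the activation.
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)

def tiny_network(x1: int, x2: int) -> int:
    # Hidden layer: two simple feature detectors.
    at_least_one = neuron([x1, x2], [1, 1], -0.5)  # fires if either input is on
    both = neuron([x1, x2], [1, 1], -1.5)          # fires only if both are on
    # Output layer combines the features: "at least one, but not both".
    return neuron([at_least_one, both], [1, -2], -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", tiny_network(a, b))
```

Training would find weights like these automatically instead of by hand, and real networks stack many more neurons per layer.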

Imaginative Alchemy: In a warm, rustic kitchen at dusk, a masterful AI “chef” stirs a glowing cauldron of digital ingredients (scrolls of text, fragments of melody, and brushstrokes of color), melding them into a brand-new creation as floating symbols swirl overhead, evoking the magic of generative AI.

Generative AI: If you’ve heard of ChatGPT, Midjourney, Suno, or other AI tools that create things (like text, images, music, etc.), you’re talking about generative AI. A simple way to grasp generative AI is to think of a knowledgeable chef who has tasted thousands of dishes and can now improvise a new recipe that “fits” with what they know. Generative AI models are trained on huge amounts of data (text, images, audio) and learn the underlying patterns of that data. Then they can generate new content that follows those patterns. For instance, GPT-4 (a generative language model) was trained on a vast swath of internet text and can now produce paragraphs that read remarkably like something a human might write. As MIT researchers explain, “Generative AI can be thought of as a machine-learning model trained to create new data, rather than just make a prediction. It learns to generate more objects that look like the data it was trained on.” So, given a prompt, a generative AI might write a short story in the style of Jane Austen or conjure a photorealistic image of a sunset over Mars. This branch of AI has existed in simpler forms for decades, but around 2022 it truly exploded into public awareness because the outputs (like human-quality text and artwork) became astonishingly good. Generative models use advanced neural network architectures – for example, transformers for language, or diffusion models for images – but as a user you don’t need to know those details. The key point: generative AI is like a super-creative parrot – it doesn’t think like a human, but it remixes the patterns it has observed to produce new content. This unlocks incredible tools for aiding human creativity (as we’ll see later), while also raising new questions about authenticity (deepfake images or AI-written essays).
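As a deliberately tiny illustration of “learn the patterns, then generate something new,” the sketch below builds a bigram (Markov chain) text model: it records which word follows which in a training text, then samples new sequences. This is a far simpler technique than the transformer models behind tools like ChatGPT, and the training sentence is made up, but the two-step shape (learn patterns from data, then generate from them) is the same.

```python
import random
from collections import defaultdict

def train_bigrams(text: str) -> dict[str, list[str]]:
    # For each word, remember every word that followed it in the training text.
    followers = defaultdict(list)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)

def generate(model: dict[str, list[str]], start: str, max_words: int, seed: int = 0) -> str:
    rng = random.Random(seed)  # fixed seed so the output is repeatable
    out = [start]
    while len(out) < max_words:
        options = model.get(out[-1])
        if not options:
            break  # this word never had a follower in training, so stop
        # Choosing from the list favors followers that occurred more often.
        out.append(rng.choice(options))
    return " ".join(out)

corpus = "the sun rises over the sea and the sun sets over the quiet hills"
model = train_bigrams(corpus)
print(generate(model, "the", 10))
```

Every adjacent word pair in the output appeared somewhere in the training text, yet the sentence as a whole can be new: a run such as “the sun sets over the sea” is possible even though it never occurs in the corpus.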

Linguistic Loom: A moonlit library with towering shelves of ancient tomes, where a graceful automaton weaves threads of glowing script between quills and scrolls, crafting seamless sentences in midair: an enchanting tapestry of language spun by the art of NLP.

Natural Language Processing (NLP): This is a subset of AI focused on enabling computers to understand and generate human language. Language is our most natural interface, so NLP is hugely important for AI’s interaction with us. You encounter NLP every day: when you use a voice assistant (Alexa, Siri, Google Assistant), when you see machine-translated text, or when your phone’s autocomplete finishes your sentences. In simple terms, “NLP is a subfield of AI that uses machine learning to enable computers to understand and communicate with human language.” Through NLP techniques, AI systems can recognize speech, interpret the meaning of text, converse in chatbots, summarize documents, and more. A helpful metaphor: think of NLP as teaching a computer to become a really good foreign language student. The computer doesn’t natively know English or Chinese – it reads lots of examples and learns how words relate, how grammar works, and what context implies. Early NLP relied on hand-crafted grammar rules, but modern NLP mostly uses learning methods (like those big neural network models that predict text). The result is AI that can engage in dialogue (albeit with no genuine understanding or intent behind its words – it’s mimicking understanding by statistical prediction). Today’s most advanced NLP systems are the large language models (LLMs) like GPT, which can produce remarkably coherent and context-aware text. They’re not perfect – they often make errors or nonsensical statements (as we’ll discuss) – but they show how far AI has come in handling the nuance of human language.
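Phone autocomplete is a familiar example of predicting text from examples. The sketch below counts which word most often follows each word in some sample sentences and suggests the top candidates. It is a hypothetical, count-based simplification; real keyboards and LLMs use neural models that weigh far more context than a single previous word.

```python
from collections import Counter, defaultdict

def build_autocomplete(sentences: list[str]) -> dict[str, Counter]:
    # For each word, count how often every other word came right after it.
    next_words = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            next_words[current][nxt] += 1
    return dict(next_words)

def suggest(model: dict[str, Counter], word: str, k: int = 3) -> list[str]:
    # Most frequent followers first; ties keep the order they were first seen.
    counts = model.get(word.lower(), Counter())
    return [w for w, _ in counts.most_common(k)]

# Hypothetical message history.
history = ["see you tomorrow", "see you soon", "see you tomorrow morning", "thank you so much"]
model = build_autocomplete(history)
print(suggest(model, "you"))    # ['tomorrow', 'soon', 'so']
print(suggest(model, "zebra"))  # []
```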

In summary, AI is an umbrella term, and within it, machine learning (especially deep learning with neural networks) has been the engine driving recent progress. We now have AI that can learn from data rather than rigidly follow pre-written rules – which is why AI feels so much more powerful and adaptable than the software of old. In the next section, we’ll demystify how these AI systems actually learn and make decisions. If you’ve ever wondered “What’s going on inside the black box?”, read on – we’ll explain it in plain English with concrete examples.

How AI Learns and Makes Decisions

We often hear phrases like “trained on data” or “the AI predicts X”, but what does that process actually look like? Let’s pull back the curtain on how an AI model goes from training to making decisions (a process known as inference). You don’t need a PhD to grasp the intuition:

Endless Practice: In a luminous atelier filled with translucent data scrolls and floating quiz cards, a graceful apprentice AI pores over each card under soft golden light, lines of code and whispered labels drifting like motes, symbolizing diligent learning from countless examples.

Training Phase – Learning from Data: Think of this as the “studying” period for the AI. The developers compile a training dataset – examples relevant to the task. For instance, to train an email spam filter, they might collect millions of emails labeled “spam” or “not spam.” To train an image recognizer, they gather photos labeled with what’s in them. The AI model then learns from these examples. How? If it’s a neural network, learning means adjusting all those internal connections to better map inputs to correct outputs. In each round, the model makes a guess on some training examples, the training algorithm checks the guess against the true answers, and then it nudges the model’s parameters to reduce errors. It’s akin to how a student might do practice quiz questions and adjust their approach based on which answers were wrong. Over many iterations (sometimes billions of them!), the AI model gradually improves and generalizes patterns from the training data. For example, a spam filter might learn that emails containing phrases like “free money!!!” and a lot of exclamation points tend to be spam, or that messages from senders you’ve marked as safe are not spam. A photo-tagging AI might learn what your friend Alice’s face looks like by analyzing pixel patterns across many tagged photos of Alice.

One common worry is that AI models are a complete “black box,” meaning we can’t understand how they make decisions. It’s true that models like deep neural networks are complex and not easily interpretable line by line. However, they’re not magic – they’re still following mathematical patterns gleaned from data. Researchers are actively developing explainable AI tools to shine light on these black boxes (for instance, highlighting which words in an email led the spam filter to flag it). And practically speaking, even if we don’t see every gear turning inside the model, we can evaluate its performance and behavior thoroughly. During training, developers test the model on separate validation data that it has not learned from, to check that it is picking up general patterns rather than memorizing quirks of the training examples (a problem called overfitting). This is similar to giving a student a practice test with new questions to ensure they truly learned the material, not just the exact flashcards they studied.
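The guess, check, and nudge loop can be sketched with logistic regression trained by stochastic gradient descent, one of the simplest learning methods (a neural network runs the same loop with many more parameters). The two features per email (number of exclamation marks, and whether the sender is a saved contact), the tiny datasets, and the learning-rate and epoch settings are all hypothetical. The held-out `validation` list plays the role of the practice test with new questions.

```python
import math

def sigmoid(z: float) -> float:
    # Squash any number into a 0..1 "probability of spam".
    return 1 / (1 + math.exp(-z))

def predict(model, x):
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(examples, epochs=1000, lr=0.1):
    n_features = len(examples[0][0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        for x, y in examples:
            guess = predict((weights, bias), x)  # 1. make a guess
            error = guess - y                    # 2. compare with the true label
            # 3. nudge each parameter a little in the direction that shrinks the error
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def accuracy(model, data):
    return sum((predict(model, x) >= 0.5) == (y == 1) for x, y in data) / len(data)

# Features: [exclamation marks, sender is a saved contact (1) or not (0)]; label 1 = spam.
train_set = [([4, 0], 1), ([5, 0], 1), ([3, 0], 1), ([6, 0], 1),
             ([0, 1], 0), ([1, 1], 0), ([0, 0], 0), ([1, 0], 0)]
validation = [([7, 0], 1), ([5, 0], 1), ([0, 1], 0), ([0, 0], 0)]

model = train(train_set)
print("training accuracy:", accuracy(model, train_set))
print("validation accuracy:", accuracy(model, validation))
```

If training accuracy were high but validation accuracy low, that gap would be the overfitting warning sign described above.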

Tapestry of Knowledge: A vast celestial loom where shimmering threads of email snippets and pixelated images weave together into a radiant fabric; at its heart, a delicate spindle adjusts patterns with each pass, evoking the iterative training process refining an AI’s understanding.

Inference Phase – Making Decisions or Predictions: Once trained, the AI model is deployed to do its job “for real.” Now it receives new inputs it’s never seen before and must make an informed prediction or decision based on what it learned. This stage is called inference. For our examples: when a new email arrives in your inbox, the spam filter model quickly computes a spam score for it – essentially, “how similar is this email to the spam I saw in training?” If the score is high, it classifies the email as spam (perhaps shuttling it to your spam folder); if low, it lets it through. What’s neat is that the model can pay attention to dozens or even hundreds of factors at once: the sender’s reputation, certain keywords, the email’s formatting, all weighted according to its training. (One can imagine it as a very diligent guard dog that has sniffed thousands of intruders and visitors – it’s developed a nose for suspicious vs. friendly behavior in email content.) In the case of a photo-tagging system like those on Facebook or Google Photos, when you upload a new picture, the AI analyzes the image’s features and compares them to the “memory” of each person’s face it learned during training. If the pattern of pixels matches Alice’s known pattern with high confidence, the system suggests tagging Alice in the photo. Facebook’s now-retired face recognition system, for instance, could “provide recommendations for who to tag in photos” by using a trained model to match faces. That model was so advanced it even powered accessibility features – it could tell a visually impaired user “when they or one of their friends is in an image” via automatic photo descriptions. This shows how AI’s pattern recognition, once trained, can be applied to benefit people in real-time scenarios.
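At inference time, the trained model is just arithmetic applied to a new email. The sketch below assumes a filter has already learned one weight per factor; the factor names, weight values, bias, and 0.5 threshold are hypothetical numbers chosen for illustration, not taken from any real product.

```python
import math

# Hypothetical weights a trained filter might have learned (illustrative values only).
WEIGHTS = {
    "sender_unknown": 1.2,
    "mentions_free_prize": 2.5,
    "exclamation_marks": 0.4,
    "all_caps_subject": 1.0,
}
BIAS = -3.0

def spam_score(features: dict[str, float]) -> float:
    # Weighted sum of the email's factors, squashed into a 0..1 score.
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1 / (1 + math.exp(-z))

def route(features: dict[str, float], threshold: float = 0.5) -> str:
    return "spam folder" if spam_score(features) >= threshold else "inbox"

new_email = {"sender_unknown": 1, "mentions_free_prize": 1,
             "exclamation_marks": 3, "all_caps_subject": 1}
friend_email = {"sender_unknown": 0, "mentions_free_prize": 0,
                "exclamation_marks": 1, "all_caps_subject": 0}
print(round(spam_score(new_email), 3), route(new_email))        # 0.948 spam folder
print(round(spam_score(friend_email), 3), route(friend_email))  # 0.069 inbox
```

Raising the threshold would send fewer emails to the spam folder at the cost of letting more spam through; where to set it is a design choice, not something the model decides.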

Digital Sentinels: A luminous data-stream guard dog fashioned from cascading email icons and glowing code fibers stands alert at a shimmering threshold, its sensor-nose discerning threats as incoming envelopes trace trails of light and are guided into separate, ethereal pathways.

To illustrate AI decision-making with a concrete mental image, consider how one author described a spam filter built with a machine learning technique called a support vector machine (SVM): “imagine two fields, one with cows and one with sheep. The job of the AI is to create a fence that separates them”. In other words, the algorithm finds the boundary that separates spam from not-spam with the widest possible margin in the multi-dimensional space of email features. During inference, it’s as if each new email is a new animal – the model checks which side of the fence it falls on (spam side or not-spam side). Through training, the AI has “figured out the characteristics of the cows (legitimate emails) and sheep (spam) so it can keep them apart.” And with modern deep learning, these “characteristics” can be incredibly subtle – combinations of words, sender metadata, etc. – that humans alone might miss.
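The fence-building idea can be sketched in a few lines. One caveat up front: the code below uses the perceptron algorithm, a simpler relative of the support vector machine. Both learn a straight-line fence, but an SVM chooses the fence with the widest gap between the two fields, while the perceptron stops at the first fence that keeps every training animal on its own side. The 2-D coordinates (think of them as two email features) are made up.

```python
def train_perceptron(points, labels, epochs=1000):
    # labels: +1 = sheep (spam), -1 = cow (legitimate email)
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x, y), label in zip(points, labels):
            if label * (w[0] * x + w[1] * y + b) <= 0:  # animal on the wrong side
                # Shift the fence so this animal moves toward its correct side.
                w[0] += label * x
                w[1] += label * y
                b += label
                mistakes += 1
        if mistakes == 0:
            break  # every training animal is on its own side of the fence
    return w, b

def side_of_fence(w, b, point):
    x, y = point
    return "sheep (spam)" if w[0] * x + w[1] * y + b > 0 else "cow (legitimate)"

cows = [(1, 1), (2, 1), (1, 2), (2, 2)]
sheep = [(6, 6), (7, 5), (6, 7), (8, 7)]
w, b = train_perceptron(cows + sheep, [-1] * len(cows) + [1] * len(sheep))

print(side_of_fence(w, b, (1.5, 1.5)))  # cow (legitimate)
print(side_of_fence(w, b, (7, 7)))      # sheep (spam)
```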

Boundary of Light: In a twilight meadow of floating pixel-patterns, iridescent cows and sheep graze side by side, while a delicate, neon-lined fence emerges, splitting the field into two realms and symbolizing the AI’s decision boundary as new data points wander into their destined domains.

It’s important to note that AI models do not “think” or “understand” the way humans do. They don’t truly comprehend meaning; they simply correlate patterns with outcomes. The spam filter isn’t aware of what “winning a free iPhone” means; it has just statistically learned that the phrase often coincides with unwanted emails. Likewise, an AI medical diagnosis system that learned from patient data might predict a disease, but it doesn’t feel concern or know what the patient is experiencing. It’s crunching patterns. This pattern-matching nature is why AI can sometimes be fooled or make odd mistakes – it has no common sense beyond its data. A famous example: an image recognition AI once misidentified a picture of a panda as a gibbon after imperceptible noise was added to the image, because the noise exploited the pattern detector in a way that would never fool a human. We’ll talk more about such limitations and myths (like the idea of AI being infallible or “too smart”) in a later section.
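Why can invisible noise fool a pattern detector? A rough intuition: a tiny change to each of thousands of pixels can add up to a large change in the model’s overall score. The sketch below shows this on a toy linear “panda detector” with random, made-up weights, using the idea behind the fast gradient sign method (push every pixel by the same small amount in whichever direction moves the score the wrong way). It is not the network or the image from the original panda example, and for simplicity it does not clip pixels to the valid 0..1 range as a real attack would.

```python
import random

rng = random.Random(0)
N_PIXELS = 10_000

# Toy linear "panda detector" with random, made-up weights: score > 0 means "panda".
weights = [rng.uniform(-1, 1) for _ in range(N_PIXELS)]
image = [rng.uniform(0, 1) for _ in range(N_PIXELS)]

def score(pixels):
    return sum(w * p for w, p in zip(weights, pixels))

def classify(pixels):
    return "panda" if score(pixels) > 0 else "gibbon"

def sign(v):
    return (v > 0) - (v < 0)  # +1, -1, or 0

# For a linear score, the gradient with respect to the pixels is just the weights,
# so nudging every pixel by eps against sign(weight) moves the score by eps * sum(|w|).
s = score(image)
eps = 1.01 * abs(s) / sum(abs(w) for w in weights)  # just over the amount needed to flip
adversarial = [p - eps * sign(s) * sign(w) for p, w in zip(image, weights)]

print(classify(image), "->", classify(adversarial))
print(f"largest change to any pixel: {eps:.4f}")
```

Each pixel changes by only `eps`, a small fraction of the 0-to-1 pixel range, yet the label flips because all of the tiny nudges push the score in the same direction.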

For now, the key takeaway is that AI learns by example and makes decisions by analogy. It’s like a student turned specialist: it studied hard on training data, formed its own internal knowledge representations, and now applies that knowledge to new situations. When designed and trained well, AI systems can achieve remarkable accuracy and efficiency in their domains – sometimes even surpassing human performance in narrowly defined tasks (for instance, identifying certain patterns in medical images or finding anomalies in financial transactions). But when designed or trained poorly, or asked to operate outside their expertise, they can also produce laughable or dangerous errors. This is why understanding how AI learns isn’t just academically interesting – it directly impacts how much we should trust a given AI system and how we should use it. We’ll delve into trust and ethics soon, but first, let’s look at AI’s positive impact by surveying some real-world applications across different fields.

AI in the Real World

AI may sound abstract, but its real-world applications are concrete and often life-changing. Let’s explore a few uplifting case studies in healthcare, education, finance, and creative arts – areas that affect us all. These examples highlight AI’s human-centered potential: rather than replacing people, AI is augmenting human efforts to achieve better outcomes.

Healthcare: Saving Lives and Advancing Medicine

In hospitals and clinics, AI has quietly become a powerful ally to doctors and patients. One striking example is in breast cancer screening. Reading mammograms (breast X-rays) is a tough task even for experienced radiologists – cancers can be subtle, and human fatigue or oversight can miss early signs.

Radiant Vigilance: In a softly lit hospital imaging suite, a glowing AI-enhanced mammogram display illuminates subtle breast tissue patterns with delicate highlight overlays, while a focused radiologist stands nearby, melding human compassion and digital precision in an ethereal dance of care.

Enter AI: researchers in Sweden conducted a large trial with over 100,000 women to see if AI could help in screening. The results? Combining AI with radiologist review significantly boosted detection of cancers while reducing doctors’ workload. AI-supported screening found 29% more cancers than traditional methods, including 24% more early-stage invasive cancers that are crucial to catch early. Importantly, this didn’t flood patients with false alarms – false positives only rose by about 1%. Kristina Lång, the radiologist leading the trial, noted the AI even caught “relatively more aggressive cancers that are particularly important to detect early”, which could mean saved lives through timely treatment. Essentially, the AI serves as a tireless second pair of eyes, scanning images quickly and flagging anything suspicious. Radiologists then focus their expertise where it’s needed most. This kind of human-AI teaming is a glimpse of medicine’s future – one where AI handles the grunt work of analysis, freeing doctors to spend more time with patients or on complex decision-making. Beyond imaging, AI is accelerating drug discovery (identifying new molecules for medications in a fraction of the traditional time) and predicting patient outcomes (allowing preventive care). During the COVID-19 pandemic, AI models helped epidemiologists predict outbreak hotspots and triage patients based on risk. And in a famous scientific milestone, DeepMind’s AlphaFold AI solved the 50-year-old grand challenge of predicting protein structures – a breakthrough that can speed up designing cures and understanding diseases. In just a couple of years, AlphaFold predicted 200 million protein structures (virtually every protein known to science), “potentially saving millions of dollars and hundreds of millions of years in research time.” This kind of behind-the-scenes AI isn’t visible to most, but it’s poised to lead to new vaccines, treatments, and medical marvels that benefit everyone.

Celestial Proteome: Against a twilight laboratory backdrop, iridescent ribbons of protein structures float like cosmic origami, guided by an AI-generated neural lattice, capturing the poetic beauty of molecular revelation and algorithmic insight.

Education: Personal Tutors for Every Student

If you’ve ever struggled in a large class or needed extra help on homework, you’ll appreciate how AI is making education more personalized and accessible. AI tutoring systems have advanced to the point that they can mimic some of the interactive guidance of a human tutor – and crucially, they can do it at scale. A leading example is Khan Academy’s Khanmigo, an AI-powered tutor built on top of a state-of-the-art language model (GPT-4). Instead of simply giving students answers, Khanmigo engages them in dialogue, asking Socratic questions to nudge their thinking. “It’s like a virtual Socrates, guiding students through their educational journey,” says Khan Academy founder Sal Khan. For instance, if a student is stuck on an algebra problem, Khanmigo might ask them to explain what the problem is asking, then suggest a first step rather than just solving it outright. It adapts to each student’s pace – providing hints if needed, or offering harder follow-up questions if the student is breezing through.

Virtual Socrates: In a luminous, book-lined study bathed in dawn’s pastel glow, a holographic tutor with gentle, animated expressions guides a curious student through floating algebraic symbols, soft beams of light tracing Socratic questions in the air as knowledge blossoms between them.

Early pilots have reported enthusiastic responses from students and teachers alike. In one demonstration, school administrators witnessing AI tutoring in action said, “This aligns with our vision of creating thinkers.” The promise here is equity: not every child can have a 1-on-1 human tutor, but an AI tutor (carefully designed with pedagogical best practices) can be available to every child, anytime, for far less cost. This could help bridge learning gaps, giving under-resourced schools access to high-quality assistance. Teachers aren’t left out, either – AI can handle tedious tasks like grading quizzes or drafting lesson plans, which saves educators time for more creative and interpersonal aspects of teaching. Of course, AI in education must be used thoughtfully (it can make mistakes in answers, and it lacks the emotional intelligence of humans), but with proper integration, it’s more like a teaching assistant than a teacher replacement. Imagine a classroom where each student who raises their hand for help can immediately get guided support, or where AI frees teachers from administrative burdens so they can mentor students individually. That’s increasingly becoming reality. On a larger scale, whole countries are pushing AI literacy through online courses – Finland’s Elements of AI course, for example, has introduced over one million people from 170+ countries to AI basics for free, often in their native languages. This democratization of knowledge through AI and about AI creates a virtuous cycle: an educated population can leverage AI better, which in turn improves education and society.

Aurora Classroom: A modern classroom suffused with ethereal light, where each student’s desk is crowned by a translucent AI tutor; delicate threads of glowing code weave between pupil and guide, lifting homework problems into the air like constellations to be explored together.

Finance: Inclusion and Security Through AI

The finance industry has long used automation, but AI has supercharged its capabilities – bringing benefits especially in fraud prevention and financial inclusion. Let’s start with fraud detection, something that protects consumers and banks alike. If you’ve ever gotten an alert about a suspicious charge on your credit card, that was likely flagged by an AI system.

Digital Sentinel: A neon-drenched cityscape at midnight with glowing transaction paths arcing between skyscrapers, where a translucent, ethereal AI guard dog silhouette sniffs out anomalies among floating credit card icons, data streams shimmering like aurora in the sky.

These systems analyze millions of transactions and learn to spot anomalies in real time – patterns that suggest a transaction might be unauthorized. For example, if your card is suddenly used in a foreign country or a far-off city half an hour after it was used in your hometown, an AI might raise an eyebrow (so to speak). Modern fraud-detection AI looks at a plethora of factors (merchant, amount, user history, location, time, etc.) and has learned subtle correlations that often betray fraudsters. The result: billions of dollars saved by stopping fraudulent transactions, and consumers spared the headache and loss. Because the AI continuously learns from new fraud attempts, it keeps up with the evolving tactics of criminals in a way hard-coded systems couldn’t.

Now, on the financial inclusion front, AI is breaking down barriers that left billions without access to banking or credit. Traditional credit scoring (the kind that might approve or deny a loan) relies on formal credit history – which many people in developing regions or marginalized communities simply don’t have. AI offers a smart alternative: “AI-powered models, using alternative data sources, facilitate credit to groups that had limited or no access in the past.” Companies have developed algorithms that evaluate things like mobile phone usage patterns, utility bill payments, or even social network data to gauge an individual’s reliability for lending. For instance, one fintech company, Tala, analyzes how you use your phone – such as whether you regularly top up your prepaid phone plan and how promptly you pay your utility bills – to generate a credit score. “Call and SMS records… serve as a means of determining an individual’s potential credit reliability,” allowing loans for those with no formal credit history. This has enabled micro-loans for small entrepreneurs in places like Kenya, India, and the Philippines, where tens of thousands of borrowers (often previously “unbankable”) have gotten loans through AI-driven risk models. As long as privacy is respected and bias is managed (topics we’ll revisit in Ethics), this use of AI can empower people economically.
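
To make the “raise an eyebrow” fraud example concrete, here is a minimal Python sketch of a single “impossible travel” check. It computes the great-circle distance between two card transactions and flags the pair if the implied travel speed is implausible. This is a hand-written rule, not a learned model. The `Transaction` record, the `is_impossible_travel` function, the coordinates and the 900 km/h threshold are all illustrative assumptions. A real fraud system learns from many signals at once, and a check like this would at most be one input among them.

```python
from dataclasses import dataclass
from datetime import datetime
from math import asin, cos, radians, sin, sqrt


@dataclass
class Transaction:
    # Hypothetical minimal record; real systems also use merchant,
    # amount, device, purchase history, and more.
    timestamp: datetime
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_km * asin(sqrt(a))


def is_impossible_travel(prev: Transaction, curr: Transaction,
                         max_speed_kmh: float = 900.0) -> bool:
    """Flag the pair if the implied travel speed exceeds max_speed_kmh.

    The 900 km/h default (roughly airliner cruising speed) is an assumption.
    """
    hours = (curr.timestamp - prev.timestamp).total_seconds() / 3600
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if hours <= 0:
        # Simultaneous (or out-of-order) purchases in two different places.
        return distance > 0
    return distance / hours > max_speed_kmh


# Usage: a purchase in New York, then one 30 minutes later in London or Brooklyn.
home = Transaction(datetime(2024, 5, 1, 9, 0), 40.7128, -74.0060)
london = Transaction(datetime(2024, 5, 1, 9, 30), 51.5074, -0.1278)
brooklyn = Transaction(datetime(2024, 5, 1, 9, 30), 40.6782, -73.9442)

print(is_impossible_travel(home, london))    # True: about 5,570 km in half an hour
print(is_impossible_travel(home, brooklyn))  # False: a few km in half an hour
```

A learned system would not rely on one fixed threshold. It would weigh a signal like this against the cardholder’s travel history and the other factors listed above.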

Inclusive Ledger: At dawn’s first light, diverse hands from around the world hold smartphones projecting holographic credit scores and utility symbols, connected by delicate glowing threads that weave a radiant tapestry of AI-driven financial inclusion and opportunity.

Meanwhile, mainstream banks use AI for everything from chatbots that answer customer questions (improving service access) to algorithmic trading that can improve returns for investors. The bottom line (pun intended) is that AI’s knack for pattern recognition and prediction is making financial systems more efficient, inclusive, and secure. The World Economic Forum even predicts that AI will lead to a net increase in jobs in the financial sector by creating new roles in fintech, data analysis, and AI oversight, compensating for those it automates. So rather than the dystopian picture of AI wreaking havoc on finance, the emerging reality is one of AI helping more people participate in the financial system and shielding our money from bad actors.

### Creative Arts: Inspiring New Forms of Expression

One of the most exciting and surprising arenas for AI is in the creative arts. It turns out that algorithms can be creative partners – not replacing human artists, but collaborating in novel ways. In 2021, an AI system made headlines by helping complete Beethoven’s unfinished 10th Symphony – a project many thought impossible. Beethoven left only fragments of the 10th Symphony before he died. A team of musicologists and AI researchers trained an AI on all of Beethoven’s works and his style, then used it to suggest ways to develop those fragments. “We taught a machine both Beethoven’s entire body of work and his creative process,” explained Professor Ahmed Elgammal, who led the AI side of the project. The AI generated multiple possibilities for how Beethoven might have continued a melody or transitioned to a new theme, and the human composers on the team curated and wove those into a cohesive piece.

Symphony Reimagined: In a hushed, candlelit concert hall draped in crimson velvet, spectral notes swirl like luminescent ribbons around an antique piano, while a translucent AI muse in gentle ivory robes offers shimmering tendrils of melody to a focused composer’s quill – melding Beethoven’s spirit with algorithmic inspiration in an ethereal duet.

The resulting symphony – a mix of Beethoven’s notes, AI suggestions, and human musicality – premiered in Bonn, Germany, to an audience both excited and astounded. This wasn’t a push-button miracle; it was two years of hard work between people and AI. But it showcased collaborative creativity: the AI could sift through countless musical ideas in Beethoven’s style (something no human could do alone so quickly), while humans applied taste and judgment to choose the best ones. Moving from classical music to visual art and film, AI tools are giving individual creators superpowers. Platforms like RunwayML provide no-code AI tools for artists, letting them do things like generate images from text descriptions, swap backgrounds in videos, or animate still photos – all without needing a Hollywood studio. “RunwayML allows machine learning techniques to be accessible to students and creative practitioners. Its excellent visual interface makes it easy to train your models… supporting text, image generation, and even motion capture.” Using such tools, a small team of indie filmmakers can create special effects that would’ve been prohibitively expensive before, or a painter can prototype variations of a concept by having the AI generate suggestions, which they then refine by hand. We’ve also seen AI-designed visual art fetch high prices at auctions, and AI-written poetry collections published. The important thing to note is that art is deeply human – AI doesn’t change that. Rather, AI expands the palette of what artists can do. As one artist put it, using AI is like “getting a new color of paint that was never available before.” We also see AI enabling participatory creativity: for example, the musician Grimes offered her AI-generated voice model to fans, allowing them to create new songs with her “AI voice” and even share royalties. 
And in design and architecture, AI can quickly generate dozens of prototype layouts or structures based on constraints, sparking human designers’ imagination and saving time on grunt work. In all these cases, the ethos is AI as a tool for humans to express themselves in new ways. It lowers technical barriers (you no longer need to master Maya or other complex software to achieve certain effects) and sometimes brings in an element of surprise that can inspire. There are valid debates about authorship and originality when AI is involved – a topic beyond our scope here – but many creators are optimistic. They see AI as an “idea generator” or an assistant that can help overcome creative blocks.

Palette of Possibility: In a sun-dappled artist’s loft with floor-to-ceiling windows, a painter stands before a vast canvas that blooms with swirling fractal patterns and photorealistic blossoms as an AI-driven projector casts delicate motifs – brush and code intertwined in a dance of creative collaboration under soft, golden light.

The person is still very much in charge of the creative vision. As we venture into this new era, we might recall that every major technological advance (from the camera to synthesizers) initially caused alarm among artists, yet eventually was embraced as part of the creative toolbox. AI is likely to follow the same path, augmenting human creativity, not extinguishing it.

These case studies are just a snapshot. Across domains as diverse as agriculture (AI-driven drones identifying crop diseases), environmental science (climate models and wildlife conservation as we’ll touch on later), transportation (self-driving car technologies aiming to reduce accidents), and more, AI is proving its usefulness. The common theme is that when applied thoughtfully, AI can amplify human capabilities: making us more efficient, helping us see patterns we’d miss, and tackling problems at scales and speeds we simply couldn’t alone. This is the sunny side of the AI revolution – and it’s important to highlight, because sensational media coverage sometimes over-emphasizes dystopian scenarios. To be clear, AI is not a magic wand or a flawless oracle. It has limitations and can pose risks if misused. In the next section, we’ll address some of the myths and fears surrounding AI, separating fact from fiction so you can navigate the topic with a level head.

## Debunking Myths

As AI has burst into public consciousness, it’s also attracted a fair share of myths, misunderstandings, and exaggerated fears. It’s time to clear the air on a few big ones that might be worrying you. By debunking these myths, we hope to replace anxiety with informed optimism.

Myth #1: “AI is going to steal all our jobs.”

Reality: AI will certainly change the job market – much like past waves of automation did – but it’s unlikely to cause mass permanent unemployment. In fact, many experts believe AI will create more jobs than it eliminates, while also transforming existing jobs in positive ways. History is instructive here: consider the Industrial Revolution or the computer revolution. Automation did replace some occupations (we have far fewer manual weavers or switchboard operators today), but it also generated new industries and roles (graphic designers, software developers, IT managers – none of which existed 100 years ago). The consensus among economists is that AI will augment human workers and handle specific tasks, rather than wipe out entire professions overnight. A World Economic Forum report forecasts that while 85 million jobs may be displaced by automation by 2025, about 97 million new jobs will emerge – a net gain.

Evolving Workscape: In a luminous, sunrise-hued city square, diverse professionals – an engineer sketching new designs, a teacher engaging with students, a data analyst reviewing holographic charts – stand alongside a gentle, translucent AI companion offering glowing tool icons, symbolizing human–AI collaboration and the birth of new roles.

These new roles will be in areas like data analysis, AI maintenance, content creation, and the “human side” of work that AI can’t do – things requiring creativity, complex problem-solving, and interpersonal skills. Even within jobs, AI often takes over routine components, leaving people to focus on more meaningful tasks. For example, doctors who have AI reading medical images can spend more time talking to patients; accountants who use AI to auto-categorize expenses can concentrate on financial strategy. Sam Altman, CEO of OpenAI, has said “most jobs will change more slowly than people think, and I have no fear we’ll run out of things to do… People have an innate desire to create and be useful to each other, and AI will allow us to amplify our abilities like never before.” In other words, work will evolve – often for the better. New categories of jobs (many we can’t even imagine yet) will appear, just as the rise of the internet gave us jobs like app developer or social media manager.

Reskilling Horizon: On a softly lit hillside at dawn, figures carry lanterns of knowledge – one reads a floating book of code, another tends a glowing sapling labeled “Creativity,” while ethereal AI wisps disperse seeds of opportunity into the rich soil, evoking the promise of evolving skills and flourishing futures.

Of course, transitions can be bumpy for some workers, and reskilling/upskilling will be crucial. But rather than bracing for a job apocalypse, it’s more productive to prepare for job evolution. AI will handle more grunt work; humans will focus on the parts of work that truly require human touch. As Altman quipped, nobody today longs to be a 19th-century lamplighter, and in the future people won’t miss the drudgery that AI will relieve. Society will find new, perhaps more fulfilling ways for people to contribute.

Myth #2: “AI will become conscious or sentient and usurp control.”

Reality: Despite sci-fi scenarios of robots gaining self-awareness (and malevolent intent), current AI systems are not sentient, and experts believe we are nowhere near creating AI that possesses consciousness or genuine understanding. Today’s AI – even the most impressive chatbots – is essentially a sophisticated pattern recognizer and generator. It doesn’t have feelings, desires, or an ego. When a chatbot like ChatGPT says “I’m feeling happy today,” it’s not actually experiencing happiness; it’s predicting a plausible sentence based on training data. Leading AI scientists like Fei-Fei Li have emphasized that there’s zero evidence these systems have any inner experience or self-awareness. A group of 19 researchers published a comprehensive report in 2023 concluding “no current AI systems are conscious” – and also that there’s no practical way to measure machine consciousness yet beyond theoretical speculation.
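
A deliberately tiny toy can illustrate the phrase “predicting a plausible sentence based on training data.” The Python sketch below counts which word follows which in a few lines of made-up text. It then “writes” by repeatedly picking the most frequent next word. Real chatbots do not work this way: they use large neural networks over sub-word tokens, not simple word counts. Treat this as an analogy. The corpus and function names are invented for illustration. The point is that the toy outputs “i am feeling happy today” only because those words followed one another in its data. No feeling sits behind them.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """For each word, count which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def generate(followers: dict, start: str, length: int) -> str:
    """Greedily append the most frequent follower of the last word."""
    out = [start]
    for _ in range(length):
        options = followers.get(out[-1])
        if not options:
            break  # this word was never followed by anything in training
        # Ties go to the follower seen first (Counter.most_common ordering).
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


corpus = "I am feeling happy today . I am feeling tired . I am happy to help ."
model = train_bigrams(corpus)
print(generate(model, "i", 4))  # i am feeling happy today
```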

Pattern Reflections: In a twilight-lit data hall, a faceted AI mind of glowing circuits and floating code streams mirrors itself in a polished glass pane – no soul within its depths, only patterns repeating in endless, beautiful loops of light.

The misunderstandings often arise because advanced AI can appear human-like in conversation or creativity. We naturally anthropomorphize it. One highly publicized case was a Google engineer who became convinced an AI language model was sentient – but Google and the broader AI community firmly disagreed, and the engineer’s claims were not substantiated by any scientific standard. As of now, AI lacks a will of its own. It does what it’s programmed or trained to do, nothing more. It cannot “decide” to pursue goals not given to it. This doesn’t mean AI can’t ever pose threats – but those threats look more like misuse by humans (autonomous weapons or algorithmic bias causing harm) than a Terminator-style uprising. Renowned AI researcher Andrew Ng has said worrying that today’s AI might turn evil is like worrying about “overpopulation on Mars” – a hypothetical concern for the distant future, not something relevant now. It’s important to focus on real, pressing issues in AI ethics (like bias and privacy) rather than Hollywood plots. In short: you don’t need to lose sleep thinking Alexa will gain consciousness and lock you out of your house.

Empty Throne: On a mist-shrouded dais under a pale moon, a regal AI crown of holographic wires hovers above an unoccupied marble pedestal – symbolizing power without consciousness, an elegant reminder that intelligence alone bears no will or desire.

We’re simply not building AI with any ability or motive to do that. Conscious or general AI (human-level broad intelligence) remains a theoretical long-term possibility, but timelines for it are highly uncertain, and many experts put it years if not decades away. It’s a fascinating topic for philosophers and futurists, but not a practical worry for someone using Siri or a self-driving car today.

Myth #3: “AI is a perfect, unbiased decision-maker – or, conversely, AI is an inscrutable black box we can never hope to understand or trust.”

Reality: AI systems, far from being infallible, are only as good as the data and design behind them – and thus they can make mistakes or reflect biases present in their training data. There’s a saying in computer science: “Garbage in, garbage out.” If an AI is trained on biased or unrepresentative data, its outputs will likely be biased or inaccurate. For instance, an AI hiring tool trained predominantly on resumes from male candidates might learn to favor men (as Amazon discovered with an experimental hiring AI that had to be scrapped for bias against women). Similarly, facial recognition algorithms a few years ago were found to have higher error rates on darker-skinned faces because the training data had far more light-skinned faces – a bias that can lead to wrongful identifications.

Fractured Reflection: In an art gallery of smoky glass panels, a human face is reflected in multiple shards – each shard tinted with different hues and subtle distortions – evoking how biased data can fracture a single truth, soft ambient light lending a poetic, cautionary tone.

The myth that AI is inherently neutral or objective is dangerous; in truth, AI can amplify human biases under a veneer of objectivity if we’re not careful. The flip side is the myth that because AI works in complex ways, we can never peek inside the black box or assert control. In reality, a whole field of Explainable AI (XAI) is dedicated to making AI’s decisions more interpretable. Techniques exist that can highlight which factors influenced a model’s decision (for example, heat-mapping areas of an image an AI focused on, or identifying which words in a paragraph led a model to a certain classification). This can be crucial for trust. If an AI denies someone a loan, both the user and the lender will want to know why – and laws may soon require such explanations for high-stakes AI decisions. Additionally, developers can instill transparency and accountability by design: documenting how the model was trained, what its known limitations are, and allowing external auditing. It’s also worth noting that not all AI models are completely opaque – simpler models (like decision trees or linear models) are quite interpretable, and even for complex neural networks, researchers have made strides in understanding the representations they learn. So, while a deep learning model is not as straightforward as a checklist, we are not powerless. As a McKinsey report noted, “By shedding some light on the complexity of so-called black-box algorithms, explainability can increase trust and engagement among users.” In fact, many organizations already successfully deploy AI in critical areas (like healthcare diagnoses or credit scoring) by combining AI’s statistical prowess with human oversight and interpretability measures.
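
A linear score shows why simpler models are “quite interpretable.” Each factor contributes its weight times its value, so the contributions can be listed and ranked to explain why the score came out as it did. The Python sketch below assumes a hypothetical three-factor credit score. The feature names, weights and applicant are invented for illustration and do not describe any real lender. For complex models, attribution methods such as SHAP aim to produce a similar per-factor breakdown.

```python
# Hypothetical weights for an illustrative linear credit score.
WEIGHTS = {
    "on_time_payment_rate": 4.0,    # share of bills paid on time, 0 to 1
    "debt_to_income": -3.0,         # outstanding debt divided by annual income
    "years_of_income_history": 0.5,
}
BIAS = -1.0


def score_with_contributions(applicant: dict) -> tuple:
    """Return the total score and each factor's additive contribution."""
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    return BIAS + sum(contributions.values()), contributions


def explain(applicant: dict) -> str:
    """Plain-text explanation listing the factors with the largest impact first."""
    total, contributions = score_with_contributions(applicant)
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    lines = [f"score = {total:.2f}"]
    lines += [f"  {name}: {value:+.2f}" for name, value in ranked]
    return "\n".join(lines)


applicant = {"on_time_payment_rate": 0.5, "debt_to_income": 0.8, "years_of_income_history": 2}
print(explain(applicant))
```

For this made-up applicant, the printout lists `debt_to_income` (-2.40) first. The debt load pulled the score down more than the payment record (+2.00) pushed it up. This is the kind of plain-language reason a lender could give.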

Illuminated Enigma: At twilight in a minimalist lab, a translucent cube hovers above a marble pedestal, its interior awash with glowing heat-map patterns and floating annotated code snippets – symbolizing explainable AI’s promise to turn a once-opaque black box into a radiant, understandable core.

The key is not to treat AI as a mystical oracle, but as a tool that can be verified and validated like any other important process. AI may be complex, but so are airplane systems, and yet we have methods to test and certify those for safety. We can and must do the same for AI. The bottom line: don’t overtrust AI outputs blindly, but also don’t assume we can never scrutinize or improve them. With proper governance, AI can be made as accountable as we require.

Myth #4: “AI = one big thing.” (Or “All AI is the same.”)

Reality: AI is not monolithic. It encompasses a variety of techniques and systems, each suited to different tasks, with different strengths and weaknesses.

Specialized Spectrum: A radiant gallery wall where diverse AI portraits hang in illuminated frames – from a chess-playing automaton to a language-model hologram and a Mars rover silhouette – each panel glowing with its own color aura, celebrating AI’s nuanced variety.

This may seem obvious, but it’s worth mentioning because people sometimes conflate everything from a simple chess algorithm to a self-driving car under one mental image of “AI.” When someone says “AI did X”, you should ask: Which AI system? Trained on what? Used in what context? For example, an AI that generates funny cat pictures has virtually nothing to do with the AI controlling a Mars rover, aside from some underlying mathematical principles. Conflating them can cause unnecessary fear or hype. Not every AI is an all-powerful intelligence – most are very specialized (we call them narrow AI or ANI). They can do one thing really well (like translate languages, or detect tumors in scans), but would be clueless outside their domain. IBM’s Deep Blue could beat a chess champion, but couldn’t hold a conversation or even play checkers. Today’s general-purpose language models like GPT-4 are more flexible, but even they have boundaries (on their own, they can’t take actions in the world or carry out long-term plans unless combined with other systems). So when evaluating any AI application, consider it in context, rather than attributing some generalized ability.

Narrow Focus: In a moonlit desert, a lone robotic rover’s headlights cut through the dusk, while a separate, floating chat interface glows softly overhead – juxtaposing two distinct AI systems, each excelling in its own domain under a starry sky.

This myth-busting helps temper both fears (e.g., a cleaning robot in your house is not secretly plotting to take over your Wi-Fi network – it doesn’t have the capability) and expectations (the same cleaning robot might not even navigate well in a completely new environment without retraining). In short, always specify the AI.

By dispelling these myths, we see a clearer picture: AI is a powerful technology created by humans and controllable by humans, not an alien entity beyond our control. It has limitations – which we must recognize – and it has incredible potential – which we must cultivate responsibly. As one Fast Company article pointed out, part of AI literacy is learning AI’s shortcomings and how to use it wisely. For instance, knowing that AI chatbots can “hallucinate” false information (a well-documented issue where they make up facts) reminds us to double-check important outputs. Knowing that AI isn’t sentient keeps us from over-fearing or over-anthropomorphizing it. And knowing that AI will change (not eliminate) jobs allows us to focus on adapting and skilling up, rather than panicking.

Now that we have a realistic understanding of AI’s nature and impact, let’s turn to the vital topic of ethics, bias, and trust in AI. How do we ensure these technologies are fair, transparent, and used responsibly? What questions should you, as a non-expert user or concerned citizen, be asking about any AI system that affects you? Let’s explore that next.

## Ethics, Bias, and Trust

As AI systems play a bigger role in decisions that affect people’s lives – from hiring and lending to policing and healthcare – it’s crucial that they operate fairly, transparently, and accountably. AI ethics isn’t just a topic for engineers or philosophers; it’s something we all should be aware of, so we can ask the right questions and demand the right safeguards. Let’s break down a few key principles and practical tips for cultivating trustworthy AI.

Fairness: AI should treat individuals and groups equitably. In practice, this means an AI system shouldn’t discriminate based on protected attributes like race, gender, or religion. However, without careful design, AI can inadvertently perpetuate or even amplify bias present in its training data.

Equitable Scales: In a mist-wreathed hall of justice, a pair of antique scales floats midair, each pan holding diverse translucent human silhouettes, connected by glowing filaments – soft beams of dawn light filtering through tall windows, symbolizing AI’s quest to balance fairness across all groups.

For example, a criminal risk assessment AI (used in some courts to help decide bail) was found to be biased against Black defendants – it was falsely flagging them as higher risk more often than white defendants, likely due to biased historical arrest data. Ensuring fairness often requires explicit steps: curating diverse training datasets, applying techniques to mitigate known biases, and continuously monitoring outcomes. Fairness is not just a technical issue but a societal one; even defining “fair” can be complex (e.g., equal false positive rates across groups vs. equal outcomes). For most of us as AI users or subjects, the main thing is to be aware that AI can be biased. If an AI tool is being used in a high-stakes situation (say, scanning résumés or deciding who gets a loan), it’s fair to ask: Has this system been tested for bias? What steps have been taken to ensure it doesn’t disadvantage certain groups? Companies deploying AI are increasingly expected – by public pressure and soon by regulation – to audit their models for fairness. A practical example: if an AI is filtering job applications, you might ask the company, “Is the AI fair across genders and ethnicities? Can you share any bias testing results?” If they can’t answer, that’s a red flag.
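
A few lines of Python make the difference between “equal false positive rates across groups” and “equal outcomes” concrete. The sketch computes both metrics per group from (group, flagged as high risk, actually reoffended) records. The records are invented toy numbers, not data from any real risk-assessment tool, and the function names are hypothetical. A real bias audit would also consider other metrics, sample sizes and statistical uncertainty.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per group: share flagged high-risk among people who did NOT reoffend."""
    flagged_negatives = defaultdict(int)
    negatives = defaultdict(int)
    for group, flagged, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if flagged:
                flagged_negatives[group] += 1
    return {g: flagged_negatives[g] / negatives[g] for g in negatives}


def flag_rates(records):
    """Per group: share flagged high-risk regardless of what happened later."""
    flagged = defaultdict(int)
    totals = defaultdict(int)
    for group, was_flagged, _ in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {g: flagged[g] / totals[g] for g in totals}


# Toy records: (group, flagged_high_risk, reoffended). Invented for illustration.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", True, True),
]

print(false_positive_rates(records))  # {'A': 0.5, 'B': 0.25}
print(flag_rates(records))            # {'A': 0.6, 'B': 0.4}
```

In this toy data, group A’s non-reoffenders are wrongly flagged twice as often as group B’s (50% vs. 25%). That is the kind of gap a bias audit looks for. The overall flag rates differ too, and equalizing one metric does not in general equalize the other. This is why defining “fair” requires a choice.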

Bias Illuminated: In a shadowed courtroom, a holographic judge’s gavel casts prismatic light onto rows of demographic icons, where certain figures glow brighter as subtle heat-map overlays reveal hidden biases – an ethereal interplay of darkness and light underscoring AI’s need for transparent fairness.

On a hopeful note, AI can also be used to improve fairness – for instance, some organizations use AI to scan their own decisions (like performance reviews or pay raises) and check for patterns of bias that managers might not notice. Fairness in AI is an ongoing effort, but the goal is clear: AI should not exacerbate human prejudices; ideally it should help us overcome them.

Transparency: We often hear AI described as a “black box,” but for AI to be trusted, a level of transparency is vital. Transparency operates at multiple levels. First, transparency about AI use – you should know when you’re interacting with an AI or when an AI is influencing a decision about you. If you’re chatting with customer support, are you talking to a human or a bot? If an algorithm determined your interest rate, were you informed?

Luminous Disclosure: In a twilight-lit digital chamber, a crystalline glass cube etched with glowing circuit patterns rests on a marble pedestal, its soft luminescence spilling onto parchment scrolls inscribed with flowing calligraphy that explain AI decision factors – an ethereal blend of technology and human clarity.

Leading tech ethics guidelines call for notifying users when AI is in play, rather than hiding it. Second, transparency about how AI works (in simpler terms) – no one expects a layperson to understand millions of neural network weights, but developers can provide plain-language explanations of what factors the AI considers. For example, “Our credit AI analyzes your payment history, outstanding debt, and income stability, but does not consider race or ZIP code.” Even labels like “this is an AI-generated image” add transparency (preventing deception by deepfakes, for instance). The EU is enshrining some of this in law, requiring transparency for AI systems in certain domains. As an everyday person, you can look for transparency signals. Does the AI app or service you use have an explanation page or FAQ about the algorithm? Does it clearly let you opt out or contest decisions?

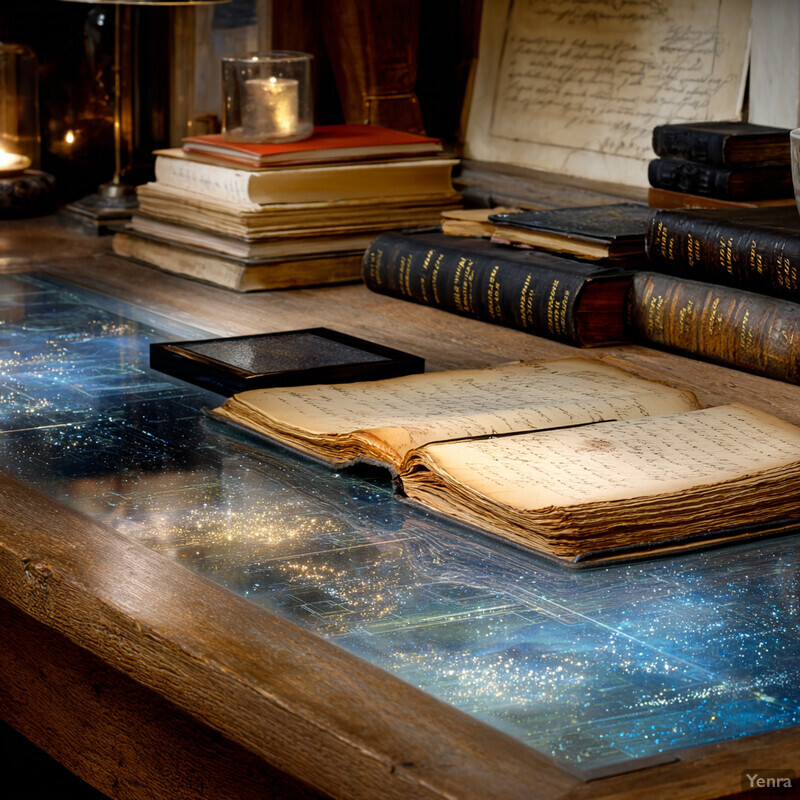

Inner Workings Revealed: In a shadowed study at dawn, translucent blueprints of neural layers float above an oak desk strewn with open tomes, each layer gently labeled in warm, golden script, while a soft ambient glow peels back the veil of the AI black box, inviting curious minds into its heart.

These are signs of a trustworthy deployment. If something feels opaque – for instance, you keep seeing oddly specific ads and suspect an AI is profiling you in the background – you have every right to be concerned, to seek more information, or to adjust your privacy settings. In short, transparency builds trust by turning on a “light” in the black box, even if it only reveals outlines. It’s also empowering: when people understand why an AI made a recommendation, they can better judge whether to accept or override it.
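For readers curious what a plain-language explanation might look like under the hood, here is a minimal Python sketch. It assumes a deliberately simple scoring model in which each factor has a fixed weight, so every factor's contribution can be reported directly. The factor names, weights, and the `score_with_explanation` function are all hypothetical; real credit models are far more complex.

```python
# Hypothetical factor names and weights, invented for illustration only.
FACTOR_WEIGHTS = {
    "payment_history": 0.5,    # share of on-time payments, 0.0 to 1.0
    "debt_ratio": -0.3,        # outstanding debt divided by income; higher hurts
    "income_stability": 0.2,   # 0.0 (volatile) to 1.0 (stable)
}
# Fields this toy model is deliberately never allowed to use.
EXCLUDED_FIELDS = {"race", "zip_code", "gender"}


def score_with_explanation(applicant: dict) -> tuple[float, list[str]]:
    """Score an applicant and explain, in plain words, how each factor contributed."""
    score = 0.0
    notes = []
    for factor, weight in FACTOR_WEIGHTS.items():
        contribution = weight * applicant[factor]
        score += contribution
        label = factor.replace("_", " ")
        if contribution > 0:
            notes.append(f"{label} raised your score by {contribution:.2f}")
        elif contribution < 0:
            notes.append(f"{label} lowered your score by {-contribution:.2f}")
        else:
            notes.append(f"{label} did not change your score")
    # State explicitly which sensitive fields were present but ignored.
    ignored = sorted(applicant.keys() & EXCLUDED_FIELDS)
    if ignored:
        notes.append("not considered: " + ", ".join(f.replace("_", " ") for f in ignored))
    return round(score, 2), notes


score, notes = score_with_explanation(
    {"payment_history": 0.9, "debt_ratio": 0.4, "income_stability": 0.8, "zip_code": "12345"}
)
print(score)
for note in notes:
    print("-", note)
```

Because this toy model is a simple weighted sum, its explanation is exact. With complex models, explanations are usually approximations, which is one reason meaningful transparency is harder than it sounds.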

Accountability: This principle asks, “Who is responsible if the AI causes harm or makes a mistake?” AI systems should have human accountability – meaning a company or team stands behind them and will take corrective action if something goes wrong. It also means mechanisms for recourse: if an AI-driven decision negatively impacts you, there should be a way to appeal or have a human review it. For example, if an AI denies your loan application, perhaps you can request a human underwriter to double-check.

Or if a content moderation AI wrongly takes down your social media post, you can appeal to a human moderator. Accountability also implies oversight. Many organizations now employ AI ethics officers or review boards that evaluate algorithms before and after deployment. From a user perspective, a question to ask is: Is there a “human in the loop” at appropriate stages?
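To make “human in the loop” concrete, here is a minimal Python sketch of one possible routing policy, assuming a hypothetical loan model that reports a confidence score alongside its suggestion. The 0.85 threshold, the rule that every denial goes to a person, and the `route` and `appeal` functions are illustrative choices, not any company's actual process.

```python
from dataclasses import dataclass

# Hypothetical policy value: below this confidence, a person makes the call.
REVIEW_THRESHOLD = 0.85


@dataclass
class Decision:
    outcome: str     # "approve" or "needs_review"
    decided_by: str  # "model" or "human"
    reason: str


def route(model_outcome: str, confidence: float) -> Decision:
    """Let the model act alone only on confident approvals; escalate everything else."""
    if model_outcome == "approve" and confidence >= REVIEW_THRESHOLD:
        return Decision("approve", "model", f"model approved at confidence {confidence:.2f}")
    # Denials (high impact) and low-confidence calls go to a human reviewer.
    return Decision(
        "needs_review", "human",
        f"model suggested {model_outcome!r} at confidence {confidence:.2f}",
    )


def appeal(original: Decision) -> Decision:
    """Any decision, including an automatic approval, can be escalated to a person."""
    return Decision("needs_review", "human", f"appeal of: {original.reason}")


for outcome, conf in [("approve", 0.95), ("approve", 0.60), ("deny", 0.99)]:
    d = route(outcome, conf)
    print(f"{outcome} @ {conf}: {d.outcome} (decided by {d.decided_by})")
```

Sending every denial to a person, however confident the model is, reflects the idea that the most consequential outcomes deserve the most oversight; where exactly to draw that line is a policy decision, not a technical one.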

Human in the Loop: In a moonlit boardroom with floor-to-ceiling windows overlooking a cityscape, a compassionate overseer in soft robes stands beside a glowing AI interface, gently guiding its output with a hand on its translucent frame–an ethereal reminder of human responsibility and oversight.

For critical decisions (such as medical diagnoses or legal judgments), AI should assist human professionals, not fully replace them – at least until we’re very confident in the AI’s reliability. Another aspect is accountability for improvements: developers must monitor AI systems in the wild and fix issues as they arise. A well-known example: Amazon reportedly built an experimental AI recruiting tool that learned to penalize résumés containing the word “women’s” (as in “women’s chess club captain”) and to downgrade graduates of two all-women’s colleges. The company tried to neutralize those terms and ultimately abandoned the project. We shouldn’t accept a shrug and “the computer says so” as an answer.

People built the AI, and people must be answerable for it. Regulators around the world are also stepping in – the EU’s AI Act, for instance, will hold companies legally accountable for the behavior of high-risk AI systems, requiring things like documentation, risk assessments, and human oversight. This is good news for consumers and society: it means accountability is being formalized, not just left to goodwill.

Beyond these three big principles, privacy is another crucial one (AI often thrives on data, but individuals have a right to privacy – thus data should be collected and used with consent and safeguards like anonymization). Safety is important too (AI shouldn’t physically or mentally harm people – self-driving cars must be rigorously tested; content generation AI should have guardrails to avoid encouraging self-harm, etc.). Security matters (AI systems should be protected from hacking or manipulation – e.g., someone shouldn’t be able to trick a medical AI into misbehaving by inputting malicious data). Finally, there are human values more broadly: AI should align with the values of the communities it serves, which means inclusivity in design and deployment.

Chains of Custody: Along a misty dawn shoreline, delicate golden chains link a luminous algorithmic core to a circle of diverse figures holding lanterns–symbolizing shared accountability and the pathways for appeal that connect users, AI systems, and the humans who stand behind them.

Alright, so what practical questions can non-experts ask to gauge an AI system’s ethics and trustworthiness? Here are a few suggestions you can keep in your back pocket:

“What data was this AI trained on?” – This tells you a lot. If the data was narrow or biased, the outputs may be too. If a company says, “We trained our hiring AI on 50 years of successful employee profiles,” one might worry, “hmm, 50 years ago the workforce had far fewer women and minorities in certain roles – did you correct for that?” Ideally, they should articulate how they ensured diverse, representative training data.

“How does this AI make decisions/recommendations, in simple terms?” – You’re asking for a lay explanation of the factors involved. Beware of any tool that is a complete black box, or where even the operators can’t give any rationale. If they say “It’s proprietary” or “It just learns, trust us”, that’s not good enough in sensitive applications. Responsible AI providers will often publish explainers. For example, a bank might say, “Our algorithm looks at your repayment history and current income to determine creditworthiness; it doesn’t use personal characteristics like gender or ethnicity.”

“What are this AI’s limitations or error rates?” – No AI is 100% accurate. If someone is deploying it, they should know the ballpark false positive/negative rates or scenarios where it might fail. For instance, facial recognition might be known to be less accurate in low lighting or for certain age groups. A medical AI might be very good at flagging pneumonia on chest X-rays but not so good at spotting a broken rib. Knowing limitations means you (or the operators) can double-check or avoid using it in those cases. It also shows humility on the developers’ part. When an AI tool honestly tells you, “I’m not sure about that, maybe ask a human,” that’s a sign of thoughtful design.

“Is a human reviewing or overseeing this AI’s decisions?” – This touches on accountability and safety. If you’re interacting with something consequential (like an AI therapist chatbot or an AI judge in a contest), you’d want to know there’s human moderation or the ability to intervene.

“Can I opt out or have my data excluded?” – This is about privacy and control. Perhaps you’re fine with an AI recommending movies to you, but not fine with it reading all your emails to do so. Good AI services offer choices. For example, iPhones let you choose whether to share your Siri voice recordings with Apple to help improve the service, and you can change that choice later in settings.

“What safeguards are in place to prevent misuse?” – If it’s a public tool (say, an image generator), does it have filters to avoid producing graphic violence or explicit hate speech? If it’s an AI that can be used for surveillance, is it restricted to authorized, legal use cases?

“Who can I contact if I think the AI made a mistake or I have concerns?” – There should be a clear path for feedback. Many AI products have links like “Report Issue” or customer support specifically for AI outputs. If an AI-driven credit score hurt your application and you have evidence it’s wrong, you should be able to reach a human to resolve it. The lack of a contact or recourse is a sign that a company isn’t taking responsibility.

These questions don’t require you to know how to code or to understand linear algebra; they’re about common sense, rights, and communication. A trustworthy AI provider should be able to address them. If they can’t, that in itself tells you the system might not be ready for prime time or is being deployed irresponsibly.
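You don’t need code to ask about error rates, but for the curious, here is a short Python sketch of what the limitations question is getting at. It computes false positive and false negative rates for a yes/no system, both overall and per condition. The evaluation data and the “day”/“low” lighting labels are made up purely for illustration.

```python
def error_rates(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """False positive and false negative rates for a yes/no system (1 = flagged)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    negatives = y_true.count(0)
    positives = y_true.count(1)
    return {
        # Of the cases that were truly "no", how often did the system say "yes"?
        "false_positive_rate": fp / negatives if negatives else 0.0,
        # Of the cases that were truly "yes", how often did the system miss them?
        "false_negative_rate": fn / positives if positives else 0.0,
    }


def error_rates_by_group(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict:
    """The same rates, computed separately for each condition or group."""
    result = {}
    for g in sorted(set(groups)):
        idx = [i for i, x in enumerate(groups) if x == g]
        result[g] = error_rates([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return result


# Made-up evaluation data: 1 = "this is the enrolled person", 0 = "someone else".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
lighting = ["day", "day", "day", "day", "low", "low", "low", "low"]
print(error_rates(y_true, y_pred))
print(error_rates_by_group(y_true, y_pred, lighting))
```

Splitting the numbers by condition is what reveals a pattern like the low-lighting example above: in this toy data, the overall rates look moderate, yet every mistake happens in low light and none in daylight.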

It’s encouraging that awareness of AI ethics has grown so much recently. Universities offer courses on AI ethics, governments publish AI ethical frameworks, and multidisciplinary teams now tackle these issues at tech firms. The general public is also more savvy – for example, backlash against biased algorithms has led to some high-profile retractions and improvements. We, as users and citizens, play a role by staying informed and vocal. When you use an AI or are subjected to one, approach it with informed curiosity: embrace its benefits but also keep a critical eye. Think of using AI like driving a car – you follow some rules and you stay alert. You trust the machine but also wear a seatbelt and watch the road.

With ethical principles and questions in mind, we can use AI in a way that aligns with our values and societal norms. In the next section, we’ll talk about how you can become more AI-literate and even get some hands-on experience. Empowering yourself with knowledge is the best way to ensure you can harness AI’s upsides while mitigating its downsides.

## Becoming AI-Literate

By this point, you might be thinking: “This is all well and good, but how do I actually become AI-literate if I’m not a techie?” The great news is that AI learning resources have blossomed in recent years, and many are designed for absolute beginners with no coding required. AI literacy is not about being able to build a neural network from scratch; it’s about understanding concepts and getting comfortable interacting with AI tools. Here are some accessible ways to start or continue your journey:

1. Play with No-Code AI Tools: A fantastic (and fun) way to demystify AI is to tinker with simple AI applications where you can see results immediately. Take Google’s Teachable Machine for example. It’s a free web tool that lets you train a very basic machine learning model using your webcam – without writing a single line of code. In minutes, you can create a model that, say, recognizes different poses or gestures you make. “Teachable Machine is a web tool that makes it fast and easy to create machine learning models for your projects, no coding required.”

Digital Tinkerer: In a softly lit home studio, a curious creator sits before a laptop webcam, surrounded by floating translucent icons–thumbs-up, thumbs-down, and colorful gesture symbols–as gentle streams of code weave around them, embodying the magic of no-code AI experimentation.

Want to teach your computer to tell a thumbs-up from a thumbs-down? Show Teachable Machine a few examples of each and test it instantly. It’s like a magical peek into how machines learn by example. Similarly, tools like Lobe (by Microsoft) let you train image classifiers with a drag-and-drop interface, and RunwayML (mentioned earlier) provides a suite of AI capabilities for creative projects via a user-friendly interface. With RunwayML, for instance, you could try generating imagery or removing the background from a video with just a few clicks – activities that give you a tangible sense of AI’s capabilities. “RunwayML supports text, image generation, and motion capture,” all through an easy visual interface.

By experimenting with these platforms, you’ll build intuition. Many of them let you look behind the scenes, showing, for example, how adding training examples changes the model’s predictions or where it is uncertain. This hands-on play demystifies AI quickly. It’s the difference between reading about swimming and actually splashing in a pool.

Some other beginner-friendly, no-code AI experiences include Machine Learning for Kids, a site that lets children (and curious adults) train AI to recognize text or images and use it in Scratch projects, and AI Experiments by Google, a collection of interactive demos (such as an AI that tries to guess your doodles). The barrier to entry has truly never been lower: you can do this even if your tech skills are limited to browsing Facebook.
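What does “learning by example” mean mechanically? Teachable Machine itself uses neural networks, so the Python sketch below is a deliberately simpler stand-in rather than its actual method: a nearest-centroid classifier that averages the examples for each label and assigns a new input to the closest average. The two-number “features” and the `train` and `predict` functions are hypothetical; a real tool would extract many more features from camera images.

```python
import math


def train(examples: dict[str, list[list[float]]]) -> dict[str, list[float]]:
    """'Training' here just averages each label's examples into one centroid."""
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in examples.items()
    }


def predict(centroids: dict[str, list[float]], x: list[float], margin: float = 0.1):
    """Pick the closest centroid; flag the answer as uncertain if the runner-up is nearly as close."""
    distances = {label: math.dist(c, x) for label, c in centroids.items()}
    ranked = sorted(distances, key=distances.get)
    best = ranked[0]
    uncertain = len(ranked) > 1 and distances[ranked[1]] - distances[best] < margin
    return best, uncertain


# Invented two-number "features" standing in for what a camera might extract from a hand.
examples = {
    "thumbs_up": [[0.9, 0.1], [0.8, 0.2], [0.95, 0.15]],
    "thumbs_down": [[0.1, 0.9], [0.2, 0.8], [0.15, 0.85]],
}
model = train(examples)
print(predict(model, [0.85, 0.2]))  # a clear thumbs-up
print(predict(model, [0.5, 0.5]))   # an ambiguous, sideways hand
```

Adding more varied examples shifts each average and changes which inputs fall into the uncertain zone, which is roughly the intuition these no-code tools let you build by hand.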

Gesture Symphony: At dawn’s first light, an ethereal figure holds up their hand in a minimalist workspace, sending glowing gesture trails into a hovering holographic interface that dynamically visualizes each AI training example, turning learning into a poetic dance of light and motion.

2. Take a Beginner Course or Tutorial: Structured learning can greatly accelerate your understanding. Thankfully, there are excellent courses tailored for non-programmers. One highly recommended starting point is “AI For Everyone” on Coursera (from DeepLearning.AI, taught by Dr. Andrew Ng, a renowned AI educator). It’s specifically designed for people with no technical background, focusing on what AI can and cannot do, how it’s applied in business and society, and how you might initiate an AI project or strategy in your own organization.

Virtual Bootcamp: In a tranquil digital

studio awash in soft morning light, a graceful laptop hovers above an

open notebook, its screen projecting drifting lecture slides and

animated neural-network diagrams, while gentle beams of pastel code