1. Enhanced Perception Systems

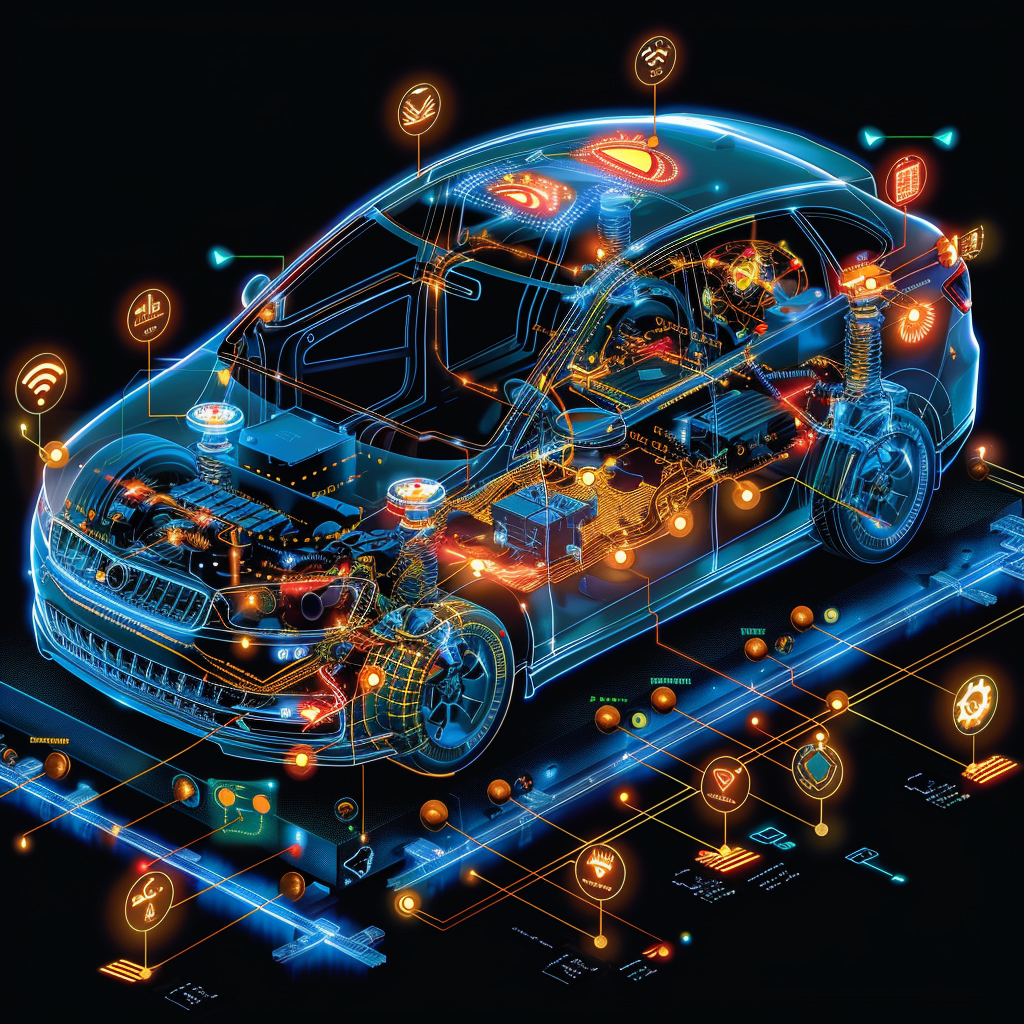

Autonomous vehicles now leverage enhanced perception systems that fuse data from cameras, LiDAR, radar, and other sensors to achieve a comprehensive 360-degree view of their surroundings. AI algorithms process the rich sensor data in real-time, identifying vehicles, pedestrians, road signs, and obstacles with high accuracy. These multi-modal perception systems allow autonomous cars to “see” farther and in greater detail than before, even under challenging conditions like night or rain. Continuous machine learning improvements mean the system gets better at object recognition and distance estimation over time. As a result, modern autonomous vehicles can detect potential hazards earlier and react more reliably, which is crucial for safe navigation in complex traffic environments.

Advances in sensor hardware have dramatically improved perception range and fidelity. For example, a new automotive LiDAR unit can detect a pedestrian up to 250 meters away and even spot a low-reflectivity object (like a dark tire on asphalt) at 120 meters, distances that far exceed the reach of a car’s headlights. Many self-driving cars are equipped with dozens of sensors; Waymo’s fifth-generation autonomous vehicle design, for instance, integrates multiple high-resolution LiDARs, radar units, and 29 cameras to capture a full 360° view around the car. The commitment to sensor technology is reflected in industry trends – the global automotive LiDAR market was valued at about $504 million in 2023, driven by surging adoption of these sensors for vehicle perception. Sensor fusion (combining camera, radar, and LiDAR inputs) significantly boosts object detection reliability, reducing false negatives and improving detection of pedestrians and other road users by a substantial margin in research trials. With these enhanced perception systems, autonomous vehicles tested in California have logged millions of miles with steadily decreasing intervention rates, suggesting that the AI’s environmental awareness and interpretation continue to improve.
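To make the idea of sensor fusion concrete, here is a minimal sketch of one textbook approach: an inverse-variance weighted average of independent distance estimates. It is not how any particular vehicle fuses its sensors. The `RangeReading` type, the `fuse_ranges` function, and the noise figures are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str
    distance_m: float  # estimated distance to the object
    std_m: float       # assumed 1-sigma measurement noise (illustrative values)


def fuse_ranges(readings):
    """Inverse-variance weighted average of independent range estimates."""
    weights = [1.0 / (r.std_m ** 2) for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_std = (1.0 / total) ** 0.5  # fused estimate is tighter than any input
    return fused, fused_std


# Hypothetical readings for the same pedestrian from three sensors.
readings = [
    RangeReading("camera", 52.0, 4.0),
    RangeReading("radar", 50.0, 1.0),
    RangeReading("lidar", 49.5, 0.5),
]
fused, fused_std = fuse_ranges(readings)
print(f"fused distance: {fused:.2f} m (std {fused_std:.2f} m)")
```

The fused estimate leans toward the most precise sensor and has lower uncertainty than any single reading, which is the basic reason combining modalities can reduce missed detections.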

2. Improved Decision Making

AI is fundamentally improving the decision-making capabilities of autonomous vehicles by enabling them to evaluate complex traffic situations and choose safe actions within fractions of a second. Unlike human drivers—who might be distracted or have slower reflexes—an AI-driven vehicle continuously analyzes sensor inputs and predicts outcomes for various possible maneuvers (such as braking, swerving, or continuing on course). This real-time analysis allows the vehicle to decide when to change lanes, yield, or perform an emergency stop with precision and consistency. Improved decision algorithms also account for traffic rules and context (for example, distinguishing between a temporary obstacle and a permanent one) to make sound judgments. Overall, AI decision-making in autonomous cars aims to mimic experienced driver behavior but with greater speed, consistency, and the ability to handle edge cases that would challenge human reflexes.

Reaction time is one area where AI decision-making dramatically outperforms humans: on average a human driver takes about 1.5 seconds to react to an unexpected road hazard, whereas an autonomous driving system can react within milliseconds. This superior reflex, combined with 360-degree perception, means an AI-driven car can begin braking or steering to avoid danger long before a human typically would. Data from real-world deployments also suggest safer decisions – for example, Tesla reports one accident for every 4.31 million miles driven with Autopilot engaged, versus roughly one per 500,000 miles in conventional human driving. This indicates nearly a 9-fold improvement in accident rate under AI supervision, illustrating how consistently well-timed and prudent AI decisions can prevent collisions. Moreover, in California testing of fully autonomous vehicles, the rate of “disengagements” (when a human safety driver must intervene) has been falling each year: Waymo’s vehicles averaged tens of thousands of miles between interventions in 2023, reflecting the growing reliability of the AI’s choices in complex traffic. As algorithms incorporate more driving experience and edge-case scenarios, autonomous systems continue to refine their decision-making, leading to fewer critical errors than human drivers in comparable situations.
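The reaction-time gap is easier to appreciate as distance traveled before braking even starts. The short sketch below works through that arithmetic, along with the accident-rate ratio quoted above. The 65 mph speed and the 100 ms machine latency are assumptions for illustration (the text says only "milliseconds").

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def reaction_distance_m(speed_mph: float, reaction_time_s: float) -> float:
    """Distance covered at constant speed before any braking begins."""
    return speed_mph * MPH_TO_MPS * reaction_time_s


speed_mph = 65.0                                  # assumed highway speed
human_m = reaction_distance_m(speed_mph, 1.5)     # 1.5 s, from the text
machine_m = reaction_distance_m(speed_mph, 0.1)   # assumed 100 ms latency

# Ratio of miles per accident: Autopilot figure vs. conventional driving.
rate_ratio = 4.31e6 / 500_000

print(f"human:   {human_m:.1f} m traveled before reacting")
print(f"machine: {machine_m:.1f} m traveled before reacting")
print(f"miles-per-accident ratio: {rate_ratio:.2f}x")
```

Under these assumptions the human-driven car covers roughly 43.6 m before responding versus about 2.9 m for the automated system, and the accident-rate ratio works out to about 8.6, consistent with "nearly 9-fold".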

3. Predictive Capabilities

Modern autonomous driving AI doesn’t just react to the present – it also predicts future movements of nearby entities to drive proactively. Through machine learning, autonomous vehicles analyze the patterns of other cars, cyclists, and pedestrians and forecast what they are likely to do next. For instance, the system can infer if a pedestrian on the curb is about to cross the street or if the car in the adjacent lane is preparing to merge in front. By anticipating these actions a few seconds ahead, the autonomous vehicle can adjust its speed, create buffer space, or change lanes preemptively to avoid conflicts. These predictive capabilities add a critical layer of foresight, making AI-driven vehicles defensive drivers that can handle sudden changes in traffic dynamics more smoothly. Essentially, the vehicle’s AI acts like an attentive driver who is constantly “reading the road” and expecting potential events before they happen.
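Production systems use learned models for this kind of forecasting, but the underlying idea can be sketched with a much simpler stand-in: constant-velocity extrapolation. The example below estimates when a pedestrian would reach the lane edge and decides whether to slow down early. The function names, the 2-second horizon, and the sample values are assumptions for illustration only.

```python
from typing import Optional


def seconds_until_crossing(distance_to_lane_m: float,
                           approach_speed_mps: float) -> Optional[float]:
    """Time until the pedestrian reaches the lane edge, assuming constant velocity.

    Returns None if the pedestrian is not moving toward the lane.
    """
    if distance_to_lane_m <= 0:
        return 0.0  # already in the lane
    if approach_speed_mps <= 0:
        return None  # standing still or walking away
    return distance_to_lane_m / approach_speed_mps


def should_slow_down(distance_to_lane_m: float,
                     approach_speed_mps: float,
                     horizon_s: float = 2.0) -> bool:
    """Slow preemptively if the predicted crossing falls inside the horizon."""
    eta = seconds_until_crossing(distance_to_lane_m, approach_speed_mps)
    return eta is not None and eta <= horizon_s


# Pedestrian 2 m from the lane edge, walking toward it at 1.4 m/s.
print(should_slow_down(2.0, 1.4))
# Same pedestrian standing still on the curb.
print(should_slow_down(2.0, 0.0))
```

A learned predictor would replace the constant-velocity assumption with cues such as body orientation and gaze, but the downstream decision (act if the predicted event falls within a short horizon) has the same shape.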

AI prediction models have reached impressive accuracy in forecasting human and vehicle behavior. For example, research on pedestrian intention prediction shows that an autonomous vehicle’s AI can correctly anticipate a pedestrian’s decision to cross the road approximately 1–2 seconds in advance with about 90% accuracy. This means the car can start slowing down even before the person steps off the curb. Similarly, studies on lane-change behavior indicate that machine learning models can recognize a nearby driver’s lane change intention with over 90% accuracy a few seconds before the maneuver occurs. These models analyze subtle cues like a car’s positioning within its lane, its speed fluctuations, and turn signal usage (if any) to predict its next move. In practice, such predictive AI enables an autonomous vehicle to, say, ease off the throttle when it detects a faster car coming up in an adjacent lane that might cut in. Companies have also developed AI systems to forecast the timing of traffic light changes and traffic flow patterns, allowing vehicles to adjust speed to “catch” green lights and avoid unnecessary stops. Overall, by leveraging large datasets of driving behavior, autonomous vehicles’ predictive algorithms substantially enhance safety – one study estimates that predictive driving algorithms could prevent up to 90% of imminent collisions that human drivers would fail to avoid by reacting too late. These forward-looking capabilities mark a key advantage of AI-driven vehicles in complex, interactive road environments.

4. Optimal Route Planning

AI is enhancing route planning for autonomous vehicles by dynamically calculating the most efficient and safe paths to a destination. Traditional GPS navigation gives point-to-point directions, but AI-driven route planning goes further by incorporating real-time data on traffic congestion, accidents, road closures, and even weather conditions. This means an autonomous vehicle can proactively reroute to avoid a traffic jam or hazardous road conditions without any human input. These systems often optimize for multiple criteria – not just shortest distance, but also minimal travel time, least energy consumption, or highest safety. In effect, the AI serves as an intelligent co-pilot that is constantly scanning for better routes. This results in reduced travel times and smoother journeys for passengers, and it can also improve energy efficiency (important for electric autonomous vehicles) by avoiding stop-and-go traffic. Overall, AI-based optimal route planning contributes to less congested roads and more predictable travel.

The impact of AI on route optimization is significant. Simulations by transportation researchers suggest that widespread use of AI-guided autonomous vehicles could reduce traffic delays by around 40% through smarter routing and coordination. Even at lower levels of adoption, benefits are evident: a U.S. Department of Energy study in Los Angeles found that an AI-powered multi-modal trip planner (which suggested optimal combinations of driving, transit, etc.) could cut congestion-related delay by 14–20% during peak periods. These improvements come from vehicles distributing themselves more evenly across the network and avoiding known bottlenecks. Optimal routing also translates to fuel and energy savings – by some estimates, intelligent route planning can reduce a vehicle’s fuel consumption by up to 10% simply by keeping it out of heavy traffic and unnecessary detours. For example, if a highway develops stop-and-go conditions, an AI system might divert an autonomous car to an alternate route that, while slightly longer in distance, allows a steady speed and thus saves time and fuel. On a larger scale, traffic models have shown that if autonomous vehicles communicate and coordinate their route choices, stop-and-go waves (“phantom traffic jams”) could be virtually eliminated, smoothing overall traffic flow. Several U.S. cities are already testing vehicle-to-infrastructure data feeds, where AI in cars gets live traffic signal timings and adjusts routes or driving speed to reduce idle time at red lights. Such examples underscore that AI optimal route planning not only benefits individual travelers but can also yield broad public benefits in the form of less congestion and emissions.
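The highway-versus-alternate-route example above can be sketched as a shortest-path search over travel times rather than distances. The code below uses Dijkstra's algorithm on a tiny hypothetical road network; the node names and minute values are invented for illustration, and real planners weigh many more criteria.

```python
import heapq


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm over edge travel times (minutes).

    Returns (total_minutes, path); (inf, []) if the goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    settled = {}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled and settled[node] <= cost:
            continue  # already reached this node at least as cheaply
        settled[node] = cost
        for neighbor, minutes in graph.get(node, {}).items():
            heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical network: edge weights are current travel times in minutes.
free_flow = {
    "A": {"highway": 5, "arterial": 8},
    "highway": {"B": 10},
    "arterial": {"B": 12},
}
# Same network after stop-and-go traffic develops on the highway segment.
congested = {**free_flow, "highway": {"B": 25}}

print(fastest_route(free_flow, "A", "B"))
print(fastest_route(congested, "A", "B"))
```

With free-flowing traffic the highway wins; once its travel time rises, the same search picks the arterial road. Rerouting, in this simplified view, is just re-running the search whenever live data changes the edge weights.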

5. Adaptive Cruise Control

Adaptive Cruise Control (ACC) is an AI-driven feature that automatically adjusts a vehicle’s speed to maintain a safe following distance from the car ahead. In autonomous and semi-autonomous vehicles, ACC relieves the human driver from constantly modulating the throttle or brakes on highways or in traffic. The AI uses forward-looking sensors (radar or lidar, often combined with cameras) to detect the distance and relative speed of the vehicle in front. It then smoothly slows down or accelerates as needed to keep a pre-set gap. This results in a more consistent speed and reduces the risk of tailgating. ACC not only improves convenience and reduces driver fatigue on long drives, but it also contributes to safety by reacting faster than a human typically can if the leading car suddenly brakes. Modern ACC systems can handle stop-and-go traffic as well, bringing the car to a complete stop and then resuming movement when traffic moves, all without driver intervention. In fully autonomous vehicles, ACC is a core component of the longitudinal control system, ensuring the vehicle keeps pace appropriately in traffic flow.
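The gap-keeping behavior described above is often explained with a constant time-gap policy: the desired gap grows with speed, and the controller accelerates or brakes in proportion to the gap and speed errors. The sketch below is a simplified illustration of that idea. The gains, limits, and the `acc_acceleration` name are assumptions, not values from any production system.

```python
def acc_acceleration(gap_m, ego_speed_mps, lead_speed_mps,
                     time_gap_s=1.8, standstill_gap_m=5.0,
                     k_gap=0.2, k_speed=0.6,
                     max_accel=2.0, max_brake=-3.5):
    """Commanded acceleration (m/s^2) for a constant time-gap policy.

    All gains and limits are illustrative assumptions.
    """
    desired_gap = standstill_gap_m + time_gap_s * ego_speed_mps
    gap_error = gap_m - desired_gap            # positive: more room than needed
    speed_error = lead_speed_mps - ego_speed_mps  # negative: closing in
    accel = k_gap * gap_error + k_speed * speed_error
    # Clamp to comfortable and physically plausible limits.
    return max(max_brake, min(max_accel, accel))


# Steady following at 30 m/s with exactly the desired 59 m gap.
print(acc_acceleration(gap_m=59.0, ego_speed_mps=30.0, lead_speed_mps=30.0))
# Lead car brakes to 25 m/s and the gap shrinks to 40 m.
print(acc_acceleration(gap_m=40.0, ego_speed_mps=30.0, lead_speed_mps=25.0))
```

In the second case the raw command exceeds the braking limit, so the controller saturates at the maximum deceleration. Because the speed-error term responds as soon as the lead car slows, the controller starts shedding speed before the gap has closed much.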

Adoption of ACC has grown rapidly in recent years, indicating its perceived safety and comfort benefits. In the United States, over two-thirds of new vehicles now come equipped with adaptive cruise control – penetration of ACC in new model-year cars increased from just 4.7% in 2015 to about 68% in 2023. This reflects automakers’ confidence in the technology and consumer demand for driver assistance features. On the safety front, ACC helps maintain a proper following distance; for instance, if the car ahead suddenly slows, the AI will typically begin braking within tenths of a second, often faster than an average human’s reaction. Studies by safety organizations suggest that ACC (especially when paired with automatic emergency braking) can lead to a meaningful reduction in rear-end collisions, which are among the most common crash types. Real-world data from driving experiments show that drivers using ACC experience significantly fewer instances of hard braking – one field study found 30% fewer extreme braking events when ACC was engaged, as the system anticipates gradual slowing earlier than many humans do. Additionally, ACC contributes to smoother traffic flow: by preventing the concertina effect of drivers accelerating and braking erratically, a line of vehicles using ACC can reduce overall highway congestion. Trucking fleets in the U.S. have reported improved fuel efficiency when adaptive cruise is used, because the consistent speeds and safe drafting distances maintained by ACC minimize unnecessary throttle bursts or brake slamming, leading to a few percentage points improvement in fuel economy. All these facts underscore ACC’s role as both a convenience and a safety-enhancing AI capability in vehicles.

6. Lane Keeping Assistance

Lane Keeping Assistance (LKA) uses AI and computer vision to help a vehicle stay centered in its lane, significantly reducing the risk of unintended lane departures. A forward-facing camera (and sometimes lidar) continuously scans the road markings, while the AI interprets the vehicle’s position relative to the lane lines. If the car begins to drift toward the edge of its lane without a turn signal (which could indicate driver inattention or drowsiness in a human-driven scenario), the LKA system provides corrective steering input or alerts to gently bring the vehicle back to the center of the lane. In autonomous vehicles, this functionality is part of the lateral control system that ensures the car follows the lane curvature accurately. Lane keeping AI must handle straight roads, curves, and situations where markings are faded or temporarily obscured (the AI is trained to infer lane position even with partial information). By continuously centering the vehicle, LKA prevents accidents such as side-swipes or road departures that can occur when a vehicle veers out of its lane. This feature adds a layer of safety especially on highways and long monotonous stretches where human drivers might lose focus.
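The corrective behavior described above can be sketched as a simple proportional controller on lateral offset and heading error, suppressed when the turn signal is on. This is a minimal illustration under assumed gains and sign conventions (positive means "to the left"), not a description of any manufacturer's implementation.

```python
def lane_keeping_steer(lateral_offset_m, heading_error_rad, turn_signal_on,
                       k_offset=0.1, k_heading=0.8,
                       deadband_m=0.2, max_steer_rad=0.05):
    """Corrective steering angle in radians (positive = steer left).

    lateral_offset_m:  vehicle position relative to lane center (positive = left).
    heading_error_rad: vehicle heading relative to lane direction (positive = left).
    Gains, deadband, and steering limit are illustrative assumptions.
    """
    if turn_signal_on:
        return 0.0  # driver intends to leave the lane; do not fight them
    if abs(lateral_offset_m) < deadband_m:
        return 0.0  # close enough to center; avoid constant small corrections
    steer = -(k_offset * lateral_offset_m + k_heading * heading_error_rad)
    # Keep the intervention gentle.
    return max(-max_steer_rad, min(max_steer_rad, steer))


# Drifting 0.5 m left of center while pointing slightly left, no signal.
print(lane_keeping_steer(0.5, 0.02, turn_signal_on=False))
# Same drift, but the driver has signaled a lane change.
print(lane_keeping_steer(0.5, 0.02, turn_signal_on=True))
```

The steering limit is what makes the correction "gentle": the system nudges the car back toward center rather than making an abrupt input.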

Lane keeping assistance has become nearly standard in new cars and is a foundational feature for higher levels of vehicle automation. In the U.S., deployment of LKA in new passenger vehicles rose from just 1.4% in 2015 to about 86% in 2023, meaning the vast majority of the latest models now include some form of lane keeping aid. This rapid adoption is driven by proven safety benefits. The National Safety Council (NSC) estimated that if all vehicles on American roads had effective lane keeping systems, they could prevent up to 14,844 deaths per year – a huge portion of traffic fatalities – by averting lane-drift crashes. Even with partial adoption, real-world studies have observed tangible benefits: for example, vehicles equipped with LKA (and its warning-only predecessor, lane departure warning) have significantly lower rates of single-vehicle roadway departure crashes. Insurance data analyses in the U.S. have shown 11%–21% reductions in sideswipe and head-on collisions in vehicles with lane departure prevention systems compared to those without. LKA technology has also improved over time – newer AI algorithms better detect lane lines in poor weather or night conditions, and they adapt to a variety of lane markings (solid, dashed, yellow, white) across states. Moreover, the integration of high-definition maps in some advanced vehicles allows the LKA system to know where lanes should be even when markings are temporarily unclear (e.g., in construction zones). By actively intervening to keep vehicles in lane, these AI systems address one of the leading causes of highway accidents: unintentional drifting due to distraction or fatigue.

7. Traffic Sign Recognition

Traffic Sign Recognition (TSR) is an AI capability that allows autonomous vehicles to identify and interpret road signs automatically. Using forward-facing cameras and neural network-based image recognition, the vehicle’s AI can “read” signs like speed limits, stop signs, yield signs, no-entry warnings, and more. Once recognized, this information is used to inform the vehicle’s driving decisions – for example, adjusting the car’s speed to comply with a newly posted speed limit, or preparing to stop when an upcoming stop sign is detected (even before the stop line is reached). This feature helps an autonomous or driver-assist system adhere to traffic laws and local regulations without relying on a human driver’s attention. TSR is especially valuable in areas with frequent sign changes or variable speed limits (such as work zones or school zones) where map data may not be up-to-date. The AI for traffic sign recognition is trained on thousands of images of signs under different lighting and weather conditions to ensure reliability. By correctly interpreting signage, autonomous vehicles maintain safer and more lawful driving behavior, and they can also alert human drivers in semi-autonomous cars if a sign is missed.

AI-based traffic sign recognition has achieved near human-level (and even super-human) accuracy in controlled evaluations. On the standard German Traffic Sign Recognition Benchmark (a widely used test dataset), state-of-the-art models have exceeded 99% classification accuracy, outperforming human test subjects who score around 98-99%. One 2021 IEEE study reported a model reaching 99.33% accuracy on this benchmark, slightly above the measured human performance of 98.84%. This high accuracy is crucial because misreading a sign (for example, mistaking a “65 mph” sign for “85” due to vandalism or stickers) could lead to unsafe behavior – to address this, many systems cross-verify recognized signs with map data when available. In practice, traffic sign recognition systems in commercially deployed cars (from brands like Tesla, BMW, etc.) can reliably detect common signs like stop, yield, and speed limits, but certain challenges remain, such as correctly interpreting electronic or variable message signs and dealing with obscured or damaged signs. The technology is advancing: the European Union has mandated Intelligent Speed Assistance systems (which rely on sign recognition) in new cars from 2024, pushing accuracy even higher. In the U.S., many high-end and mid-range vehicles now offer TSR, often to display the current speed limit on the dashboard; a 2022 survey found that over 50% of new luxury car models sold in the U.S. had traffic sign recognition as a feature, a number expected to rise as software improves. The benefit of TSR is not just theoretical – by always observing speed limit signs, an AI can prevent inadvertent speeding, and by catching stop or yield signs even if a human doesn’t, it can avert potential violations or collisions. In sum, traffic sign recognition adds an important layer of compliance and safety for autonomous driving, with AI vision systems now robust enough to read signs with very high precision.
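The cross-verification against map data mentioned above can be sketched as a small plausibility check. The logic below is one possible policy, not a documented algorithm: the confidence threshold, the 10 mph disagreement tolerance, and the choice to fall back to the lower limit are all assumptions made for illustration.

```python
from typing import Optional


def resolve_speed_limit(detected_mph: int,
                        confidence: float,
                        map_mph: Optional[int],
                        min_confidence: float = 0.9,
                        max_disagreement_mph: int = 10) -> Optional[int]:
    """Pick a speed limit from a camera detection and optional map data.

    Thresholds are illustrative assumptions. Returns None when neither
    source is trustworthy.
    """
    if confidence < min_confidence:
        return map_mph  # weak detection: rely on the map (may be None)
    if map_mph is None:
        return detected_mph  # no map data: trust a confident detection
    if abs(detected_mph - map_mph) > max_disagreement_mph:
        # Large conflict (e.g. a defaced sign): take the conservative value.
        return min(detected_mph, map_mph)
    return detected_mph


# A "65" sign misread as "85" is caught by the map cross-check.
print(resolve_speed_limit(85, 0.95, map_mph=65))
# A temporary 45 mph work-zone sign overrides stale 65 mph map data.
print(resolve_speed_limit(45, 0.97, map_mph=65))
```

Choosing the lower value on disagreement means a misread high limit is ignored, while a genuine temporary reduction that the map does not yet know about is still respected.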

8. Condition Monitoring

Autonomous vehicles employ AI for continuous condition monitoring of their internal systems, which enables predictive maintenance and early fault detection. Essentially, the vehicle’s AI is always “listening” to and analyzing data from dozens of sensors embedded in the car’s components – engine or motor performance, battery health, tire pressure, brake wear, temperature readings, etc. By learning the normal patterns of these parameters, the AI can detect anomalies that may indicate an impending failure or a need for service. For example, if a certain vibration pattern in the wheel hub deviates from the norm, the system might flag it as a wheel bearing issue developing, prompting maintenance before a breakdown occurs. This capability means problems can be addressed proactively, minimizing the chances of sudden malfunctions on the road. In fully autonomous vehicles (like robotaxis), such AI-driven condition monitoring is critical for safety because there may not be a human doing routine inspections. Additionally, predictive insights from the AI can optimize maintenance schedules – servicing components only when needed based on actual wear, rather than on fixed intervals, thereby improving reliability and reducing cost. In summary, AI condition monitoring turns an autonomous vehicle into its own mechanic, performing real-time diagnostics to ensure all systems run safely and efficiently.
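The "learn the normal pattern, then flag deviations" idea can be illustrated with a basic z-score check. Real predictive-maintenance systems use far richer models; the sketch below only shows the principle, and the vibration values, threshold, and `find_anomalies` name are hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(baseline, new_readings, z_threshold=3.0):
    """Flag readings more than z_threshold standard deviations from the baseline mean.

    Returns a list of (index, value) pairs for the flagged readings.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return []  # no variation in baseline; z-score is undefined
    return [(i, x) for i, x in enumerate(new_readings)
            if abs(x - mu) / sigma > z_threshold]


# Hypothetical wheel-hub vibration amplitudes recorded during normal operation.
baseline = [0.50, 0.52, 0.49, 0.51, 0.48, 0.50, 0.53, 0.47]
# New readings; the last two drift well outside the learned range.
new_readings = [0.51, 0.49, 0.58, 0.71]

anomalies = find_anomalies(baseline, new_readings)
print(anomalies)
```

Here the baseline has a mean of 0.50 and a standard deviation of 0.02, so the last two readings sit 4 and more than 10 standard deviations out and are flagged, which in a vehicle would prompt a maintenance check before the component fails.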

The benefits of AI-driven predictive maintenance are well documented in both automotive fleets and industry. A McKinsey analysis found that predictive maintenance algorithms can reduce unplanned machine downtime by up to 50% and extend equipment life by 20–40% through timely interventions. In automotive applications, this translates to vehicles spending more time on the road and less time in the shop. For instance, a field trial with a bus fleet in 2024 reported that using AI-based vehicle health monitoring led to a 75% reduction in unexpected downtime for the buses. In the same trial, the fleet saw maintenance cost savings (spare parts costs dropped by 30%) and even a 20% reduction in accidents, credited to catching issues (like brake or engine problems) before they could contribute to failures on the road. Large U.S. trucking companies are adopting similar AI maintenance platforms: one logistics firm documented that predicting failures in advance saved them on average 27 hours of downtime per truck, per month. Another tangible example is tire monitoring – AI can combine pressure sensor data with wheel speed and temperature readings to predict a tire blowout before it happens; this kind of alert might prevent a highway crash or costly roadside breakdown. By 2025, it’s expected that a significant share of autonomous vehicle operators (robotaxi services, trucking fleets) will use AI-driven condition monitoring as a standard practice. The return on investment is clear: more reliable autonomous service and lower maintenance costs. Ultimately, predictive condition monitoring enhances safety for autonomous vehicles by ensuring that the machine itself is in optimal condition, thus complementing the AI’s traffic-handling capabilities with robust self-maintenance.

9. Enhanced Security Features

As vehicles become more connected and autonomous, cybersecurity has emerged as a critical aspect, and AI plays a key role in enhanced security features for these vehicles. Autonomous cars are essentially computers on wheels, communicating with the cloud, infrastructure, and other vehicles – this connectivity opens potential avenues for hacking or unauthorized access. To counter these threats, AI-driven security systems monitor the vehicle’s networks and software in real time, looking for anomalies that might indicate a cyberattack. For example, an AI can detect if an unusual command is sent to the braking system or if there’s a strange pattern of CAN-bus messages, and then take action (like isolating that component or alerting a central security server). AI also powers intrusion detection systems inside the vehicle, which learn the normal communication patterns of sensors and controllers; any deviation triggers an alert or defensive response. Additionally, machine learning helps in identifying new vulnerabilities by simulating attacks and finding weaknesses before hackers do. In summary, AI enhances the security of autonomous vehicles by providing a smart, adaptive defense mechanism against cyber threats, making the vehicle’s control systems much harder to compromise.
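A very reduced sketch of the intrusion-detection idea: learn how often each CAN message ID normally appears, then flag IDs never seen before or IDs arriving far faster than usual. The message IDs, the rate factor, and both function names are assumptions for illustration; production systems inspect payloads and timing in much more detail.

```python
from collections import Counter


def learn_baseline(can_ids, duration_s):
    """Messages per second for each CAN ID observed during normal operation."""
    counts = Counter(can_ids)
    return {can_id: n / duration_s for can_id, n in counts.items()}


def detect_anomalies(baseline, can_ids, duration_s, rate_factor=3.0):
    """Flag unknown IDs and IDs whose rate exceeds rate_factor x baseline."""
    alerts = []
    for can_id, n in sorted(Counter(can_ids).items()):
        if can_id not in baseline:
            alerts.append((can_id, "unknown id"))
        elif n / duration_s > rate_factor * baseline[can_id]:
            alerts.append((can_id, "rate spike"))
    return alerts


# Hypothetical 10 s of normal traffic: 0x100 at 10 msg/s, 0x200 at 1 msg/s.
normal_traffic = [0x100] * 100 + [0x200] * 10
baseline = learn_baseline(normal_traffic, duration_s=10.0)

# Hypothetical 1 s window: 0x100 is flooded and an unseen ID appears.
suspicious_window = [0x100] * 50 + [0x200] * 1 + [0x7FF] * 1
alerts = detect_anomalies(baseline, suspicious_window, duration_s=1.0)
for can_id, reason in alerts:
    print(f"0x{can_id:03X}: {reason}")
```

An alert from a check like this would feed the defensive responses described above, such as isolating a component or notifying a security server.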

The need for robust AI-driven security in vehicles is underscored by the rising number of automotive cyber incidents. In 2024, there were 409 known cybersecurity incidents in the automotive and smart mobility sector globally, a 39% increase from 295 incidents in 2023. Alarmingly, the scale of attacks is growing: 60% of incidents in 2024 affected entire fleets or multiple vehicles, and massive-scale attacks (impacting millions of vehicles or devices) more than tripled, from 5% of incidents in 2023 to 19% in 2024. These included high-profile hacks such as one breach of a U.S.-based automotive software supplier that disrupted 15,000 dealerships and led to an estimated $1 billion in losses. AI-based security systems aim to prevent such outcomes by instantly recognizing suspicious activity. For instance, if an autonomous car’s AI detects an unusual data upload from its onboard diagnostics port (perhaps indicating an unauthorized attempt to reprogram the car), it can shut down that connection or revert to a safe mode. Automakers are increasingly investing in “anomaly detection” AI: one industry report noted that over 100 automotive ransomware attacks and 200 data breaches were documented in 2024 alone, many of which were thwarted or mitigated by automated security responses. Regulatory bodies in the U.S. (like NHTSA) have also issued cybersecurity guidelines that encourage the use of machine learning to identify and patch vulnerabilities continuously. Thanks to AI, cars can now not only drive themselves but also defend themselves – for example, Tesla’s vehicles receive over-the-air software updates that include AI-trained models to recognize new malware or hacking techniques, adding layers of adaptive security over time. As connected autonomous vehicles proliferate, these enhanced AI security features are essential to maintain public trust and safety, preventing scenarios where a malicious actor could remotely interfere with vehicle operation.

10. Driver Monitoring

Driver monitoring systems use AI to keep track of a human driver’s attentiveness and alertness, which is especially important in semi-autonomous vehicles where control can switch between the human and the AI. These systems typically involve an infrared camera mounted on the dashboard or steering column that watches the driver’s face and eyes. The AI computer vision algorithm assesses indicators like eye gaze direction, eyelid openness (blink rate), head position, and even facial expressions to determine if the driver is focused on the road or if they are distracted or drowsy. In vehicles with features like Tesla’s Autopilot or GM’s Super Cruise (which require the driver to be ready to take over), the driver monitoring AI will issue alerts if the driver looks away for too long or if signs of fatigue (like frequent yawning or microsleep head nods) are detected. In fully autonomous taxi services that still have a safety driver, it’s used to ensure the safety driver is paying attention. The goal of driver monitoring AI is to ensure a safe handover between autonomous mode and human control whenever necessary. If the system detects the human is not capable of taking over (for instance, the driver has fallen asleep), some cars will initiate safety measures – from loud warnings and seat vibrations up to slowing down the vehicle safely. Thus, AI driver monitoring adds a crucial safety net that addresses the human factor in the driving loop.
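The escalation logic described above can be sketched as a timer on continuous eyes-off-road time. The sketch assumes an upstream vision model already outputs a per-frame "gaze on road" flag; the sample rate, the 2 s and 5 s thresholds, and the alert names are illustrative assumptions rather than values from any specific vehicle.

```python
def alert_level(gaze_on_road, sample_period_s=0.1,
                visual_after_s=2.0, audible_after_s=5.0):
    """Alert level based on the current uninterrupted eyes-off-road duration.

    gaze_on_road: sequence of booleans, one per camera frame (True = on road).
    Thresholds are illustrative assumptions.
    """
    off_road_samples = 0
    for on_road in gaze_on_road:
        # Any glance back at the road resets the counter.
        off_road_samples = 0 if on_road else off_road_samples + 1
    off_road_s = off_road_samples * sample_period_s
    if off_road_s >= audible_after_s:
        return "audible"
    if off_road_s >= visual_after_s:
        return "visual"
    return "none"


# 1 s attentive, then 2.5 s looking away: visual warning.
print(alert_level([True] * 10 + [False] * 25))
# 6 s looking away: escalate to an audible alarm.
print(alert_level([False] * 60))
```

Counting frames instead of accumulating floating-point seconds keeps the threshold comparisons exact. A fuller version would add further stages, such as the controlled slowdown mentioned above.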

The importance of driver monitoring is evident considering how many accidents are caused by inattention or fatigue. In the United States, an estimated 17.6% of all fatal crashes involve a drowsy driver – that’s nearly one in five fatal accidents attributable to someone essentially falling asleep or being too tired to drive safely. Likewise, distracted driving (such as texting or other inattentiveness) remains a leading cause of crashes; in 2023 alone, 3,275 people were killed in crashes involving distracted drivers in the U.S. AI-driven monitoring systems directly target these problems by catching the warning signs early. For example, if a driver’s eyes are off the road for more than a couple of seconds, the system might sound a chime and flash a message like “Pay Attention to the Road.” If fatigue is detected (e.g., the driver’s blink duration and slow eye movements suggest microsleep), more urgent alerts may trigger – Cadillac’s Super Cruise uses a light bar on the steering wheel that changes color, followed eventually by an audible alarm if the driver doesn’t return their gaze forward. Some advanced systems tested in trucks can even gently slow down the vehicle and pull over if the driver is unresponsive due to a health issue or extreme fatigue. Automakers are beginning to include driver monitoring as standard: by 2025, it’s expected that most new vehicles with Level 2 automation (partial self-driving features) will incorporate AI driver monitoring, and the U.S. National Transportation Safety Board (NTSB) has recommended it on all new cars to prevent distraction-related accidents. In essence, by using AI to supervise the human, these systems add an extra guardian on the road – one that never gets tired or distracted – thereby significantly enhancing overall driving safety in vehicles that still rely on human oversight.