1. Object Recognition

AI-driven object recognition enables AR systems to identify and label real-world objects in real time, anchoring digital content to the environment. Modern computer vision models (e.g. convolutional neural networks and transformers) integrated into AR can detect and track a wide range of object types under varying conditions. This capability underpins AR applications from gaming (placing virtual items on recognized surfaces) to assistive tools (providing names or information about objects in view). The challenge is balancing accuracy and speed: AR demands near-instant recognition to maintain immersion, which AI algorithms are increasingly able to deliver on-device. Thanks to training on large image datasets and sensor fusion, AR object recognition has improved in robustness, handling different lighting or angles so that virtual overlays remain correctly attached to physical objects.

A 2024 study demonstrated that an AI-enhanced AR system could achieve high object detection performance even in challenging conditions. After extended training, the system reached about 85–86% detection accuracy on small objects in scenes with rain, comparable to clear conditions. This shows that AI can maintain strong object recognition in AR despite environmental noise. By using oriented bounding boxes and deep neural networks, the researchers improved average precision for detecting tiny objects in cluttered environments. Such results underscore how AI advances are boosting AR object recognition accuracy to levels suitable for reliable real-time use.

2. Scene Interpretation

Beyond individual objects, AI helps AR interpret whole scenes — identifying layouts, contexts, and relationships in the environment. This involves semantic segmentation (labeling regions as floor, wall, sky, etc.), spatial mapping, and context recognition (e.g. recognizing a kitchen versus an office). AI models can analyze visual data to construct a “scene graph” of the AR user’s surroundings, enabling more context-aware augmentations. For instance, an AR guide can understand it’s in a classroom setting and highlight the whiteboard or exits accordingly. By combining depth sensors and neural networks, AR devices achieve real-time scene understanding, recognizing surfaces for virtual content placement and interpreting environmental cues (like lighting or obstacles). This semantic comprehension allows AR to tailor its behavior to the scene — ensuring virtual objects interact believably with the real world (resting on tables, occluding behind walls) and providing relevant information about the overall context.

Vision-language AI models are beginning to give AR systems high-level scene understanding capabilities. In tests with diverse AR imagery, a state-of-the-art model could correctly identify and describe visible AR content with up to 93% true-positive rate for detection and about 71% true-positive rate for generating descriptive labels. This means the AI accurately recognized most virtual and real objects in complex scenes and was able to describe them in context. Such performance is noteworthy across varying scene complexities, indicating that modern AI can grasp the semantic context in AR settings. However, the same study noted that these models sometimes struggle with subtle context integration, revealing an ongoing challenge in achieving human-level scene interpretation. Nonetheless, the high detection rates showcase the potential of AI to reliably interpret AR scenes, paving the way for more context-aware and explanatory AR applications.

3. Gesture Recognition

AI-enabled gesture recognition lets users interact with AR using natural hand and body movements instead of controllers or touch screens. Computer vision algorithms (often leveraging deep learning) track a user’s hands, identifying gestures like swipes, pinches, or waves, which AR applications map to commands. This creates intuitive, touchless interfaces — for example, pinching fingers in mid-air to zoom a hologram or waving to switch modes. AI improves the robustness of gesture recognition by learning from large datasets of hand poses, enabling AR systems to recognize diverse gestures and even complex sign languages in real time. The result is a more immersive AR experience where users can seamlessly manipulate virtual objects as if they were real, using only their movements. Ensuring low latency and high accuracy is crucial; recent AI models have achieved impressive reliability in detecting gestures quickly enough to feel instantaneous.
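
To illustrate how a tracked hand pose might be mapped to a command, the sketch below checks for a pinch by comparing the thumb-to-index fingertip distance with the overall hand size. It assumes an upstream hand tracker already supplies 3D landmark positions; the landmark names (`wrist`, `middle_base`, `thumb_tip`, `index_tip`) and the 0.25 threshold are hypothetical choices for this example and are not taken from any particular AR SDK.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float, float]


def is_pinch(landmarks: Dict[str, Point], threshold: float = 0.25) -> bool:
    """Return True when thumb and index fingertips are close relative to hand size.

    Dividing by hand size keeps the test independent of how far the hand is
    from the camera. The landmark names and threshold are illustrative only.
    """
    hand_size = math.dist(landmarks["wrist"], landmarks["middle_base"])
    if hand_size == 0:
        return False  # degenerate tracking result, treat as "no gesture"
    gap = math.dist(landmarks["thumb_tip"], landmarks["index_tip"])
    return gap / hand_size < threshold


# Hypothetical landmark positions in metres.
open_hand = {
    "wrist": (0.0, 0.0, 0.0),
    "middle_base": (0.0, 0.10, 0.0),
    "thumb_tip": (-0.06, 0.08, 0.0),
    "index_tip": (0.02, 0.18, 0.0),
}
pinching = {
    "wrist": (0.0, 0.0, 0.0),
    "middle_base": (0.0, 0.10, 0.0),
    "thumb_tip": (0.00, 0.12, 0.0),
    "index_tip": (0.01, 0.13, 0.0),
}

print(is_pinch(open_hand))  # False
print(is_pinch(pinching))   # True
```

A learned classifier would replace the fixed threshold in practice. The rule-based version only shows the kind of signal such a model works from.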

Research published in 2024 reported high gesture recognition accuracy for AR interactions. One real-time system using a single wearable IMU sensor and neural networks was reported to recognize hand gestures with 95% accuracy. At that level, roughly 19 of every 20 gestures are correctly identified, a key milestone for dependable AR controls. Such performance was achieved by combining motion tracking and deep learning classification, showing that even with minimal hardware (just a wristband sensor) AI can interpret gestures reliably. In practice, this level of accuracy allows AR systems to respond correctly to the large majority of user hand signals, greatly improving the usability of AR interfaces. Continued improvements, including camera-based AI tracking multiple joints, are making gesture-based AR control both practical and highly responsive.

4. Personalization

AI enables AR experiences to be personalized to each user’s preferences, behavior, and context. By learning from a user’s past interactions and data, AI can tailor content — for example, an AR museum app might show exhibits likely to interest a particular visitor, or an AR shopping app could recommend items that fit the user’s style. Personalization makes AR more engaging and relevant: the system can adjust difficulty in an educational AR game to match the learner’s skill, or change an AR tour’s route based on what the user finds most interesting. This adaptive approach relies on AI models (like recommendation systems or user modeling algorithms) to predict what a user will find valuable. Importantly, AI-driven personalization in AR also considers context (time, location, weather) to provide information or visuals that suit the moment. By delivering a “custom-fit” AR experience for each individual, AI increases user satisfaction and the effectiveness of AR content. It turns one-size-fits-all AR applications into dynamic ones that evolve with the user.

Personalization has been shown to significantly influence user engagement in AR. In a 2024 user study for a cosmetics shopping app, 80.4% of users reported using the app’s AI-driven personalized product recommendations after trying its AR virtual try-on feature. In other words, a large majority of shoppers who experienced AR (viewing how makeup looks on them virtually) went on to interact with the tailored recommendations that AI provided. This suggests that AR can boost the uptake of personalized content — likely because the immersive try-on builds user trust and interest, making them receptive to customized suggestions. The study underscores AI’s role in linking AR engagement to user-specific outcomes: AR not only captured attention but also funneled users into personalized recommendation systems at a very high rate. Such findings imply that AI-driven personalization, when combined with AR’s interactive visuals, can enhance user experience and potentially conversion (in e-commerce or learning) far more than generic AR content.

5. Speech Recognition

Integrating AI-based speech recognition into AR allows users to control and interact with augmented reality content through voice commands. This makes AR applications more accessible and hands-free – a user can simply speak to search for information about an object they’re seeing or dictate a message while in an AR overlay. Modern speech-to-text AI (automatic speech recognition, ASR) systems have reached human-like proficiency in understanding spoken language, enabling AR assistants to reliably transcribe and respond to user queries in real time. For example, an AR wearable could display subtitles of conversations or translate spoken language on the fly (using recognized speech as input). AI handles challenges like accent, noise, and natural language understanding to ensure the AR system correctly interprets the user’s voice. As a result, speaking to one’s AR glasses or phone becomes as effective as tapping menus. This voice interface is especially valuable in situations where the user’s hands or eyes are busy – say, an engineer can ask AR glasses for specs while working, or a driver can get AR navigation updates via voice. Overall, AI-driven speech recognition makes AR interactions more natural and fluid, reducing reliance on touch or gaze controls.

Thanks to AI advances, speech recognition in recent years has achieved very high accuracy, though performance can vary by context. In controlled benchmarks, AI speech-to-text systems have reached word error rates around 5%, approaching human-level transcription accuracy. (For instance, Microsoft reported about 5.1% error on a standard Switchboard phone conversation task – meaning roughly 95% of words were correctly recognized.) In practical AR settings, however, noise and spontaneous speech can increase errors – studies of live lectures found error rates ranging from roughly 12% to 31% for different services. This indicates current speech recognition is extremely accurate under ideal conditions and still quite usable in more complex environments, though not perfect. Importantly for AR, ongoing AI improvements (including better noise robustness and on-device models) are steadily shrinking the gap. Already, devices like AR headsets can reliably execute voice commands and transcribe speech in real time with only a small chance of misinterpretation in quiet conditions. As AI models incorporate more contextual understanding and noise cancellation, we expect near-human speech recognition performance to become standard in AR across various real-world scenarios.
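
The word error rates quoted above follow the usual definition: substitutions, deletions, and insertions divided by the number of words in the reference transcript. A minimal sketch of that calculation, using a word-level edit distance, is shown below. The function name and the sample voice command are made up for illustration, and real evaluations typically apply more careful text normalization than the lowercasing used here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical AR voice command with one misrecognized word out of eight.
spoken = "show me the torque specs for this valve"
heard = "show me the torque specs for the valve"
print(word_error_rate(spoken, heard))  # 0.125
```

Because insertions count as errors, the rate can exceed 100% in principle, so "95% of words correct" is only an approximate reading of a 5% error rate.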

6. Semantic Understanding

AI gives AR systems a deeper semantic understanding of what the user is seeing, going beyond raw recognition to grasp meaning and context. This involves linking visual data with knowledge – for example, not just detecting a building in view, but knowing it’s a restaurant and retrieving its reviews, or recognizing that a combination of objects (knife, cutting board, vegetables) implies the user is in a cooking context. AI models like image captioners, scene classifiers, and knowledge graphs enable AR to interpret scenes at a conceptual level. Consequently, AR applications can provide more meaningful assistance: an AR assistant could answer complex questions about the scene (“Is this device safe to touch?”) or highlight relevant information (like showing nutritional info when you look at food). Achieving this requires multimodal AI – combining computer vision with natural language processing. Recent foundation models (e.g. CLIP, GPT-4 Vision) are adept at aligning images with text, which AR leverages to annotate scenes with rich descriptions or to understand spoken/written queries about the visual environment. In essence, semantic understanding empowers AR to not just see the world, but to comprehend it in human-like terms, enabling far more intelligent and context-aware interactions.
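
As a toy illustration of going from detected objects to an inferred context, the sketch below matches a set of object labels against small hand-written cue lists. This is only a stand-in for the learned models and knowledge graphs described above: the `CONTEXT_CUES` table, the labels in it, and the two-match threshold are all assumptions made for the example.

```python
from typing import Dict, Optional, Set

# Hypothetical cue table; a real system would learn or look up these associations.
CONTEXT_CUES: Dict[str, Set[str]] = {
    "cooking": {"knife", "cutting board", "vegetables", "pan"},
    "office work": {"laptop", "keyboard", "monitor", "notebook"},
}


def infer_context(detected: Set[str], min_matches: int = 2) -> Optional[str]:
    """Return the context whose cue set overlaps most with the detected objects.

    Returns None when no context reaches `min_matches` overlapping objects,
    so a single ambiguous object (a knife alone) does not trigger a guess.
    """
    best_context: Optional[str] = None
    best_count = 0
    for context, cues in CONTEXT_CUES.items():
        count = len(detected & cues)
        if count > best_count:
            best_context, best_count = context, count
    return best_context if best_count >= min_matches else None


print(infer_context({"knife", "cutting board", "vegetables"}))  # cooking
print(infer_context({"knife"}))                                 # None
```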

A notable 2023 advancement in this domain is Meta AI’s Segment Anything Model (SAM), which showcases how semantic understanding can be generalized. SAM was trained on an unprecedented dataset of over 1 billion segmentation masks across 11 million images, giving it the ability to segment and identify virtually any object in an image without prior training on that specific object. In evaluation, this AI model’s zero-shot performance on segmentation tasks was often on par with specialized, fully-supervised models. This means SAM can delineate objects and regions it has never seen during training, purely based on the concept of “objectness” it learned from massive data – a strong semantic capability. For AR, such a model can instantly pick out and label arbitrary items in the user’s view (from pets to furniture to tools) once prompted, providing a foundation for understanding novel scenes. The success of SAM points to the power of large-scale AI in endowing AR with flexible semantic understanding: rather than being limited to a predefined list of objects, AR systems can identify and work with virtually anything the user encounters, enhancing their knowledge about the environment in real time.

7. Real-time Translation

AI-driven translation is a game changer for AR, allowing live translation of text and speech within the user’s view. Imagine pointing your phone at a sign in a foreign language and seeing it instantly displayed in your native language, or wearing AR glasses that provide subtitles for someone speaking another language. AI makes this possible by combining computer vision (to detect and extract text or recognize speech) with machine translation models to convert between languages on the fly. In AR, the translation isn’t static: the system continuously updates the translated overlay as you move or as new text/speech appears, truly in real time. Modern translation AI, often neural machine translation, handles over a hundred languages and can preserve context and tone fairly well. AR enhances the effect by blending the translated text into the world – for example, matching the font style or background of the original sign so it looks natural. This creates an illusion that the world itself is speaking your language. The result is a powerful tool for travel, global collaboration, and accessibility: language barriers are reduced as AR provides instantaneous translation of menus, conversations, instructions, and more, all thanks to the underlying AI’s linguistic capabilities.

Real-world deployments of AR translation are already impressively capable as of the mid-2020s. Google’s AR Translate feature in Lens, for instance, can translate over 100 languages in real time on a smartphone. A user can hover their camera over printed text (like street signs or documents) and see the text re-rendered in a chosen language almost immediately. In early 2023, Google rolled out an update that uses AI to seamlessly blend translated text into the scene, so that the result preserves the look of the original sign or poster. This means the translated overlay does not stick out awkwardly; instead, it appears painted into the environment, greatly improving readability and user experience. Such advances are backed by robust machine learning – for example, using generative adversarial networks to match fonts and backgrounds. Furthermore, AR translation isn’t limited to text: AI speech recognition combined with translation now allows conversational AR translation, with one demo showing AR glasses displaying live-transcribed and translated subtitles for multilingual dialogue. These developments illustrate how far AI-driven AR translation has come: it’s fast, supports a vast array of languages, and is contextually aware enough to present results in an intuitive visual manner.

8. Enhanced Navigation

AI enhances AR navigation by providing more intuitive, context-rich guidance for moving through the world. Instead of following a flat map, users can see directional arrows and indicators overlaid on the real environment (e.g. an arrow on the sidewalk showing where to turn). AI contributes at several levels: it helps recognize landmarks and the user’s precise location via computer vision (visual positioning), it can predict the user’s route preferences, and it adjusts guidance dynamically (if you detour, it will reroute the AR overlay accordingly). Importantly, AI-backed AR navigation can increase safety and efficiency. For example, in driving or aviation, an AR heads-up display can highlight hazards or points of interest without the operator having to look down at instruments. Machine learning models can also learn from large amounts of navigation data to give smarter directions – like telling you to walk through a building to save time on a route if the AI knows it’s open and faster. AR navigation is also improving indoors: AI can interpret building layouts and signs to guide users inside malls or airports, where GPS is unreliable. By keeping navigational cues in the user’s line of sight and tailoring them to context (such as pedestrian vs. driving, weather conditions, etc.), AI-powered AR makes finding one’s way more natural and less error-prone.
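
The geometric core of an on-screen direction arrow can be sketched independently of the AI components: given the user's position and heading and the next waypoint, compute the compass bearing to the waypoint and the signed turn angle for the arrow. The example below uses the standard great-circle initial-bearing formula. The function names and the sample coordinates are assumptions for illustration, and a production system would take position and heading from visual positioning rather than from raw inputs like these.

```python
import math


def initial_bearing(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Compass bearing in degrees (0 = north, 90 = east) from point 1 to point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    east = math.sin(dlon) * math.cos(phi2)
    north = (math.cos(phi1) * math.sin(phi2)
             - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(east, north)) % 360.0


def arrow_rotation(user_heading: float, bearing: float) -> float:
    """Signed turn angle in [-180, 180); positive means the arrow points right."""
    return (bearing - user_heading + 180.0) % 360.0 - 180.0


# Hypothetical case: user faces north, next waypoint lies due east.
bearing = initial_bearing(0.0, 0.0, 0.0, 1.0)
print(round(bearing, 1))                       # 90.0
print(round(arrow_rotation(0.0, bearing), 1))  # 90.0, i.e. turn right
```

If the user detours, re-running the same two functions with the updated position and the new next waypoint is enough to re-aim the arrow.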

Studies show that AR displays can significantly improve navigation performance by keeping users more engaged with their environment. A 2023 experiment in maritime navigation found that adding AR heads-up overlays led navigators to spend far more time looking at their surroundings and less time looking down at instruments. Specifically, with AR assistance the head-down time was reduced by a factor of 2.67, and the number of times navigators glanced down at charts or screens dropped by 62% compared to traditional navigation without AR. This is a dramatic improvement: a 2.67-fold reduction means head-down time fell to roughly 37% of its baseline (1/2.67 ≈ 0.37), so navigators kept their “eyes out” on the real world for most of the time they would otherwise have spent looking down, which can translate to better situational awareness and faster reactions to real-world events. Alongside this increase in head-up attention, the study noted no loss in navigation accuracy or situational awareness scores, implying that the AR overlays provided needed info without distraction. For everyday users, similar benefits have been observed: AR walking directions help people orient themselves more quickly and confidently, reducing wrong turns. The data from controlled trials like the maritime study quantitatively underscores AI-assisted AR navigation’s value in making wayfinding more efficient and keeping the user’s focus on the path ahead.

9. Adaptive Learning Environments

AI is transforming AR-based learning by making it adaptive to each learner’s needs. In an AR educational environment, AI can act as a personal tutor: monitoring the learner’s progress, determining which concepts they struggle with, and adjusting the difficulty or style of content accordingly. For instance, in an AR chemistry lab simulation, if a student repeatedly makes a mistake, the AI might alter the scenario or provide additional hints in the AR view. This real-time feedback loop keeps learners in their optimal challenge zone – not too easy (which would be boring) and not too hard (which causes frustration). AI-driven adaptive learning can also decide what content to present next: after mastering one topic in AR, the system can introduce a new concept that builds on it, tailoring the curriculum path. By utilizing techniques like knowledge tracing and reinforcement learning, the AI estimates the student’s knowledge state and decides the best AR intervention (e.g. a quick quiz, a visual demonstration, or a game challenge). Studies have shown that such personalized instruction significantly improves learning outcomes. With AR’s immersive visuals and AI’s personalized guidance, students often feel more engaged and supported. Essentially, AI makes AR learning environments more like a human tutor that knows the student well – but one that can conjure interactive 3D examples and practice exercises in any setting.
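
One common form of knowledge tracing is Bayesian Knowledge Tracing, which keeps a running probability that the learner has mastered a skill and updates it after each answer. The sketch below shows that update and a simple rule for choosing the next AR intervention. The parameter values, the thresholds, and the mapping to interventions are assumptions chosen for illustration, not figures from any study cited here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BKTParams:
    """Illustrative Bayesian Knowledge Tracing parameters (values are assumed)."""
    p_learn: float = 0.15  # chance of learning the skill after one practice step
    p_slip: float = 0.10   # chance of answering wrong despite knowing the skill
    p_guess: float = 0.20  # chance of answering right without knowing the skill


def update_mastery(p_known: float, correct: bool,
                   params: BKTParams = BKTParams()) -> float:
    """Return the updated mastery probability after observing one answer."""
    if correct:
        evidence = p_known * (1 - params.p_slip)
        total = evidence + (1 - p_known) * params.p_guess
    else:
        evidence = p_known * params.p_slip
        total = evidence + (1 - p_known) * (1 - params.p_guess)
    posterior = evidence / total  # Bayes' rule given the observed answer
    # Account for the chance that the learner picked up the skill on this step.
    return posterior + (1 - posterior) * params.p_learn


def choose_intervention(p_known: float) -> str:
    """Map estimated mastery to an AR activity (thresholds are hypothetical)."""
    if p_known < 0.4:
        return "visual demonstration"
    if p_known < 0.8:
        return "quick quiz"
    return "game challenge"


mastery = 0.3
for answer in (True, True, False):
    mastery = update_mastery(mastery, answer)
    print(f"{mastery:.2f} -> {choose_intervention(mastery)}")
```

A reinforcement learning policy could replace the fixed thresholds in `choose_intervention`. The rule-based version keeps the example short.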

Adaptive learning platforms leveraging AI have reported substantial improvements in student performance. One notable result comes from the adaptive-learning company Knewton, which found that students’ test scores improved by 62% when using its AI-powered personalized learning program, compared to students who didn’t use the adaptive system. This large gain (nearly a two-thirds increase) illustrates the effectiveness of tailoring instruction to the individual. Although this statistic isn’t specific to AR, it highlights the impact of AI-driven adaptation in education. In the context of AR, one can expect similar or even greater benefits, as AR’s engagement combined with AI personalization can address different learning styles (visual, kinesthetic, etc.) more directly. Early trials of AI-AR integrations in classrooms have noted improved retention and reduced time to mastery for complex spatial topics, aligning with Knewton’s findings of higher achievement. The 62% jump in scores suggests that when learners receive content and support uniquely suited to them – something AI excels at – their understanding deepens significantly. As AR adaptive learning systems become more common, such statistics bode well for a future where education is not only immersive but also intelligently responsive to each learner.

10. Visual Enhancement

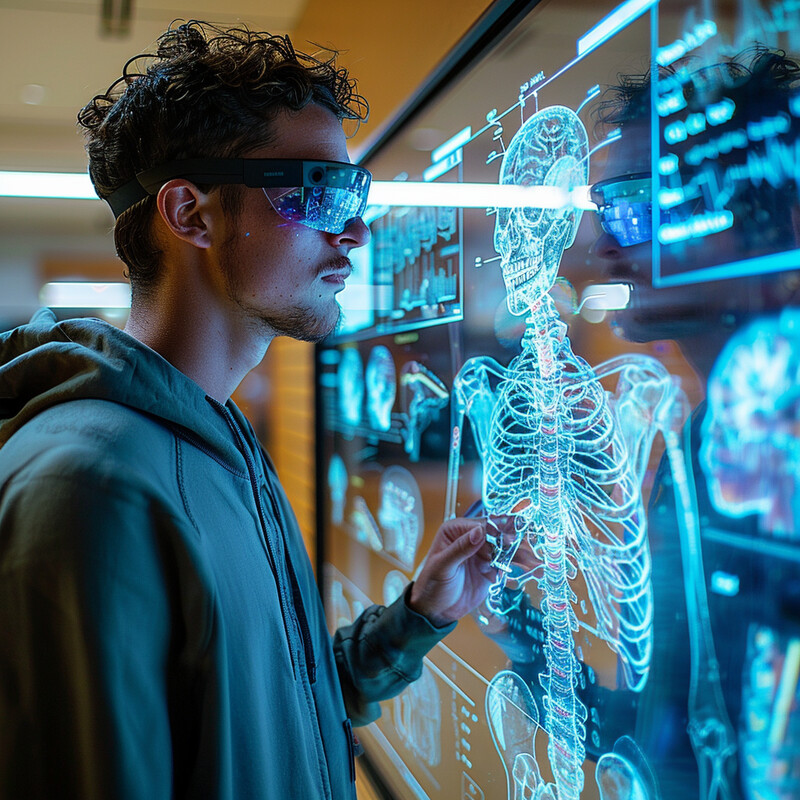

AI-powered AR can act as a visual assistive tool, enhancing or augmenting human vision in ways that improve perception and decision-making. For users with visual impairments, AR can highlight important features (outlining obstacles or reading text aloud), effectively compensating for lost vision. In professional settings, AR helmets or glasses can enhance vision by fusing different spectra – for example, overlaying thermal camera feeds or night vision onto the normal view, with AI picking out key hotspots or targets. AI also enables real-time image enhancement in AR: adjusting contrast, zoom, or clarity of details that the user might otherwise miss. A surgeon, for instance, might use an AR overlay that highlights blood vessels or tumors based on AI analysis of the imaging data. Similarly, a car’s AR windshield could identify and spotlight pedestrians in darkness. The AI’s role is to interpret raw sensor input and decide what visual modifications help the user most – whether it’s magnifying distant text, filtering out haze, or tracking a fast-moving object and annotating it. Essentially, AI in AR visual enhancement acts like a smart guide for vision, providing an annotated or clarified view of the world. This not only benefits those with low vision, but anyone who needs “superhuman” sight in certain tasks (pilots, surgeons, soldiers, etc.), by presenting information in the visual field that would be otherwise invisible or hard to discern.

An AI-assisted augmented reality microscope highlights a cancer region (green outline) on the live view, aiding in diagnosis.

In medical contexts, visual-enhancement AR systems have achieved impressive accuracy and precision. A recent experiment integrating AI with AR for skin cancer surgery reported a tumor segmentation accuracy of 95.5% (for distinguishing benign vs. malignant tissue) and an average error of only 0.64 mm in projecting guidance overlays onto the patient. In practice, this means the system’s virtual cut-line suggestions almost exactly matched the true tumor boundaries, within a sub-millimeter margin. The surgeons used the AR projections during more than 100 operations and were able to resect tumors with a high degree of confidence and completeness. Another example is the augmented reality microscope (ARM) developed with the U.S. Department of Defense: in trials, pathologists assisted by the ARM’s AI-driven highlights showed higher accuracy and speed in identifying cancerous cells than unaided review. These outcomes demonstrate how AI-enhanced AR can significantly improve visual tasks, from guiding surgeons to remove all cancerous tissue while sparing healthy tissue, to helping experts spot subtle anomalies faster. The combination of AI’s analytical prowess with AR’s real-time visualization yields a powerful diagnostic and decision-support tool, essentially boosting human vision with machine intelligence for better outcomes.