1. Automated Image Generation

AI-driven image generation enables the automatic creation of artwork from text descriptions or other inputs. This technology gives artists and designers rapid ways to visualize concepts and iterate on ideas, dramatically shortening the concept art and prototyping process. Benefits include expanded creativity – artists can explore countless variations or styles that would be time-consuming manually – and greater accessibility, as non-experts can produce complex images through simple prompts. AI image generators (like DALL·E, Midjourney, and Stable Diffusion) have been integrated into creative workflows, augmenting human creativity rather than replacing it. Implications of this widespread use include debates over originality and authorship of AI-assisted art, as well as the need to address ethical issues like bias or misuse, but overall the tools are being embraced as powerful assistants in visual art creation.

Adoption of generative image AI has been extremely rapid in recent years. By mid-2023, over 15 billion images had been created using popular text-to-image platforms (Stable Diffusion, Firefly, Midjourney, DALL·E), a volume on the order of one-third of all images ever uploaded to Instagram. Creative professionals have incorporated these tools at high rates – a late-2023 survey found that 83% of creatives globally use generative AI in their work, with U.S. creatives showing an 87% adoption rate. In fact, image creation is the top use case: American creatives reported using generative AI for 78% of the images they produce. Mainstream design software now includes AI image generation (for example, Adobe’s Firefly system, which by 2025 had generated over 22 billion image assets), highlighting how quickly these tools have become standard. This explosion in usage illustrates AI’s practical impact on visual art, enabling faster content creation and unprecedented scalability in image production.

2. Style Transfer

Style transfer is an AI technique allowing the style of one image (e.g. a painting’s brushstrokes or color palette) to be applied to the content of another image. This lets artists experiment with transforming their photos or drawings into the look of various art masters or styles in seconds. AI style transfer tools thus provide a fast way to reimagine artwork in different aesthetics – for example, a digital illustration can be rendered in Van Gogh’s or Picasso’s style without manual repainting. Benefits include creative exploration and the ability to prototype different visual treatments quickly. However, implications for the art community are significant: while it opens new avenues for creativity and mashups, it also raises ethical concerns about appropriating living artists’ styles without permission. Many illustrators feel these tools only partially capture their unique style – AI can mimic surface elements but often misses the deeper “voice” or originality of an artist. Consequently, style transfer is viewed both as an inspiring creative aid and, when misused, a potential threat to artists’ intellectual property.
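
One way to see why these tools pick up surface elements more readily than an artist's deeper "voice" is the classic neural style transfer formulation, in which style is summarized as correlations between feature channels (a Gram matrix) and spatial layout is discarded. The article does not say which method any particular tool uses, so treat the following as an assumed illustration: the function names are hypothetical, and the tiny feature maps are made-up numbers standing in for activations from a pretrained network.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    features: list of C channels, each a flat list of N activations.
    Returns a C x C nested list of dot products divided by N.
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
            for ci in features]


def style_loss(feats_a, feats_b):
    """Mean squared difference between the two Gram matrices."""
    ga, gb = gram_matrix(feats_a), gram_matrix(feats_b)
    c = len(ga)
    return sum((ga[i][j] - gb[i][j]) ** 2
               for i in range(c) for j in range(c)) / (c * c)


# Toy feature maps: 2 channels, 4 spatial positions (made-up numbers).
style = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
# Same activations shifted by one position: layout differs, statistics match.
shifted = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]]
# A map where only the first channel ever fires: different statistics.
flat = [[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]

print(style_loss(style, shifted))  # 0.0
print(style_loss(style, flat))     # 0.125
```

Because the loss is zero for the shifted map, this measure of style cannot tell where things are, only which textures co-occur. That is roughly the gap illustrators describe between copied fragments and a coherent personal style.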

Recent studies highlight both the advancements and challenges of style transfer in practice. Technical progress has made style transfer more efficient – one 2025 experiment achieved a 76% reduction in processing time for neural style transfer, enabling near-real-time application of painterly effects without sacrificing quality. This improvement means artists can use style filters live, even on mobile devices, greatly expanding practical use cases. On the other hand, a 2025 human-centered study found that professional illustrators recognized AI’s ability to copy certain aesthetic fragments of their work but noted it still lacked the subtle “emergent” qualities that define their personal style. The same study raised ethical flags: the unauthorized replication of an illustrator’s signature style by AI was seen as problematic, underscoring concerns about consent and credit in creative labor. These findings show that while AI style transfer is technologically maturing and widely used in design (e.g. many photo apps now offer style filters), there is an ongoing conversation about respecting artists’ rights and the nuanced limits of what current style transfer can achieve creatively.

3. Colorization

AI-based colorization tools automatically add plausible color to grayscale images, such as old photographs or sketches. This capability is transforming workflows in photography, film restoration, and digital art by saving artists significant time. Instead of manually painting colors onto each region of an image (a tedious process), modern AI models learn from vast datasets of color images and can predict realistic colors for a black-and-white input. For artists, this means quickly exploring different color schemes on a drawing or previewing how a black-and-white concept might look in full color. In filmmaking and heritage preservation, AI colorization brings new life to historical archives – for example, turning century-old black-and-white footage into color can make it more vivid and relatable to contemporary audiences. The benefit is efficiency and creative flexibility, but a key implication is the need for human guidance: these algorithms can colorize in a generic way, but ensuring historical accuracy of colors often requires an expert’s input (to avoid miscoloring artifacts, clothing, etc.). Thus, AI colorization is typically used as a powerful assistive tool – automating the heavy lifting of coloring while artists or historians fine-tune the results for authenticity.
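
A common way to frame the prediction task (an assumption here, since the article does not name a color model) is to keep the grayscale value as luminance and predict only two chroma channels per pixel. The sketch below recombines luminance with chroma using the standard full-range BT.601 YCbCr-to-RGB conversion used by JPEG; the "predicted" chroma values are hand-picked stand-ins for a model's output, and `colorize` is a hypothetical helper name.

```python
def clamp(value):
    """Round to the nearest integer and clip to the 0..255 byte range."""
    return max(0, min(255, int(round(value))))


def ycbcr_to_rgb(y, cb, cr):
    """Full-range BT.601 conversion (the variant used by JPEG/JFIF)."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return (clamp(r), clamp(g), clamp(b))


def colorize(gray_pixels, predicted_chroma):
    """Pair each grayscale value with a (Cb, Cr) guess and convert to RGB."""
    return [ycbcr_to_rgb(y, cb, cr)
            for y, (cb, cr) in zip(gray_pixels, predicted_chroma)]


gray = [100, 100]                    # two pixels with identical brightness
chroma = [(128, 128), (100, 110)]    # neutral chroma vs. a greenish guess
result = colorize(gray, chroma)
print(result)  # [(100, 100, 100), (75, 122, 50)]
```

The two pixels start out indistinguishable in grayscale, which is why the same input admits many plausible colorings and why an expert's hint about the true color still matters.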

In the past two years, AI colorization has moved from experimental to practical application at scale. In 2023, researchers at Graz University of Technology (Austria) unveiled a system called RE:Color that can efficiently colorize old films with minimal manual intervention. The project developed an AI algorithm now integrated into restoration software, enabling largely automatic coloring of historical footage while still allowing user control for accuracy. This approach proved successful for archival film: an operator can specify the true colors on a single frame (for example, indicate that a soldier’s uniform should be olive green) and the AI will propagate those colors throughout the scene, frame by frame. The result is a realistic colorization that previously would have required painstaking hand-coloring. On the consumer side, AI colorization has also seen viral popularity. Back in 2020, a genealogy platform’s AI tool for colorizing family photos passed 1 million colorized images within five days of its launch, demonstrating public appetite for reviving black-and-white pictures. Today (2023–2025), major photo-editing programs like Adobe Photoshop include one-click colorize filters, and even smartphone apps offer instant colorization of old photos, reflecting how AI has turned what used to be a highly specialized task into an accessible, everyday capability.

4. Music Composition

AI has become a collaborative partner in music composition, capable of generating melodies, chord progressions, or entire songs with minimal human input. Using techniques like deep neural networks trained on large collections of music, AI systems can compose in various styles – from classical pieces to pop beats – often in a matter of seconds. This assists musicians by providing a wellspring of ideas: for instance, a composer might use an AI-generated melody as a starting point or have AI suggest harmonies to flesh out a song. Benefits include increased productivity (songwriters can quickly get past “writer’s block” by letting AI propose material) and personalized music creation for content like video games, where adaptive AI-generated music can respond to gameplay. AI music tools also empower non-musicians to create original music for their projects (like background scores for videos) without extensive theory training. However, the rise of AI in music has implications such as questions of authorship and royalty rights for AI-produced works, and concerns among human artists about fair compensation and originality. Overall, in 2023–2025 we see AI composition being used not to replace artists, but to augment creative workflows – often the musician curates or edits AI outputs to fit their artistic vision, treating the AI like an assistant that can rapidly draft musical ideas.
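
To make "an AI-generated melody as a starting point" concrete, here is a deliberately simple stand-in: a first-order Markov chain that learns which note tends to follow which in a short example and then samples a new sequence. This is not the deep-network approach described above, and the training melody and function names are invented for illustration; it only shows the learn-then-sample loop on a scale small enough to read.

```python
import random
from collections import defaultdict


def train(melody):
    """Record, for every note, the notes that followed it in the example."""
    table = defaultdict(list)
    for current, following in zip(melody, melody[1:]):
        table[current].append(following)
    return table


def generate(table, start, length, seed=0):
    """Sample a melody by repeatedly picking one observed successor."""
    rng = random.Random(seed)  # fixed seed so a draft can be reproduced
    note, out = start, [start]
    for _ in range(length - 1):
        # If a note was never followed by anything, jump to any known note.
        choices = table.get(note) or list(table)
        note = rng.choice(choices)
        out.append(note)
    return out


example = ["C", "D", "E", "C", "E", "G", "E", "D", "C"]
draft = generate(train(example), start="C", length=8)
print(draft)
```

Changing `seed` yields a different draft from the same training material, which mirrors the workflow in which a musician asks for several candidates and keeps the one worth editing.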

The music industry has witnessed an explosion of AI-generated content and significant uptake of AI tools. An industry report found that in 2024, roughly 60 million people – about 1 in 10 consumers – had used generative AI to create music or lyrics. These range from hobbyists crafting songs with AI apps to professional producers using AI for inspiration and sound design. On streaming platforms, AI-composed music now represents a noticeable share: by early 2023, users of the AI music service Boomy had created over 14 million songs, which accounted for about 14% of the world’s recorded music tracks by volume. (This surge even led Spotify to remove tens of thousands of AI-made songs amid concerns about artificially inflated stream counts.) The adoption is not limited to amateurs – major media companies are embracing AI for efficiency. For example, video game studios have used AI to generate dynamic game soundtracks, and in 2023, the first album partly co-composed by AI debuted on classical music charts. In the U.S., legislative and industry discussions are underway about copyright and royalties for AI music, but many artists already see AI as a creative aid: surveys indicate that about 30–50% of music producers had experimented with AI tools by 2024. This momentum is further evidenced by investment in the sector – AI music startups have collectively raised hundreds of millions of dollars, betting on a future where AI plays an integral role in music creation rather than remaining a novelty.

5. Dynamic Brush Tools

“Dynamic brush” tools refer to AI-enhanced digital brushes that intelligently respond to the artist’s actions or context, making digital painting and drawing more intuitive. Traditional digital brushes already react to basic inputs like stylus pressure or tilt, but AI-powered brushes go further – for example, by adjusting their texture or shape in real time to mimic natural media or to follow the user’s sketch intent. In practice, this means an artist can scribble a rough line and an AI-assisted brush might refine it into a stroke with realistic oil paint texture or even auto-complete part of a sketch. The benefit is a more fluid creative process: artists get some of the “feel” of real-world painting (with organic, dynamic effects) while also gaining new capabilities (such as a brush that lays down intricate patterns procedurally). These tools can save time (by automating repetitive stroke details) and lower skill barriers (novices can achieve sophisticated effects). One implication is the evolving role of the artist – with AI brushes, the artist often guides the outcome at a high level while the tool fills in detail, which blurs the line between manual and algorithmic creation. Nonetheless, artists retain control: they can accept or adjust the AI’s suggestions, using these brushes as cooperative instruments that enhance human creativity rather than replace it.

Several AI-driven painting tools launched recently demonstrate how dynamic brushes are being used. For instance, NVIDIA’s Canvas application allows users to sketch with broad “material” brushes (like a stroke labeled “sky” or “rock”), and the AI instantly turns those strokes into photorealistic landscape elements – essentially the brush paints in context, producing detailed scenery from a simple line. This kind of AI-assisted brush dramatically speeds up concept art; artists can create a realistic background by doodling shapes and letting AI interpret them. Another example is the 2024 app SageBrush, which offers an interactive AI painter: as a user draws, the AI can automatically transform rough outlines into more elaborate imagery (for example, turning a stick-figure pose into a shaded figure). In SageBrush, creators can dial the AI’s influence up or down, effectively controlling how much the “brush” improvises versus follows the original drawing. Early user feedback from such tools notes that they enable a “co-creation” experience – one digital artist described working with an AI brush as similar to a jazz improv partner, where the tool may fill in flourishes the artist didn’t explicitly draw. While still emerging, these AI brushes are increasingly being integrated into mainstream software (Adobe has previewed “neural filters” that can adjust brushstroke style on the fly), pointing toward a near future where much of the tedious detailing in digital art can be handled by intelligent brush algorithms trained on artistic techniques.

6. Content-Aware Fill

Content-aware fill refers to AI-powered image editing tools that intelligently remove or add elements in an image by filling in the area in a context-aware manner. In photo editing, this means you can erase an unwanted object (like a tourist in the background or a power line across the sky), and the software will automatically synthesize new pixels to replace it, blending with the surrounding content as if the object was never there. Initially introduced in tools like Adobe Photoshop over a decade ago, content-aware fill has greatly improved with advanced AI – modern versions understand patterns, textures, and even semantic context, enabling not just object removal but also expansion of images (so-called “outpainting” or generative fill). The benefit is a drastic reduction in manual retouching work; tasks that once required skilled cloning and patching can now be done with a single command. For artists and photographers, this speeds up editing workflows and allows more creative flexibility (they can quickly try compositional changes or composite images seamlessly). Implications include the democratization of high-end image editing (even casual users can now achieve results that previously took expert skills) and a conversation about trust in images – as these tools make it trivial to alter reality in a photo, viewers have to be more cautious about authenticity. Overall, content-aware fill has become a standard feature that exemplifies AI augmenting human capabilities in digital art by handling the “boring” parts of cleanup and allowing creators to focus on their vision.
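
The core idea of "synthesizing new pixels that blend with the surroundings" can be sketched with a much older and simpler technique than the generative models described above: diffusion-style inpainting, where each missing pixel is repeatedly replaced by the average of its neighbors. This is a toy illustration under stated assumptions, not how Photoshop or any other product implements its fill, and `inpaint` is a hypothetical function name.

```python
def inpaint(image, mask, iterations=200):
    """Fill masked pixels by repeated neighbor averaging.

    image: 2D list of floats.
    mask:  2D list of bools, True where a pixel is missing.
    Known pixels are never modified; only the hole is smoothed in.
    """
    h, w = len(image), len(image[0])
    img = [[0.0 if mask[y][x] else image[y][x] for x in range(w)]
           for y in range(h)]
    for _ in range(iterations):
        nxt = [row[:] for row in img]
        for y in range(h):
            for x in range(w):
                if not mask[y][x]:
                    continue
                neighbors = [img[ny][nx]
                             for ny, nx in ((y - 1, x), (y + 1, x),
                                            (y, x - 1), (y, x + 1))
                             if 0 <= ny < h and 0 <= nx < w]
                nxt[y][x] = sum(neighbors) / len(neighbors)
        img = nxt
    return img


# A left-to-right gradient with a 3x3 hole punched in the middle.
image = [[float(x) for x in range(5)] for _ in range(5)]
mask = [[1 <= y <= 3 and 1 <= x <= 3 for x in range(5)] for y in range(5)]
filled = inpaint(image, mask)
print(round(filled[2][2], 3))  # 2.0
```

Averaging recovers smooth gradients such as a clear sky but cannot invent texture or objects, which is the gap that pattern-aware and generative fill methods are meant to close.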

Usage of AI-powered fill tools has skyrocketed as their realism has improved. Adobe’s Generative Fill (an AI-enhanced version of content-aware fill introduced in Photoshop 2023) saw unprecedented adoption – Adobe reported that within months of release, Generative Fill had a 10× higher adoption rate among users compared to previous new features. By late 2023, Photoshop’s user base had used this AI fill to generate many millions of image edits, contributing to over 3 billion AI-generated images produced with Adobe’s Firefly model in just the first few months of its beta. Photographers now routinely use content-aware fill to do things like remove blemishes or distractions (for example, a wedding photographer might delete anachronistic exit signs in the venue with a click). Even smartphone apps have joined in – Google’s Magic Eraser on Pixel phones uses a form of content-aware fill to let users remove photo-bombers from shots, and this feature was made available to Google Photos users broadly in 2023, indicating mainstream demand. Case studies show significant time savings: one design firm reported cutting image cleanup time by up to 80% after integrating AI fill for product photos. The flip side is that doctored images are easier to create; in 2024, a few high-profile “hoax” images (such as a fake expansion of the Mona Lisa beyond its actual frame, made with generative fill) went viral, underscoring how convincing the technology has become. In response, companies are also developing tools to detect AI-edited images. Nevertheless, content-aware fill remains a beloved feature among creatives – a survey in 2025 found it ranked among the top 3 most-used AI tools by graphic designers, who praise how it seamlessly blends edits into the original.

7. Pattern and Texture Generation

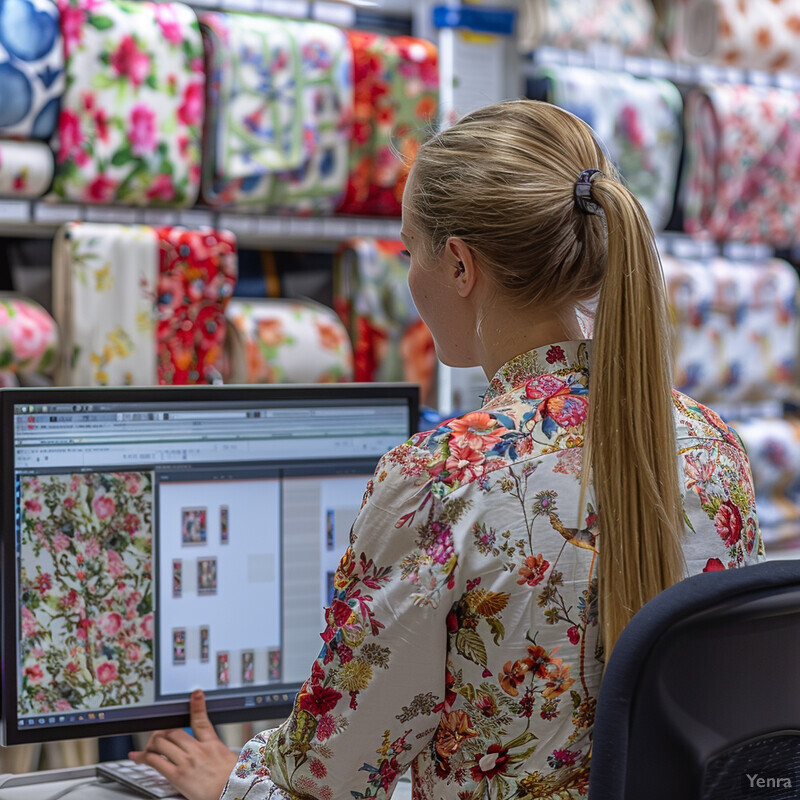

AI is increasingly used to generate novel patterns and textures, providing artists and designers with an essentially limitless library of digital materials. In fields like graphic design, fashion, interior design, and game development, creators often need repetitive patterns or surface textures (e.g. fabric prints, wallpapers, 3D object surfaces). AI models, especially generative adversarial networks (GANs) and diffusion models, can be trained on examples of patterns or textures and then produce new variations that maintain a coherent style. This means a textile designer, for instance, could use AI to instantly create dozens of colorfully intricate pattern ideas to print on fabric, far faster than sketching them manually. The benefits include speed and variety – AI can suggest designs one might not have thought of, spurring creativity. It also allows customization: a video game artist can generate a seamless texture that matches a specific aesthetic on demand, rather than searching through stock libraries. Implications involve shifts in the design process: rather than drawing every element from scratch, designers curate AI outputs, picking or tweaking generated patterns to finalize them. There is also a positive sustainability angle in industries like fashion – AI can help explore designs digitally (reducing physical sampling) and perhaps revive traditional motifs by blending them with modern styles. However, designers remain crucial in steering the AI (providing reference styles, selecting pleasing outputs, and ensuring the pattern fits the project’s needs). In summary, AI pattern generation serves as a smart assistant that expands the creative toolbox with endless visual possibilities.
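
A "seamless" texture is one whose opposite edges line up when the tile is repeated, which is a constraint that generated textures have to satisfy before they can be used on large surfaces. The sketch below measures the jump across the wrap-around seams and applies a classical, non-AI fix (mirroring the tile) purely to make that constraint concrete; both function names are hypothetical, and the tile is a tiny grid of made-up brightness values.

```python
def seam_error(tile):
    """Largest brightness jump across the seams when the tile repeats."""
    horizontal = max(abs(row[-1] - row[0]) for row in tile)
    vertical = max(abs(a - b) for a, b in zip(tile[-1], tile[0]))
    return max(horizontal, vertical)


def make_seamless(tile):
    """Mirror the tile left-right and top-bottom so opposite edges match."""
    top = [row + row[::-1] for row in tile]
    return top + top[::-1]


raw = [[0, 1, 2],
       [3, 4, 5]]
print(seam_error(raw))                 # 3
print(seam_error(make_seamless(raw)))  # 0
```

Mirroring guarantees matching edges at the cost of visible symmetry, so a check like `seam_error` is more useful as an acceptance test on generated candidates than as a design method.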

The use of AI in pattern and texture creation is already yielding impressive results in real-world design workflows. In the fashion industry, for example, startups have deployed AI systems that dramatically accelerate print design for textiles. The CEO of one such company, MYTH AI, noted that their generative model can produce “hundreds of unique patterns in seconds,” far outpacing traditional designers, and each AI-generated pattern is checked to be at least 70% original to avoid simply copying existing designs. These AI-created patterns, backed by a diversity of training data, allow fashion brands to iterate through ideas at high speed, with the designer curating which outputs align with their brand’s style. Major software firms are also incorporating AI for patterns: in late 2023 Adobe introduced a Text-to-Vector AI in Illustrator that can generate vector graphics and repeating patterns from a text prompt, enabling graphic designers to instantly create new background designs or geometric motifs. Video game developers report using GAN-based texture generation to create realistic material surfaces (like wood grain or sci-fi metal panels) on the fly, reducing the need for hand-painted textures. A 2024 academic survey on computer-aided colorization and texturing noted that merging AI vision with graphics has opened “a wide range of applications including restoring original colors for legacy films and creating novel patterns for fashion and UI design”. In the U.S., some large retail fashion brands have begun piloting AI-generated prints in their collections – during New York Fashion Week 2024, a few designers quietly incorporated AI-generated fabric prints into their garments, treating AI as a part of the creative team. This evidence from industry and research underscores that AI pattern generation is not speculative; it’s actively improving productivity and creative breadth in design studios today.

8. Voice Synthesis

AI voice synthesis technology enables the generation of human-like speech from text, and even the cloning of specific voices. In the creative realm, this means AI can produce voice-overs or character voices without needing the speaker to physically record every line. Filmmakers, game developers, and animators are using AI-generated voices to prototype or even finalize dialog, especially when needing voices that aren’t readily available (for example, voicing numerous minor characters in a video game, or having a narrator voice in many different styles). High-quality AI voices now capture not just words but also intonation, emotion, and accents with remarkable fidelity. The benefits include cost and time savings – instead of scheduling a voice actor for every minor change, creators can type new lines and instantly get audio – and new creative flexibility, like adjusting the delivery (tone, pacing) via software or creating voices for fantastical creatures that no human could naturally produce. One important implication is the ethical and legal dimension: using someone’s voice model (especially a famous actor’s) requires permission, and the entertainment industry is developing guidelines to address voice cloning. However, many voice actors are also leveraging AI themselves, using it to modulate their voice or expand their range. Overall, AI voice synthesis in 2023–2025 is becoming a mainstream tool in content creation, allowing for efficient dialogue generation and localization (dubbing in different languages using the same “voice”) while prompting necessary conversations about consent and authenticity.

AI voice synthesis has advanced to the point of being used in high-profile productions and adopted widely by creatives. One notable case was in 2022, when the voice of Darth Vader in the Disney+ series Obi-Wan Kenobi was generated by an AI system (Respeecher) rather than performed live – the 91-year-old original actor James Earl Jones gave Disney permission to use AI to recreate his iconic 1970s Vader voice, which the AI did by training on his past recordings. Audiences heard a convincingly youthful Vader, demonstrating how AI can extend an actor’s vocal presence beyond what their current voice can do. Beyond film, AI voice tools have been rapidly adopted in media and business. By late 2024, one leading AI voice platform (ElevenLabs) reported its technology was being used by 41% of Fortune 500 companies for applications like audiobooks, video narration, and game development. The startup’s annual revenue grew from an estimated $25 million in 2023 to $90 million in 2024 on the strength of demand for AI voices, highlighting how quickly this tech is scaling. In the video game industry, studios are now using AI to generate ambient NPC dialogue and battle cries, reserving human voice actors for main characters – a single RPG released in 2023 featured over 100 hours of AI-generated incidental dialogue, audio that would have been impractical to record manually. Meanwhile, concerns about deepfakes have prompted new regulatory efforts (for instance, several U.S. states in 2024 proposed laws against using AI to impersonate someone’s voice without disclosure). Still, the creative use of AI voice synthesis continues to grow. A survey of media production professionals in 2025 found that 68% had tried AI voice tools for content creation, with most saying the quality was sufficient for at least rough cuts or placeholder dialogue, and about 20% using AI voices in final released projects (often for minor roles or narration). This trend shows how AI voices are becoming an everyday element of the creative toolkit, much like stock music or stock imagery – useful when used appropriately, but warranting careful ethical use.

9. Choreography Assistance

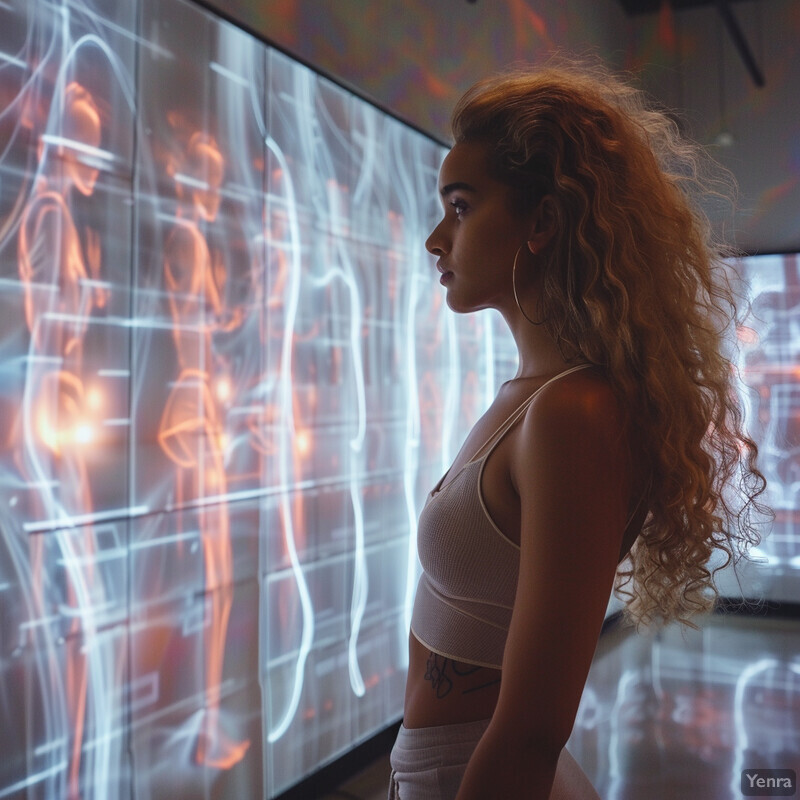

AI is emerging as a tool to assist choreographers in creating dance sequences, by analyzing music and suggesting movements or entire choreography drafts. Just as AI can generate text or images, researchers have developed models that generate human-like dance motions aligned to a given song’s rhythm and mood. In practical terms, a choreographer can input a piece of music and have the AI propose a set of dance moves or patterns – which the choreographer can then accept, modify, or use for inspiration. This can jump-start the creative process, offering fresh movement ideas that the choreographer might not have conceived alone. Benefits include efficiency (exploring many concepts quickly) and overcoming creative block by collaborating with an “AI dance assistant.” Some systems allow partial edits – for example, a choreographer can design part of a dance (say, a specific arm movement) and the AI will fill in the rest of the body’s motion in a realistic way, similar to an auto-complete for dance. The implications are intriguing: dance makers maintain creative control but gain a partner that never tires of brainstorming new sequences. It also democratizes choreography to a degree; dancers without extensive composition training could use AI tools to generate starting routines. However, much like other creative AI applications, there are discussions about authorship and style – an AI may not fully capture a choreographer’s personal style or the cultural nuances of certain dances, so human oversight and refinement remain crucial. Overall, AI choreography tools are seen as an extension of the choreographer’s toolkit, offering suggestions and possibilities that can be molded by human artistry.

In 2023, a Stanford University team introduced an AI system called EDGE (Editable Dance Generation) that illustrates the potential of choreography assistance. EDGE generates 3D dance animations for any input music and allows users to tweak the results – for instance, a user can adjust a dancer’s leg position, and the AI will intelligently re-compose the rest of the movement to be physically plausible and on beat. In evaluations, some of EDGE’s AI-crafted dance routines were so coherent that study participants preferred them over routines designed by human choreographers in certain cases. This is a remarkable indication of AI’s progress in capturing human-like expression through movement. On the live arts front, renowned choreographer Wayne McGregor has been using an AI-driven tool (developed with Google and called AISoma) that was trained on 25 years of his dance archives. He uses it to generate new choreography options in his style – in one project, the AI helped create unique variations of a piece that change with each performance, effectively co-choreographing with McGregor in real time. Furthermore, other dance artists are integrating audience interaction with AI: in an experimental performance in 2024, choreographer Alexander Whitley employed an AI system to respond to audience members’ movements and incorporate them into the on-stage dance via avatars. These examples show that from research labs to professional dance companies, AI is actively contributing to choreography. Early metrics are promising – one dance company reported that using an AI suggestion tool cut down the time to develop a new routine by about 50%, as it could generate skeleton sequences that choreographers then refined. As of 2025, at least a few live performances and films have credited AI in a choreography assistant role, marking the start of a trend where human and artificial creativity intertwine in the dance world.

10. Interactive Storytelling

AI is enhancing interactive storytelling by allowing narratives (in games, simulations, or interactive fiction) to dynamically adapt to user choices and even generate story content on the fly. Traditionally, choose-your-own-adventure stories or narrative games rely on pre-written branches. AI, especially large language models, can instead improvise plot developments, dialogue, and world details in real time in response to a player’s actions or requests. This creates a more personalized and seemingly boundless story experience – the story can go in directions not scripted in advance. The benefit is a new level of immersion: users feel like they are co-creating the story, because the AI will accept almost any input and continue the narrative from there. We’ve seen this in AI text adventure games, where players type any action and the AI narrative engine responds with unique outcomes. Such systems serve as infinitely flexible dungeon masters or storytellers. In design, this offers writers a tool to generate content variations and test storylines with less manual effort. The implications include a shift in how narrative content is created: it becomes a collaborative process between human designers (who set initial characters, settings, and maybe high-level plot arcs) and the AI (which fleshes out moment-to-moment events and reacts to the user). It raises questions of quality control – AI can sometimes produce inconsistent or less coherent storylines if not guided – so human oversight and editing remain important. Nonetheless, interactive AI narratives point toward entertainment experiences that are never the same twice, blurring the line between author and audience as both participate in story generation.
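
The division of labor described above (a designer fixes the premise, the model continues from whatever the player types) can be sketched as a small turn loop. Everything here is hypothetical: `Story`, `play_turn`, and the stub generator are illustrative names, and `stub_model` stands in for a call to a real language model so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Story:
    premise: str                          # written by the human designer
    history: List[str] = field(default_factory=list)

    def prompt(self, action: str, max_turns: int = 6) -> str:
        """Premise, then the most recent lines, then the new player action."""
        recent = self.history[-max_turns:]
        return "\n".join([self.premise, *recent, f"> {action}"])


def play_turn(story: Story, action: str,
              generate: Callable[[str], str]) -> str:
    """Ask the generator to continue the story, then record both lines."""
    text = generate(story.prompt(action))
    story.history += [f"> {action}", text]
    return text


def stub_model(prompt: str) -> str:
    """Placeholder for a language model: echoes the last player action."""
    last_action = prompt.splitlines()[-1][2:]
    return f"The world reacts as you {last_action}."


story = Story("You stand at the mouth of a cave.")
print(play_turn(story, "light a torch", stub_model))
# The world reacts as you light a torch.
```

Always sending the premise together with the recent history is one simple guard against the incoherence mentioned above; longer stories would need a summary of earlier events in place of the dropped lines.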

The impact of AI in interactive storytelling is evident from both the popularity of AI-driven story games and new experimental projects. AI Dungeon, an AI-powered text adventure game released in late 2019, demonstrated huge demand for AI storytelling – it reached over 1.5 million players by mid-2020, all drawn by the promise of an open-ended adventure where the AI would continue the story no matter what the player typed. This early success has spawned a wave of similar AI narrative platforms and features in mainstream games. By 2023, major game studios were investing in AI narrative design: for example, Ubisoft announced an AI tool (“Ghostwriter”) to generate dynamic NPC dialogue, and smaller developers like Operative Games created experiences where AI characters engage the player through natural language. One such experience, called Storyweaver and showcased at TED 2024, involved persistent AI-driven characters that could interact with an audience via phone calls and text messages to spin a personalized mystery story. Metrics from interactive fiction platforms suggest that users engage longer when the story can adapt fluidly – one platform reported that sessions with AI-driven narratives saw over 30% longer playtimes on average compared to static branching stories. Additionally, academic research is exploring this space: a 2023 paper described 1001 Nights, a co-creative storytelling game where an AI Dungeon Master (GPT-4) and the player collaboratively tell stories, and introduced the term “AI-narrative games” for this emerging genre. As of 2025, interactive storytelling AI is no longer only a novelty in tech demos; it has been integrated into popular online role-playing experiences and narrative mobile apps (some AI story apps boast millions of user-generated stories). With companies like Netflix reportedly experimenting with AI to personalize TV show narratives for viewers, dynamically generated storytelling appears poised to become a significant mode of entertainment alongside traditional authored stories.