The development of Multimodal Large Language Models (MLLMs) has advanced significantly, particularly in generating and understanding image-to-text content. However, progress is mainly limited to English because high-quality multimodal resources are scarce in other languages, such as Arabic, which hampers the creation of competitive Arabic models. To address this, the authors present "Dallah," an Arabic multimodal assistant that leverages an advanced language model based on LLaMA-2. Dallah aims to facilitate multimodal interactions and to demonstrate state-of-the-art performance among Arabic MLLMs, handling complex dialectal interactions that integrate both textual and visual elements.

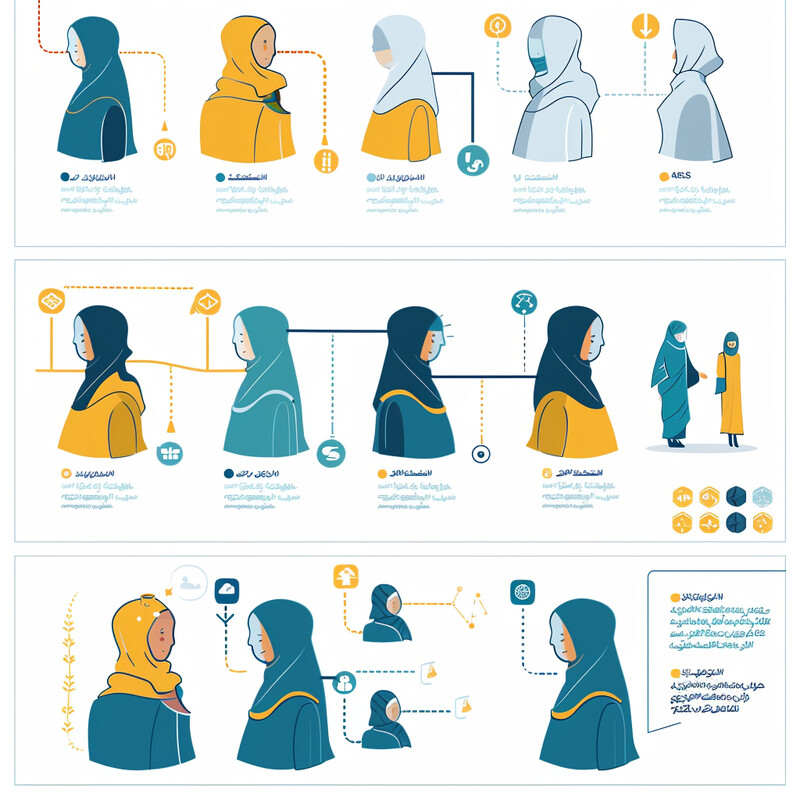

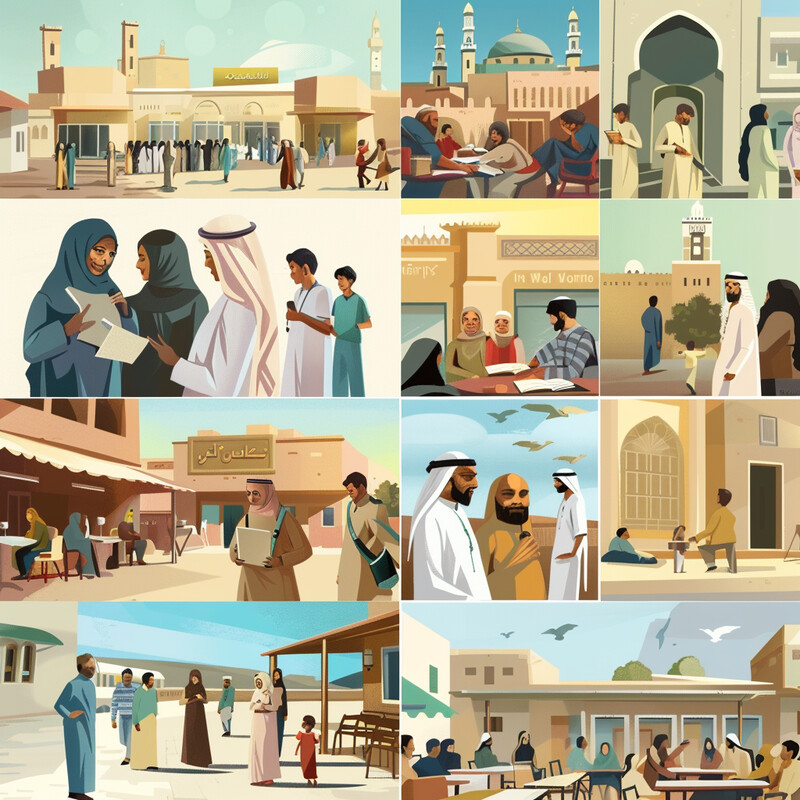

Arabic dialects present complex linguistic variations that standard NLP models, primarily designed for Modern Standard Arabic (MSA), often fail to address. This diversity calls for specialized models that can handle dialectal Arabic and its integration with visual data. Addressing these needs is crucial for enhancing user interaction and preserving linguistic heritage, especially for dialects that are underrepresented or at risk of diminishing. Dallah is designed to meet these needs as a robust multimodal language model tailored to Arabic dialects, with the aim of supporting their continued relevance and preserving linguistic diversity in the Arabic-speaking world.

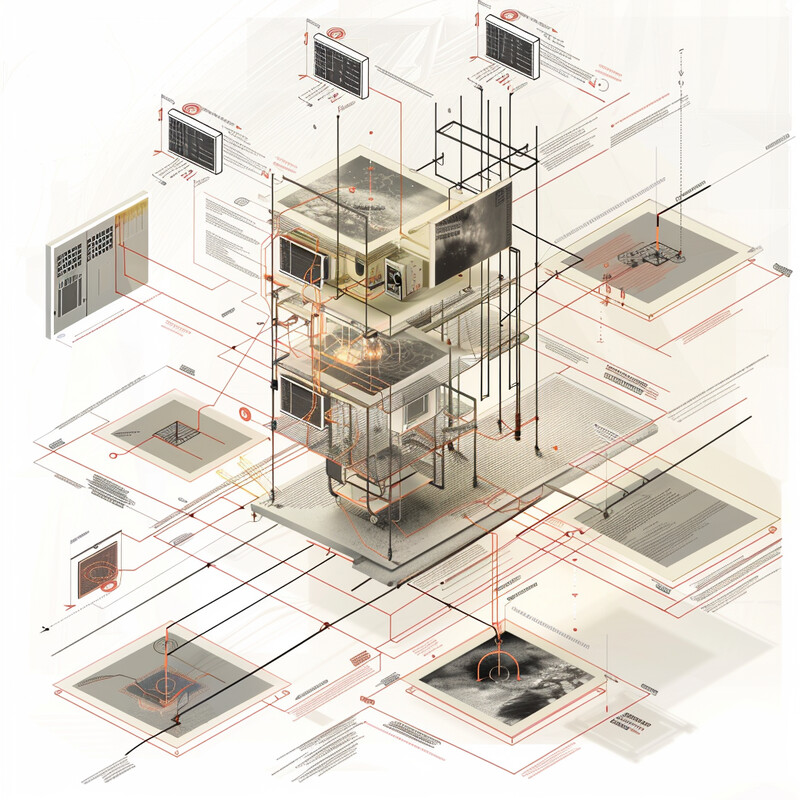

Dallah is built on the LLaVA framework and enhanced with the linguistic capabilities of AraLLaMA, a language model proficient in Arabic and English. The model comprises three key components: a vision encoder (CLIP-Large model), a projector (two-layer multi-layer perceptron), and a language model (AraLLaMA). Training proceeds in three stages: pre-training with LLaVA-Pretrain data, visual instruction fine-tuning with LLaVA-Instruct data, and further fine-tuning using dialectal data from six major Arabic dialects. The model is then evaluated on benchmarks tailored to MSA and dialectal responses.
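The data flow through the three components can be illustrated with a minimal sketch. It is not the authors' implementation: the class `Projector`, the function `build_llm_inputs`, the GELU activation, the toy dimensions, and the choice to place visual tokens before the text tokens are all assumptions made for illustration. The vision encoder and language model are stood in for by random arrays, so only the projector's role (mapping patch features into the language model's embedding space) is shown.

```python
import numpy as np


def gelu(x):
    # Tanh approximation of GELU; the activation choice is an assumption.
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


class Projector:
    """Hypothetical two-layer MLP mapping vision features to LLM embedding size."""

    def __init__(self, vision_dim, llm_dim, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0.0, 0.02, (vision_dim, llm_dim))
        self.b1 = np.zeros(llm_dim)
        self.w2 = rng.normal(0.0, 0.02, (llm_dim, llm_dim))
        self.b2 = np.zeros(llm_dim)

    def __call__(self, patch_features):
        # patch_features: (num_patches, vision_dim)
        hidden = gelu(patch_features @ self.w1 + self.b1)
        return hidden @ self.w2 + self.b2  # (num_patches, llm_dim)


def build_llm_inputs(patch_features, text_embeddings, projector):
    # Project image patches, then prepend them to the text token embeddings
    # so the language model sees one combined sequence.
    visual_tokens = projector(patch_features)
    return np.concatenate([visual_tokens, text_embeddings], axis=0)


# Toy sizes chosen for readability, not the real model dimensions.
VISION_DIM, LLM_DIM = 16, 32
rng = np.random.default_rng(1)
patch_features = rng.normal(size=(9, VISION_DIM))   # stand-in for vision encoder output
text_embeddings = rng.normal(size=(5, LLM_DIM))     # stand-in for embedded prompt tokens

projector = Projector(VISION_DIM, LLM_DIM)
llm_inputs = build_llm_inputs(patch_features, text_embeddings, projector)
print(llm_inputs.shape)  # (14, 32)
```

In this sketch the 9 image patches and 5 text tokens become a single sequence of 14 embeddings, which is what the language model would consume.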

Dallah's performance is evaluated using two benchmarks: LLaVA-Bench for MSA and Dallah-Bench for dialectal interactions. Both model-based and human evaluations are used, comparing Dallah against baseline models such as Peacock and PALO. Results indicate that Dallah outperforms the baselines in most dimensions, demonstrating strong reasoning capabilities and substantial knowledge in both MSA and various dialects. The evaluation highlights Dallah's effectiveness in generating accurate and contextually relevant responses across different dialects and real-world applications.

Dallah represents a significant advancement in Arabic NLP by offering a powerful multimodal language model tailored to Arabic dialects. Its robust performance in handling MSA and dialectal variations showcases its potential for diverse applications, from education to cultural preservation. The study also identifies several limitations, such as the need for more culturally diverse datasets and improved hallucination control. Future work will focus on expanding dialect coverage, refining evaluation metrics, and enhancing the model's capabilities in recognizing Arabic text within images, ensuring its relevance and effectiveness in preserving Arabic linguistic heritage.

Reference: Fakhraddin Alwajih, Gagan Bhatia, Muhammad Abdul-Mageed, "Dallah: A Dialect-Aware Multimodal Large Language Model for Arabic," arXiv:2407.18129v1 [cs.CL], 2024. https://arxiv.org/abs/2407.18129v1